Artificial intelligence (AI) and natural language processing (NLP) have seen significant advances in recent years, particularly in the development and deployment of large language models (LLMs). These models are used for a variety of tasks, such as text generation, question answering, and document summarization. However, while LLMs have demonstrated remarkable capabilities, they run into limitations when processing long input sequences. The fixed context windows inherent to most models limit how much input they can handle at once, which hurts performance on tasks that require retaining complex, widely distributed information. This challenge calls for methods that extend a model's effective context window without sacrificing performance or requiring excessive computational resources.

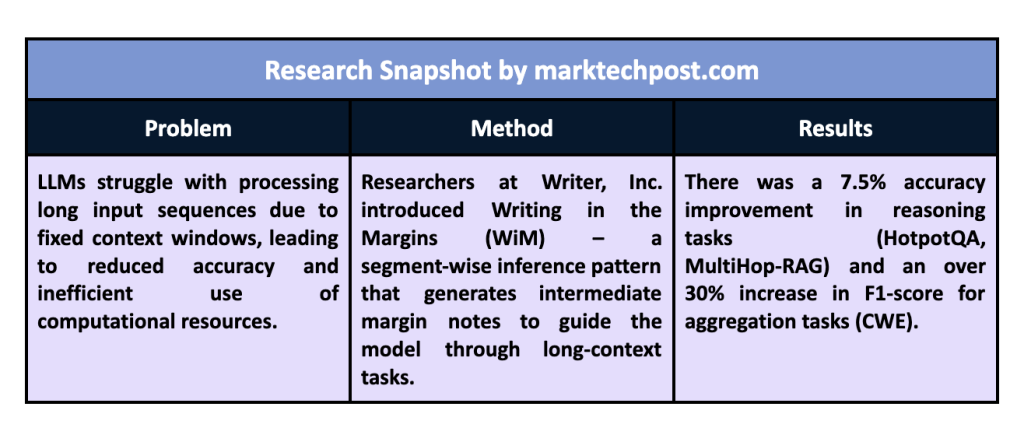

The key issue with LLMs is maintaining accuracy when working with large amounts of input data, especially in retrieval-oriented tasks. As the input size increases, models often struggle to focus on relevant information, leading to performance degradation. The task becomes harder when critical information is buried among irrelevant or less important data. Without a mechanism to guide the model toward the essential parts of the input, significant computational resources are often spent on processing unnecessary sections. Traditional approaches to handling long contexts, such as simply increasing the context window size, are computationally expensive and do not always yield the desired performance improvements.

Several methods have been proposed to address these limitations. One of the most common approaches is sparse attention, which restricts the model's attention to smaller subsets of the input, thereby reducing the computational burden. Other strategies include length extrapolation, which attempts to extend the model's effective input length without dramatically increasing its computational complexity. Techniques such as context compression, which condenses the most important information in a given text, have also been employed. Prompting strategies such as chain of thought (CoT) break down complex tasks into smaller, more manageable steps. These approaches have achieved varying levels of success, but they often come with trade-offs between computational efficiency and model accuracy.
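To make the idea of sparse attention concrete, here is a minimal sketch of one well-known variant, a causal sliding-window mask, in which each position may attend only to itself and a few preceding positions. This is an illustration only: the function names `sliding_window_mask` and `attended_pairs` are assumptions made for this example, and the pattern shown is not taken from the WiM paper or from any particular model.

```python
from typing import List


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """Build a causal sliding-window attention mask.

    mask[i][j] is True when query position i may attend to key position j,
    i.e. when j is not in the future (j <= i) and lies within the last
    `window` positions (i - j < window).
    """
    if window <= 0:
        raise ValueError("window must be positive")
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


def attended_pairs(mask: List[List[bool]]) -> int:
    """Count the (query, key) pairs the mask allows."""
    return sum(sum(row) for row in mask)


if __name__ == "__main__":
    sparse = sliding_window_mask(seq_len=8, window=3)
    dense = sliding_window_mask(seq_len=8, window=8)  # plain causal attention
    print("sparse pairs:", attended_pairs(sparse))
    print("dense pairs:", attended_pairs(dense))
```

With a fixed window, the number of allowed pairs grows linearly with sequence length rather than quadratically, which is where the savings come from. The trade-off is that information outside the window is no longer directly visible to a given position.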

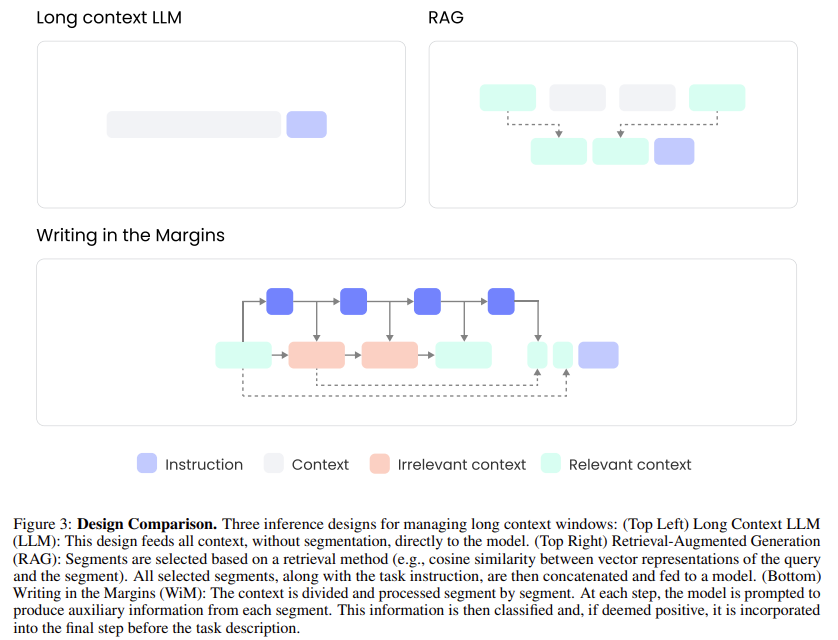

Researchers at Writer, Inc. introduced a new inference pattern called Writing in the Margins (WiM). This method aims to improve the performance of LLMs on tasks that require extensive context retrieval by using a chunk-wise processing technique. Instead of processing the entire input sequence at once, WiM breaks the context into smaller, more manageable chunks. As each chunk is processed, the model generates intermediate margin notes that guide it. These notes help the model identify relevant information and make more informed predictions. With this chunk-wise approach, WiM improves model efficiency and accuracy without requiring any fine-tuning.

The WiM method splits the input into fixed-size chunks during the pre-filling phase. This allows the model’s key-value (KV) cache to be filled incrementally, so the model can process the input more efficiently. Along the way, the process generates margin notes, which are query-based extractive summaries. These notes are then reintegrated when the final output is generated, providing the model with more detailed information to guide its reasoning. This approach minimizes computational overhead while improving the model’s understanding of long contexts. The researchers found that this method improves model performance and increases the transparency of its decision-making process, as end users can see the margin notes and understand how the model arrives at its conclusions.
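The control flow described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the researchers' implementation: there is no real model or KV cache here, the growing `context` list merely stands in for the incrementally filled cache, and the names `write_in_margins`, `keyword_note`, and `join_margins` are hypothetical helpers invented for this example.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical callback signatures (assumptions for this sketch):
# a note function sees the context so far, the newest chunk, and the query.
NoteFn = Callable[[List[str], List[str], str], Optional[str]]
AnswerFn = Callable[[List[str], List[str], str], str]


def split_into_chunks(tokens: List[str], chunk_size: int) -> List[List[str]]:
    """Split tokens into consecutive fixed-size chunks (the last may be shorter)."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [tokens[i:i + chunk_size] for i in range(0, len(tokens), chunk_size)]


def write_in_margins(
    tokens: List[str],
    query: str,
    chunk_size: int,
    make_note: NoteFn,
    answer: AnswerFn,
) -> Tuple[str, List[str]]:
    """Process the input chunk by chunk, collecting margin notes along the way."""
    context: List[str] = []   # stand-in for the incrementally filled KV cache
    margins: List[str] = []   # query-based notes gathered so far
    for chunk in split_into_chunks(tokens, chunk_size):
        context.extend(chunk)                    # "prefill" the next chunk
        note = make_note(context, chunk, query)  # ask for a margin note
        if note is not None:                     # keep only relevant notes
            margins.append(note)
    # The collected notes are handed back when the final answer is produced.
    return answer(context, margins, query), margins


def keyword_note(context: List[str], chunk: List[str], query: str) -> Optional[str]:
    """Toy note function: keep a chunk verbatim if it mentions a query word."""
    keywords = {word.lower() for word in query.split()}
    if any(token.lower() in keywords for token in chunk):
        return " ".join(chunk)
    return None


def join_margins(context: List[str], margins: List[str], query: str) -> str:
    """Toy answer function: concatenate the collected notes."""
    return " | ".join(margins)


if __name__ == "__main__":
    text = "the cat sat on the mat while the dog slept near the door"
    result, notes = write_in_margins(text.split(), "dog", 4, keyword_note, join_margins)
    print(notes)
    print(result)
```

In a real system, `make_note` and `answer` would both be calls to the same LLM, reusing the cached state for everything processed so far. The toy versions exist only to keep the sketch runnable without a model.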

In terms of performance, WiM delivers impressive results on several benchmarks. For reasoning tasks such as HotpotQA and MultiHop-RAG, the WiM method improves the model’s accuracy by an average of 7.5%. More notably, for tasks involving data aggregation, such as the Common Words Extraction (CWE) benchmark, WiM delivers an increase of over 30% in F1 score, demonstrating its effectiveness on tasks that require the model to synthesize information from large datasets. The researchers reported that WiM offers a significant advantage in real-time applications as it reduces the latency of model responses by allowing users to view the progress as the input is processed. This feature enables an early exit from the processing phase if a satisfactory answer is found before the entire input is processed.
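The early-exit behavior mentioned above can be illustrated with a lazy generator: notes are produced one chunk at a time, and the caller stops consuming them as soon as one is good enough, so later chunks are never processed. This is a sketch under assumptions; `stream_margins`, `first_satisfactory`, and the `is_satisfactory` predicate are hypothetical names for this example and do not come from the WiM code.

```python
from typing import Callable, Iterable, Iterator, Optional, Tuple


def stream_margins(
    chunks: Iterable[str],
    make_note: Callable[[str], Optional[str]],
) -> Iterator[Tuple[int, str]]:
    """Lazily yield (chunk_index, note) pairs as each chunk is processed."""
    for index, chunk in enumerate(chunks):
        note = make_note(chunk)
        if note is not None:
            yield index, note


def first_satisfactory(
    chunks: Iterable[str],
    make_note: Callable[[str], Optional[str]],
    is_satisfactory: Callable[[str], bool],
) -> Optional[Tuple[int, str]]:
    """Return the first acceptable note and stop processing further chunks."""
    for index, note in stream_margins(chunks, make_note):
        if is_satisfactory(note):
            return index, note  # early exit: remaining chunks are skipped
    return None


if __name__ == "__main__":
    processed = []

    def toy_note(chunk: str) -> Optional[str]:
        processed.append(chunk)  # record which chunks were actually touched
        return chunk if "answer" in chunk else None

    chunks = ["alpha", "beta answer", "gamma", "delta"]
    print(first_satisfactory(chunks, toy_note, lambda note: True))
    print(processed)
```

Because the generator is lazy, the cost of a query depends on where the relevant information sits in the input rather than on the total input length, at least in the cases where an early note is sufficient.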

The researchers also implemented WiM using the Hugging Face Transformers library, making it accessible to a broader audience of AI developers. By publishing the code as open source, they encourage further experimentation and development of the WiM method. This strategy aligns with the growing trend of making AI tools more transparent and explainable. The ability to see intermediate results, such as margin notes, makes it easier for users to trust the model’s decisions, as they can understand the reasoning behind its output. In practical terms, this can be especially valuable in fields such as legal document analysis or academic research, where transparency of AI decisions is crucial.

In conclusion, Writing in the Margins offers a novel and effective solution to a key challenge for LLMs: handling long contexts without sacrificing performance. By introducing chunk-wise processing and margin note generation, the WiM method increases accuracy and efficiency on long-context tasks. It improves reasoning, as demonstrated by an average 7.5% accuracy increase on multi-hop reasoning tasks, and excels on aggregation tasks, with an increase of over 30% in F1 score on CWE. Furthermore, WiM provides transparency into AI decision-making, making it a valuable tool for applications that require explainable results. The success of WiM suggests that it is a promising direction for future research, particularly as AI continues to be applied to increasingly complex tasks that require processing large data sets.

Take a look at the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary engineer and entrepreneur, Asif is committed to harnessing the potential of AI for social good. His most recent initiative is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has over 2 million monthly views, illustrating its popularity among the public.
