The development of large language models (LLMs) represents an important advance in artificial intelligence. These models underpin many of today's natural language processing tasks and have become indispensable tools for understanding and generating human language. However, their computational and memory demands, especially during inference over long sequences, pose substantial challenges.

The main obstacle to serving LLMs efficiently lies in the self-attention mechanism, whose memory-intensive operations significantly affect performance. The key/value cache that self-attention reads during decoding grows linearly with context length, which raises inference cost and limits system throughput. The trend toward models that process ever-longer sequences makes this problem worse, highlighting the need for optimized solutions.
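To make the linear growth concrete, the short sketch below computes the key/value cache footprint of a single sequence. The model configuration is an illustrative assumption (roughly a 7B-parameter model stored in fp16 with standard multi-head attention), not a figure taken from the paper, and the function name `kv_cache_bytes` is hypothetical.

```python
def kv_cache_bytes(seq_len: int, num_layers: int, num_heads: int,
                   head_dim: int, bytes_per_elem: int = 2) -> int:
    """Bytes needed to cache keys and values for one sequence.

    The leading factor of 2 accounts for storing both K and V
    in every layer, for every head, for every token.
    """
    return 2 * num_layers * num_heads * head_dim * seq_len * bytes_per_elem


# Illustrative configuration (an assumption): 32 layers, 32 heads,
# head dimension 128, fp16 storage (2 bytes per element).
CONFIG = dict(num_layers=32, num_heads=32, head_dim=128, bytes_per_elem=2)

if __name__ == "__main__":
    for n in (1024, 2048, 4096):
        gib = kv_cache_bytes(n, **CONFIG) / 2**30
        print(f"{n:5d} tokens -> {gib:.2f} GiB")
```

With this configuration the cache costs 0.5 MiB per token, so one 4,096-token sequence occupies 2 GiB, and the figure doubles every time the context doubles. Models that use grouped-query attention cache fewer KV heads and need proportionally less memory.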

Previous work on LLM inference efficiency has explored various optimization strategies. However, these solutions often have to trade computational efficiency against memory usage, especially for long sequences. These limitations underscore the need for new approaches to optimizing LLM inference.

The paper presents ChunkAttention, a method developed by a Microsoft team to improve the efficiency of the self-attention mechanism in LLMs. By combining a prefix-aware key/value (KV) cache with a novel two-phase partition algorithm, ChunkAttention improves memory utilization and accelerates self-attention. The approach is particularly effective for applications in which many requests share the same system prompt, a common pattern in LLM deployments.
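The idea behind a two-phase partition can be sketched in a few lines of pure Python for a single decoding step with one query per sequence. This is a minimal illustration, not the paper's kernel: it assumes partial attention results are combined with the standard log-sum-exp (online softmax) rescaling, and the names `partial_attention`, `merge`, and `two_phase_attention` are hypothetical. In a chunk-first phase, every query attends to the shared prefix chunk; in a sequence-first phase, each query continues over its own private chunks and merges the results.

```python
import math
from typing import List, Tuple

Vec = List[float]
Chunk = Tuple[List[Vec], List[Vec]]  # (keys, values)
Partial = Tuple[float, float, Vec]   # (max score, sum of exps, unnormalized output)


def partial_attention(q: Vec, keys: List[Vec], values: List[Vec]) -> Partial:
    """Attention of one query over one chunk, kept in unnormalized form."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    out = [sum(w * v[j] for w, v in zip(weights, values))
           for j in range(len(values[0]))]
    return m, sum(weights), out


def merge(p1: Partial, p2: Partial) -> Partial:
    """Combine two partial results by rescaling both to a common max."""
    m1, s1, o1 = p1
    m2, s2, o2 = p2
    m = max(m1, m2)
    c1, c2 = math.exp(m1 - m), math.exp(m2 - m)
    return m, s1 * c1 + s2 * c2, [a * c1 + b * c2 for a, b in zip(o1, o2)]


def finalize(p: Partial) -> Vec:
    """Normalize the accumulated output by the softmax denominator."""
    _, s, o = p
    return [x / s for x in o]


def two_phase_attention(queries: List[Vec], shared_chunk: Chunk,
                        private_chunks: List[List[Chunk]]) -> List[Vec]:
    """queries[i] and private_chunks[i] belong to sequence i; all sequences
    share shared_chunk as a common prompt prefix."""
    shared_k, shared_v = shared_chunk
    # Phase 1 (chunk-first): every query attends to the shared chunk.
    # A real kernel would do this as one batched matrix product.
    partials = [partial_attention(q, shared_k, shared_v) for q in queries]
    # Phase 2 (sequence-first): each query continues over its own chunks.
    outputs = []
    for q, p, chunks in zip(queries, partials, private_chunks):
        for keys, values in chunks:
            p = merge(p, partial_attention(q, keys, values))
        outputs.append(finalize(p))
    return outputs


def reference_attention(q: Vec, keys: List[Vec], values: List[Vec]) -> Vec:
    """Plain softmax attention over the full key/value list, for comparison."""
    return finalize(partial_attention(q, keys, values))
```

Because the merge step is associative, the shared-chunk work done once in the first phase can be reused by every sequence, and the outputs agree with plain softmax attention over the concatenated keys up to floating-point rounding.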

The core of ChunkAttention is its KV cache management. The method splits key/value tensors into smaller chunks and organizes them in an auxiliary prefix tree. This structure lets requests that share a prompt prefix share the corresponding chunks dynamically at runtime instead of each storing a duplicate copy, which significantly reduces memory waste. In addition, by batching attention computation for sequences with matching prompt prefixes, ChunkAttention improves speed and computational efficiency.
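A prefix tree of fixed-size chunks can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's implementation: `ChunkNode`, `PrefixTreeCache`, and `CHUNK_SIZE` are hypothetical names, the tensors themselves are omitted, and a trailing partial chunk is simply treated as its own node rather than being extended in place. Because cached keys and values depend on every preceding token, a chunk is only reused when the whole path from the root matches, which the tree structure enforces.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

CHUNK_SIZE = 4  # tokens per chunk (illustrative; real systems use larger chunks)


@dataclass
class ChunkNode:
    tokens: Tuple[int, ...]  # token ids covered by this chunk
    children: Dict[Tuple[int, ...], "ChunkNode"] = field(default_factory=dict)
    ref_count: int = 0       # number of live sequences using this chunk
    # A real cache would also hold this chunk's key/value tensors here.


class PrefixTreeCache:
    def __init__(self) -> None:
        self.root = ChunkNode(tokens=())
        self.num_chunks = 0  # chunks currently allocated

    def insert(self, token_ids: List[int]) -> List[ChunkNode]:
        """Walk or extend the tree chunk by chunk; return the chunks used."""
        node, path = self.root, []
        for start in range(0, len(token_ids), CHUNK_SIZE):
            key = tuple(token_ids[start:start + CHUNK_SIZE])
            child = node.children.get(key)
            if child is None:  # no sequence shares this prefix yet: allocate
                child = ChunkNode(tokens=key)
                node.children[key] = child
                self.num_chunks += 1
            child.ref_count += 1
            path.append(child)
            node = child
        return path

    def release(self, path: List[ChunkNode]) -> None:
        """Drop one sequence's references; free chunks nobody still uses."""
        parents = [self.root] + path[:-1]
        # Walk from leaf to root so children are freed before their parents.
        for parent, node in reversed(list(zip(parents, path))):
            node.ref_count -= 1
            if node.ref_count == 0:
                del parent.children[node.tokens]
                self.num_chunks -= 1
```

For example, three requests that share an 8-token system prompt and each add 4 tokens of their own need 9 chunks if every request keeps a private copy, but only 5 in the tree (2 shared, 3 private). Releasing one request frees only its private chunk, since the shared ones are still referenced.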

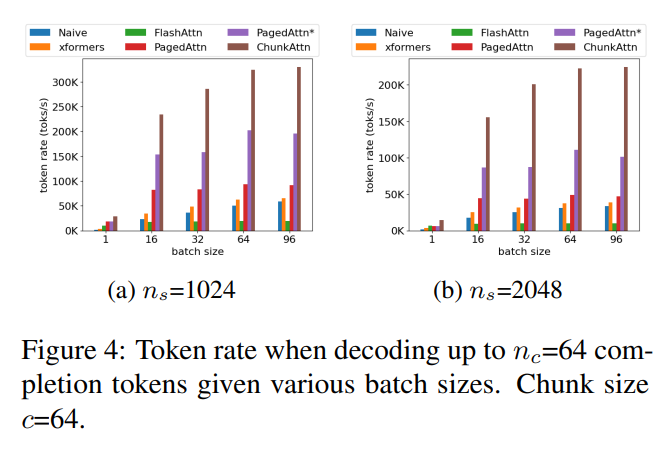

Empirical evaluation shows a substantial improvement in inference speed: for sequences with shared system prompts, ChunkAttention speeds up the self-attention kernel by 3.2 to 4.8 times compared to existing state-of-the-art implementations. These results indicate that the method addresses both memory efficiency and computational speed in LLM inference.

In conclusion, ChunkAttention marks a significant advance in optimizing the inference of large language models. By addressing critical inefficiencies in the self-attention mechanism, the research paves the way for more efficient deployment of LLMs across a wide range of applications. The study highlights the potential of targeted optimization strategies and sets a new benchmark for future research in this field.


Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.
