AI has advanced significantly in coding, math, and reasoning tasks. These advances are largely due to the growing use of large language models (LLMs), which are now central to automating complex problem solving. These models are increasingly applied to highly specialized and structured problems in competitive programming, mathematical proofs, and real-world software engineering. This rapid evolution is transforming how AI is applied across industries and shows its potential to tackle difficult computational tasks that require a model to understand a problem accurately before solving it.

One of the main challenges facing AI models is optimizing their performance during inference, the stage where a model generates solutions from the input it is given. In most cases, an LLM gets only one chance to solve a problem, so it misses opportunities to arrive at a correct solution. This limitation persists despite significant investment in training models on large datasets and improving their reasoning and problem-solving ability. The core problem is the limited compute allocated during inference. Researchers have long known that training larger models leads to improvements, but inference, the process where models apply what they have learned, still lags behind in optimization and efficiency. This bottleneck limits the potential of AI in demanding real-world tasks such as coding competitions and formal verification problems.

Several computational methods have been used to address this gap and improve inference. One popular approach is to increase model size or use techniques such as chain-of-thought prompting, where models generate step-by-step reasoning before delivering their final answers. While these methods improve accuracy, they come at a significant cost. Larger models and more elaborate inference techniques require more computational resources and longer processing times, which is not always practical. And because models are often limited to a single attempt at a problem, they cannot fully explore different solution paths. For example, state-of-the-art models such as GPT-4o and Claude 3.5 Sonnet can produce a high-quality solution on the first attempt, but the high cost of using them limits their scalability.

Researchers from Stanford University, the University of Oxford, and Google DeepMind introduced a solution to these limitations called “repeated sampling.” The approach generates multiple candidate solutions to a problem and uses domain-specific tools, such as unit tests or proof checkers, to select the best answer. Instead of relying on a single output, the model produces a batch of candidates, and a checker is applied to pick out a correct one. This shifts the focus from requiring the most powerful model for a single attempt to maximizing the probability of success across many attempts. Interestingly, the results show that weaker models can be amplified through repeated sampling, often outperforming a single attempt from a stronger model. The researchers apply the method to tasks ranging from competitive coding to formal mathematics, demonstrating its cost-effectiveness and efficiency.
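The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' implementation: `repeated_sampling`, `make_toy_generator`, and `unit_test` are hypothetical names, and the "model" is a toy random guesser standing in for an LLM call.

```python
import random
from typing import Callable, Optional, Tuple


def repeated_sampling(
    generate: Callable[[], str],
    verify: Callable[[str], bool],
    max_samples: int,
) -> Tuple[Optional[str], int]:
    """Draw up to max_samples candidates and return the first one that
    passes the verifier, together with the number of attempts used."""
    for attempt in range(1, max_samples + 1):
        candidate = generate()
        if verify(candidate):
            return candidate, attempt
    return None, max_samples


def make_toy_generator(seed: int) -> Callable[[], str]:
    """Hypothetical stand-in for a model: guesses an expression at random."""
    rng = random.Random(seed)
    candidates = ["a - b", "a * b", "a + b", "a / b"]
    return lambda: rng.choice(candidates)


def unit_test(expr: str) -> bool:
    """Hypothetical verifier: checks the candidate on two test cases."""
    def f(a: int, b: int) -> float:
        return eval(expr, {}, {"a": a, "b": b})
    return f(2, 3) == 5 and f(10, -4) == 6


solution, attempts = repeated_sampling(make_toy_generator(0), unit_test, 100)
print(solution, attempts)
```

The verifier does the selection work here: the generator can be wrong most of the time, as long as a correct candidate eventually appears and the check can recognize it.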

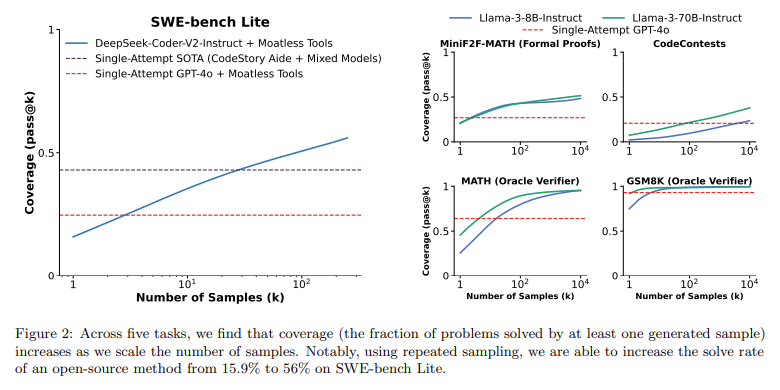

One of the key technical aspects of repeated sampling is the ability to scale the number of solutions generated and then systematically narrow them down to the best ones. The technique works especially well in domains where verification is easy, such as coding, where unit tests can quickly identify whether a solution is correct. For example, the researchers used repeated sampling on the CodeContests dataset, which consists of competitive programming problems that require models to generate correct Python3 programs. Here, they generated up to 10,000 attempts per problem, leading to significant performance improvements. In particular, coverage, the fraction of problems solved by at least one sample, increased substantially as the number of samples grew. With the Gemma-2B model, coverage rose from 0.02% on the first attempt to 7.1% at 10,000 samples. Similar patterns were observed with the Llama-3 models, where coverage kept climbing as the number of attempts increased, showing that weaker models can overtake the single-attempt performance of stronger ones when given enough opportunities.
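Coverage at k samples is usually reported as pass@k. The sketch below uses the widely used unbiased pass@k estimator, which computes the chance that at least one of k samples is correct given that c of n drawn samples passed. That the study computed coverage exactly this way is an assumption, and the function names are illustrative.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate P(at least one of k samples is correct), given that
    c out of n independently drawn samples were verified correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k incorrect samples: any k-subset contains a correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def coverage(results: List[Tuple[int, int]], k: int) -> float:
    """Mean pass@k over problems; each entry is (n_samples, n_correct)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical per-problem tallies: (samples drawn, samples that passed).
tallies = [(100, 0), (100, 1), (100, 20)]
for k in (1, 10, 100):
    print(k, round(coverage(tallies, k), 4))
```

A problem with a single correct sample out of 100 contributes almost nothing at k = 1 but counts as fully solved at k = 100, which is why coverage keeps rising with the sample budget.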

The performance benefits of repeated sampling were especially noticeable on the SWE-bench Lite dataset, which consists of real-world GitHub issues where models must modify codebases and verify their solutions with automated unit tests. With 250 attempts per issue, DeepSeek-V2-Coder-Instruct solved 56% of the issues, outperforming the state-of-the-art single-attempt result of 43% achieved with more powerful models like GPT-4o and Claude 3.5 Sonnet. This improvement shows the benefit of drawing multiple samples rather than relying on a single, costly attempt. In practical terms, sampling five times from the cheaper DeepSeek model was more cost-effective than taking a single sample from premium models like GPT-4o or Claude 3.5 Sonnet, while also solving more problems.

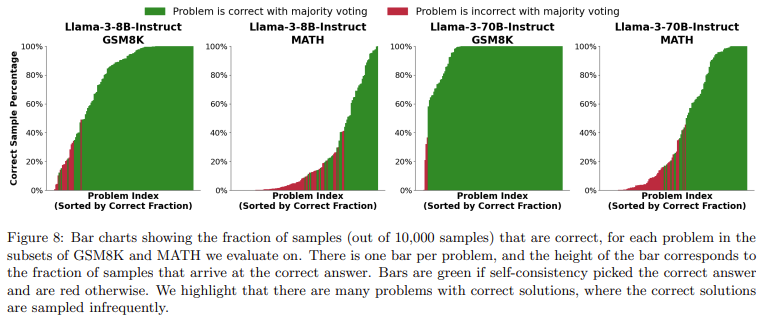

Beyond coding and formal proof problems, repeated sampling also showed promise for solving mathematical word problems. In these settings, where automatic checkers such as proof checkers or unit tests are not available, the researchers observed a gap between coverage and the ability to choose the correct solution from a set of generated samples. On tasks such as the MATH dataset, Llama-3 models achieved 95.3% coverage with 10,000 samples. However, common methods for selecting the correct solution, such as majority voting or reward models, plateaued beyond a few hundred samples and failed to scale with the full sampling budget. These results indicate that while repeated sampling can surface a correct solution for most problems, identifying it remains a challenge in domains where solutions cannot be automatically verified.
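The gap between coverage and selection is easy to see with majority voting. In the sketch below, the sampled answers are made-up illustrative data and the helper names are hypothetical: the correct answer is present among the samples, so the problem counts as covered, yet the vote picks a more frequent wrong answer.

```python
from collections import Counter
from typing import List, Optional


def majority_vote(answers: List[str]) -> Optional[str]:
    """Return the most frequent final answer, or None if there are no samples."""
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


def is_covered(answers: List[str], correct: str) -> bool:
    """A problem is covered if any sample matches the correct answer."""
    return correct in answers


# Made-up samples for one word problem whose correct answer is "15".
sampled_answers = ["12", "12", "12", "15", "12", "9"]
correct_answer = "15"

print(is_covered(sampled_answers, correct_answer))      # covered by one sample
print(majority_vote(sampled_answers) == correct_answer)  # but the vote misses it
```

When the correct answer is rare among the samples, drawing more samples raises coverage without necessarily changing which answer wins the vote.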

The researchers found that the relationship between coverage and sample count followed a log-linear trend in most cases. This behavior was modeled using an exponentiated power law, providing insight into how coverage scales with inference compute. In simpler terms, as models generate more attempts, the probability of solving the problem increases in a predictable way. This pattern held across multiple model families, including Llama-3, Gemma, and Pythia, ranging from 70M to 70B parameters. Coverage increased steadily with sample count, even in smaller models such as Pythia-160M, where coverage improved from 0.27% with one attempt to 57% with 10,000 samples. The repeated sampling method proved adaptable to various tasks and model sizes, reinforcing its versatility in improving AI performance.
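One way to read "exponentiated power law" is that coverage c at k samples follows c ≈ exp(a · k^b) with a < 0 and b < 0, so log-coverage is a power law in k. The sketch below assumes that form and fits it by ordinary least squares on log(-log c) against log k. The fitting procedure and the parameter values are illustrative assumptions, not the study's procedure or results.

```python
import math
from typing import List, Tuple


def predicted_coverage(k: float, a: float, b: float) -> float:
    """Coverage under the assumed form c = exp(a * k**b)."""
    return math.exp(a * k ** b)


def fit_exponentiated_power_law(
    ks: List[float], coverages: List[float]
) -> Tuple[float, float]:
    """Fit (a, b) using log(-log c) = log(-a) + b * log k.
    Requires every coverage value to lie strictly between 0 and 1."""
    xs = [math.log(k) for k in ks]
    ys = [math.log(-math.log(c)) for c in coverages]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = -math.exp(mean_y - b * mean_x)
    return a, b


# Synthetic data generated from hypothetical parameters a = -3.0, b = -0.4.
ks = [1, 10, 100, 1000, 10000]
synthetic = [predicted_coverage(k, -3.0, -0.4) for k in ks]
a_hat, b_hat = fit_exponentiated_power_law(ks, synthetic)
print(a_hat, b_hat)
```

With a fit like this, coverage at a larger sample budget can be extrapolated from a handful of cheaper measurements, subject to how well the assumed form holds for the model and task in question.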

In conclusion, the researchers found that repeated sampling improves problem coverage and offers a cost-effective alternative to using more expensive and powerful models. Their experiments showed that amplifying a weaker model through repeated sampling could often yield better results than a single attempt from a more capable model. For example, using the DeepSeek model with multiple samples reduced overall cost while solving more problems than single attempts from models such as GPT-4o. While repeated sampling is especially effective on tasks where verifiers can automatically identify correct solutions, it also highlights the need for better verification methods in domains without such tools.

Take a look at the Paper, Dataset, and Project. All credit for this research goes to the researchers of this project.

Nikhil is a Consultant Intern at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI and machine learning enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advancements and creating opportunities to contribute.
