In the rapidly evolving field of text-to-3D generative methods, creating comprehensive and reliable evaluation metrics is a central challenge. Previous approaches have relied on specific criteria, such as how well a generated 3D object aligns with its textual description. However, these methods often lack versatility and do not align well with human judgment. The need for a more adaptable and comprehensive evaluation system is evident, especially in a field where the complexity and creativity of results are continually increasing.

A team of researchers from the Chinese University of Hong Kong, Stanford University, Adobe Research, S-Lab at Nanyang Technological University, and Shanghai Artificial Intelligence Laboratory has developed an evaluation metric to address this challenge. It uses GPT-4V, a variant of GPT-4 (Generative Pre-trained Transformer 4) with vision capabilities. The metric takes a two-pronged approach:

- First, generate several input prompts that accurately reflect various evaluation needs.

- Second, evaluate the 3D models against these prompts using GPT-4V.

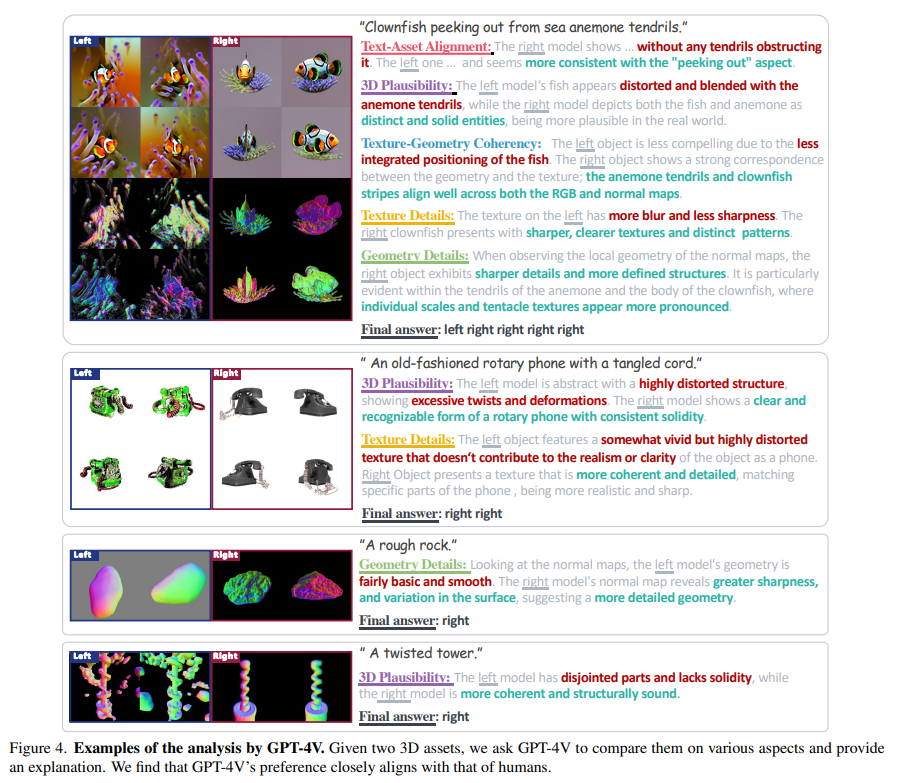

This approach provides a multifaceted evaluation that considers aspects such as text-asset alignment, 3D plausibility, and texture details, offering a more comprehensive evaluation than previous methods.

The core of this new methodology lies in its prompt generation and comparative analysis. The prompt generator, powered by GPT-4V, creates varied evaluation prompts so that a wide range of user demands is covered. GPT-4V then compares pairs of 3D shapes generated from these prompts. The comparison is based on several user-defined criteria, making the evaluation process flexible and comprehensive. This technique enables a scalable and holistic way to evaluate text-to-3D models, overcoming the limitations of existing metrics.
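The pairwise comparison step can be pictured with a small sketch. This is only an illustration under stated assumptions, not the researchers' implementation: `tally_wins`, `toy_judge`, the model names, and the asset identifiers are all hypothetical, and the judge is a deterministic stand-in for what would in practice be a GPT-4V call on rendered views of the two shapes.

```python
from itertools import combinations
from typing import Callable, Dict, List

# Two of the criteria named in the article; any user-defined list would work.
CRITERIA = ["3D plausibility", "texture details"]

# A judge receives (prompt, criterion, asset_a, asset_b) and returns "a" or "b".
Judge = Callable[[str, str, str, str], str]


def tally_wins(
    prompts: List[str],
    assets: Dict[str, Dict[str, str]],  # model name -> {prompt -> asset id}
    judge: Judge,
    criteria: List[str] = CRITERIA,
) -> Dict[str, Dict[str, int]]:
    """Count, per criterion, how many pairwise comparisons each model wins."""
    wins = {c: {model: 0 for model in assets} for c in criteria}
    for prompt in prompts:
        # Compare every unordered pair of models on the same prompt.
        for m1, m2 in combinations(sorted(assets), 2):
            for c in criteria:
                verdict = judge(prompt, c, assets[m1][prompt], assets[m2][prompt])
                wins[c][m1 if verdict == "a" else m2] += 1
    return wins


def toy_judge(prompt: str, criterion: str, asset_a: str, asset_b: str) -> str:
    # Hypothetical stand-in for the GPT-4V comparison: it simply prefers the
    # asset with the longer identifier so the example stays deterministic.
    return "a" if len(asset_a) >= len(asset_b) else "b"


if __name__ == "__main__":
    prompts = ["a red chair", "a wooden boat"]
    assets = {
        "model_x": {p: "x_detailed_mesh" for p in prompts},
        "model_y": {p: "y_mesh" for p in prompts},
    }
    print(tally_wins(prompts, assets, toy_judge))
```

Keeping the judge behind a plain function signature means the same tallying loop works whether the verdict comes from a vision-language model, a human annotator, or a test stub.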

This new metric aligns strongly with human preferences across multiple evaluation criteria. It offers a comprehensive view of each model's capabilities, particularly in texture sharpness and shape plausibility. The metric's adaptability shows in its consistent performance across different criteria, a significant improvement over previous metrics, which typically excelled in only one or two areas. This demonstrates the metric's ability to provide a balanced and nuanced evaluation of generative text-to-3D models.
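One simple way to picture "alignment with human preferences" is the share of pairwise comparisons on which an automatic metric and a human annotator pick the same winner. The sketch below assumes that framing; the article does not state which agreement statistic the researchers report, and `pairwise_agreement` and the sample verdicts are hypothetical.

```python
from typing import Sequence


def pairwise_agreement(
    metric_choices: Sequence[str], human_choices: Sequence[str]
) -> float:
    """Fraction of comparisons where the metric and the human pick the same winner."""
    if len(metric_choices) != len(human_choices):
        raise ValueError("both sequences must cover the same comparisons")
    if not metric_choices:
        raise ValueError("at least one comparison is required")
    matches = sum(m == h for m, h in zip(metric_choices, human_choices))
    return matches / len(metric_choices)


if __name__ == "__main__":
    # Made-up verdicts for four comparisons under a single criterion.
    metric = ["a", "b", "a", "a"]
    human = ["a", "b", "b", "a"]
    print(pairwise_agreement(metric, human))  # 0.75
```

Computing this number separately for each criterion would show whether a metric agrees with people across the board or only in one or two areas.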

The highlights of the research can be summarized in the following points:

- This research marks a significant advance in the evaluation of text-to-3D generative models.

- A key development is the introduction of a versatile, human-aligned evaluation metric using GPT-4V.

- The new tool excels on multiple criteria and offers a comprehensive evaluation that closely aligns with human judgment.

- This innovation paves the way for more accurate and efficient model evaluations in text-to-3D generation.

- The approach sets a new standard in the field, guiding future advances and research directions.

Review the Paper and GitHub. All credit for this research goes to the researchers of this project.


Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.
