amazon Bedrock is a fully managed service that offers a selection of high-performance foundation models (FM) from leading ai companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral ai, Stability ai and amazon through a single API, along with a broad set of capabilities you need to build generative ai applications with security, privacy, and responsible ai.

Batch inference in amazon Bedrock efficiently processes large volumes of data using basic models (FM) when real-time results are not necessary. It is ideal for workloads that are not latency sensitive, such as fetching embeddings, entity extraction, FM judging evaluations, and text categorization and summarization for business reporting tasks. A key advantage is its cost-effectiveness, as batch inference workloads are charged at a 50% discount compared to on-demand pricing. See Supported Regions and Models for Batch Inference for currently supported AWS regions and models.

Although batch inference offers many benefits, it is limited to 10 batch inference jobs submitted per model and region. To address this consideration and improve the use of batch inference, we have developed a scalable solution using AWS Lambda and amazon DynamoDB. This post guides you through implementing a queue management system that automatically monitors available workspaces and submits new jobs as spaces become available.

We walk you through our solution and detail the core logic of Lambda functions. By the end, you will understand how to implement this solution so you can maximize the efficiency of your batch inference workflows in amazon Bedrock. For instructions on how to start your amazon Bedrock batch inference job, see Improve call center efficiency using batch inference for transcript summarization with amazon Bedrock.

The power of batch inference

Organizations can use batch inference to process large volumes of data asynchronously, making it ideal for scenarios where real-time results are not critical. This capability is particularly useful for tasks such as asynchronous embedding generation, large-scale text classification, and bulk content analysis. For example, companies can use batch inference to generate embeds of large collections of documents, classify large data sets, or analyze substantial amounts of user-generated content efficiently.

One of the key advantages of batch inference is its cost-effectiveness. amazon Bedrock offers select FMs for batch inference at 50% of the on-demand inference price. Organizations can process large data sets more economically due to this significant cost reduction, making it an attractive option for businesses looking to optimize their generative ai processing spend while maintaining the ability to handle substantial volumes of data.

Solution Overview

The solution presented in this post uses batch inference in amazon Bedrock to process many requests efficiently using the following solution architecture.

This architecture workflow includes the following steps:

- A user uploads files for processing to an amazon Simple Storage Service (amazon S3) bucket

br-batch-inference-{Account_Id}-{AWS-Region}in it to process file. amazon S3 invokes the{stack_name}-create-batch-queue-{AWS-Region}Lambda function. - The invoked Lambda function creates new job entries in a DynamoDB table with the status Pending. The DynamoDB table is crucial for tracking and managing batch inference jobs throughout their lifecycle. Stores information such as job ID, status, creation time, and other metadata.

- The amazon EventBridge rule scheduled to run every 15 minutes invokes the

{stack_name}-process-batch-jobs-{AWS-Region}Lambda function. - He

{stack_name}-process-batch-jobs-{AWS-Region}The Lambda function performs several key tasks:- Scans the DynamoDB table for jobs in

InProgress,Submitted,ValidationandScheduledstate - Updates the job status in DynamoDB based on the latest information from amazon Bedrock

- Calculate available jobs and submit new jobs from the

Pendingqueue if spaces are available - Handles error scenarios by updating job status to

Failedand log error details for troubleshooting

- Scans the DynamoDB table for jobs in

- The Lambda function makes the GetModelInvocationJob API call to get the latest status of amazon Bedrock batch inference jobs.

- The Lambda function then updates the status of the jobs in DynamoDB by calling the UpdateItem API, ensuring that the table always reflects the most current status of each job.

- The Lambda function calculates the number of available slots before the service quota limit is reached for batch inference jobs. Based on this, check jobs in Pending status that can be submitted.

- If a slot is available, the Lambda function will make calls to the CreateModelInvocationJob API to create new batch inference jobs for the pending jobs.

- Updates the DynamoDB table with the status of the batch inference jobs created in the previous step.

- After a batch job completes, its output files will be available in the S3 bucket.

br-batch-inference-{Account_Id}-{AWS-Region}processed folder

Prerequisites

To perform the solution, you need the following prerequisites:

Implementation guide

To deploy the pipeline, complete the following steps:

- Choose the launch stack button:

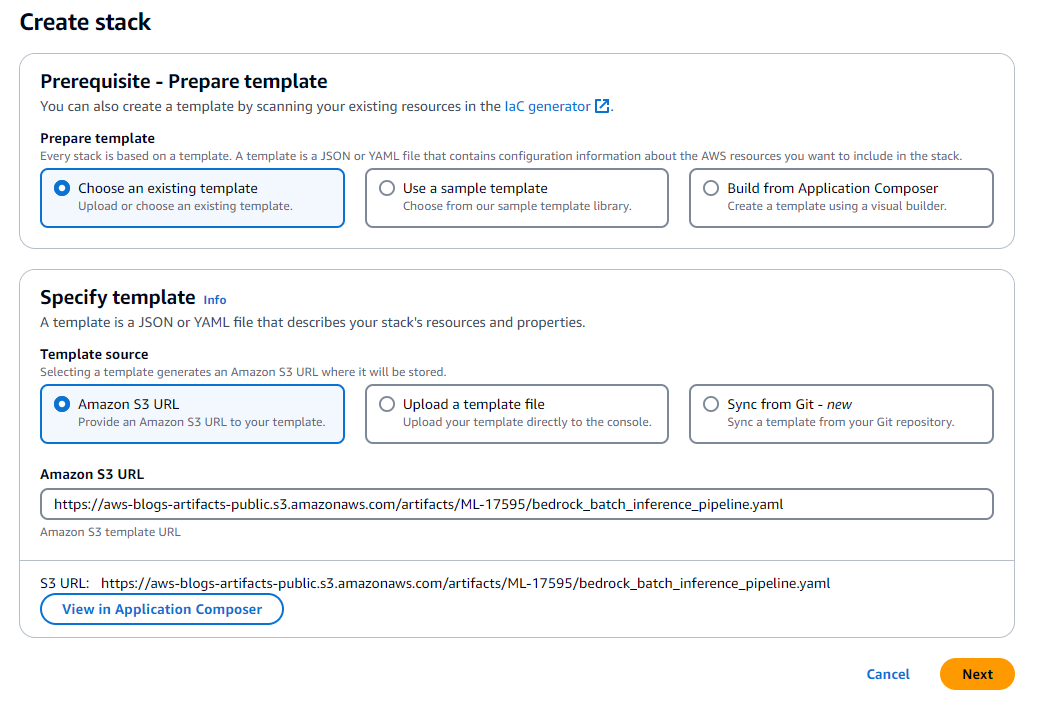

- Choose Nextas shown in the following screenshot

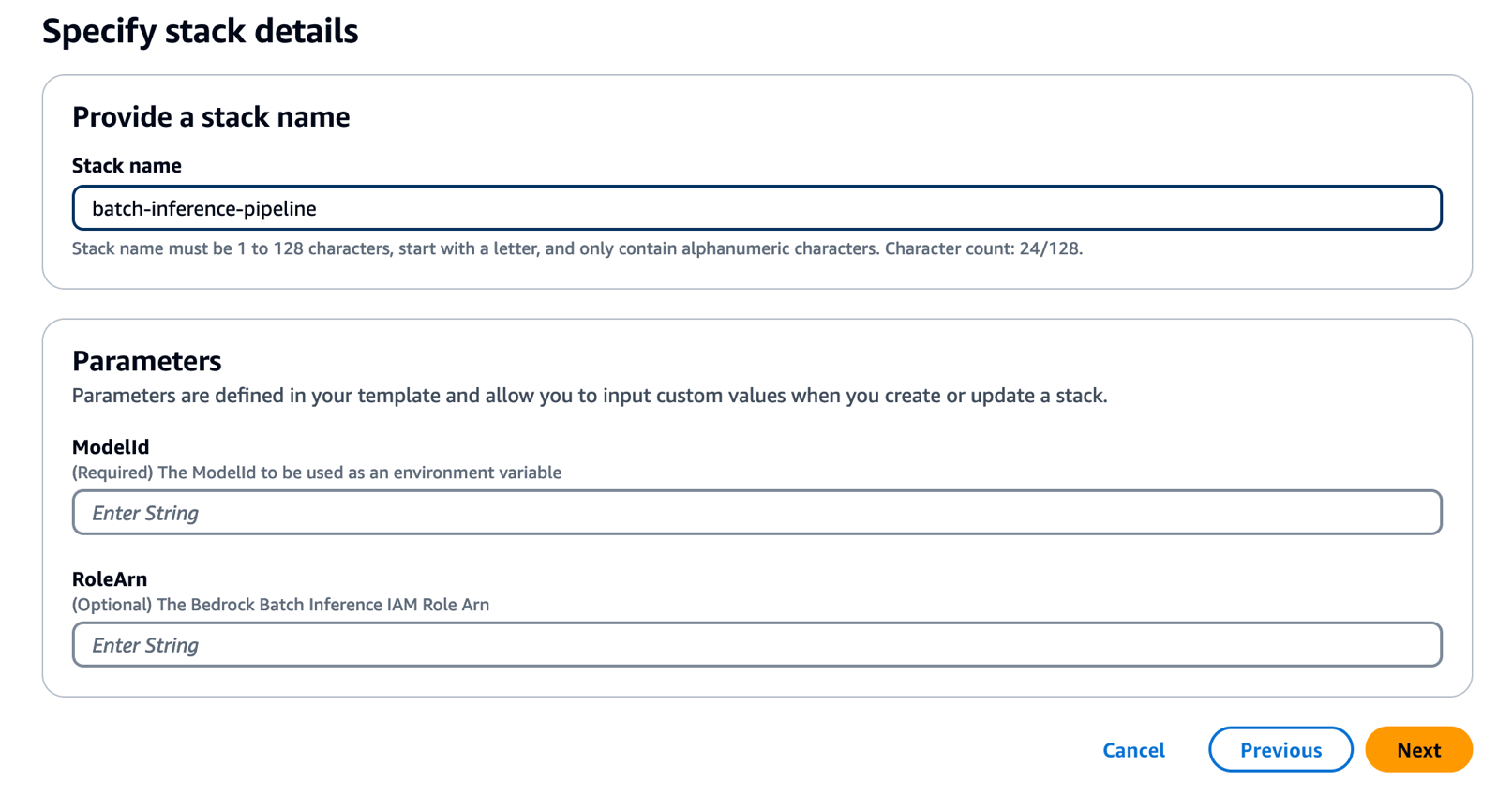

- Specify the details of the pipeline with options that fit your use case:

- First name (required) – The name that you specified for this AWS CloudFormation. The name must be unique in the region you are creating it in.

- Model ID (required) – Provide the ID of the model with which you need to run the batch job.

- RoleArn (optional) – By default, the CloudFormation stack will deploy a new IAM role with the necessary permissions. If you have a role that you want to use instead of creating a new role, provide the amazon Resource Name (ARN) of the IAM role that has sufficient permission to create a batch inference job in amazon Bedrock and read/write to the S3 bucket created.

br-batch-inference-{Account_Id}-{AWS-Region}. Follow the instructions in the prerequisites section to create this role.

- In the amazon configuration stack options section, add optional tags, permissions, and other advanced settings if necessary. Or you can just leave it blank and choose Nextas shown in the following screenshot.

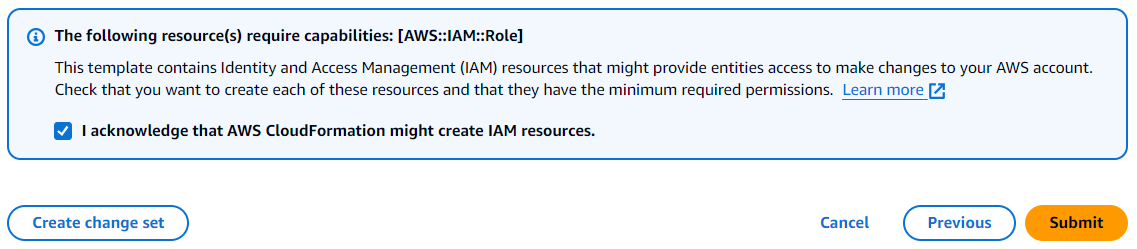

- Review the stack details and select I recognize that AWS CloudFormation could create AWS IAM resourcesas shown in the following screenshot.

- Choose Deliver. This starts the pipeline deployment to your AWS account.

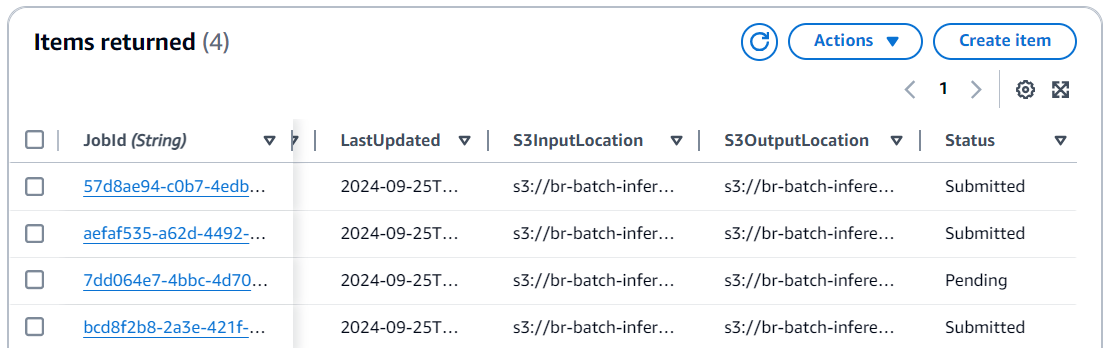

- Once the stack is successfully implemented, you can start using the pipeline. First, create a /to-process folder in the created amazon S3 location for the entry. A .jsonl uploaded to this folder will have a batch job created with the selected model. The following is a screenshot of the DynamoDB table where you can track job status and other types of job-related metadata.

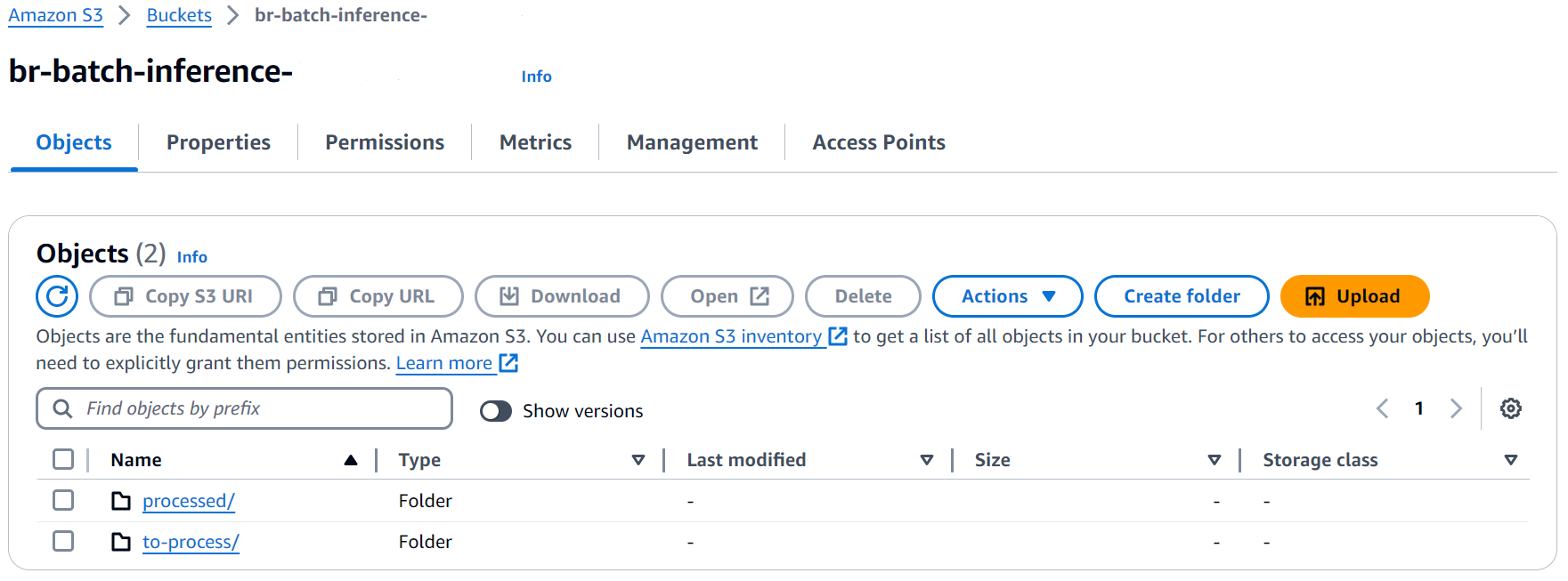

- After the first batch job in the pipeline completes, the pipeline will create a /processed folder in the same bucket, as shown in the following screenshot. The results of batch jobs created by this pipeline will be stored in this folder.

- To start using this channel, load the

.jsonlfiles that you have prepared for batch inference in amazon Bedrock

You're done! You have successfully deployed your pipeline and can check the status of the batch job in the amazon Bedrock console. If you want to have more insights about each one .jsonl file status, navigate to the created DynamoDB table {StackName}-DynamoDBTable-{UniqueString} and check the status there. You may need to wait up to 15 minutes to observe the created batch jobs because EventBridge is scheduled to scan DynamoDB every 15 minutes.

Clean

If you no longer need this automated pipeline, follow these steps to delete the resources you created and avoid additional costs:

- In the amazon S3 console, manually delete the content within the buckets. Make sure the bucket is empty before proceeding to step 2.

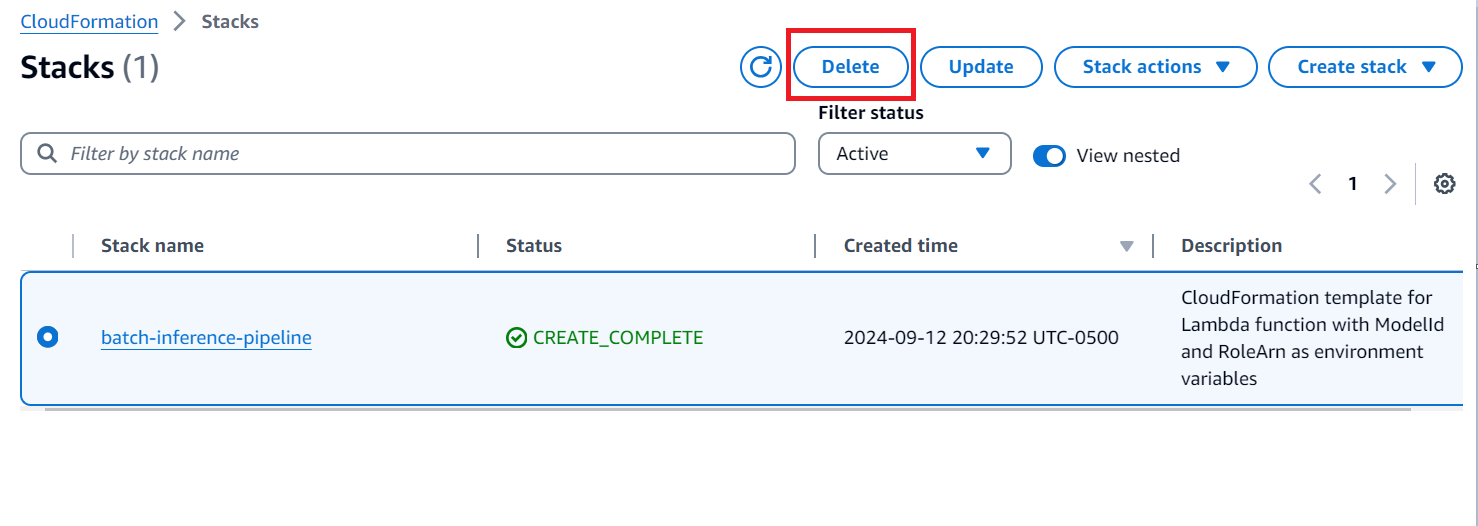

- In the AWS CloudFormation console, choose batteries in the navigation panel.

- Select the created stack and choose Deleteas shown in the following screenshot.

This automatically removes the implemented stack.

Conclusion

In this post, we present a scalable and efficient solution to automate batch inference jobs in amazon Bedrock. Using AWS Lambda, amazon DynamoDB, and amazon EventBridge, we have addressed key challenges in managing large-scale batch processing workflows.

This solution offers several important benefits:

- Automated queue management – Maximize performance through dynamic management of spaces and job submissions

- Cost optimization – Uses 50% discount on batch inference pricing for economical large-scale processing

This automated pipeline significantly improves your ability to process large amounts of data using batch inference for amazon Bedrock. Whether you're generating embeddings, classifying text, or analyzing content en masse, this solution offers a scalable, efficient, and cost-effective approach to batch inference.

As you implement this solution, remember to periodically review and optimize your configuration based on your specific workload patterns and requirements. With this automated pipeline and the power of amazon Bedrock, you are well equipped to tackle large-scale ai inference tasks efficiently and effectively. We encourage you to try it out and share your feedback to help us continually improve this solution.

For additional resources, see the following:

About the authors

Yanyan Zhang is a Senior Generative ai Data Scientist at amazon Web Services, where she has been working on cutting-edge ai/ML technologies as a Generative ai Specialist, helping clients use generative ai to achieve desired outcomes. Yanyan graduated from Texas A&M University with a PhD in Electrical Engineering. Outside of work, she loves to travel, exercise, and explore new things.

Yanyan Zhang is a Senior Generative ai Data Scientist at amazon Web Services, where she has been working on cutting-edge ai/ML technologies as a Generative ai Specialist, helping clients use generative ai to achieve desired outcomes. Yanyan graduated from Texas A&M University with a PhD in Electrical Engineering. Outside of work, she loves to travel, exercise, and explore new things.

Ishan Singh is a Generative ai Data Scientist at amazon Web Services, where he helps clients build innovative and responsible generative ai solutions and products. With a strong background in ai/ML, Ishan specializes in creating generative ai solutions that drive business value. Outside of work, he enjoys playing volleyball, exploring local bike trails, and spending time with his wife and dog, Beau.

Ishan Singh is a Generative ai Data Scientist at amazon Web Services, where he helps clients build innovative and responsible generative ai solutions and products. With a strong background in ai/ML, Ishan specializes in creating generative ai solutions that drive business value. Outside of work, he enjoys playing volleyball, exploring local bike trails, and spending time with his wife and dog, Beau.

Neeraj Lamba is a cloud infrastructure architect with global public sector professional services for amazon Web Services (AWS). Helps clients transform their businesses by helping them design their cloud solutions and providing technical guidance. Outside of work he likes to travel, play tennis and experiment with new technologies.

Neeraj Lamba is a cloud infrastructure architect with global public sector professional services for amazon Web Services (AWS). Helps clients transform their businesses by helping them design their cloud solutions and providing technical guidance. Outside of work he likes to travel, play tennis and experiment with new technologies.