As demand for generative AI continues to grow, developers and enterprises are looking for more flexible, cost-effective, and powerful accelerators to meet their needs. Today, we are excited to announce the availability of G6e instances powered by NVIDIA L40S Tensor Core GPUs on Amazon SageMaker. You can provision nodes with 1, 4, or 8 L40S GPUs, each providing 48 GB of GPU memory. With this release, organizations can use a single-GPU instance (G6e.xlarge) to host powerful open source foundation models such as Llama 3.2 11B Vision, Llama 2 13B, and Qwen 2.5 14B. This makes G6e a cost-effective, high-performance option for organizations that want to optimize costs without sacrificing inference performance.

Highlights of G6e instances include:

- Double the GPU memory of G5 and G6 instances, enabling deployment of large language models in FP16 of up to:

  - 14B parameters on a single-GPU node (G6e.xlarge)
  - 72B parameters on a 4-GPU node (G6e.12xlarge)
  - 90B parameters on an 8-GPU node (G6e.48xlarge)

- Up to 400 Gbps network performance

- Up to 384 GB GPU memory
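The model-size limits above follow largely from weight memory: in FP16 each parameter takes 2 bytes, so a model with P billion parameters needs roughly 2P GB for its weights alone. The sketch below checks that arithmetic against the total GPU memory of each G6e size mentioned in this post. The helper names and the `headroom` fraction reserved for the KV cache, activations, and runtime overhead are assumptions for illustration, not figures from this post.

```python
# GPU count per G6e instance size; each L40S GPU has 48 GB of memory.
G6E_GPUS = {
    "ml.g6e.xlarge": 1,
    "ml.g6e.2xlarge": 1,
    "ml.g6e.12xlarge": 4,
    "ml.g6e.48xlarge": 8,
}
GB_PER_GPU = 48
BYTES_PER_PARAM_FP16 = 2


def fp16_weight_gb(params_billion: float) -> float:
    """Approximate FP16 weight footprint in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM_FP16


def fits_in_fp16(params_billion: float, instance_type: str, headroom: float = 0.2) -> bool:
    """Return True if the FP16 weights fit in total GPU memory after reserving
    `headroom` (an assumed fraction) for KV cache, activations, and overhead."""
    total_gb = G6E_GPUS[instance_type] * GB_PER_GPU
    return fp16_weight_gb(params_billion) <= total_gb * (1 - headroom)


for params, instance in [(14, "ml.g6e.xlarge"), (72, "ml.g6e.12xlarge"), (90, "ml.g6e.48xlarge")]:
    print(f"{params}B on {instance}: {fp16_weight_gb(params):.0f} GB of weights, "
          f"fits={fits_in_fp16(params, instance)}")
```

This counts weights only, so it is a lower bound on memory use. The practical ceiling also depends on context length, concurrency, and the serving container.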

Use cases

G6e instances are well suited to fine-tuning and deploying open large language models (LLMs). Our benchmarks show that G6e delivers higher performance and better cost-effectiveness than G5 instances, making it a strong choice for real-time, low-latency use cases such as:

- Chatbots and conversational AI

- Text generation and summarization

- Image generation and vision models

We have also found that G6e works well for inference with high concurrency and longer contexts. We have provided complete benchmarks in the following section.
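One way to see why high concurrency and long contexts benefit from extra GPU memory is to estimate the key-value (KV) cache a transformer keeps per token: 2 (keys and values) × layers × KV heads × head dimension × bytes per element. The sketch below applies that formula using the Llama 3.1 8B architecture (32 layers, 8 KV heads with grouped-query attention, head dimension 128) with an FP16 cache. The batch sizes and context lengths are illustrative choices, not the benchmark settings used in this post. Serving engines that use paged attention allocate the cache differently, so treat the result as an approximation.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int, bytes_per_elem: int = 2) -> int:
    """Approximate KV-cache size in bytes for `batch_size` sequences of `seq_len` tokens.

    The leading factor of 2 accounts for storing both keys and values.
    """
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem
    return per_token * seq_len * batch_size


# Llama 3.1 8B architecture (grouped-query attention), FP16 cache.
LLAMA31_8B = dict(num_layers=32, num_kv_heads=8, head_dim=128)

for seq_len, batch in [(512, 1), (1024, 1), (1024, 32)]:
    gib = kv_cache_bytes(**LLAMA31_8B, seq_len=seq_len, batch_size=batch) / 2**30
    print(f"context={seq_len:5d}, concurrent requests={batch:3d}: {gib:.2f} GiB")
```

With 32 concurrent 1,024-token requests, the cache alone reaches 4 GiB, on top of roughly 16 GB of FP16 weights. A 24 GB GPU leaves little room for that, while a 48 GB L40S leaves considerably more.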

Performance

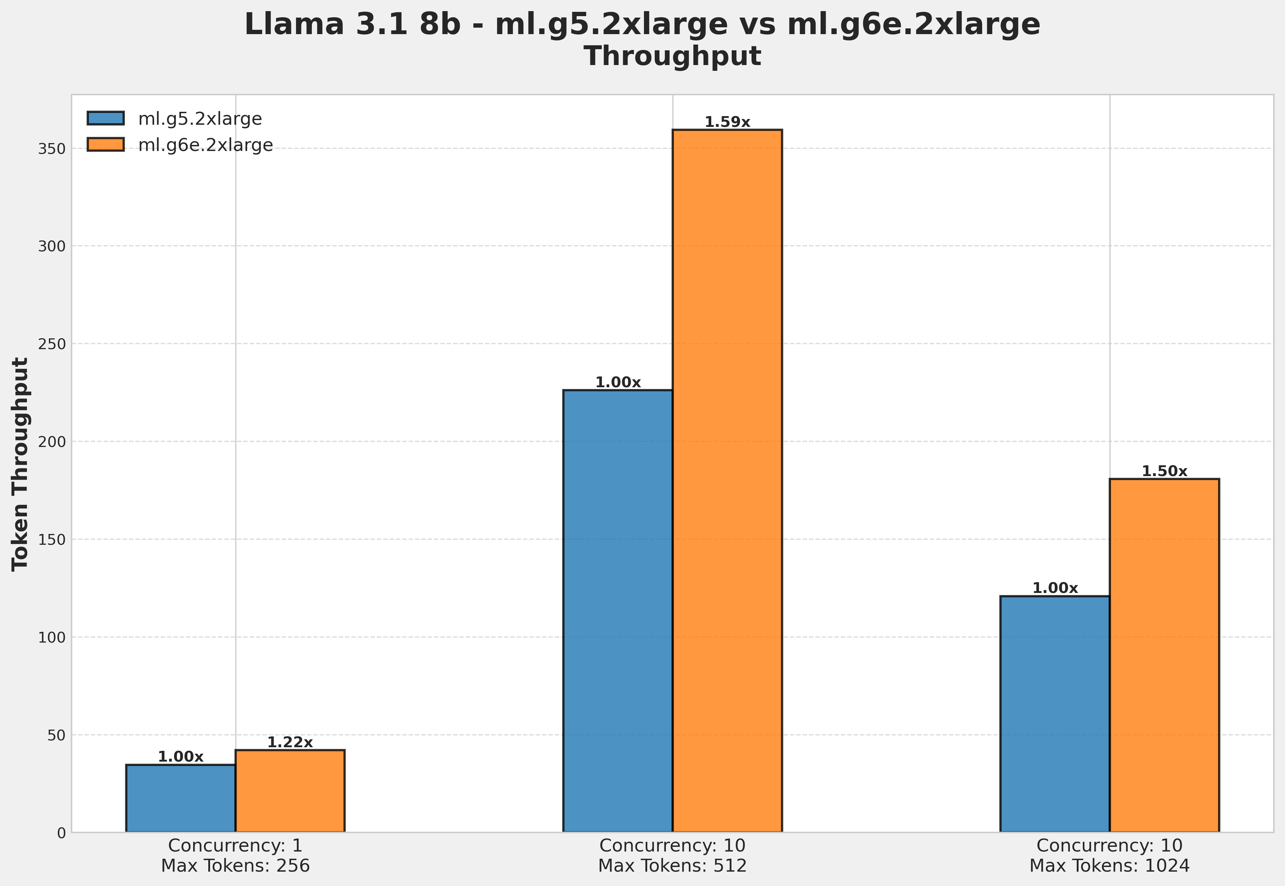

In the two figures below, we see that for long context lengths of 512 and 1,024 tokens, G6e.2xlarge delivers up to 37% lower latency and 60% higher throughput than G5.2xlarge for a Llama 3.1 8B model.

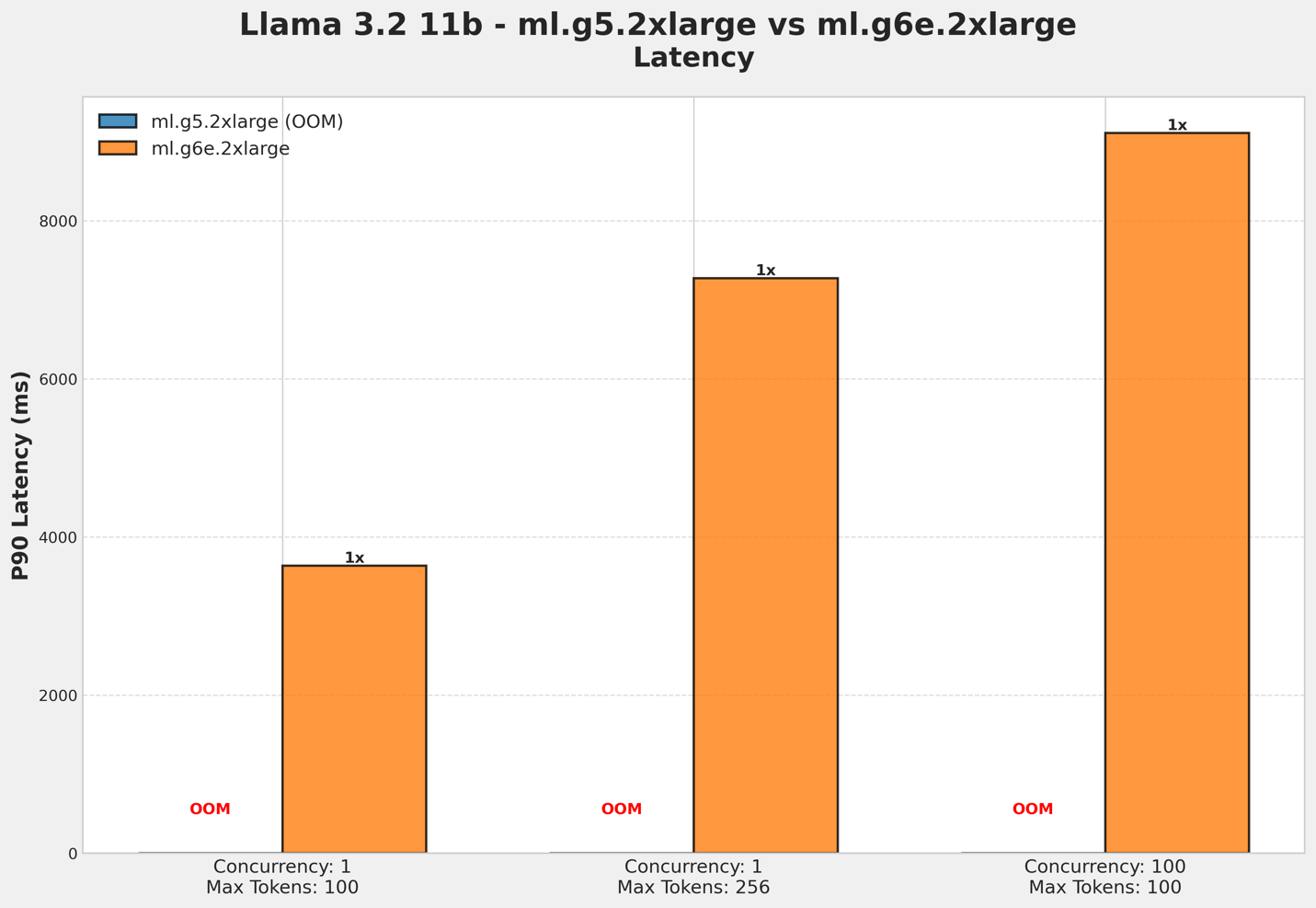

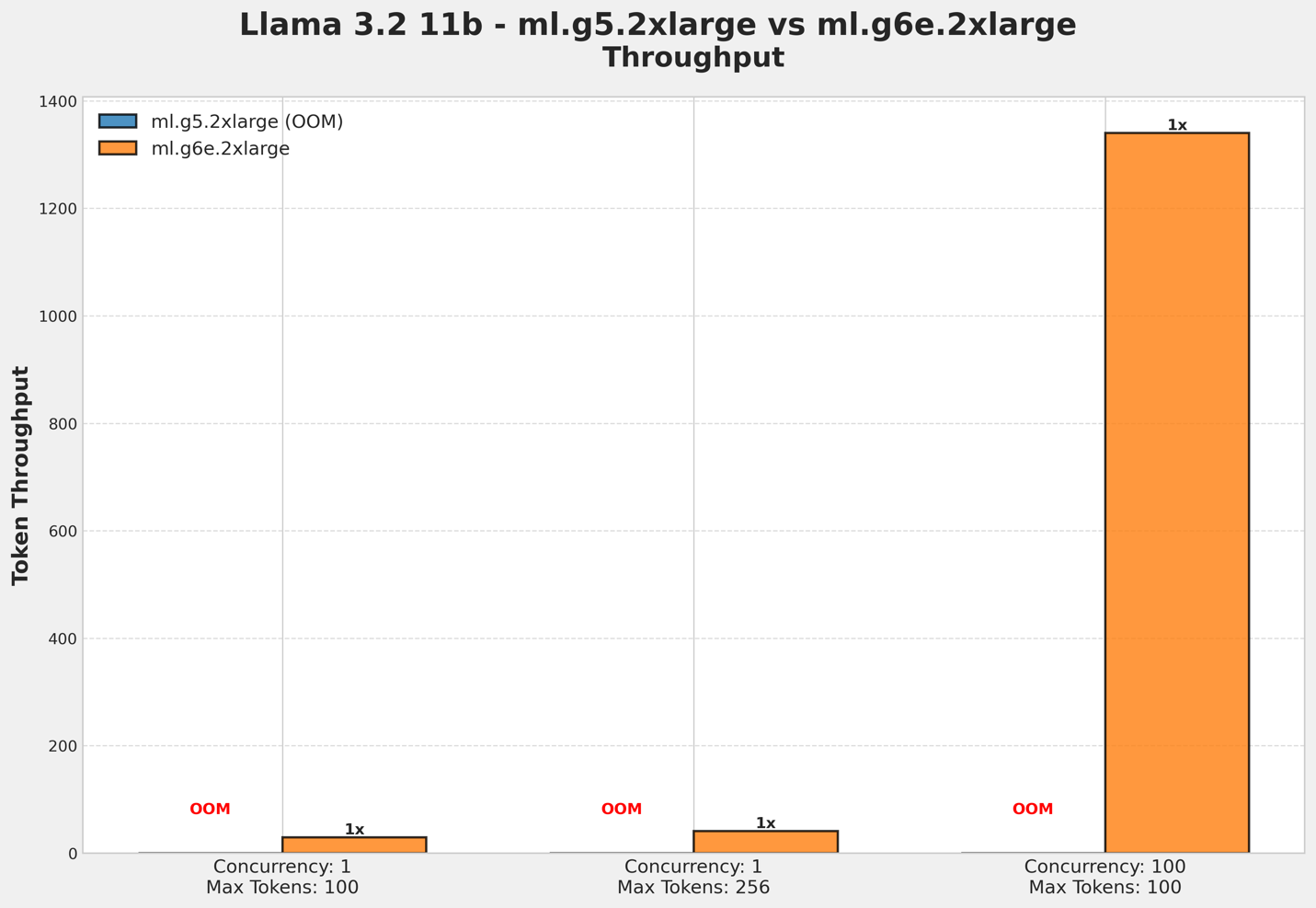
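You can run a comparison like this against your own endpoints. The sketch below measures median and p90 request latency and aggregate output-token throughput at a fixed concurrency level. It is not the harness used for the benchmarks in this post. The names `run_load_test` and `invoke` are hypothetical, and `invoke` is assumed to send one request and return the number of tokens generated. Threads are used because each request mostly waits on the network.

```python
import math
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence


def run_load_test(invoke: Callable[[str], int], prompts: Sequence[str],
                  concurrency: int) -> dict:
    """Send `prompts` with at most `concurrency` requests in flight.

    `invoke` sends one prompt and returns the number of tokens generated.
    Returns p50/p90 request latency in seconds and aggregate output tokens per second.
    """
    def timed(prompt: str) -> tuple:
        start = time.perf_counter()
        tokens = invoke(prompt)
        return time.perf_counter() - start, tokens

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed, prompts))
    wall_seconds = time.perf_counter() - wall_start

    latencies = sorted(latency for latency, _ in results)
    total_tokens = sum(tokens for _, tokens in results)
    # Nearest-rank p90: the smallest latency that at least 90% of requests met.
    p90 = latencies[math.ceil(0.9 * len(latencies)) - 1]
    return {
        "p50_latency_s": statistics.median(latencies),
        "p90_latency_s": p90,
        "tokens_per_s": total_tokens / wall_seconds,
    }
```

To target a real endpoint, `invoke` could wrap the boto3 `sagemaker-runtime` client's `invoke_endpoint` call and return the generated-token count reported by your serving container. That wiring is omitted because the response format depends on the container.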

In the following two figures, we see that G5.2xlarge runs into CUDA out-of-memory (OOM) errors when deploying the Llama 3.2 11B Vision model, while G6e.2xlarge delivers strong performance.

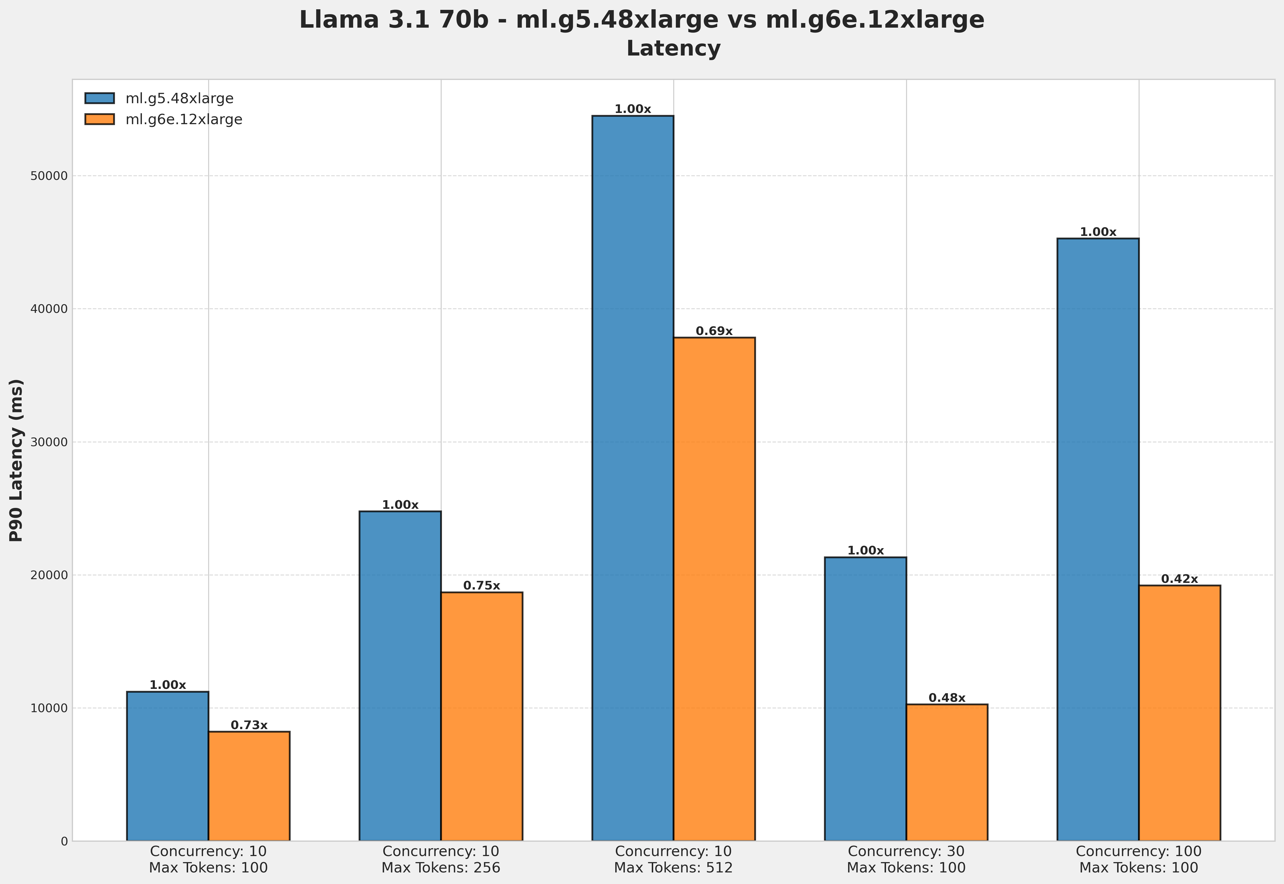

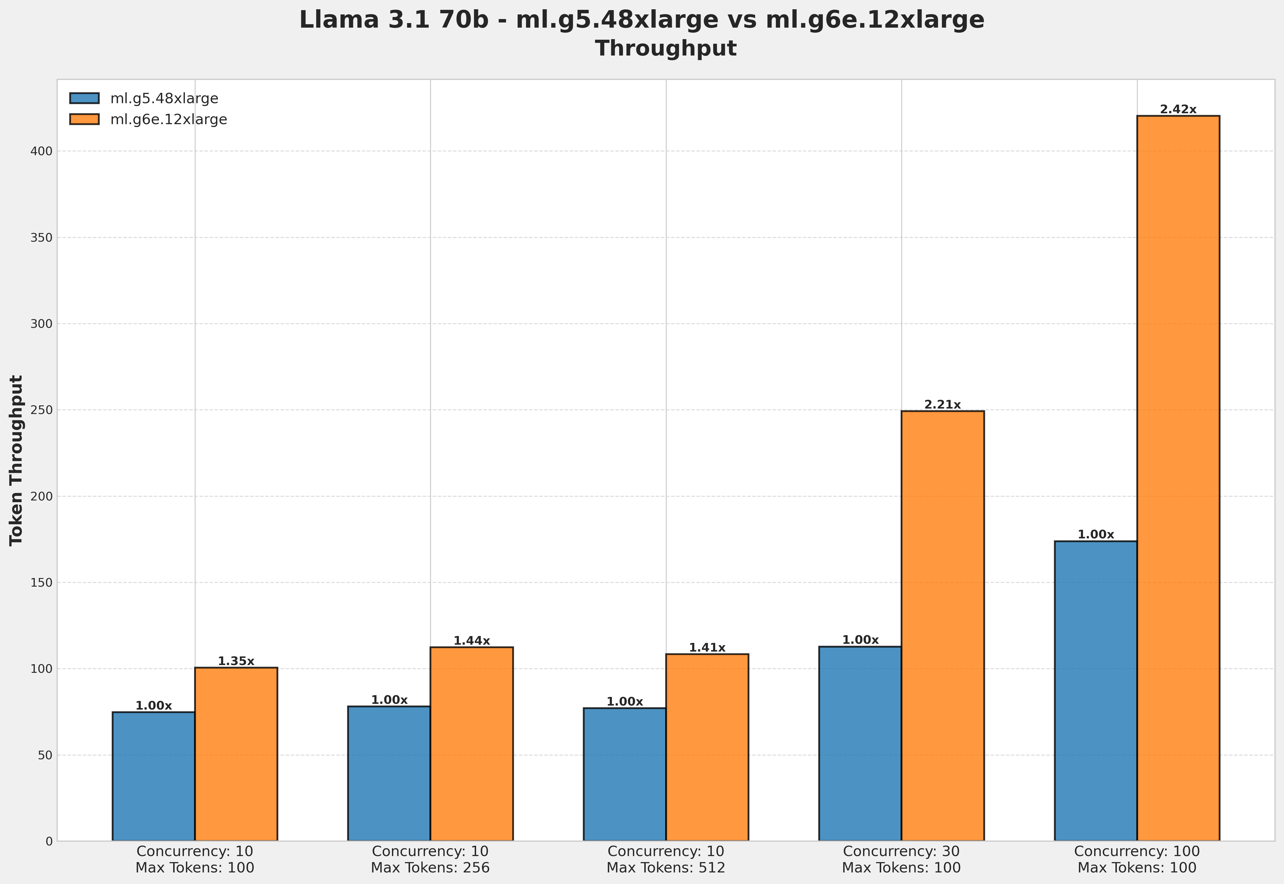

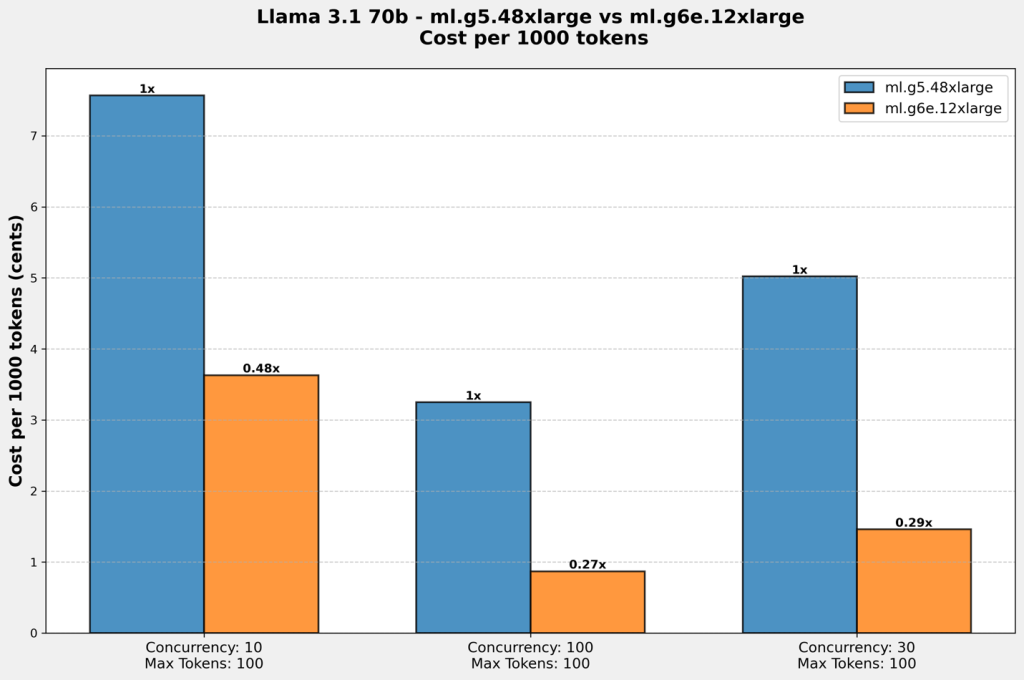

In the two figures below, we compare the G5.48xlarge (8-GPU) node with the G6e.12xlarge (4-GPU) node, which costs 35% less and delivers higher performance. At higher concurrency, G6e.12xlarge provides 60% lower latency and 2.5x higher throughput.

In the figure below, we compare the cost per 1,000 tokens when deploying Llama 3.1 70B, further highlighting the price-performance benefits of G6e instances over G5.
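Cost per 1,000 tokens follows from an instance's hourly price and its sustained throughput. The sketch below is minimal and assumes the instance is kept fully utilized and billed at an hourly on-demand rate. The function name is hypothetical, and no prices are hard-coded because they vary by Region.

```python
def cost_per_1k_tokens(hourly_price_usd: float, tokens_per_second: float) -> float:
    """USD per 1,000 generated tokens for an instance kept fully busy.

    hourly_price_usd: hourly price of the instance (look it up for your Region).
    tokens_per_second: sustained aggregate output throughput measured on that instance.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_price_usd / tokens_per_hour * 1000
```

Because the result is price divided by throughput, a cheaper instance with higher throughput improves cost per token on both counts. For example, an instance priced at 0.65x a baseline that sustains 2.5x its throughput would cost 0.65 / 2.5 = 0.26x as much per token under this idealized model. Measured results also depend on utilization and concurrency.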

Deployment tutorial

Prerequisites

To try this solution with SageMaker, you will need the following prerequisites:

Deployment

You can clone the repository and use the notebook provided here.
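The notebook in the repository is the reference for this step. As a rough illustration only, the sketch below deploys a model to a G6e endpoint with the SageMaker Python SDK and the Hugging Face Text Generation Inference (TGI) container. The container choice, the helper names `build_tgi_env` and `deploy_on_g6e`, and the token limits are assumptions and are not necessarily what the notebook uses. The environment-variable names follow Hugging Face's SageMaker examples and may differ between container versions.

```python
import json


def build_tgi_env(model_id: str, num_gpus: int, max_input_tokens: int = 4096,
                  max_total_tokens: int = 8192) -> dict:
    """Environment variables for a Hugging Face TGI container on SageMaker (assumed names)."""
    return {
        "HF_MODEL_ID": model_id,
        "SM_NUM_GPUS": json.dumps(num_gpus),  # shard the model across this many GPUs
        "MAX_INPUT_LENGTH": json.dumps(max_input_tokens),
        "MAX_TOTAL_TOKENS": json.dumps(max_total_tokens),
    }


def deploy_on_g6e(model_id: str, instance_type: str = "ml.g6e.xlarge", num_gpus: int = 1):
    """Deploy `model_id` to a real-time SageMaker endpoint (creates billable resources)."""
    # Imported lazily so build_tgi_env can be used without the SageMaker SDK installed.
    import sagemaker
    from sagemaker.huggingface import HuggingFaceModel, get_huggingface_llm_image_uri

    role = sagemaker.get_execution_role()  # resolves inside SageMaker Studio or notebooks
    model = HuggingFaceModel(
        role=role,
        image_uri=get_huggingface_llm_image_uri("huggingface"),
        env=build_tgi_env(model_id, num_gpus),
    )
    return model.deploy(
        initial_instance_count=1,
        instance_type=instance_type,
        container_startup_health_check_timeout=900,
    )
```

For example, `predictor = deploy_on_g6e("Qwen/Qwen2.5-14B-Instruct")` would target a single-GPU G6e.xlarge. For a 4-GPU G6e.12xlarge, you would pass `instance_type="ml.g6e.12xlarge", num_gpus=4`. Gated models such as Llama also need a Hugging Face access token, commonly passed as `HUGGING_FACE_HUB_TOKEN` in `env`.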

Clean up

To avoid incurring unnecessary charges, clean up the deployed resources when you are finished using them. You can remove the deployed model and endpoint with the following code:

predictor.delete_model()
predictor.delete_endpoint()
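If the `predictor` object is no longer available (for example, after a notebook kernel restart), you can remove the same resources by endpoint name using the low-level boto3 SageMaker client. The sketch below is minimal. The helper name `delete_endpoint_resources` is hypothetical, and the sketch assumes the endpoint's models are not shared with other endpoints.

```python
def delete_endpoint_resources(sm_client, endpoint_name: str) -> list:
    """Delete an endpoint, its endpoint configuration, and the models it serves.

    `sm_client` is expected to be a boto3 SageMaker client, e.g. boto3.client("sagemaker").
    Returns the names of the deleted models.
    """
    config_name = sm_client.describe_endpoint(EndpointName=endpoint_name)["EndpointConfigName"]
    variants = sm_client.describe_endpoint_config(EndpointConfigName=config_name)["ProductionVariants"]
    model_names = [variant["ModelName"] for variant in variants]

    # Delete the endpoint first so no model is still serving traffic when it is removed.
    sm_client.delete_endpoint(EndpointName=endpoint_name)
    sm_client.delete_endpoint_config(EndpointConfigName=config_name)
    for name in model_names:
        sm_client.delete_model(ModelName=name)
    return model_names
```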

Conclusion

G6e instances on SageMaker make it possible to deploy a wide variety of open source models cost-effectively. With greater memory capacity, improved performance, and better cost-effectiveness, these instances offer a compelling solution for organizations looking to deploy and scale their AI applications. Their ability to handle larger models, support longer context lengths, and maintain high performance makes G6e instances particularly valuable for modern AI applications. Try the code to deploy a model on G6e.

About the authors

Vivek Gangasani is a Senior Solutions Architect specializing in GenAI at AWS. He helps GenAI startups build innovative solutions using AWS services and accelerated computing. He is currently focused on developing strategies for fine-tuning and optimizing the inference performance of large language models. In his free time, Vivek enjoys hiking, watching movies, and trying different cuisines.

Alan Tan is a Senior Product Manager at SageMaker, leading efforts on large model inference. He is passionate about applying machine learning to analytics. Outside of work, he enjoys the outdoors.

Pavan Kumar Madduri is an Associate Solutions Architect at Amazon Web Services. He has a strong interest in designing innovative solutions in generative AI and is passionate about helping customers harness the power of the cloud. He earned his master's degree in Information Technology from Arizona State University. Outside of work, he enjoys swimming and watching movies.

Michael Nguyen is a Senior Startup Solutions Architect at AWS, specializing in using AI/ML to drive innovation and develop business solutions on AWS. Michael holds 12 AWS certifications, as well as bachelor's and master's degrees in electrical and computer engineering and an MBA, from Penn State University, Binghamton University, and the University of Delaware.
