As generative artificial intelligence (AI) inference becomes increasingly critical for businesses, customers are looking for ways to scale their generative AI operations or integrate generative AI models into existing workflows. Model optimization has emerged as a crucial step, allowing organizations to balance cost-effectiveness and responsiveness and improve productivity. However, price and performance requirements vary widely across use cases. For chat applications, minimizing latency is key to delivering an interactive experience, while real-time applications such as recommendations require maximizing throughput. Navigating these trade-offs is a significant obstacle to adopting generative AI quickly, because you must carefully select and evaluate different optimization techniques.

To overcome these challenges, we are excited to introduce the Inference Optimization Toolkit, a fully managed model optimization feature in Amazon SageMaker. This new feature delivers up to ~2x higher throughput while reducing costs by up to ~50% for generative AI models such as Llama 3, Mistral, and Mixtral. For example, with a Llama 3-70B model, you can achieve up to ~2,400 tokens/sec on an ml.p5.48xlarge instance, compared to ~1,200 tokens/sec without any optimization.
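The cost figure follows from the throughput figure: at a fixed hourly instance price, doubling tokens per second halves the cost of each generated token. The following sketch works through that arithmetic with the throughput numbers above. The hourly price is a hypothetical placeholder, not the actual ml.p5.48xlarge price, and the calculation assumes the instance is kept fully busy.

```python
def cost_per_million_tokens(hourly_price_usd: float, tokens_per_sec: float) -> float:
    """Cost of generating one million tokens at a sustained throughput on one instance."""
    tokens_per_hour = tokens_per_sec * 3600
    return hourly_price_usd / tokens_per_hour * 1_000_000


# Hypothetical placeholder price; substitute your actual instance pricing.
HOURLY_PRICE_USD = 100.0

baseline = cost_per_million_tokens(HOURLY_PRICE_USD, tokens_per_sec=1_200)
optimized = cost_per_million_tokens(HOURLY_PRICE_USD, tokens_per_sec=2_400)

print(f"baseline:  ${baseline:.2f} per 1M tokens")
print(f"optimized: ${optimized:.2f} per 1M tokens")
print(f"reduction: {1 - optimized / baseline:.0%}")
```

Because the price cancels out of the ratio, the ~50% reduction holds for any hourly price, provided utilization is the same in both cases.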

The Inference Optimization Toolkit uses the latest generative AI model optimization techniques, such as compilation, quantization, and speculative decoding, to help you reduce generative AI model optimization time from months to hours while achieving the best price-performance ratio for your use case. For compilation, the toolkit uses the Neuron compiler to optimize the model's computational graph for specific hardware, such as AWS Inferentia, enabling faster runtimes and lower resource utilization. For quantization, the toolkit uses Activation-aware Weight Quantization (AWQ) to efficiently reduce model size and memory footprint while preserving quality. For speculative decoding, the toolkit uses a faster draft model to propose candidate tokens that the larger target model then verifies in parallel, improving inference speed for longer text generation tasks. For more information about each technique, see Optimizing Model Inference with Amazon SageMaker. For more details and benchmark results for popular open source models, see Achieve up to ~2x higher performance while reducing costs by up to ~50% for generative AI inference on Amazon SageMaker with the new inference optimization toolkit – Part 1.
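To make the speculative decoding idea concrete, here is a conceptual sketch of greedy speculative decoding using toy integer-token "models". It is not SageMaker's implementation, and the `target` and `draft` functions are hypothetical stand-ins. The draft proposes `k` tokens, the target checks them, keeps the longest prefix it agrees with, and substitutes its own token at the first disagreement. As a result, the output is identical to plain greedy decoding with the target model, while needing fewer target verification rounds.

```python
from typing import Callable, List, Tuple

# A "model" here maps the token sequence so far to its greedy next token.
NextToken = Callable[[List[int]], int]


def greedy_decode(model: NextToken, prompt: List[int], max_new: int) -> List[int]:
    """Reference decoder: one target-model call per generated token."""
    seq = list(prompt)
    for _ in range(max_new):
        seq.append(model(seq))
    return seq


def speculative_decode(
    target: NextToken, draft: NextToken, prompt: List[int], max_new: int, k: int = 4
) -> Tuple[List[int], int]:
    """Greedy speculative decoding; returns (sequence, number of verification rounds)."""
    seq = list(prompt)
    rounds = 0
    while len(seq) - len(prompt) < max_new:
        # 1) The cheap draft model proposes k tokens autoregressively.
        proposal, ctx = [], list(seq)
        for _ in range(k):
            token = draft(ctx)
            proposal.append(token)
            ctx.append(token)

        # 2) The target verifies the proposal. A real system scores all k
        #    positions in one batched forward pass; here we loop for clarity.
        rounds += 1
        ctx = list(seq)
        for token in proposal:
            expected = target(ctx)
            if token != expected:
                ctx.append(expected)  # first mismatch: keep the target's token, drop the rest
                break
            ctx.append(token)
        else:
            ctx.append(target(ctx))  # all k accepted: the same pass yields one bonus token
        seq = ctx
    return seq[: len(prompt) + max_new], rounds


# Hypothetical toy models: the draft agrees with the target except after token 9.
def target(seq: List[int]) -> int:
    return (seq[-1] * 3 + 1) % 10


def draft(seq: List[int]) -> int:
    return 0 if seq[-1] == 9 else target(seq)


out, rounds = speculative_decode(target, draft, prompt=[5], max_new=12)
assert out == greedy_decode(target, [5], 12)
print(out, "verification rounds:", rounds)
```

The speedup depends on how often the draft agrees with the target: each round costs one target pass but can emit up to `k + 1` tokens.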

In this post, we demonstrate how to get started with the Inference Optimization Toolkit for supported models in Amazon SageMaker JumpStart and the Amazon SageMaker Python SDK. SageMaker JumpStart is a fully managed model hub that allows you to explore, fine-tune, and deploy popular open source models with just a few clicks. You can use pre-optimized models or create your own custom optimizations. Alternatively, you can accomplish the same tasks with the SageMaker Python SDK, as shown in the following sections. For the full list of supported models, see Optimizing Model Inference with Amazon SageMaker.

Using pre-optimized models in SageMaker JumpStart

The Inference Optimization Toolkit provides pre-optimized models that are tuned for the best cost-performance ratio at scale, without compromising accuracy. You can choose a configuration based on the latency and throughput requirements of your use case and deploy it with a single click.

Taking the Meta-Llama-3-8b model in SageMaker JumpStart as an example, choose Deploy on the model page. In the deployment settings, expand the model configuration options, select the number of concurrent users, and deploy the optimized model.

Deploying a pre-optimized model with the SageMaker Python SDK

You can also deploy a pre-optimized generative AI model using the SageMaker Python SDK in just a few lines of code. In the following code, we set up a ModelBuilder for a SageMaker JumpStart model. ModelBuilder is a class in the SageMaker Python SDK that provides fine-grained control over deployment aspects such as instance types, network isolation, and resource allocation. You can use it to create a deployable model instance, converting framework models (such as XGBoost or PyTorch) or inference specifications into SageMaker-compatible models for deployment. For more details, see Building a Model in Amazon SageMaker with ModelBuilder.
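A minimal sketch of that setup follows. It assumes the `ModelBuilder` and `SchemaBuilder` classes from `sagemaker.serve.builder` and the JumpStart model ID `meta-textgeneration-llama-3-8b` (confirm the ID in the JumpStart catalog). The sample request and response only show `SchemaBuilder` how to serialize payloads, so their content is illustrative. `sagemaker.get_execution_role()` works inside SageMaker notebooks and Studio; elsewhere, pass an IAM role ARN instead. The SDK imports are placed inside the function so that the payload helper can be used without AWS credentials.

```python
from typing import Any, Dict, List, Tuple

# Assumed JumpStart model ID for Meta-Llama-3-8b; confirm it in the JumpStart catalog.
JS_MODEL_ID = "meta-textgeneration-llama-3-8b"


def sample_payloads() -> Tuple[Dict[str, Any], List[Dict[str, str]]]:
    """Illustrative request/response pair that SchemaBuilder uses to infer serialization."""
    sample_input = {
        "inputs": "Hello, I'm a language model,",
        "parameters": {"max_new_tokens": 128, "top_p": 0.9, "temperature": 0.6},
    }
    sample_output = [{"generated_text": "Hello, I'm a language model, and I'm here to help."}]
    return sample_input, sample_output


def create_model_builder(model_id: str = JS_MODEL_ID):
    """Create a ModelBuilder for a JumpStart model (needs the sagemaker package and AWS access)."""
    import sagemaker
    from sagemaker.serve.builder.model_builder import ModelBuilder
    from sagemaker.serve.builder.schema_builder import SchemaBuilder

    session = sagemaker.Session()
    sample_input, sample_output = sample_payloads()
    return ModelBuilder(
        model=model_id,
        schema_builder=SchemaBuilder(sample_input, sample_output),
        sagemaker_session=session,
        role_arn=sagemaker.get_execution_role(),
    )
```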

List the available pre-benchmarked configurations with the following code:
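A minimal sketch, assuming `model_builder` is a ModelBuilder for a JumpStart model as described above, and that its `display_benchmark_metrics()` method prints the pre-benchmarked latency and throughput for each instance type and configuration (verify the method in the SDK reference for your version):

```python
def show_benchmarks(model_builder) -> None:
    """Print the pre-benchmarked configurations for a JumpStart-backed ModelBuilder.

    Assumes model_builder exposes display_benchmark_metrics(), which prints a table
    of latency and throughput per instance type, configuration, and concurrency.
    """
    model_builder.display_benchmark_metrics()
```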

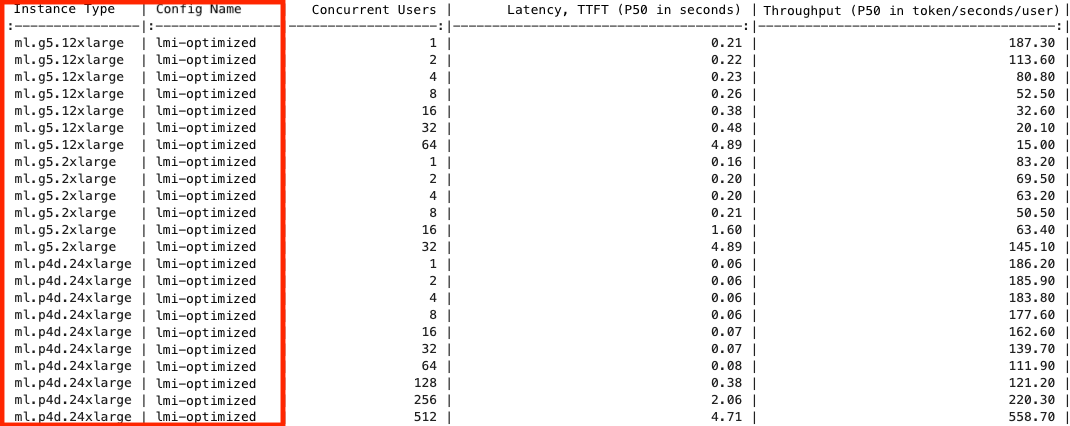

Choose the appropriate instance_type and config_name from the list based on your concurrent users, latency, and throughput requirements. The table above shows the latency and throughput at different levels of concurrency for each instance type and configuration name. If the configuration name is lmi-optimized, the configuration has been pre-optimized by SageMaker. You can then call .build() to run the optimization job. When the job is complete, you can deploy the model to an endpoint and test its predictions. See the following code:
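The selection and deployment steps can be sketched as follows. `pick_config` is a hypothetical helper, not part of the SDK: it takes rows you transcribe from the benchmark table (the dictionary keys and the latency unit are our own assumptions) and returns the highest-throughput row that meets a latency budget at your expected concurrency. `deploy_preoptimized` assumes the SDK's `set_deployment_config`, `build`, `deploy`, and `predict` calls; deploying creates a billable endpoint.

```python
from typing import Any, Dict, List, Optional


def pick_config(
    rows: List[Dict[str, Any]], concurrent_users: int, max_latency_ms: float
) -> Optional[Dict[str, Any]]:
    """Return the highest-throughput row that meets the latency budget, or None.

    Each row is a hypothetical transcription of one benchmark-table line:
    {"instance_type", "config_name", "concurrent_users", "latency_ms", "throughput_tps"}.
    """
    candidates = [
        r for r in rows
        if r["concurrent_users"] == concurrent_users and r["latency_ms"] <= max_latency_ms
    ]
    return max(candidates, key=lambda r: r["throughput_tps"], default=None)


def deploy_preoptimized(model_builder, instance_type: str, config_name: str, sample_input: Dict[str, Any]):
    """Select a pre-optimized configuration, build it, deploy an endpoint, and run one prediction."""
    model_builder.set_deployment_config(instance_type=instance_type, config_name=config_name)
    optimized_model = model_builder.build()
    predictor = optimized_model.deploy(accept_eula=True)  # accepts the model's end-user license
    return predictor.predict(sample_input)
```

For example, pass the `instance_type` and `config_name` of the row returned by `pick_config` to `deploy_preoptimized`. Remember to delete the endpoint when you finish testing.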

Using the Inference Optimization Toolkit to Create Custom Optimizations

In addition to using a pre-optimized model, you can create custom optimizations based on the instance type you choose. The following table lists the available combinations. In the following sections, we first explore compilation on AWS Inferentia and then try the other optimization techniques on GPU instances.

| Instance type | Optimization technique | Settings |
| --- | --- | --- |
| AWS Inferentia | Compilation | Neuron compiler |
| GPU | Quantization | AWQ |
| GPU | Speculative decoding | Draft model provided by SageMaker or bring your own (BYO) |

SageMaker JumpStart Compilation

For compilation, you can select the same Meta-Llama-3-8b model from SageMaker JumpStart and choose Optimize on the model page. On the optimization settings page, choose ml.inf2.8xlarge as your instance type. Then, provide an Amazon Simple Storage Service (Amazon S3) output location for the optimized artifacts. For large models such as Llama 2 70B, the compilation job can take more than an hour. Therefore, we recommend using the Inference Optimization Toolkit to compile ahead of time. That way, you only need to compile once.

Compiling with the SageMaker Python SDK

For the SageMaker Python SDK, you can configure the compilation by overriding environment variables through the compilation_config parameter of the .optimize() function. For more details on compilation_config, refer to the LMI NeuronX ahead-of-time compilation of models tutorial.
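A minimal sketch of ahead-of-time compilation for Inferentia2 follows. It assumes that `compilation_config` accepts an `OverrideEnvironment` dictionary of LMI NeuronX environment variables. The variable names follow LMI's `OPTION_*` convention. The values are illustrative choices to check against the tutorial, not tuned recommendations: a tensor parallel degree of 2 for the two NeuronCores of the single Inferentia2 chip in ml.inf2.8xlarge, a 4,096-token context, and fp16.

```python
from typing import Any, Dict


def neuron_compilation_config(
    tensor_parallel_degree: int = 2,
    n_positions: int = 4096,
    dtype: str = "fp16",
    max_rolling_batch_size: int = 4,
) -> Dict[str, Any]:
    """Build a compilation_config that overrides LMI NeuronX environment variables.

    Values are strings because they become container environment variables.
    """
    return {
        "OverrideEnvironment": {
            "OPTION_TENSOR_PARALLEL_DEGREE": str(tensor_parallel_degree),
            "OPTION_N_POSITIONS": str(n_positions),
            "OPTION_DTYPE": dtype,
            "OPTION_ROLLING_BATCH": "auto",
            "OPTION_MAX_ROLLING_BATCH_SIZE": str(max_rolling_batch_size),
        }
    }


def compile_for_inferentia(model_builder, output_path: str, instance_type: str = "ml.inf2.8xlarge"):
    """Run the ahead-of-time compilation job and return the optimized model."""
    return model_builder.optimize(
        instance_type=instance_type,
        accept_eula=True,
        compilation_config=neuron_compilation_config(),
        output_path=output_path,  # S3 prefix for the compiled artifacts
    )
```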

SageMaker JumpStart Speculative Decoding and Quantization

To optimize models on GPUs, note that ml.g5.12xlarge is the default deployment instance type for Meta-Llama-3-8b. You can choose quantization, speculative decoding, or both as optimization options. Quantization uses AWQ to reduce model weights to low-bit (INT4) representations. Finally, provide an S3 output URI to store the optimized artifacts.
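To illustrate what activation-aware quantization adds over plain rounding, the following is a toy, pure-Python sketch of the idea behind AWQ for a single output channel. Input channels that receive large activations are scaled up before INT4 rounding, and the scale is then folded back out. A small grid search over the scaling exponent picks the value that minimizes output error on calibration activations. This is a simplification for intuition, not SageMaker's implementation or the AWQ reference code. The weights, activations, and group size are made up.

```python
from typing import List, Sequence, Tuple


def quantize_int4(values: Sequence[float], group_size: int = 4) -> List[float]:
    """Symmetric round-to-nearest INT4 per group; returns the dequantized values."""
    out: List[float] = []
    for start in range(0, len(values), group_size):
        group = values[start:start + group_size]
        scale = max(abs(v) for v in group) / 7 or 1.0  # map the largest magnitude to +/-7
        out.extend(max(-8, min(7, round(v / scale))) * scale for v in group)
    return out


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def output_error(w: Sequence[float], w_hat: Sequence[float], xs: Sequence[Sequence[float]]) -> float:
    """Squared error of the layer output over calibration activations."""
    return sum((dot(w, x) - dot(w_hat, x)) ** 2 for x in xs)


def awq_style_quantize(
    w: Sequence[float], xs: Sequence[Sequence[float]], group_size: int = 4,
    alphas: Sequence[float] = (0.0, 0.25, 0.5, 0.75, 1.0),
) -> Tuple[float, float, List[float]]:
    """Search per-channel scales s = mean|x| ** alpha; returns (error, alpha, dequantized weights)."""
    mean_abs = [sum(abs(x[i]) for x in xs) / len(xs) for i in range(len(w))]
    best = None
    for alpha in alphas:
        s = [max(m, 1e-8) ** alpha for m in mean_abs]
        q = quantize_int4([wi * si for wi, si in zip(w, s)], group_size)
        w_hat = [qi / si for qi, si in zip(q, s)]  # fold the scale back out of the weights
        err = output_error(w, w_hat, xs)
        if best is None or err < best[0]:
            best = (err, alpha, w_hat)
    return best


# Made-up example: channel 1 receives much larger activations than the others.
w = [0.12, -0.03, 0.51, -0.47, 0.08, 0.33, -0.21, 0.02]
xs = [[0.1, 8.0, 0.2, 0.1, 0.3, 0.1, 0.2, 0.1], [0.2, -7.5, 0.1, 0.2, 0.1, 0.3, 0.1, 0.2]]

rtn_error = output_error(w, quantize_int4(w), xs)
awq_error, alpha, _ = awq_style_quantize(w, xs)
print(f"plain INT4 error: {rtn_error:.6f}, activation-aware error: {awq_error:.6f} (alpha={alpha})")
```

Because `alpha = 0` gives all scales equal to 1 (plain rounding), the search can never do worse than round-to-nearest on the calibration data.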

With speculative decoding, you can improve latency and throughput by using either the draft model provided by SageMaker or your own draft model, brought from the Hugging Face public model hub or loaded from your own S3 bucket.

After the optimization job is complete, you can deploy the model or run further evaluation jobs on the optimized model. In the SageMaker Studio UI, you can use the default sample datasets or provide your own through an S3 URI. At the time of writing, the performance evaluation option is available only through the Amazon SageMaker Studio UI.

Speculative Decoding and Quantization Using the SageMaker Python SDK

The following is the SageMaker Python SDK code snippet for quantization. You just need to provide the quantization_config attribute in the .optimize() function.
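A minimal sketch of that call follows. It assumes that `quantization_config` takes an `OverrideEnvironment` dictionary and that setting the LMI variable `OPTION_QUANTIZE` to `"awq"` selects AWQ. Verify both against the SDK and LMI documentation for your container version.

```python
from typing import Any, Dict

# Assumed LMI environment override that selects AWQ INT4 weight quantization.
AWQ_QUANTIZATION_CONFIG: Dict[str, Any] = {"OverrideEnvironment": {"OPTION_QUANTIZE": "awq"}}


def quantize_with_awq(model_builder, output_path: str, instance_type: str = "ml.g5.12xlarge"):
    """Run an AWQ quantization job and return the optimized model, ready to deploy."""
    return model_builder.optimize(
        instance_type=instance_type,
        accept_eula=True,
        quantization_config=AWQ_QUANTIZATION_CONFIG,
        output_path=output_path,  # S3 prefix for the quantized artifacts
    )
```

The returned model can then be deployed with `.deploy()`, in the same way as the pre-optimized configuration.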

For speculative decoding, pass a speculative_decoding_config attribute instead and configure either the SageMaker-provided draft model or a custom draft model. You may need to adjust GPU memory utilization based on the sizes of the draft and target models so that both fit on the instance for inference.
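The two draft-model options can be sketched as follows. The `ModelProvider` and `ModelSource` keys and their values reflect our understanding of the `speculative_decoding_config` format and should be checked against the SageMaker Python SDK reference. Any S3 URI you pass is your own.

```python
from typing import Any, Dict, Optional


def speculative_decoding_config(draft_model_s3_uri: Optional[str] = None) -> Dict[str, Any]:
    """Use the SageMaker-provided draft model, or a custom draft model stored in S3."""
    if draft_model_s3_uri is None:
        return {"ModelProvider": "sagemaker"}
    return {"ModelProvider": "custom", "ModelSource": draft_model_s3_uri}


def optimize_with_speculative_decoding(
    model_builder, instance_type: str = "ml.g5.12xlarge", draft_model_s3_uri: Optional[str] = None
):
    """Run a speculative decoding optimization job and return the optimized model."""
    return model_builder.optimize(
        instance_type=instance_type,
        accept_eula=True,
        speculative_decoding_config=speculative_decoding_config(draft_model_s3_uri),
    )
```

A custom draft model should share the target model's tokenizer so that its proposed tokens can be verified directly, and both models must fit in GPU memory on the chosen instance.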

Conclusion

Optimizing generative AI models for inference performance is crucial to delivering cost-effective and responsive generative AI solutions. With the launch of the Inference Optimization Toolkit, you can now optimize your generative AI models using the latest techniques, such as speculative decoding, compilation, and quantization, to achieve up to ~2x higher throughput and reduce costs by up to ~50%. This helps you achieve the optimal balance between price and performance for your specific use cases with just a few clicks in SageMaker JumpStart or a few lines of code using the SageMaker Python SDK. The Inference Optimization Toolkit significantly simplifies the model optimization process, enabling your enterprise to accelerate generative AI adoption and unlock more opportunities to drive better business outcomes.

For more information, see Optimizing Model Inference with Amazon SageMaker and Achieve up to ~2x higher performance while reducing costs by up to ~50% for generative AI inference on Amazon SageMaker with the new inference optimization toolkit – Part 1.

About the authors

James Wu is a Senior AI/ML Specialist Solutions Architect

Saurabh Trikande is a Senior Product Manager

Rishabh Ray Chaudhury is a Senior Product Manager

Kumara Swami Borra is a Front End Engineer

Alwin (Qiyun) Zhao is a Senior Software Development Engineer

Qinglan is a Senior Software Development Engineer (SDE)
