Originally, PyTorch used an eager mode where each PyTorch operation that forms the model is executed independently as soon as it is reached. PyTorch 2.0 introduced torch.compile to speed up PyTorch code compared to the default eager mode. Unlike eager mode, the Torch.compile file pre-compiles the entire model into a single graph in a way that is optimal to run on a given hardware platform. AWS optimized PyTorch's Torch.compile function for AWS Graviton3 processors. This optimization results in up to 2x better performance for Hugging face Model inference (based on geometric mean performance improvement for 33 models) and up to 1.35x better performance for Torch bank Model inference (geometric mean performance improvement for 45 models) compared to default eager mode inference on various natural language processing (NLP), computer vision (CV), and recommendation models on amazon EC2 instances based on AWS Graviton3. Starting with PyTorch 2.3.1, optimizations are available in Torch Python wheels and AWS Graviton PyTorch Deep Learning Container (DLC).

In this blog post, we show how we optimized Torch.Compile performance on AWS Graviton3-based EC2 instances, how to use the optimizations to improve inference performance, and the resulting speedups.

Why Torch.Compile and what is its goal?

In eager mode, operators of a model are executed immediately when encountered. It is easier to use, more suitable for machine learning (ML) researchers, and is therefore the default mode. However, eager mode incurs runtime overhead due to redundant kernel startup and memory read overhead. Whereas in Torch compilation mode, operators are first synthesized into a graph, where one operator is merged with another to reduce and localize memory reads and total kernel startup overhead.

The goal of the AWS Graviton team was to optimize the Torch.Compile backend for Graviton3 processors. PyTorch's enthusiast mode was already optimized for Graviton3 processors with Arm Compute Library (ACL) kernels using oneDNN (aka MKLDNN). So the question was, how to reuse those kernels in torch compilation mode to get the best of graphics compilation and optimized kernel performance together?

Results

The AWS Graviton team extended the Torch inductor and oneDNN primitives that reused ACL cores and optimized compiler mode performance on Graviton3 processors. Starting with PyTorch 2.3.1, the optimizations are available in Torch Python wheels and the AWS Graviton DLC. See Run an inference Section that follows for instructions on installation, runtime configuration, and how to run tests.

To demonstrate performance improvements, we use NLP, CV, and recommendation models. Torch bank and the most downloaded NLP models of Hugging face in question answering, text classification, token classification, translation, zero-shot classification, translation, summarization, feature extraction, text generation, text-to-text generation, fill masking, and sentence similarity tasks to cover a wide variety of customer use cases.

We start by measuring the TorchBench model inference latency, in milliseconds (ms), for eager mode, which is marked as 1.0 with a red dotted line in the graph below. We then compare Torch.compile improvements for the same model inference; the normalized results are plotted in the graph. You can see that for the 45 models we evaluated, there is a 1.35x latency improvement (geographic average for all 45 models).

Image 1: Improving PyTorch model inference performance with Torch.Compile on AWS Graviton3-based c7g instance using TorchBench framework. Benchmark mode performance is marked as 1.0 (higher is better).

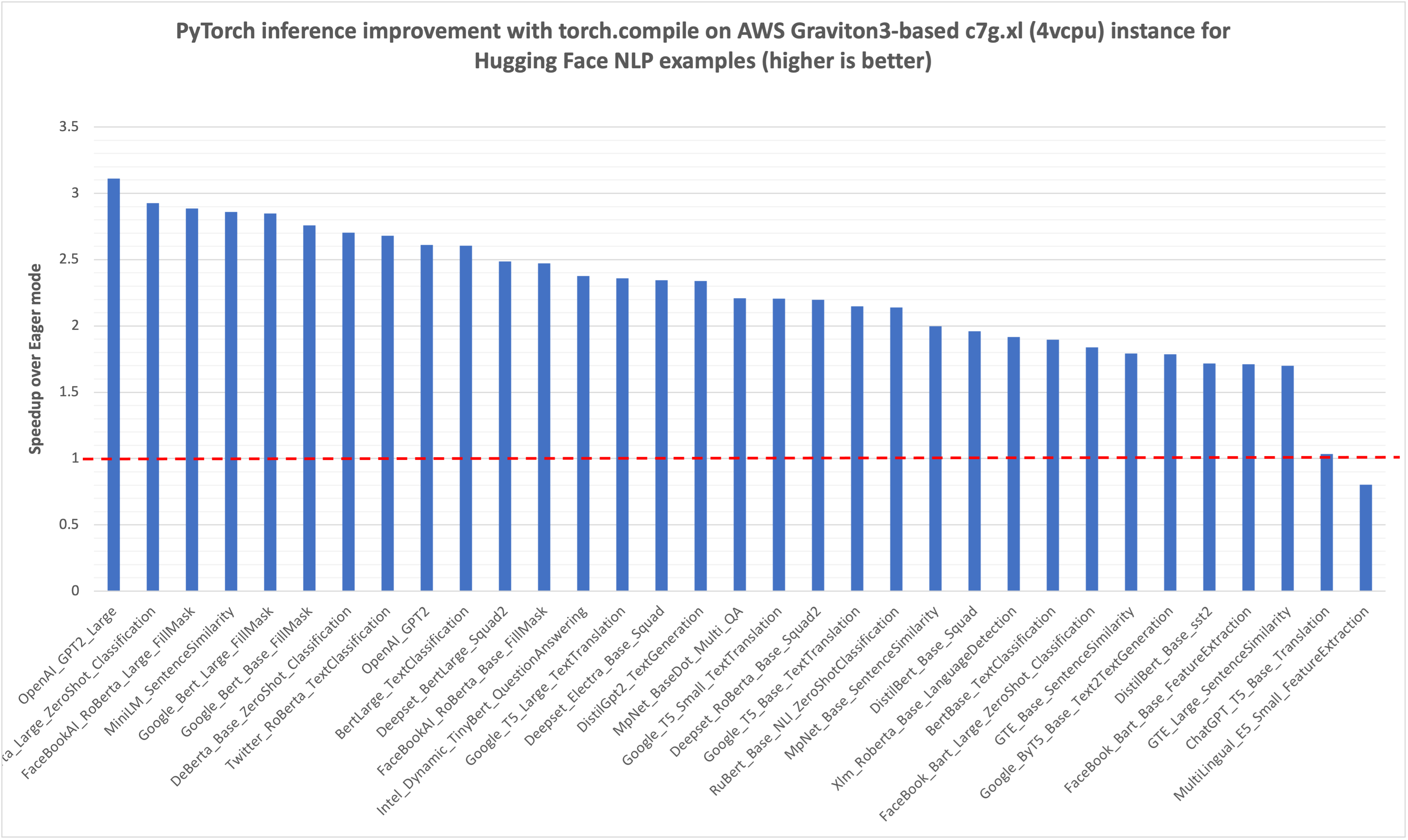

Similar to the TorchBench inference performance graph above, we start by measuring the inference latency of the NLP Hugging Face model, in ms, for eager mode, which is marked as 1.0 with a red dotted line in the graph below. We then compare the improvements of Torch.compile for the same model inference; the normalized results are plotted in the graph. You can see that, for the 33 models we evaluated, there is around a 2x performance improvement (geographic average for all 33 models).

Image 2: Improving Hugging Face NLP model inference performance with Torch.Compile on AWS Graviton3-based c7g instance using Hugging Face example scripts. Baseline eager mode performance is marked as 1.0. (higher is better)

Run an inference

Starting with PyTorch 2.3.1, optimizations are available in the Torch Python wheel and the AWS Graviton PyTorch DLC. This section shows how to run inference in anxious and Torch.Compile modes using Torch Python wheels and benchmark scripts from the Hugging Face and TorchBench repositories.

To successfully run the scripts and reproduce the speedup numbers mentioned in this post, you need an instance of the Graviton3 hardware family (c7g/r7g/m7g/hpc7g). For this post, we are using the c7g.4xl instance (16 vCPU). The instance, AMI details, and required Torch library versions are mentioned in the following snippet.

The generic runtime settings implemented for eager mode inference are equally applicable for Torch.Compile mode, therefore, we set the following environment variables to further improve the performance of Torch.Compile on AWS Graviton3 processors.

TorchBench Benchmark Scripts

TorchBench is a collection of open-source benchmarks used to evaluate the performance of PyTorch. We evaluated 45 models using the scripts from the TorchBench repository. The following code shows how to run the scripts for run mode in compile mode using the Inductor backend.

After the inference runs are complete, the script stores the results in JSON format. The sample output is shown below:

Hugging Face Benchmarking Scripts

Google's T5 small text translation model is one of the 30 Hugging Face models we benchmarked. We are using it as a sample model to demonstrate how to run inference in both build and staging modes. The additional configurations and APIs required to run it in build mode are highlighted in BOLDSave the following script as google_t5_small_text_translation.py .

Run the script with the following steps.

After the inference runs are complete, the script prints the output of the Torch Profiler with the latency breakdown of the Torch operators. The following is the sample output of the Torch Profiler:

Whats Next

Next, we will extend the Torch Inductor CPU backend support for compiling the Llama model and add support for fused GEMM kernels to enable optimization of Torch Inductor operator fusion on AWS Graviton3 processors.

Conclusion

In this tutorial, we explain how we optimized Torch.Compile performance on AWS Graviton3-based EC2 instances, how to use the optimizations to improve PyTorch model inference performance, and demonstrate the resulting speedups. We hope you'll give it a try! If you need help with machine learning software on Graviton, please open an issue in the AWS Graviton Technical Guide GitHub.

About the Author

Sunita Nadampalli is a Software Development Manager and ai/ML expert at AWS. She leads performance optimizations of AWS Graviton software for ai/ML and HPC workloads. She is passionate about open source software development and delivering high-performance, sustainable software solutions for Arm ISA-based SoCs.

Sunita Nadampalli is a Software Development Manager and ai/ML expert at AWS. She leads performance optimizations of AWS Graviton software for ai/ML and HPC workloads. She is passionate about open source software development and delivering high-performance, sustainable software solutions for Arm ISA-based SoCs.