This post is co-written with NVIDIA's Eliuth Triana, Abhishek Sawarkar, Jiahong Liu, Kshitiz Gupta, JR Morgan, and Deepika Padmanabhan.

At the NVIDIA GTC 2024 conference, we announced support for NVIDIA NIM Inference Microservices on Amazon SageMaker Inference. This integration enables you to deploy industry-leading large language models (LLMs) in SageMaker and optimize their performance and cost. Optimized pre-built containers enable deployment of state-of-the-art LLMs in minutes instead of days, making it easy to integrate them into enterprise-grade AI applications.

NIM is built on technologies such as NVIDIA TensorRT, NVIDIA TensorRT-LLM, and vLLM. It is designed to enable simple, secure, high-performance AI inference on NVIDIA GPU-accelerated instances hosted by SageMaker. This allows developers to harness the power of these advanced models using SageMaker APIs and just a few lines of code, accelerating the deployment of cutting-edge AI capabilities within their applications.

NIM, part of the NVIDIA AI Enterprise software platform listed on AWS Marketplace, is a set of inference microservices that bring the power of next-generation LLMs to your applications, providing natural language processing (NLP) and understanding capabilities, whether you're developing chatbots, summarizing documents, or deploying other NLP-powered applications. You can use pre-built NVIDIA containers to host popular LLMs that are optimized for specific NVIDIA GPUs for rapid deployment. Companies like Amgen, A-Alpha Bio, Agilent, and Hippocratic AI are among those using NVIDIA AI on AWS to accelerate computational biology, genomic analysis, and conversational AI.

In this post, we provide guidance on how customers can use generative artificial intelligence (AI) models and LLMs by integrating NVIDIA NIM with SageMaker. We demonstrate how this integration works and how these state-of-the-art models can be deployed in SageMaker, optimizing their performance and cost.

You can use pre-built, optimized NIM containers to deploy LLMs and integrate them into your enterprise-grade AI applications built with SageMaker in minutes, instead of days. We also share a sample notebook you can use to get started, showing the simple APIs and the few lines of code required to use these advanced models.

Solution Overview

Getting started with NIM is straightforward. Within the NVIDIA API Catalog, developers have access to a wide range of NIM-optimized AI models that they can use to build and deploy their own AI applications. You can start prototyping directly in the catalog using the GUI (as shown in the screenshot below) or interact directly with the API for free.

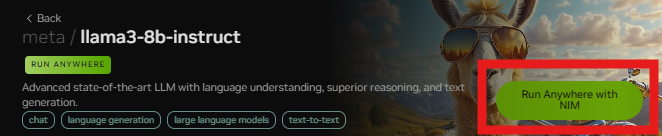

To deploy NIM in SageMaker, you need to download NIM and then deploy it. You can start this process by choosing Run anywhere with NIM for the model of your choice, as shown in the following screenshot.

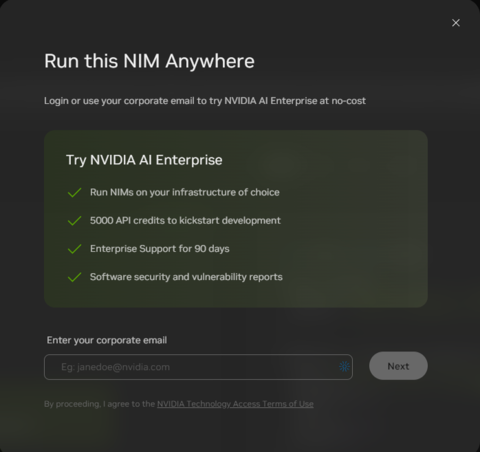

You can sign up for the free 90-day evaluation license in the API Catalog by registering with your organization's email address. This will grant you a personal NGC API key to pull assets from NGC and run them in SageMaker. For details on SageMaker pricing, see Amazon SageMaker pricing.

Prerequisites

As a prerequisite, set up an Amazon SageMaker Studio environment:

- Make sure that the existing SageMaker domain has Docker access enabled. If not, run the following command to update the domain:

- Once Docker access is enabled for the domain, create a user profile by running the following command:

- Create a JupyterLab space for the user profile you created.

- After creating the JupyterLab space, run the sagemaker-distribution-docker-cli-install.sh bash script (in the sagemaker_studio_docker_cli_install directory of the amazon-sagemaker-local-mode repository) to install the Docker CLI.
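The first two prerequisite steps can be sketched with Boto3, as an alternative to running CLI commands. This is a minimal sketch, not the post's original commands: the function names, the domain ID, and the profile name are hypothetical placeholders, and it assumes your credentials are allowed to call `UpdateDomain` and `CreateUserProfile`. Boto3 is imported lazily so the request builders can be inspected without AWS access.

```python
import json


def docker_access_update(domain_id: str) -> dict:
    """Build UpdateDomain arguments that enable Docker access on a domain."""
    return {
        "DomainId": domain_id,
        "DomainSettingsForUpdate": {
            "DockerSettings": {"EnableDockerAccess": "ENABLED"}
        },
    }


def user_profile_request(domain_id: str, profile_name: str) -> dict:
    """Build CreateUserProfile arguments for a new Studio user profile."""
    return {"DomainId": domain_id, "UserProfileName": profile_name}


def enable_docker_access(region: str, domain_id: str) -> None:
    """Send the UpdateDomain call (requires AWS credentials)."""
    import boto3  # lazy import: only needed when actually calling AWS

    sagemaker_client = boto3.client("sagemaker", region_name=region)
    sagemaker_client.update_domain(**docker_access_update(domain_id))


def create_user_profile(region: str, domain_id: str, profile_name: str) -> None:
    """Send the CreateUserProfile call (requires AWS credentials)."""
    import boto3

    sagemaker_client = boto3.client("sagemaker", region_name=region)
    sagemaker_client.create_user_profile(
        **user_profile_request(domain_id, profile_name)
    )


# Hypothetical domain ID, shown only to illustrate the request shape.
print(json.dumps(docker_access_update("d-exampledomain"), indent=2))
```

A domain update is asynchronous, so you may need to wait for the domain to leave the updating state before calling `create_user_profile`.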

Setting up the Jupyter notebook environment

For this series of steps, we use a SageMaker Studio JupyterLab notebook. You also need to attach an Amazon Elastic Block Store (Amazon EBS) volume of at least 300 MB in size, which you can do in the SageMaker Studio domain settings. In this example, we use an ml.g5.4xlarge instance, powered by an NVIDIA A10G GPU.

We start by opening the provided example notebook in our JupyterLab instance, importing the appropriate packages, and setting up the SageMaker session, role, and account information:
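The setup step might look roughly like the following. This is a sketch under stated assumptions rather than the notebook's exact code: `DeployContext` and `load_context` are hypothetical names introduced here, and `load_context` assumes it runs inside SageMaker Studio, where the SageMaker Python SDK can resolve the execution role.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeployContext:
    """Account details that the later deployment steps need."""

    region: str
    account_id: str
    role_arn: str

    @property
    def ecr_registry(self) -> str:
        """Hostname of the account's private Amazon ECR registry."""
        return f"{self.account_id}.dkr.ecr.{self.region}.amazonaws.com"


def load_context() -> DeployContext:
    """Collect region, account ID, and execution role from the environment."""
    import boto3  # lazy imports: only needed when running against AWS
    import sagemaker

    session = boto3.Session()
    account_id = session.client("sts").get_caller_identity()["Account"]
    return DeployContext(
        region=session.region_name,
        account_id=account_id,
        role_arn=sagemaker.get_execution_role(),
    )


# Illustrative values only; a real notebook would call load_context().
example = DeployContext(
    region="us-east-1",
    account_id="123456789012",
    role_arn="arn:aws:iam::123456789012:role/ExampleSageMakerRole",
)
print(example.ecr_registry)
```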

Pull the NIM container from the public container to push it to your private container

The NIM container that comes with built-in SageMaker integration is available in the Amazon ECR Public Gallery. To deploy it securely in your own SageMaker account, you can pull the Docker container from the public Amazon Elastic Container Registry (Amazon ECR) container maintained by NVIDIA and upload it to your own private container:
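The pull, tag, and push sequence can be sketched as follows. The image URI, repository name, and tag are hypothetical placeholders (substitute the values from the NIM listing you chose), and the sketch assumes the private repository already exists and that Docker is already logged in to your private registry.

```python
import shlex
import subprocess
from typing import List


def mirror_commands(public_image: str, registry: str, repo: str, tag: str) -> List[List[str]]:
    """Return the docker commands that copy a public image into a private repo."""
    private_image = f"{registry}/{repo}:{tag}"
    return [
        ["docker", "pull", public_image],
        ["docker", "tag", public_image, private_image],
        ["docker", "push", private_image],
    ]


def run_all(commands: List[List[str]]) -> None:
    """Run each command in order and stop at the first failure."""
    for command in commands:
        subprocess.run(command, check=True)


# Placeholder values: none of these names come from the original post.
commands = mirror_commands(
    public_image="public.ecr.aws/example/nim-image:example-tag",
    registry="123456789012.dkr.ecr.us-east-1.amazonaws.com",
    repo="nim-example",
    tag="example-tag",
)
for command in commands:
    print(shlex.join(command))
```

Calling `run_all(commands)` executes the three steps. Building the commands as lists, rather than one shell string, avoids quoting problems if a tag contains unusual characters.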

Setting up NVIDIA API Key

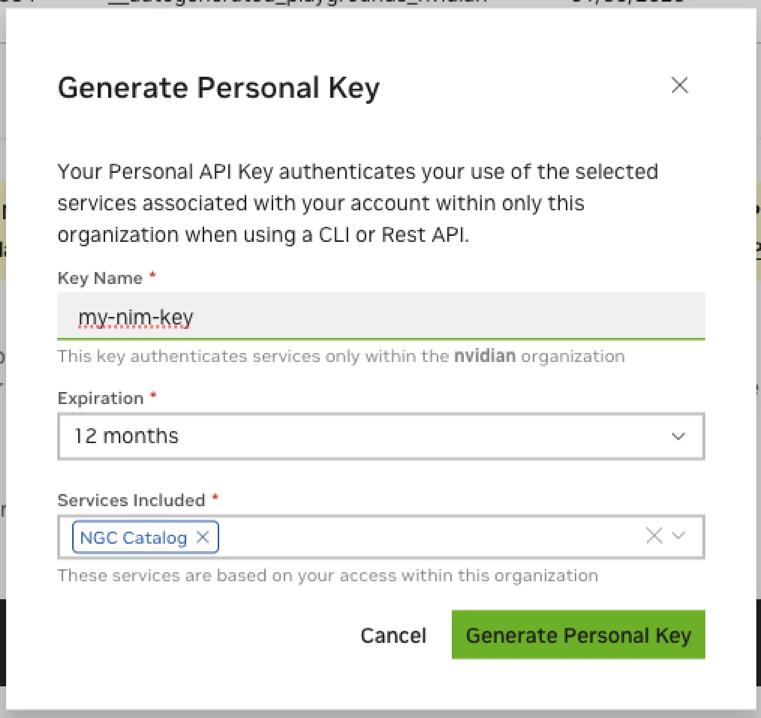

NIMs can be accessed through the NVIDIA API Catalog. You just need to register for an NVIDIA API key from the NGC catalog by choosing Generate Personal Key.

When creating an NGC API key, choose at least NGC Catalog on the Services Included dropdown menu. You can include more services if you plan to reuse this key for other purposes.

For the purposes of this post, we store it in an environment variable:

NGC_API_KEY = YOUR_KEY

This key is used to download pre-optimized model weights when running NIM.
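One way to avoid pasting the key into the notebook itself is to read it from the process environment and fail early if it is missing. The helper name below is hypothetical, and the sketch assumes you exported `NGC_API_KEY` before starting the notebook kernel.

```python
import os
from typing import Mapping


def require_ngc_api_key(env: Mapping[str, str] = os.environ) -> str:
    """Return the NGC API key from the environment, or raise if it is unset."""
    key = env.get("NGC_API_KEY", "").strip()
    if not key:
        raise RuntimeError("Set the NGC_API_KEY environment variable first.")
    return key


# Demonstration with an explicit mapping instead of the real environment.
print(require_ngc_api_key({"NGC_API_KEY": "example-key"}))
```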

Create your SageMaker endpoint

We now have all the resources ready to deploy to a SageMaker endpoint. Using your notebook after setting up your Boto3 environment, first make sure you reference the container you pushed to Amazon ECR in an earlier step:
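A model definition along these lines ties together the private image, the execution role, and the NGC API key. This is a sketch with placeholder names and values. It assumes the container reads the key from an `NGC_API_KEY` environment variable, which matches how the key is described in this post.

```python
def model_request(model_name: str, image_uri: str, role_arn: str, ngc_api_key: str) -> dict:
    """Build CreateModel arguments that point at the private NIM image."""
    return {
        "ModelName": model_name,
        "ExecutionRoleArn": role_arn,
        "PrimaryContainer": {
            "Image": image_uri,
            # The container uses this key to download model weights at startup.
            "Environment": {"NGC_API_KEY": ngc_api_key},
        },
    }


def create_model(region: str, request: dict) -> dict:
    """Send the CreateModel call (requires AWS credentials)."""
    import boto3  # lazy import: only needed when actually calling AWS

    return boto3.client("sagemaker", region_name=region).create_model(**request)


# Placeholder values, shown only to illustrate the request shape.
request = model_request(
    model_name="nim-example-model",
    image_uri="123456789012.dkr.ecr.us-east-1.amazonaws.com/nim-example:example-tag",
    role_arn="arn:aws:iam::123456789012:role/ExampleSageMakerRole",
    ngc_api_key="example-key",
)
print(request["PrimaryContainer"]["Image"])
```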

Once the model definition is set up correctly, the next step is to define the endpoint configuration for deployment. In this example, we deploy the NIM to an ml.g5.4xlarge instance:
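An endpoint configuration for a single ml.g5.4xlarge instance could be sketched like this. The names are placeholders, and the startup health check timeout is an assumed value chosen to leave time for the container to download model weights. Tune it for your model.

```python
def endpoint_config_request(
    config_name: str,
    model_name: str,
    instance_type: str = "ml.g5.4xlarge",
    startup_timeout_seconds: int = 850,  # assumed value, not from the post
) -> dict:
    """Build CreateEndpointConfig arguments with one production variant."""
    return {
        "EndpointConfigName": config_name,
        "ProductionVariants": [
            {
                "VariantName": "AllTraffic",
                "ModelName": model_name,
                "InstanceType": instance_type,
                "InitialInstanceCount": 1,
                "InitialVariantWeight": 1.0,
                "ContainerStartupHealthCheckTimeoutInSeconds": startup_timeout_seconds,
            }
        ],
    }


def create_endpoint_config(region: str, request: dict) -> dict:
    """Send the CreateEndpointConfig call (requires AWS credentials)."""
    import boto3  # lazy import: only needed when actually calling AWS

    client = boto3.client("sagemaker", region_name=region)
    return client.create_endpoint_config(**request)


# Placeholder names, shown only to illustrate the request shape.
config = endpoint_config_request("nim-example-config", "nim-example-model")
print(config["ProductionVariants"][0]["InstanceType"])
```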

Finally, create the SageMaker endpoint:
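Creating the endpoint is asynchronous, so a deployment script usually polls until the endpoint is in service. The following is a sketch with hypothetical function names. It polls `DescribeEndpoint` by hand so the loop can be exercised without AWS, although Boto3's built-in `endpoint_in_service` waiter is an alternative.

```python
import time
from typing import Callable


def wait_for_endpoint(
    describe: Callable[..., dict],
    endpoint_name: str,
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the endpoint is InService; raise on failure or timeout."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        description = describe(EndpointName=endpoint_name)
        status = description["EndpointStatus"]
        if status == "InService":
            return status
        if status == "Failed":
            reason = description.get("FailureReason", "unknown reason")
            raise RuntimeError(f"Endpoint {endpoint_name} failed: {reason}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Endpoint {endpoint_name} is still {status}")
        sleep(poll_seconds)


def create_and_wait(region: str, endpoint_name: str, endpoint_config_name: str) -> str:
    """Create the endpoint and block until it is ready (requires AWS credentials)."""
    import boto3  # lazy import: only needed when actually calling AWS

    client = boto3.client("sagemaker", region_name=region)
    client.create_endpoint(
        EndpointName=endpoint_name, EndpointConfigName=endpoint_config_name
    )
    return wait_for_endpoint(client.describe_endpoint, endpoint_name)
```

Passing `describe` and `sleep` in as parameters keeps the polling logic independent of Boto3, which makes it simple to test with a stub.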

Running inference against SageMaker endpoint with NIM

Once the endpoint is successfully deployed, you can send requests to the NIM-powered SageMaker endpoint using the REST API to try out different questions and prompts with the generative AI models:
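A request against the endpoint might be sketched as follows. The sketch assumes the deployed NIM accepts and returns an OpenAI-style chat completions JSON body. The model identifier, endpoint name, and helper names are placeholders, so check the documentation of the NIM you deployed for the exact schema.

```python
import json


def chat_payload(model: str, prompt: str, max_tokens: int = 100) -> dict:
    """Build a chat-style request body (schema assumed, see the note above)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def first_reply(response_body: bytes) -> str:
    """Extract the first generated message from a chat-style response body."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


def ask(region: str, endpoint_name: str, model: str, prompt: str) -> str:
    """Invoke the SageMaker endpoint and return the model's reply text."""
    import boto3  # lazy import: only needed when actually calling AWS

    runtime = boto3.client("sagemaker-runtime", region_name=region)
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(chat_payload(model, prompt)),
    )
    return first_reply(response["Body"].read())


# Placeholder model identifier, shown only to illustrate the request shape.
print(json.dumps(chat_payload("example/model-id", "Hello! How are you?"), indent=2))
```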

That's it! You now have a working endpoint using NIM in SageMaker.

NIM Licenses

NIM is part of the NVIDIA Enterprise License. NIM comes with a 90-day evaluation license to get you started. To use NIM in SageMaker beyond the 90-day license, connect with NVIDIA for AWS Marketplace private pricing. NIM is also available as a paid offering as part of the NVIDIA AI Enterprise software subscription available on AWS Marketplace.

Conclusion

In this post, we showed you how to get started using NIM in SageMaker for pre-built models. Feel free to try it out by following the example notebook.

We encourage you to explore NIM to adopt it for your own use cases and applications.

About the authors

Saurabh Trikande is a Senior Product Manager for Amazon SageMaker Inference. He is passionate about working with customers and driven by the goal of democratizing machine learning. He focuses on core challenges related to deploying complex machine learning applications, multi-tenant machine learning models, cost optimizations, and making the deployment of deep learning models more accessible. In his free time, Saurabh enjoys hiking, learning about innovative technologies, following TechCrunch, and spending time with his family.

James Park is a Solutions Architect at Amazon Web Services. He works with Amazon.com to design, build, and deploy technology solutions on AWS and has a particular interest in AI and machine learning. In his spare time, he enjoys seeking out new cultures, new experiences, and staying up to date with the latest technology trends. You can find him on LinkedIn.

Qinglan Qing is a Software Development Engineer at AWS. He has worked on several challenging products at Amazon, including high-performance ML inference solutions and high-performance systems of record. Qing’s team successfully launched the first billion-parameter model in Amazon Advertising under very low latency requirements. Qing has in-depth knowledge of infrastructure optimization and deep learning acceleration.

Rahu Ramesh is a Senior GenAI/ML Solutions Architect on the Amazon SageMaker Service team. He focuses on helping customers build, deploy, and migrate production ML workloads to SageMaker at scale. He specializes in the machine learning, AI, and computer vision domains and holds a master’s degree in computer science from the University of Texas at Dallas. In his spare time, he enjoys traveling and photography.

Eliuth Triana is a Developer Relations Manager at NVIDIA responsible for training Amazon’s MLOps, DevOps, and AI scientists and AWS technical experts to master the NVIDIA computing stack to accelerate and optimize generative AI foundation models, spanning data curation, GPU training, model inference, and production deployment on AWS GPU instances. Eliuth is also passionate about mountain biking, skiing, tennis, and poker.

Abhishek Sawarkar is a product manager on the NVIDIA AI Enterprise team, working on integrating NVIDIA AI software into cloud MLOps platforms. He focuses on integrating the end-to-end NVIDIA AI stack within cloud platforms and improving the user experience in accelerated computing.

Jiahong Liu is a Solutions Architect on NVIDIA’s Cloud Service Provider team. He helps customers adopt AI and machine learning solutions that use NVIDIA accelerated computing to address their training and inference challenges. In his spare time, he enjoys origami, DIY projects, and playing basketball.

Kshitiz Gupta is a Solutions Architect at NVIDIA. He enjoys teaching cloud customers about the GPU AI technologies NVIDIA has to offer and helping them accelerate their machine learning and deep learning applications. Outside of work, he enjoys running, hiking, and wildlife watching.

JR Morgan is a Principal Technical Product Manager in NVIDIA’s Enterprise Products Group, excelling at the intersection of Partner Services, APIs, and Open Source. After work, you can find him riding a Gixxer, at the beach, or spending time with his wonderful family.

Deepika Padmanabhan is a Solutions Architect at NVIDIA. She enjoys building and deploying NVIDIA software solutions in the cloud. Outside of work, she enjoys solving puzzles and playing video games like Age of Empires.
