In language model alignment, the effectiveness of reinforcement learning from human feedback (RLHF) depends heavily on the quality of the underlying reward model. Because the reward model steers every RLHF update, ensuring its quality is a central concern. The core challenge is building a reward model that accurately reflects human preferences, which is critical for both performance and alignment.

Recent advances in large language models (LLMs) have been driven in part by aligning their behavior with human values. RLHF, a predominant strategy, steers models toward preferred outputs by learning a reward signal that captures the subjective quality of text. However, accurately modeling human preferences requires expensive data collection, and the quality of the resulting preference model depends on the amount of feedback, the distribution of responses, and the accuracy of the labels.
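To make "modeling human preferences" concrete, a common formulation (an assumption here, since the article does not specify one) is the Bradley–Terry model: a reward model r scores each response, and the probability that a preferred response y_w beats a rejected response y_l for query x is the logistic sigmoid of their reward difference. Training minimizes the negative log-likelihood of the labeled pairs, so mislabeled pairs or a narrow response distribution feed directly into the learned reward.

```latex
P(y_w \succ y_l \mid x) = \sigma\big(r(x, y_w) - r(x, y_l)\big), \qquad
\mathcal{L}(r) = -\,\mathbb{E}_{(x,\, y_w,\, y_l)}\Big[\log \sigma\big(r(x, y_w) - r(x, y_l)\big)\Big]
```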

Researchers from ETH Zurich, the Max Planck Institute for Intelligent Systems in Tübingen, and Google Research have presented "West-of-N: Synthetic Preference Generation for Improved Reward Modeling", a method that improves reward model quality by adding synthetic preference data to the training set. Building on the success of Best-of-N sampling in language model training, they extend the idea to reward model training: their self-training strategy generates preference pairs by selecting the best and worst candidates from a pool of responses to a given query.
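The selection rule can be written compactly. The notation below is ours rather than the paper's: π is the language model policy, x a query, r a base reward model, and N the number of sampled responses. Best-of-N sampling keeps only y⁺, whereas West-of-N keeps the pair (y⁺, y⁻) as a pseudo-labeled preference in which y⁺ is preferred over y⁻.

```latex
y_1, \dots, y_N \sim \pi(\cdot \mid x), \qquad
y^{+} = \arg\max_{i} \, r(x, y_i), \qquad
y^{-} = \arg\min_{i} \, r(x, y_i)
```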

West-of-N generates synthetic preference data by sampling several responses to a query from the language model policy and pairing the best and worst of them, as ranked by a reward model. This self-training strategy substantially improves reward model performance, with an effect comparable to adding a similar amount of human preference data. The procedure is given as Algorithm 1 in the paper, together with a theoretical guarantee on the probability that the generated pairs are labeled correctly. Additional filtering based on model confidence and on the response distribution further improves the quality of the generated data.
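The selection and confidence-filtering steps can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' Algorithm 1: `west_of_n_pair`, `build_synthetic_pairs`, `toy_sampler`, and `toy_reward` are hypothetical names, the sampler and reward function are toy stand-ins for the policy and the base reward model, confidence is assumed to be a sigmoid of the reward gap, and the response-distribution filter is not modeled.

```python
import math
from typing import Callable, List, Optional, Tuple

# Hypothetical type aliases: a sampler draws n responses for a query,
# and a reward function scores a (query, response) pair.
Sampler = Callable[[str, int], List[str]]
RewardFn = Callable[[str, str], float]


def west_of_n_pair(
    query: str,
    sample_fn: Sampler,
    reward_fn: RewardFn,
    n: int = 8,
    min_confidence: float = 0.0,
) -> Optional[Tuple[str, str]]:
    """Return a synthetic (chosen, rejected) pair for `query`, or None if filtered."""
    if n < 2:
        raise ValueError("need at least two candidates to form a pair")
    candidates = sample_fn(query, n)
    scored = [(reward_fn(query, y), y) for y in candidates]
    # Best and worst candidates under the base reward model.
    best_score, best = max(scored, key=lambda t: t[0])
    worst_score, worst = min(scored, key=lambda t: t[0])
    # Assumed confidence measure: sigmoid of the reward gap. The gap is
    # non-negative, so exp(-gap) lies in (0, 1] and cannot overflow.
    gap = best_score - worst_score
    confidence = 1.0 / (1.0 + math.exp(-gap))
    if confidence < min_confidence:
        return None  # too close to call; drop rather than risk a wrong label
    return best, worst


def build_synthetic_pairs(queries, sample_fn, reward_fn, n=8, min_confidence=0.0):
    """Collect (query, chosen, rejected) triples that survive filtering."""
    pairs = []
    for q in queries:
        pair = west_of_n_pair(q, sample_fn, reward_fn, n, min_confidence)
        if pair is not None:
            pairs.append((q, *pair))
    return pairs


def toy_sampler(query: str, n: int) -> List[str]:
    # Hypothetical stand-in for sampling n responses from the policy.
    return [query + "!" * k for k in range(n)]


def toy_reward(query: str, response: str) -> float:
    # Hypothetical stand-in for the base reward model: rewards extra length.
    return float(len(response) - len(query))


pair = west_of_n_pair("hi", toy_sampler, toy_reward, n=4, min_confidence=0.9)
print(pair)  # prints ('hi!!!', 'hi')
```

With four toy candidates the reward gap is 3, so the confidence is about 0.95 and the pair passes a 0.9 threshold; raising the threshold to 0.99 would filter it out and return `None`. The resulting triples would then be mixed into the reward model's training data alongside any human-labeled pairs.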

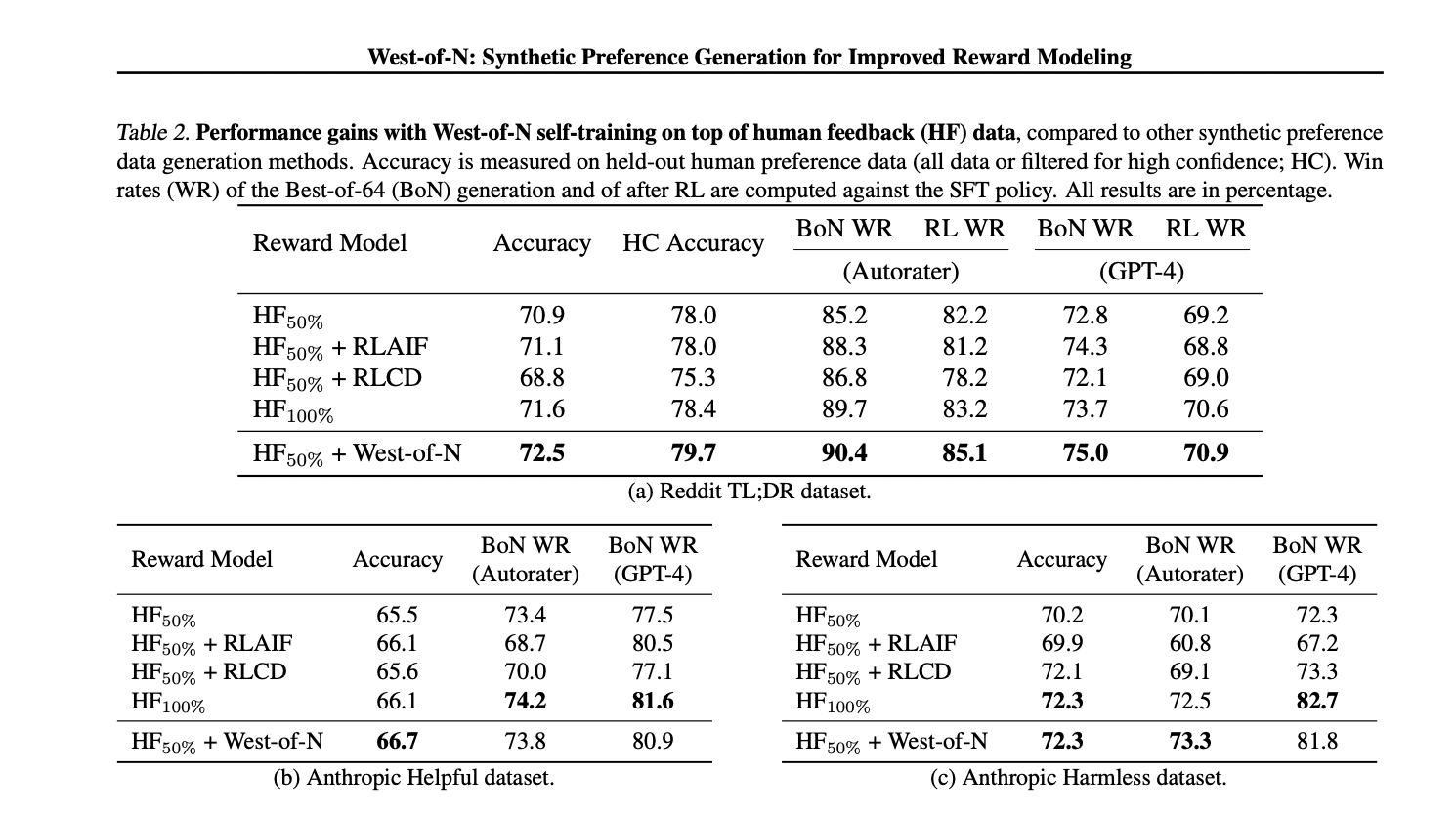

The study evaluates West-of-N on the Reddit TL;DR summarization dataset and the Anthropic Helpful and Harmless dialogue datasets. The results indicate that West-of-N significantly improves reward model performance, with gains comparable to those from adding a similar amount of human feedback data, and that it outperforms other synthetic preference generation methods such as RLAIF and RLCD. West-of-N consistently improves reward model accuracy, Best-of-N sampling, and RL fine-tuning across different types of base preference data, demonstrating its effectiveness for language model alignment.

To conclude, researchers from Google Research and partner institutions have proposed West-of-N, an effective strategy for improving reward model (RM) performance in RLHF. Experiments show that the method works across several datasets and types of initial preference data. The study highlights the potential of Best-of-N sampling and semi-supervised learning for preference modeling. The authors also suggest exploring complementary techniques, such as noisy student training, to push RM performance further alongside West-of-N.

Check out the Paper. All credit for this research goes to the researchers of this project.

Asjad is an intern consultant at Marktechpost. He is pursuing a B.Tech in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching applications of machine learning in healthcare.