Large language models (LLMs), such as ChatGPT and Llama, have attracted substantial attention due to their exceptional natural language processing capabilities, enabling diverse applications ranging from text generation to code completion. Despite their immense usefulness, the high operational costs of these models have posed a significant challenge, leading researchers to seek innovative solutions to improve their efficiency and scalability.

Generating a single response can cost around $0.01, so serving billions of users, each with multiple daily interactions, quickly becomes expensive. Costs grow in direct proportion to usage and are especially high in tasks such as code autocompletion, where the model is invoked continuously while the developer types. Recognizing the need to optimize decoding, researchers have focused on speeding up the attention operation, a central component in generating coherent, contextually relevant text.

LLM inference, often called decoding, generates tokens one at a time, and the attention operation is a major factor in total generation time. FlashAttention v2 sped up attention during training by reducing memory reads and writes, and FasterTransformer provides optimized inference kernels, but long-context decoding is still slow. During decoding the query is a single token, so a kernel that parallelizes only over the batch, the attention heads, and blocks of queries (as FlashAttention does) leaves most of the GPU idle when the batch is small. At the same time, the keys and values that each new token must attend to grow with the context. As LLMs are increasingly asked to handle long documents, conversations, and codebases, attention can therefore consume a large share of inference time and limit overall efficiency.
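To make the decoding bottleneck concrete, here is a minimal NumPy sketch of a single decoding step. It is an illustration, not code from FlashAttention, FasterTransformer, or Flash-Decoding. The one new query vector per head attends over every cached key and value. The function names, the `(heads, seq_len, head_dim)` layout, and the query block size of 128 are assumptions chosen for illustration. The second function counts independent tiles for a hypothetical kernel that splits work only over batch, heads, and query blocks.

```python
import numpy as np


def decode_step_attention(q, k_cache, v_cache):
    """Softmax attention for ONE new token against the whole KV cache.

    q:       (heads, head_dim)           query of the token being generated
    k_cache: (heads, seq_len, head_dim)  keys of all previous tokens
    v_cache: (heads, seq_len, head_dim)  values of all previous tokens
    Returns an array of shape (heads, head_dim).
    """
    scale = 1.0 / np.sqrt(q.shape[-1])
    # One score per cached token, so work and memory reads grow with seq_len.
    scores = np.einsum("hd,hsd->hs", q, k_cache) * scale
    scores = scores - scores.max(axis=-1, keepdims=True)  # stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return np.einsum("hs,hsd->hd", weights, v_cache)


def parallel_work_items(batch, heads, query_len, block_q=128):
    """Hypothetical tile count for a kernel that splits work only over
    batch, heads, and blocks of `block_q` queries (FlashAttention-style)."""
    query_blocks = -(-query_len // block_q)  # ceiling division
    return batch * heads * query_blocks


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    heads, seq_len, head_dim = 4, 16, 8
    q = rng.standard_normal((heads, head_dim))
    k = rng.standard_normal((heads, seq_len, head_dim))
    v = rng.standard_normal((heads, seq_len, head_dim))
    print(decode_step_attention(q, k, v).shape)  # (4, 8)
    # Decoding means query_len == 1: with batch 1 and 32 heads there are only
    # 32 tiles, fewer than the 108 streaming multiprocessors on an NVIDIA A100.
    print(parallel_work_items(batch=1, heads=32, query_len=1))  # 32
```

During training or prompt processing, a sequence contributes thousands of query rows, so the same formula yields plenty of tiles. The single-row query of decoding is what leaves the GPU underused.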

To address these challenges, the researchers introduced Flash-Decoding, which builds on FlashAttention. Its key idea is to parallelize along a new dimension: the sequence length of the keys and values. Flash-Decoding works in three steps. First, it splits the keys and values into smaller chunks. Second, it computes the attention of the query with each chunk in parallel using FlashAttention, and for each chunk it also stores one extra scalar per row: the log-sum-exp of the attention scores. Third, it computes the final output by reducing over all chunks, using the log-sum-exp values to scale each chunk's contribution. Because the chunks are processed independently, the GPU stays fully occupied even with small batch sizes and long contexts, and the result is mathematically equivalent to standard attention rather than an approximation.
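The three steps can be checked numerically with a small NumPy sketch. This is not the Flash-Decoding CUDA kernel. It runs the chunks sequentially on the CPU and only demonstrates the underlying math. Splitting the keys and values, then recombining the partial outputs with their log-sum-exp values, reproduces ordinary attention. If chunk i yields output o_i and log-sum-exp l_i, the combined output is the sum over i of exp(l_i - L) * o_i, where L = log(sum over i of exp(l_i)). The function names and the `num_splits` parameter are hypothetical.

```python
import numpy as np


def reference_decode_attention(q, k, v):
    """Plain softmax attention of one query per head over all keys/values."""
    scores = np.einsum("hd,hsd->hs", q, k) / np.sqrt(q.shape[-1])
    w = np.exp(scores - scores.max(axis=-1, keepdims=True))
    return np.einsum("hs,hsd->hd", w / w.sum(axis=-1, keepdims=True), v)


def attend_chunk(q, k, v, scale):
    """Step 2 for one chunk: chunk-local attention output plus the
    log-sum-exp (LSE) of the chunk's scores, one scalar per head."""
    scores = np.einsum("hd,hsd->hs", q, k) * scale        # (heads, chunk_len)
    m = scores.max(axis=-1, keepdims=True)
    p = np.exp(scores - m)
    denom = p.sum(axis=-1, keepdims=True)
    out = np.einsum("hs,hsd->hd", p / denom, v)           # (heads, head_dim)
    lse = (m + np.log(denom))[:, 0]                       # (heads,)
    return out, lse


def split_kv_decode_attention(q, k_cache, v_cache, num_splits):
    """Hypothetical split-KV decoding attention in the style of Flash-Decoding."""
    scale = 1.0 / np.sqrt(q.shape[-1])
    seq_len = k_cache.shape[1]
    # Step 1: split keys/values along the sequence dimension.
    bounds = np.linspace(0, seq_len, num_splits + 1).astype(int)
    chunks = [(s, e) for s, e in zip(bounds[:-1], bounds[1:]) if e > s]
    # Step 2: chunks are independent; a GPU kernel would run them in parallel.
    partial = [attend_chunk(q, k_cache[:, s:e], v_cache[:, s:e], scale)
               for s, e in chunks]
    outs = np.stack([o for o, _ in partial])        # (splits, heads, head_dim)
    lses = np.stack([x for _, x in partial])        # (splits, heads)
    # Step 3: reduce. Weighting each chunk by exp(lse_i - lse_total) converts
    # its local softmax normalization into the global one.
    lse_total = np.logaddexp.reduce(lses, axis=0)   # (heads,)
    weights = np.exp(lses - lse_total)              # sums to 1 over splits
    return np.einsum("sh,shd->hd", weights, outs)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    q = rng.standard_normal((4, 8))
    k = rng.standard_normal((4, 1000, 8))
    v = rng.standard_normal((4, 1000, 8))
    exact = reference_decode_attention(q, k, v)
    print(np.allclose(split_kv_decode_attention(q, k, v, num_splits=16), exact))  # True
```

Choosing the number of chunks is a trade-off. More chunks expose more parallelism when the batch is small, but they also add work to the final reduction step.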

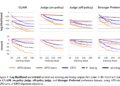

To evaluate Flash-Decoding, the researchers benchmarked decoding speed on the CodeLlama-34B model. Flash-Decoding delivered up to 8x faster decoding for very long sequences compared with existing approaches. Microbenchmarks of multi-head attention across a range of sequence lengths and batch sizes further showed that its run-time stays nearly constant as the sequence length scales up to 64k tokens. These results suggest that Flash-Decoding can substantially improve the efficiency and scalability of long-context LLM inference.

In summary, Flash-Decoding tackles the attention bottleneck in the decoding phase of large language models by parallelizing over the key/value sequence length. Keeping the GPU fully utilized during long-context generation could substantially reduce operating costs and make these models more accessible across applications. This marks a notable step forward for large language model inference.

Check out the Reference page and Project page. All credit for this research goes to the researchers of this project.

Madhur Garg is a consulting intern at MarktechPost. He is currently pursuing his Bachelor's degree in Civil and Environmental Engineering at the Indian Institute of Technology (IIT), Patna. He has a strong passion for machine learning and enjoys exploring the latest technological advancements and their practical applications. With a keen interest in artificial intelligence, Madhur is determined to contribute to data science and help realize its potential impact across industries.
