LLMs are driving major advances in research and development, and research objectives and methodologies have shifted markedly toward LLM-centric approaches. However, LLMs are expensive to run, which puts large-scale deployment out of reach for many. Reducing inference latency is therefore a major challenge, especially in dynamic applications that demand responsiveness.

The KV cache is used for autoregressive decoding in LLMs. It stores the key-value pairs of multi-head attention computed during the prefill phase of inference, and new KV pairs are appended to it during the decoding stage. By keeping these intermediate key and value activations, the cache avoids recomputing them for every generated token, reducing the per-token attention cost from quadratic to linear in sequence length. The KV cache improves efficiency, but it grows linearly with batch size, sequence length, and model size. Once it exceeds GPU memory capacity, it has to be offloaded to the CPU, which introduces several bottlenecks that increase latency and reduce throughput.
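To make the linear growth concrete, here is a rough size estimate. It is a minimal sketch that assumes standard multi-head attention (one key and one value vector of width `hidden_size` per token per layer) and 2-byte (fp16) elements; the model dimensions are hypothetical and not taken from the paper.

```python
def kv_cache_bytes(num_layers, batch_size, seq_len, hidden_size, bytes_per_elem=2):
    """Approximate KV cache size: keys and values for every layer, sequence, and token.

    Assumes plain multi-head attention with fp16 elements (assumption, not from the paper).
    """
    keys_and_values = 2  # one K tensor and one V tensor per layer
    return keys_and_values * num_layers * batch_size * seq_len * hidden_size * bytes_per_elem


# Hypothetical model and workload, chosen only for illustration.
size = kv_cache_bytes(num_layers=32, batch_size=32, seq_len=2048, hidden_size=4096)
print(f"KV cache: {size / 2**30:.0f} GiB")

# Doubling the batch size (or the sequence length) doubles the cache.
doubled = kv_cache_bytes(num_layers=32, batch_size=64, seq_len=2048, hidden_size=4096)
print(f"Doubled batch: {doubled / 2**30:.0f} GiB")
```

Under these assumptions the cache alone can approach or exceed the memory of a single GPU, which is why offloading to the CPU comes into play.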

PCIe bandwidth becomes a limiting factor, especially when the cache is transferred from the CPU to the GPU for computation. A slow PCIe link can push latency an order of magnitude above normal levels, leaving the GPU idle for substantial periods.
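A back-of-the-envelope calculation shows how the transfer can dominate. This is only a sketch: the 32 GB/s figure is the nominal one-direction bandwidth of PCIe 4.0 x16 (real throughput is lower), and the per-layer cache size and GPU compute time are hypothetical values, not measurements.

```python
def transfer_seconds(num_bytes, bandwidth_gb_per_s):
    """Time to move num_bytes over a link of the given bandwidth (1 GB = 1e9 bytes)."""
    return num_bytes / (bandwidth_gb_per_s * 1e9)


def gpu_idle_fraction(transfer_s, compute_s):
    """Share of a step the GPU spends waiting when transfer and compute run back to back."""
    return transfer_s / (transfer_s + compute_s)


PCIE4_X16_GB_PER_S = 32.0        # nominal figure; an assumption for this estimate
kv_bytes_per_layer = 1 * 2**30   # hypothetical: 1 GiB of KV cache for one layer
compute_s = 0.003                # hypothetical GPU attention time for that layer

transfer_s = transfer_seconds(kv_bytes_per_layer, PCIE4_X16_GB_PER_S)
idle = gpu_idle_fraction(transfer_s, compute_s)
print(f"transfer: {transfer_s * 1e3:.1f} ms, GPU idle share: {idle:.0%}")
```

With these numbers the transfer takes roughly ten times longer than the computation it feeds, so the GPU waits for most of each step unless the transfer is hidden behind other work.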

Previous work has attempted to mitigate slow PCIe transfers. Still, these approaches often fall short because data transfer and GPU computation times are mismatched, particularly with large batch sizes and long contexts. Others depend on CPU resources, which then become the limiting factor. This article discusses a novel approach to PCIe and GPU optimization.

Researchers at the University of Southern California propose an efficient CPU-GPU I/O-aware LLM inference method that optimizes PCIe utilization. It uses partial KV cache recomputation and asynchronous overlapping to address the system bottleneck of loading large KV caches. Instead of transferring the entire KV cache, the method transfers smaller activation segments to the GPU, which then rebuilds the corresponding part of the cache from these activations. The key is to compute attention scores in a way that ensures minimal loss of information.
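The idea of partial recomputation can be illustrated with a toy example. This sketch assumes that keys and values are plain linear projections of the stored activations (K = X·W_k, V = X·W_v, with no bias or positional encoding); the variable names, sizes, and the `split` value are hypothetical, and the "CPU" and "GPU" sides are simulated in ordinary Python lists.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
seq_len, hidden, split = 8, 4, 5  # toy sizes; `split` = tokens whose KV is recomputed

# "CPU side": stored activations X and the KV cache derived from them.
x = random_matrix(seq_len, hidden, rng)
w_k = random_matrix(hidden, hidden, rng)
w_v = random_matrix(hidden, hidden, rng)
k_full, v_full = matmul(x, w_k), matmul(x, w_v)

# Transfer: activations for the first `split` tokens, cached K/V for the rest.
x_sent = x[:split]
k_sent, v_sent = k_full[split:], v_full[split:]

# "GPU side": recompute K/V for the first tokens, then append the transferred rows.
k_gpu = matmul(x_sent, w_k) + k_sent
v_gpu = matmul(x_sent, w_v) + v_sent

elements_full = 2 * seq_len * hidden
elements_partial = split * hidden + 2 * (seq_len - split) * hidden
print("cache rebuilt exactly:", k_gpu == k_full and v_gpu == v_full)
print("elements transferred:", elements_partial, "instead of", elements_full)
```

Because an activation row is half the size of its key and value rows combined, every token handled by recomputation halves the PCIe traffic for that token, at the price of extra GPU work.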

The authors propose a fully automated method for deciding how to split the work between recomputation and communication. The system consists of three modules that together minimize GPU latency:

- Profiler module: Collects system hardware information, such as PCIe bandwidth and GPU processing speed.

- Scheduler module: Formulates the problem as a linear programming task to determine the optimal KV split point from the hardware information and user settings. The goal is to maximize the overlap between computation and communication.

- Runtime module: Coordinates data transfer between the two devices and manages memory allocations.

The Scheduler module, which is responsible for finding the optimal KV split, works in two ways:

- Row-by-row scheduling: Reduces latency with a row-by-row execution plan. The GPU starts rebuilding the KV cache while the remaining activations are loaded asynchronously.

- Column-by-column scheduling: Maximizes throughput and supports inference with large batch sizes by reusing model weights across batches. It overlaps the transfer of the KV cache and activations with MHA (multi-head attention) computation across multiple batches instead of processing each layer sequentially within a batch.

Furthermore, using a six-process communication parallelism strategy, the Runtime module enables simultaneous GPU computation and CPU-GPU communication.
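The trade-off behind the split point can be sketched with a simple cost model. This is not the authors' linear program: it is a brute-force search over a simplified row-by-row timeline, assuming the activations for the first `split` tokens are sent first and the GPU then recomputes their K/V while the cached K/V of the remaining tokens stream over PCIe. All function names and parameter values are hypothetical.

```python
def step_latency(split, seq_len, act_bytes_per_token, recompute_s_per_token, bandwidth_bytes_per_s):
    """Latency of loading one layer's KV cache under the simplified timeline.

    1. Transfer activations for the first `split` tokens.
    2. Recompute their K/V on the GPU while the K/V of the other tokens is transferred.
    """
    act_transfer = split * act_bytes_per_token / bandwidth_bytes_per_s
    # K and V together are twice the size of the activation for the same token.
    kv_transfer = 2 * (seq_len - split) * act_bytes_per_token / bandwidth_bytes_per_s
    recompute = split * recompute_s_per_token
    return act_transfer + max(recompute, kv_transfer)


def best_split(seq_len, act_bytes_per_token, recompute_s_per_token, bandwidth_bytes_per_s):
    """Try every split point and return the one with the lowest modeled latency."""
    return min(
        range(seq_len + 1),
        key=lambda s: step_latency(
            s, seq_len, act_bytes_per_token, recompute_s_per_token, bandwidth_bytes_per_s
        ),
    )


# Hypothetical profile: fp16 activations of width 4096, 25 GB/s effective PCIe bandwidth.
params = dict(
    seq_len=2048,
    act_bytes_per_token=4096 * 2,
    recompute_s_per_token=1e-7,
    bandwidth_bytes_per_s=25e9,
)
split = best_split(**params)
baseline = step_latency(0, **params)     # transfer the whole KV cache
optimized = step_latency(split, **params)
print(f"best split: {split} of {params['seq_len']} tokens")
print(f"modeled latency: {optimized * 1e3:.3f} ms vs {baseline * 1e3:.3f} ms without recomputation")
```

In this model the best split is the point where GPU recomputation and the remaining transfer finish at about the same time, which is the overlap that the hardware profile is meant to expose. A real scheduler would also account for weight transfers, attention computation, and batching.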

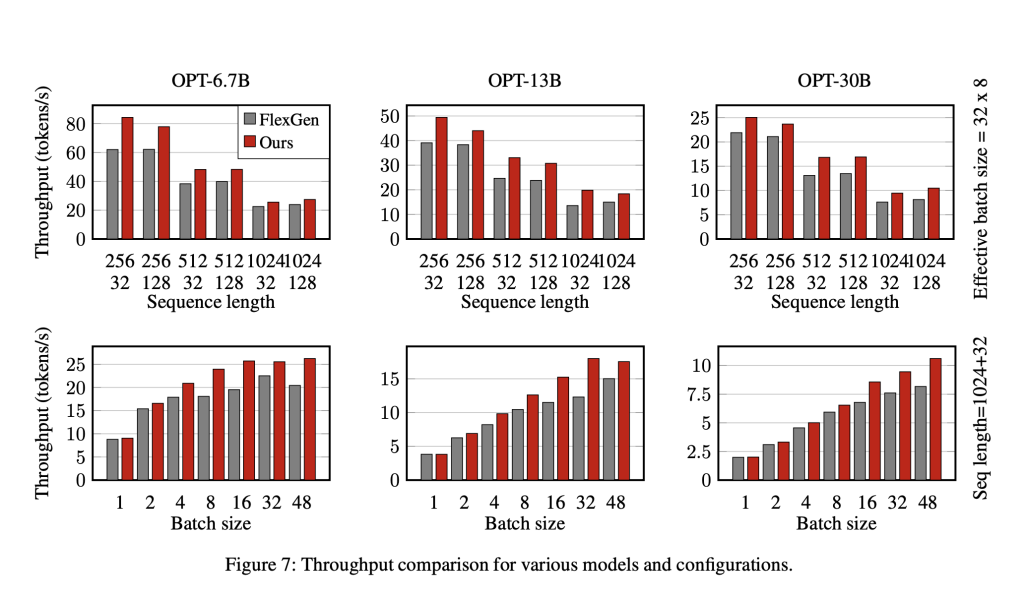

The authors tested the proposed framework for efficient LLM inference on an NVIDIA A100 GPU connected to a CPU via a PCIe 4.0 x16 interface. Two kinds of workload were used to evaluate its performance:

- Latency-oriented workload: The proposed method outperformed the baselines, reducing latency by 35.8%.

- Throughput-oriented workload: The method achieved an improvement of up to 29% relative to the baseline.

Conclusion:

The CPU-GPU I/O-aware LLM inference method reduces latency and increases throughput in LLM inference. It uses partial KV cache recomputation and overlaps it with data transfer to minimize GPU idle time and improve efficiency.

All credit for this research goes to the researchers of this project.


Adeeba Alam Ansari is currently pursuing a dual degree at the Indian Institute of Technology (IIT) Kharagpur, earning a bachelor's degree in Industrial Engineering and a master's degree in Financial Engineering. With a keen interest in machine learning and artificial intelligence, she is an avid reader and a curious person. Adeeba firmly believes in the power of technology to empower society and promote well-being through innovative solutions driven by empathy and a deep understanding of real-world challenges.
