Large language models (LLMs) have revolutionized artificial intelligence applications, enabling advances in natural language processing tasks such as conversational AI, content generation, and automated code completion. Often with billions of parameters, these models rely on enormous memory resources to store intermediate computation states and large key-value caches during inference. The computational intensity and increasing size of these models demand innovative solutions to manage memory without sacrificing performance.

A critical challenge with LLMs is the limited memory capacity of GPUs. When GPU memory becomes insufficient to store necessary data, systems offload portions of the workload to CPU memory, a process known as swapping. While this expands memory capacity, it introduces delays due to data transfer between the CPU and GPU, which significantly impacts the performance and latency of LLM inference. The trade-off between increasing memory capacity and maintaining computing efficiency remains a key bottleneck to advancing LLM deployment at scale.
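To make the cost of swapping concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes a swap that blocks the GPU until the transfer finishes, so the transfer time is simply added to the compute time of a step. The function names and every number in it (2 GB swapped per step, a 25 GB/s link, 50 ms of compute) are hypothetical values chosen for illustration, not measurements from the paper.

```python
GB = 1e9  # bytes, decimal gigabytes


def transfer_seconds(num_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Time to move num_bytes over a link with the given bandwidth."""
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return num_bytes / bandwidth_bytes_per_s


def step_latency_with_blocking_swap(
    compute_s: float, swapped_bytes: float, bandwidth_bytes_per_s: float
) -> float:
    """Latency of one inference step when the GPU waits for the swap to finish."""
    return compute_s + transfer_seconds(swapped_bytes, bandwidth_bytes_per_s)


# Hypothetical workload: 50 ms of GPU compute, 2 GB swapped in, 25 GB/s link.
latency = step_latency_with_blocking_swap(0.050, 2 * GB, 25 * GB)
print(f"step latency: {latency * 1000:.0f} ms")
```

Under these assumed numbers the transfer (80 ms) takes longer than the computation itself (50 ms), which is why a blocking swap can dominate inference latency.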

Current solutions such as vLLM and FlexGen attempt to address this problem using various swapping techniques. vLLM employs a paged memory structure to manage the key-value cache, which improves memory efficiency to some extent. FlexGen, on the other hand, uses offline profiling to optimize memory allocation across GPU, CPU, and disk resources. However, these approaches often suffer from unpredictable latency, stalled computations, and an inability to adapt dynamically to changes in workload, leaving room for further innovation in memory management.

UC Berkeley researchers introduced Pie, a novel inference framework designed to overcome the challenges of memory limitations in LLMs. Pie employs two main techniques: performance-transparent swapping and adaptive expansion. Leveraging predictable memory access patterns and advanced hardware features like the high-bandwidth NVLink of the NVIDIA GH200 Grace Hopper Superchip, Pie dynamically extends memory capacity without adding computational delays. The system masks data transfer latencies by running transfers concurrently with GPU computation, so swapping does not slow down inference.

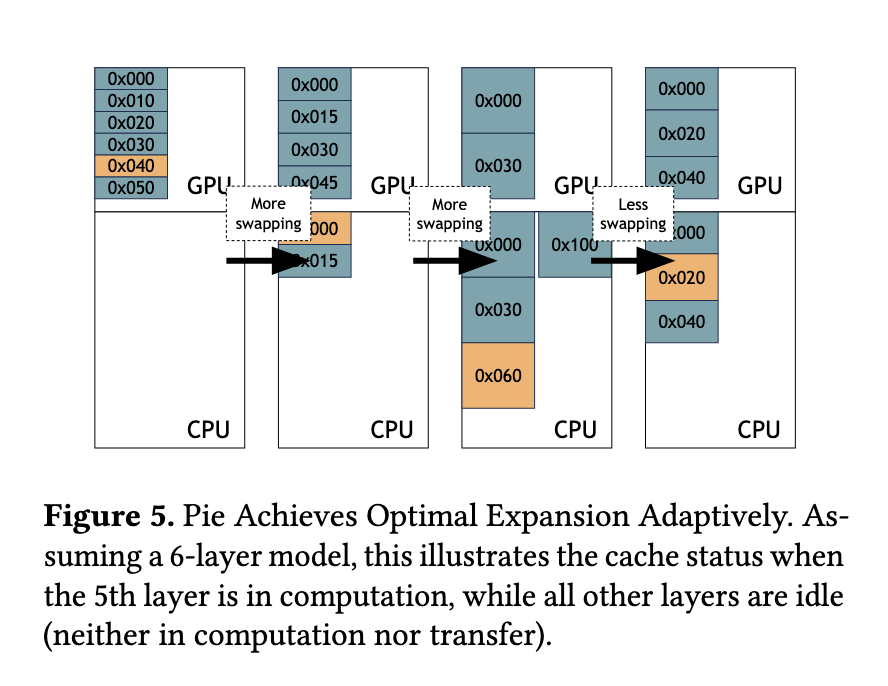

Pie's methodology revolves around two fundamental components. Performance-transparent swapping ensures that memory transfers do not delay GPU computation. This is achieved by prefetching data into GPU memory before it is needed, using the high-bandwidth link between modern GPUs and CPUs. Meanwhile, adaptive expansion adjusts the amount of CPU memory used for swapping based on real-time system conditions. By dynamically allocating memory as needed, Pie avoids both underutilizing CPU memory and swapping so much that performance degrades. This design allows Pie to seamlessly integrate CPU and GPU memory, effectively treating the combined resources as a single extended memory pool for LLM inference.
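The effect of prefetching can be illustrated with a small timing model. The Python sketch below is not Pie's implementation; it is a simplified simulation that assumes a layer-by-layer workload, one transfer link, one GPU, and a hypothetical `buffers` parameter for how many prefetched layers fit in GPU memory at once. It compares a blocking swap, where each layer waits for its own transfer, with an overlapped schedule, where the transfer for the next layer runs while the current layer computes.

```python
from typing import Sequence


def blocking_time(transfer: Sequence[float], compute: Sequence[float]) -> float:
    """Total time when every layer waits for its own transfer before computing."""
    return sum(t + c for t, c in zip(transfer, compute))


def overlapped_time(
    transfer: Sequence[float], compute: Sequence[float], buffers: int = 2
) -> float:
    """Total time when transfers are prefetched while earlier layers compute.

    Assumes one transfer link, one GPU, and `buffers` GPU-side slots: the
    transfer for layer i can start only after the previous transfer is done
    and the slot used by layer i - buffers has been released.
    """
    n = len(transfer)
    transfer_done = [0.0] * n
    compute_done = [0.0] * n
    for i in range(n):
        link_free = transfer_done[i - 1] if i > 0 else 0.0
        slot_free = compute_done[i - buffers] if i >= buffers else 0.0
        transfer_done[i] = max(link_free, slot_free) + transfer[i]
        gpu_free = compute_done[i - 1] if i > 0 else 0.0
        # A layer starts once the GPU is idle and its data has arrived.
        compute_done[i] = max(gpu_free, transfer_done[i]) + compute[i]
    return compute_done[-1] if n else 0.0


# Hypothetical per-layer costs in milliseconds: 2 ms transfer, 3 ms compute.
transfer_ms = [2.0] * 4
compute_ms = [3.0] * 4
print(blocking_time(transfer_ms, compute_ms))    # 20.0
print(overlapped_time(transfer_ms, compute_ms))  # 14.0
```

In this toy setting only the first transfer is exposed; the rest are hidden behind computation. The model also shows the limit of the technique: if each transfer takes longer than the computation it overlaps with, the schedule becomes transfer-bound and prefetching alone cannot hide the cost.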

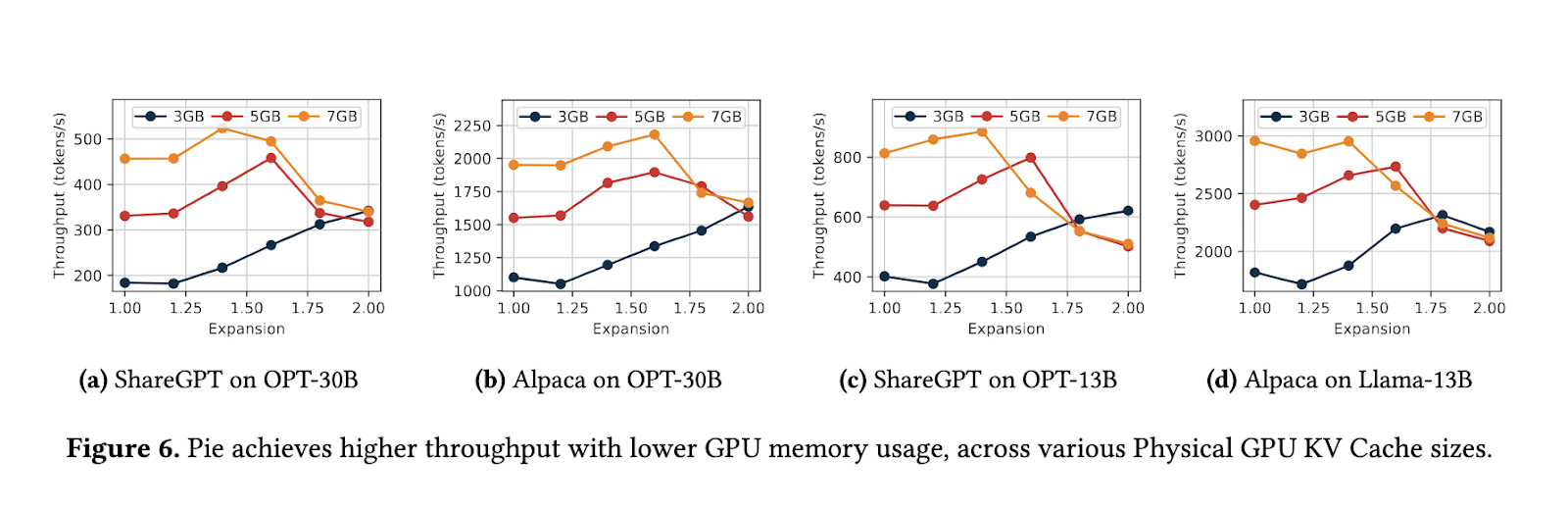

Experimental evaluations of Pie demonstrated notable improvements in performance metrics. Compared to vLLM, Pie achieved up to 1.9x higher throughput and 2x lower latency across multiple benchmarks. Furthermore, Pie reduced GPU memory usage by up to 1.67x while maintaining comparable performance. Against FlexGen, Pie showed an even greater advantage, achieving up to 9.4x higher throughput and significantly reduced latency, particularly in scenarios involving larger prompts and more complex inference workloads. The experiments used models including OPT-13B and OPT-30B and ran on NVIDIA Grace Hopper instances with up to 96 GB of HBM3 memory. The system efficiently handled real-world workloads from datasets such as ShareGPT and Alpaca, demonstrating its practical feasibility.

Pie's ability to dynamically adapt to different workloads and system environments sets it apart from existing methods. The adaptive expansion mechanism quickly identifies a suitable memory allocation configuration at runtime, keeping latency low and throughput high. Even under limited memory conditions, Pie's performance-transparent swapping enables efficient resource utilization, avoiding bottlenecks and maintaining high system responsiveness. This adaptability was particularly evident in high-load scenarios, where Pie scaled to meet demand without compromising performance.
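One way to picture a runtime adjustment of this kind is a simple feedback loop. The sketch below is a hypothetical controller, not the algorithm from the Pie paper: the class `ExpansionController`, its fields, the step size, and the 0.9 headroom factor are all assumptions made for illustration. It grows the amount of CPU-resident memory while transfers stay comfortably hidden behind computation and shrinks it as soon as transfers start to exceed compute time.

```python
from dataclasses import dataclass


@dataclass
class ExpansionController:
    """Hypothetical feedback controller for CPU-side swap capacity."""

    cpu_blocks: int = 0          # blocks currently kept in CPU memory
    max_cpu_blocks: int = 1024   # assumed upper bound on CPU-side blocks
    step: int = 16               # blocks added or removed per adjustment
    headroom: float = 0.9        # grow only while transfer < headroom * compute

    def update(self, transfer_s: float, compute_s: float) -> int:
        """Adjust capacity from the latest measured transfer and compute times."""
        if transfer_s > compute_s:
            # Transfers are no longer hidden by computation: back off.
            self.cpu_blocks = max(0, self.cpu_blocks - self.step)
        elif transfer_s < self.headroom * compute_s:
            # Plenty of slack: move more data to CPU memory.
            self.cpu_blocks = min(self.max_cpu_blocks, self.cpu_blocks + self.step)
        return self.cpu_blocks


# Toy environment: each CPU-side block adds 0.1 ms of transfer per step,
# and every step performs 10 ms of GPU computation (both values assumed).
controller = ExpansionController()
for _ in range(20):
    transfer = controller.cpu_blocks * 0.0001
    controller.update(transfer_s=transfer, compute_s=0.010)
print(controller.cpu_blocks)  # settles at 96 in this toy setup
```

The band between `headroom * compute_s` and `compute_s` acts as a dead zone that keeps the controller from oscillating once transfers are nearly as long as computation.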

Pie represents a significant advancement in AI infrastructure by addressing the long-standing challenge of memory limitations in LLM inference. Its ability to seamlessly expand GPU memory with minimal latency paves the way for running larger, more complex language models on existing hardware. This innovation improves the scalability of LLM applications and reduces the cost barriers associated with upgrading hardware to meet the demands of modern AI workloads. As LLMs grow in scale and application, frameworks like Pie will enable efficient and widespread use.

Check out the Paper. All credit for this research goes to the researchers of this project.


Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
