In the age of generative AI, data is the new oil. So why shouldn't you be able to sell your own?

From Big Tech firms to startups, AI makers are licensing e-books, images, videos, audio and more from data brokers, all in pursuit of training more capable (and more legally defensible) AI-powered products. Shutterstock has deals with Meta, Google, Amazon and Apple to supply millions of images for model training, while OpenAI has signed agreements with several news organizations to train its models on news archives.

In many cases, the individual creators and owners of that data haven't seen a dime of the money changing hands. A startup called Vana wants to change that.

Anna Kazlauskas and Art Abal, who met in a class at the MIT Media Lab focused on creating technology for emerging markets, co-founded Vana in 2021. Before Vana, Kazlauskas studied computer science and economics at MIT, eventually leaving to launch a fintech automation startup, Iambiq, out of Y Combinator. A corporate lawyer by training and education, Abal was an associate at The Cadmus Group, a Boston-based consulting firm, before heading up impact sourcing at data annotation company Appen.

With Vana, Kazlauskas and Abal set out to build a platform that lets users "pool" their data (including chats, voice recordings and photos) into datasets that can then be used to train generative AI models. They also want to create more personalized experiences (for example, daily motivational voice messages based on your wellness goals, or an art-generating app that understands your style preferences) by fine-tuning public models on that data.

"Vana's infrastructure, in effect, creates a trove of user-owned data," Kazlauskas told TechCrunch. "It does this by allowing users to aggregate their personal data in a non-custodial manner… Vana allows users to own AI models and use their data across AI applications."

This is how Vana presents its platform and API to developers:

> Vana's API connects a user's cross-platform personal data… to allow you to personalize your app. Your app gains instant access to a user's custom AI model or underlying data, simplifying onboarding and eliminating compute cost concerns… We think users should be able to bring their personal data from walled gardens, like Instagram, Facebook and Google, to your app, so you can create amazing personalized experiences from the very first time a user interacts with your consumer AI app.

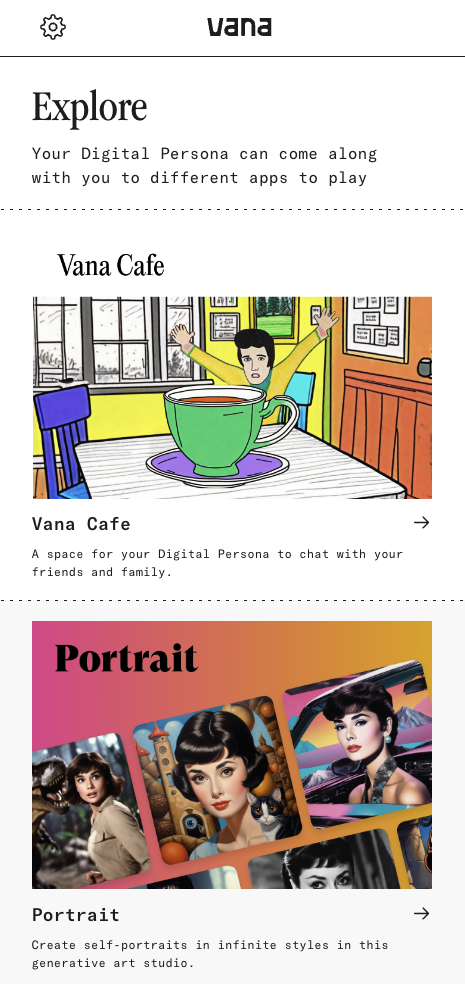

Creating an account with Vana is quite simple. After confirming your email, you can attach data to a digital avatar (such as selfies, a description of yourself, and voice recordings) and explore apps built with Vana's platform and datasets. The selection of apps ranges from ChatGPT-style chatbots and interactive storybooks to a Hinge profile generator.

Image Credits: Vana

Now, you might be wondering why, in this era of heightened data privacy awareness and ransomware attacks, anyone would ever volunteer their personal information to an anonymous startup, much less a venture-backed one. (Vana has raised $20 million to date from Paradigm, Polychain Capital and other backers.) Can any for-profit company really be trusted not to abuse or mishandle monetizable data it gets its hands on?


In response to that question, Kazlauskas stressed that Vana's goal is for users to "regain control over their data," noting that Vana users have the option to self-host their data rather than store it on Vana's servers, and to control how their data is shared with apps and developers. She also argued that because Vana makes money by charging users a monthly subscription (starting at $3.99) and levying a "data transaction" fee on developers (for example, for transferring datasets for AI model training), the company has no incentive to exploit users and the troves of personal data they bring with them.

“We want to create user-governed and owned models where everyone contributes their data,” Kazlauskas said, “and allow users to take their data and models with them to any application.”

Now, while Vana isn't selling users' data to companies for generative AI model training (or so it says), it wants to let users do this themselves if they choose, starting with their Reddit posts.

This month, Vana launched what it calls the Reddit Data DAO (decentralized autonomous organization), a program that pools multiple users' Reddit data (including their karma and post history) and lets them decide together how that combined data is used. After joining with their Reddit account, submitting a request to Reddit for their data and uploading that data to the DAO, users gain the right to vote alongside other DAO members on decisions such as licensing the combined data to generative AI companies for a shared benefit.

> We've crunched the numbers and r/datadao is now the largest data DAO in history: Phase 1 welcomed 141,000 reddit users with 21,000 completed data uploads.
>
> r/datadao (@rdatadao), [April 11, 2024](https://twitter.com/rdatadao/status/1778263621631611231)

The DAO is something of a response to Reddit's recent moves to commercialize data on its platform.

Reddit previously didn't restrict access to posts and communities for generative AI training purposes. But it reversed course late last year, ahead of its IPO. Since the policy change, Reddit has collected more than $203 million in licensing fees from companies including Google.

"The broad idea [with the DAO is] to free user data from the major platforms that seek to hoard and monetize it," Kazlauskas said. "This is a first, and it's part of our push to help people pool their data into user-owned datasets for training AI models."

Unsurprisingly, Reddit, which does not work with Vana in any official capacity, is not happy with the DAO.

Reddit banned Vana's subreddit dedicated to discussing the DAO. And a Reddit spokesperson accused Vana of "exploiting" its data export system, which is designed to comply with data privacy regulations like the GDPR and the California Consumer Privacy Act.

"Our data arrangements allow us to put guardrails on such entities, even on public information," the spokesperson told TechCrunch. "Reddit does not share non-public personal data with commercial enterprises, and when Redditors request an export of their data from us, they receive non-public personal data back from us in accordance with applicable laws. Direct partnerships between Reddit and vetted organizations, with clear terms and accountability, matter, and these partnerships and agreements prevent misuse and abuse of people's data."

But does Reddit have any real reason to worry?

Kazlauskas predicts that the DAO will grow large enough to affect how much Reddit can charge customers for its data. That's a long way off, assuming it ever happens: the DAO has just over 141,000 members, a small fraction of Reddit's 73 million user base. And some of those members could be bots or duplicate accounts.

Then there is the question of how to fairly distribute the payments the DAO might receive from data buyers.

Currently, the DAO distributes "tokens" (cryptocurrency) to users in proportion to their Reddit karma. But karma might not be the best measure of quality contributions to the dataset, particularly in smaller Reddit communities with fewer opportunities to earn it.

Kazlauskas floats the idea that DAO members could choose to share their demographic and cross-platform data, potentially making the DAO more valuable and incentivizing sign-ups. But that would also require users to trust Vana even more to treat their sensitive data responsibly.

Personally, I don't see Vana's DAO reaching critical mass; there are simply too many obstacles in the way. But I do think it won't be the last grassroots attempt to assert control over the data increasingly being used to train generative AI models.

Startups like Spawning are working on ways to let creators impose rules guiding how their data is used for training, while vendors like Getty Images, Shutterstock and Adobe continue to experiment with compensation schemes. But no one has cracked the code yet. Can it even be cracked? Given the cutthroat nature of the generative AI industry, it's certainly a tall order. But maybe someone will find a way, or policymakers will force one.
