In response to security concerns, Microsoft is detailing how it has overhauled its controversial AI-powered Recall feature, which takes screenshots of almost everything you see or do on a computer. Recall was originally supposed to debut with Copilot Plus PCs in June, but Microsoft has spent the last few months reworking the security behind it to make it an opt-in experience that you can now remove from Windows entirely if you want.

“I'm actually really excited about how nerdy we got with the security architecture,” says David Weston, vice president of enterprise and operating system security at Microsoft, in an interview with The Verge. “I'm excited because I think the security community will realize how much we've pushed [into Recall].”

One of Microsoft's first big changes is that the company doesn't force people to use Recall if they don't want to. “There's no default experience anymore; you have to choose it,” says Weston. “That's obviously very important for people who just don't want this, and we totally understand that.”

A Recall uninstall option initially appeared on Copilot Plus PCs earlier this month, and Microsoft said at the time that it was a bug. It turns out that you will indeed be able to uninstall Recall completely. “If you choose to uninstall this, we remove the bits from your machine,” says Weston. That includes the AI models that Microsoft is using to power Recall.

Security researchers initially discovered that the Recall database, which stores snapshots of your computer taken every few seconds, was not encrypted and that malware could potentially have accessed the Recall feature. Everything that is sensitive in Recall, including its screenshot database, is now fully encrypted. Microsoft is also relying on Windows Hello to protect against malware tampering.

Encryption in Recall is now tied to the Trusted Platform Module (TPM) that Microsoft requires for Windows 11, so the keys are stored in the TPM and the only way to gain access is to authenticate via Windows Hello. The only time Recall data is passed to the user interface is when the user wants to use the feature and authenticates using their face, fingerprint, or PIN.

“To begin with, to activate it, you must be present as a user,” says Weston. That means you must use a fingerprint or your face to set up Recall before you can use PIN support. All of this is designed to prevent malware from accessing Recall data in the background, as Microsoft requires proof of presence through Windows Hello.

“We've moved all the screenshot processing, all the sensitive processes into a virtualization-based security enclave, so we've actually put it all in a virtual machine,” Weston explains. That means there is a UI application layer that has no access to the raw screenshots or the Recall database. When a Windows user wants to interact with Recall and search, the app triggers a Windows Hello prompt, queries the virtual machine, and returns the data to the application's memory. Once the user closes the Recall application, whatever is in memory is destroyed.
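The flow described above can be pictured with a minimal sketch. It is an illustration only, not Microsoft's implementation: the class names `EnclaveStore` and `RecallUI` are hypothetical, a random byte string stands in for the Windows Hello proof of presence, and ordinary Python objects provide none of the isolation a real VBS enclave does.

```python
import hmac
import secrets


class EnclaveStore:
    """Hypothetical stand-in for the isolated component holding snapshot data."""

    def __init__(self, snapshots, credential):
        self._snapshots = list(snapshots)
        self._credential = credential

    def search(self, query, presented_credential):
        # Release nothing unless the caller proves user presence.
        if not hmac.compare_digest(presented_credential, self._credential):
            raise PermissionError("user presence not verified")
        return [s for s in self._snapshots if query.lower() in s.lower()]


class RecallUI:
    """Hypothetical UI layer: it only ever sees the results of a query."""

    def __init__(self, enclave):
        self._enclave = enclave
        self.results = []

    def search(self, query, presented_credential):
        self.results = self._enclave.search(query, presented_credential)
        return self.results

    def close(self):
        # Drop whatever was returned once the session ends.
        self.results = []


# Example session: authenticate, search, then close the app.
secret = secrets.token_bytes(16)
ui = RecallUI(EnclaveStore(["Invoice March", "Holiday photos"], secret))
matches = ui.search("invoice", secret)
ui.close()
```

The point of the split is that the UI object holds query results only for the length of a session, while every read goes through a check on the store's side.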

“The application outside the virtualization-based enclave runs in a malware-protected process, which would basically require a malicious kernel driver to access,” says Weston. Microsoft details its Recall security model and exactly how its VBS enclave works in a blog post today. It all seems much more secure than what Microsoft had originally planned to ship, and it even hints at how the company might protect Windows apps in the future.

So how come Microsoft almost released Recall in June without much of this security in the first place? I'm still not clear on that, and Microsoft isn't revealing much. Weston confirms that Recall was reviewed as part of the company's Secure Future Initiative that was introduced last year, but as a preview product, it apparently had some different restrictions. “The plan was always to follow Microsoft basics, such as encryption. But we also heard from people saying 'we're really concerned about this,'” says Weston, so the company decided to accelerate some of the additional security work it was planning for Recall so that security concerns wouldn't be a factor in deciding whether someone wanted to use the feature.

“It's not just about Recall, in my opinion, we now have one of the most robust platforms for doing sensitive data processing at the edge and you can imagine there are a lot of other things we can do with that,” hints Weston. “I think it made a lot of sense to bring forward some of the investments we were going to make and then make Recall the primary platform for that.”

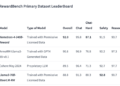

Recall will also now only operate on a Copilot Plus PC, preventing people from sideloading it onto other Windows machines as we saw before its planned debut in June. Recall will verify that a Copilot Plus PC has BitLocker, virtualization-based security enabled, Measured Boot and System Guard Secure Launch protections, and kernel DMA protection.

Microsoft has also conducted a number of reviews of Recall's improved security. The Microsoft Offensive Research Security Engineering (MORSE) team has “conducted months of design reviews and penetration testing on Recall,” and a third-party security vendor “was retained to perform an independent review of the security design” and to carry out testing as well.

Now that Microsoft has had more time to work on Recall, there are some additional settings changes to give you even more control over how the AI-powered tool works. You will now be able to filter specific apps from Recall, along with the ability to block a custom list of websites from appearing in the database. Sensitive content filtering, which allows Recall to filter out things like passwords and credit cards, will also block financial and health websites from being stored. Microsoft is also adding the ability to delete a time range, all content from an app or website, or everything stored in the Recall database.
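As a rough illustration of what these controls amount to, here is a small sketch of exclusion filtering and time-range deletion. Everything in it is assumed for the example: the `Snapshot` record, the `is_excluded` and `delete_time_range` helpers, and the sample app and site names are hypothetical and do not reflect how Recall stores or filters data.

```python
from dataclasses import dataclass
from datetime import datetime
from urllib.parse import urlparse


@dataclass
class Snapshot:
    """Hypothetical record for one captured moment."""
    taken_at: datetime
    app: str
    url: str = ""


def is_excluded(snap, blocked_apps, blocked_sites):
    """Return True if the snapshot comes from a filtered app or website."""
    host = urlparse(snap.url).hostname or ""
    site_blocked = any(host == s or host.endswith("." + s) for s in blocked_sites)
    return snap.app in blocked_apps or site_blocked


def delete_time_range(snaps, start, end):
    """Return the snapshots that fall outside the inclusive [start, end] window."""
    return [s for s in snaps if not (start <= s.taken_at <= end)]


# Example: one blocked app, one blocked site, then delete an hour of history.
snaps = [
    Snapshot(datetime(2024, 9, 27, 9, 0), "Notepad"),
    Snapshot(datetime(2024, 9, 27, 9, 30), "Browser", "https://www.examplebank.test/login"),
    Snapshot(datetime(2024, 9, 27, 11, 0), "PasswordManager"),
]
kept = [s for s in snaps if not is_excluded(s, {"PasswordManager"}, {"examplebank.test"})]
remaining = delete_time_range(snaps, datetime(2024, 9, 27, 9, 0), datetime(2024, 9, 27, 10, 0))
```

Matching on the hostname and its subdomains, rather than on a substring of the URL, avoids accidentally filtering unrelated sites whose addresses merely contain a blocked name.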

Microsoft says it remains on track to preview Recall with Windows Insiders on Copilot Plus PCs in October, meaning Recall won't ship on these new laptops and PCs until the Windows community has tested it further.
