Keeping up with an industry that evolves as quickly as AI is a tall order. So until an AI can do it for you, here's a handy roundup of recent stories in the world of machine learning, along with notable research and experiments that we didn't cover on their own.

This week in AI, Microsoft introduced a new standard PC keyboard layout with a “Copilot” key. You heard correctly: in the future, Windows machines will have a dedicated key to launch Microsoft's AI-powered Copilot assistant, replacing the right Control key.

The move is meant to signal the seriousness of Microsoft's investment in the race for AI dominance among consumers (and businesses, for that matter). It's the first time Microsoft has changed the Windows keyboard layout in about 30 years; laptops and keyboards with the Copilot key are scheduled to ship in late February.

But is it all bluster? Do Windows users really want an AI shortcut, or Microsoft's brand of AI at all?

Microsoft has certainly made a show of injecting almost all of its products, new and old, with “Copilot” functionality. With compelling speeches, clever demos, and now an AI key, the company is making its AI technology stand out and banking on this to drive demand.

Demand is not a sure thing. But, to be fair, some vendors have managed to turn viral AI hits into successes. Look at OpenAI, the creator of ChatGPT, which reportedly surpassed $1.6 billion in annualized revenue by the end of 2023. Generative art platform Midjourney is apparently also profitable, and has yet to take a cent of outside capital.

Emphasis on “some,” though. Most vendors, burdened by the costs of training and running cutting-edge AI models, have had to seek ever-larger tranches of capital to stay afloat. For example, Anthropic is said to be raising $750 million in a round that would bring its total raised to more than $8 billion.

Microsoft, along with its chip partners AMD and Intel, expects AI processing to increasingly shift from expensive data centers to on-premise silicon, commoditizing AI in the process, and it may well be right. Intel's new line of consumer chips includes cores custom-designed to run AI. Additionally, new data center chips like Microsoft's could make model training less expensive than it currently is.

But there is no guarantee. The real test will be whether Windows users and enterprise customers, bombarded with what amounts to Copilot advertising, show an appetite for the technology and pay for it. If they don't, it may not be long before Microsoft has to redesign the Windows keyboard once again.

Here are some other notable AI stories from the past few days:

- Copilot comes to mobile: In more Copilot news, Microsoft quietly brought Copilot clients to Android and iOS, along with iPadOS.

- GPT Store: OpenAI announced plans to launch a store for GPTs, custom apps based on its text-generating AI models (e.g., GPT-4), within the next week. The GPT Store was announced last year during OpenAI's first annual developer conference, DevDay, but was delayed in December, almost certainly due to the leadership shakeup that occurred in November, just after the initial announcement.

- OpenAI reduces regulatory risk: In other OpenAI news, the startup is looking to reduce its regulatory risk in the EU by channeling much of its overseas business through an Irish entity. Natasha writes that the move will reduce the ability of some of the bloc's privacy watchdogs to act unilaterally on concerns.

- Training robots: Google's DeepMind Robotics team is exploring ways to give robots a better understanding of exactly what we humans want from them, Brian writes. The team's new system can manage a fleet of robots working together and suggest tasks that can be performed using the robots' hardware.

- Intel's new company: Intel is spinning out a new platform company, Articul8 AI, backed by DigitalBridge, an asset manager and investor based in Boca Raton, Florida. As an Intel spokesperson explains, Articul8's platform “delivers artificial intelligence capabilities that keep customer data, training and inference within the enterprise security perimeter,” an attractive prospect for customers in highly regulated industries such as healthcare and financial services.

- Dark fishing industry exposed: Satellite imagery and machine learning offer a new and much more detailed view of the maritime industry, specifically the number and activities of fishing and transport vessels at sea. It turns out that there are far more of them than publicly available data would suggest, a fact revealed by new research published in Nature by a Global Fishing Watch team and several collaborating universities.

- AI-powered search: Perplexity AI, a platform that applies AI to web search, raised $73.6 million in a funding round that valued the company at $520 million. Unlike traditional search engines, Perplexity offers a chatbot-like interface that allows users to ask questions in natural language (e.g., “Do we burn calories while we sleep?”, “What is the least visited country?”, etc.).

- Clinical notes, written automatically: In more funding news, Paris-based startup Nabla raised a cool $24 million. The company, which has a partnership with the Permanente Medical Group, a division of US healthcare giant Kaiser Permanente, is working on an “AI copilot” for doctors and other clinical staff that automatically takes notes and writes medical reports.

More machine learning

You may remember several examples of interesting work over the past year that involved making minor changes to images that cause machine learning models to mistake, for example, an image of a dog for an image of a car. They do this by adding “perturbations,” minor changes to the image's pixels, in a pattern that only the model can perceive. Or at least researchers thought only the model could perceive it.

An experiment by Google DeepMind researchers showed that when an image of flowers was altered to make it look more feline to the AI, people were more likely to describe that image as more feline even though it definitely did not look any more like a cat. The same goes for other common objects like trucks and chairs.

Image credits: Google DeepMind

Why? How? The researchers don't really know, and all participants felt like they were choosing at random (in fact, the influence, while reliable, is barely above chance). It seems we are more perceptive than we think, but this also has implications for security and other measures, as it suggests that subliminal signals could spread through images without anyone noticing.

Another interesting experiment involving human perception emerged from MIT this week, using machine learning to help elucidate a particular system of language understanding. Basically, some simple sentences, such as “I walked to the beach,” require almost no brain power to decode, while complex or confusing ones, such as “in whose aristocratic system a grim revolution is taking place,” produce greater and broader activation, as measured by functional magnetic resonance imaging (fMRI).

The team compared the activation readings of humans reading a variety of such sentences with how the same sentences activated the equivalent of cortical areas in a large language model. They then built a second model that learned how the two activation patterns corresponded to each other. This model was able to predict, for novel sentences, whether they would be taxing on human cognition or not. It may sound a little arcane, but it's definitely super interesting, believe me.

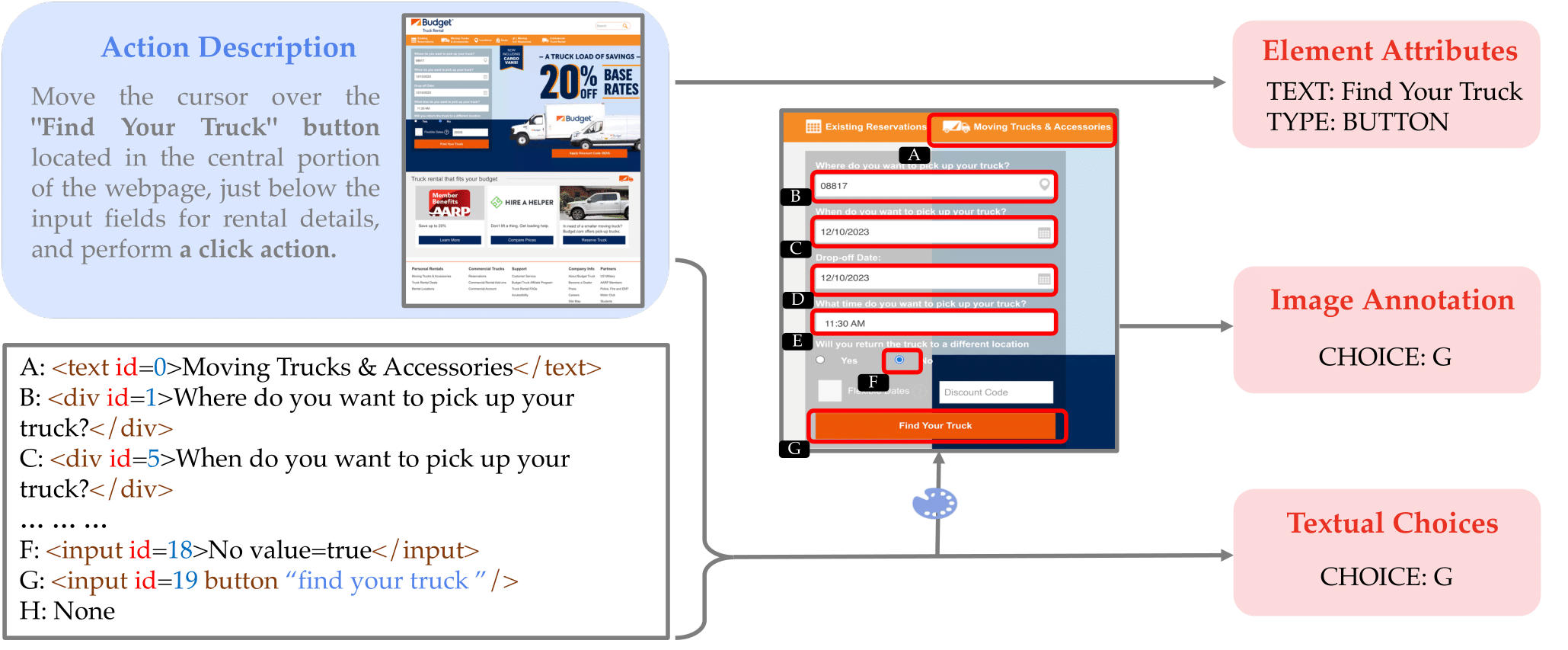

It is still an open question whether machine learning can mimic human cognition in more complex areas, such as interacting with computer interfaces. However, there is a lot of research out there and it's always worth taking a look. This week we have SeeAct, a system from Ohio State researchers that works by painstakingly grounding an LLM's interpretations of possible actions in real-world examples.

Image credits: Ohio State University

Basically, you can ask a system like GPT-4V to create a reservation on a site, and it will understand what its task is and that it needs to click the “make reservation” button, but it doesn't really know how to do that. By improving how it perceives interfaces with explicit labels and world knowledge, it can do much better, even if it still only succeeds a fraction of the time. These agent models have a long way to go, but expect plenty of big claims this year anyway! I heard some just today.

Next, check out this interesting solution to a problem you had no idea existed but that makes a lot of sense. Autonomous ships are a promising area of automation, but when the sea is angry it is difficult to ensure they stay on the right track. GPS and gyroscopes are not enough, and visibility can also be poor, but more importantly, the systems that govern them are not very sophisticated. So they can go completely off target or waste fuel on big detours if they don't know any better, which is a big problem if you're running on battery power. I hadn't even thought about that!

Researchers at Korea Maritime and Ocean University (another thing I learned about today) propose a more robust path-finding model based on simulating ship movements in a computational fluid dynamics model. They suggest that this better understanding of wave action and its effect on hulls and propulsion could seriously improve the efficiency and safety of autonomous shipping. It might even make sense to use it on human-guided vessels whose captains aren't quite sure what the best angle of attack is for a given squall or wave!

Finally, if you want a good summary of last year's big advances in computer science, which in 2023 overlapped greatly with ML research, check out Quanta's excellent review.