Implementing artificial intelligence in schools can be a difficult discussion to have. As AI grows and evolves, the way we use it changes with it. How can we keep up with such a malleable landscape while also addressing any issues that may arise with its use?

Kerry Gallagher, assistant principal of Teaching and Learning at St. John's Prep in Danvers, Massachusetts, explains a method she created to help recognize and implement the use of AI in her district, and to make an ongoing commitment to staying engaged and informed about its use.

Gallagher received a Tech & Learning Innovative Leader Award, recognizing her as the most innovative assistant principal, during the recent Northeast Regional Summit. We are privileged to learn more about her approach to understanding and implementing AI in your school, and doing so in an inclusive way.

<h2 id="staying-open-minded-about-ai">Keep an open mind about AI</h2>

Overcoming the initial media coverage of AI in schools was a major challenge, Gallagher says.

“I think most of the media attention (to AI) at the time was pretty negative and aimed at scaring people,” she says. “Then we had some colleagues who were scared. We also had some colleagues who were curious and some people who had a mix of all those emotions. We started by first listening to our colleagues and what their concerns were. We then began to take note, with a very trained eye, of what types of work students might be submitting and try to identify how we can discern whether a student has used AI in the course of creating their work.”

Understanding both educators' concerns and the ways students were using AI gave Gallagher a better sense of how to approach AI in schools.

“In retrospect, I'm proud of the way we didn't rush to adopt a ban, a policy or create guidelines before approaching the situation with curiosity,” she says. “We looked at our academic integrity policies and our data privacy policies, then we did some work on how those existing policies apply to AI.”

Regardless of their role, everyone in the school community knew what was happening with AI within the school.

“We shared what we learned with students, parents and teachers, all at the same time, on the same day,” Gallagher says. “Everyone in our school community, no matter what their role, was on the same page.”

<h2 id="addressing-ai-through-existing-policy">Address AI through existing policy</h2>

As it turned out, a new policy for AI wasn't necessary, because the existing policy already covered its use among students, Gallagher explains.

“We also talked about how our academic integrity policy requires students to cite sources if they use a source other than themselves,” she says. “That would include generative AI and requires them not to use computer-generated materials unless they have permission from their teacher. That particular wording was already in our integrity policy, so we didn't have to create anything new. We just help people understand how to apply generative AI.”

Throughout the AI integration cycle, Gallagher enforced the academic integrity policy whenever necessary. She also kept families informed about where the school was in the integration process.

“And that's how we closed out the school year, enforcing our policies as promised and allowing people who were really interested in exploring (AI) to explore on a small scale,” she says.

<h2>Building on the existing model</h2>

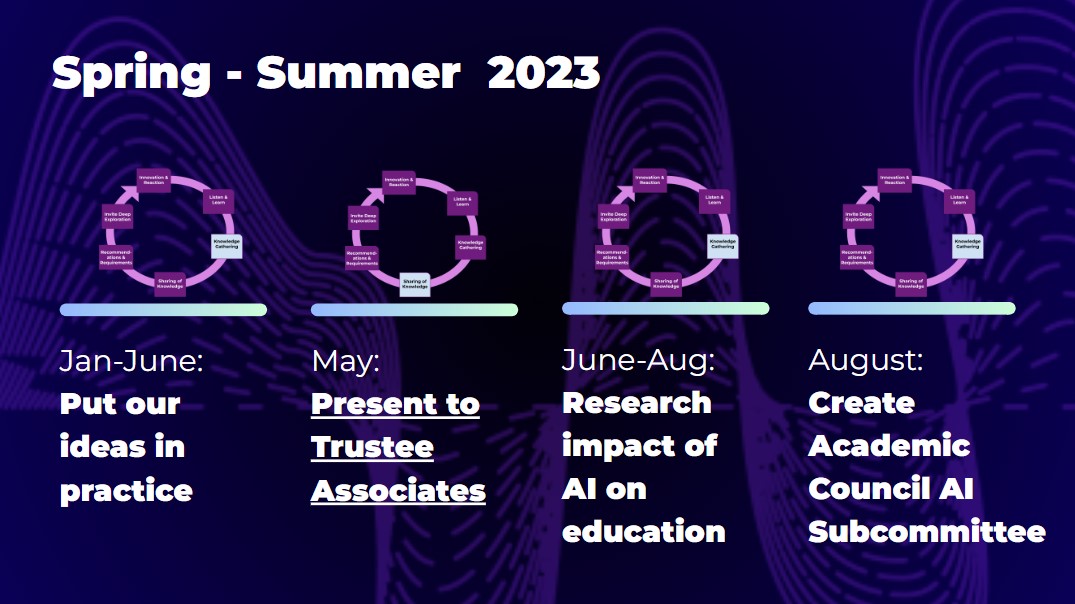

After creating an initial AI integration cycle last school year, Gallagher expanded it, focusing on specific AI platforms.

“This school year, we took the next step forward and decided to focus on finding generative AI tools that would be the tools for our teachers in our school that we could approve,” she says. “(The tools) would be something that would be helpful to our teachers and that we could do more dedicated professional learning so our teachers would feel more prepared. We select a tool, implement it, and train all teachers in it across the board.”

Training all teachers on the use of AI helped make it a more accepted concept, even if not everyone uses it in their classrooms.

“As a result (of the widespread training), in addition to ongoing listening sessions and professional learning, I am confident to say that we have normalized the use of generative AI by our teachers in the course of their work,” Gallagher says. “I am confident that our teachers are using it in a way that is aligned with our mission and our sense of ethics as a school. And the reason I have that confidence is that the platform we choose allows us to see what our teachers are doing. We have given teachers the opportunity to share with us what they are doing as part of our routine and structure.”

With a more stable structure in place, Gallagher says AI now provokes more positive feelings.

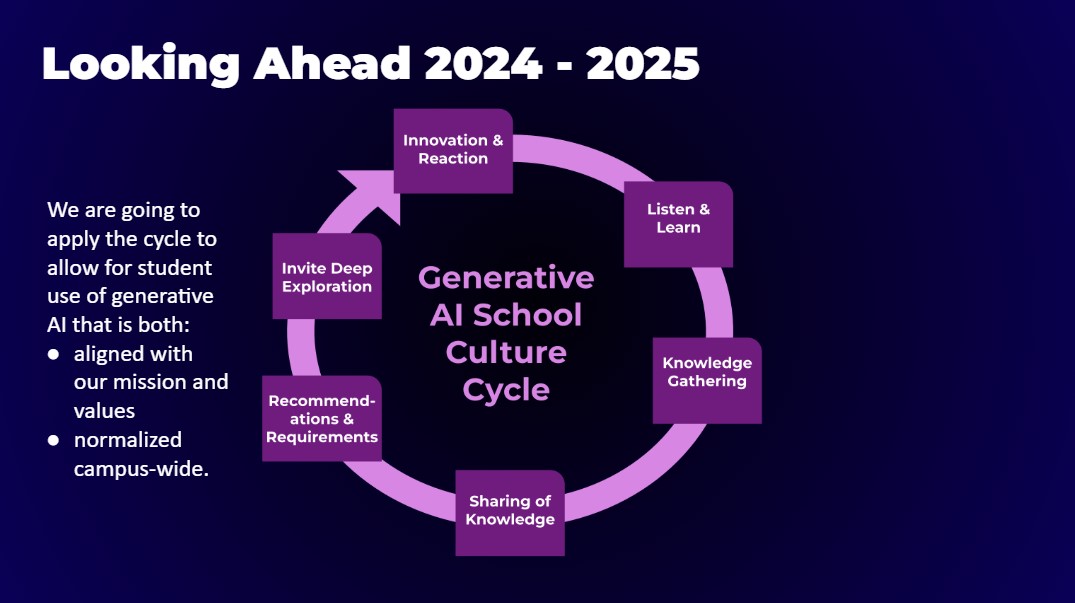

“I think the enthusiasm is growing. Curiosity is increasing,” she says. “Feelings of fear and anxiety have not disappeared, but they are decreasing. So the next step is to do the same cycle of work on what a student might use AI for. But we need to do the same cultural work that we did with our teachers. We need to start by listening and not assuming that we know what people's concerns are. We need to start by training everyone, communicating effectively and applying our values to what we are seeing.”

This approach to AI helps mitigate inherent biases and fears about its use while fostering a learning environment for teachers, students, and parents. By turning apprehension into curiosity without compromising school policy, Gallagher has safely put AI on the radar and initiated its use through professional development and open dialogue.