Large language models (LLMs) built on transformer architectures rely heavily on pre-training with large-scale data to predict the next token in a sequence. This complex, resource-intensive process requires enormous computational infrastructure and well-built data pipelines. The growing demand for efficient and accessible LLMs has led researchers to explore techniques that balance resource use and performance, with an emphasis on achieving competitive results without industrial-scale resources.

LLM development is full of challenges, especially in computation and data efficiency. Pre-training models with billions of parameters requires advanced techniques and substantial infrastructure. High-quality data and robust training methods are crucial, as models can suffer gradient instability and performance degradation during training. Open-source LLMs often struggle to match their proprietary counterparts because of limited access to computational power and high-quality datasets. The challenge, therefore, lies in creating efficient, high-performing models that allow smaller research groups to participate actively in advancing AI technology. Solving this problem requires innovation in data management, training stabilization, and architectural design.

Existing research in LLM training emphasizes structured data pipelines, using techniques such as data cleaning, dynamic scheduling, and curriculum learning to improve learning outcomes. However, stability remains a persistent problem. Large-scale training is susceptible to exploding gradients, loss spikes, and other technical difficulties, and so requires careful optimization. Training long-context models adds further complexity, because the computational cost of attention grows quadratically with sequence length. Existing approaches, such as advanced optimizers, initialization strategies, and synthetic data generation, help alleviate these problems but often fall short when scaled to full-size models. The need for scalable, stable, and efficient methods in LLM training is more urgent than ever.
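To make the quadratic growth concrete, here is a minimal arithmetic sketch. It counts the query-key pairs in one full self-attention score matrix and compares two context lengths. The 4,096-token baseline is an assumption chosen only for illustration; 28,672 is the context length the article reports for YuLan-Mini.

```python
def attention_score_entries(seq_len: int) -> int:
    """Query-key pairs in one full (unmasked) self-attention score matrix."""
    return seq_len * seq_len


short_ctx = 4_096   # hypothetical baseline context length (illustration only)
long_ctx = 28_672   # context length reported for YuLan-Mini

# The sequence is 7x longer, so the score matrix has 7 * 7 = 49x more entries.
ratio = attention_score_entries(long_ctx) / attention_score_entries(short_ctx)
print(f"{long_ctx // short_ctx}x longer sequence -> {ratio:.0f}x more attention pairs")
```

The count ignores heads, layers, and causal masking, which change the constant factor but not the quadratic shape.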

Researchers from the Gaoling School of Artificial Intelligence at Renmin University of China developed YuLan-Mini, a language model with 2.42 billion parameters that improves computational efficiency and performance through data-efficient methods. By relying on publicly available data and data-efficient training techniques, YuLan-Mini achieves performance comparable to that of larger industrial models.

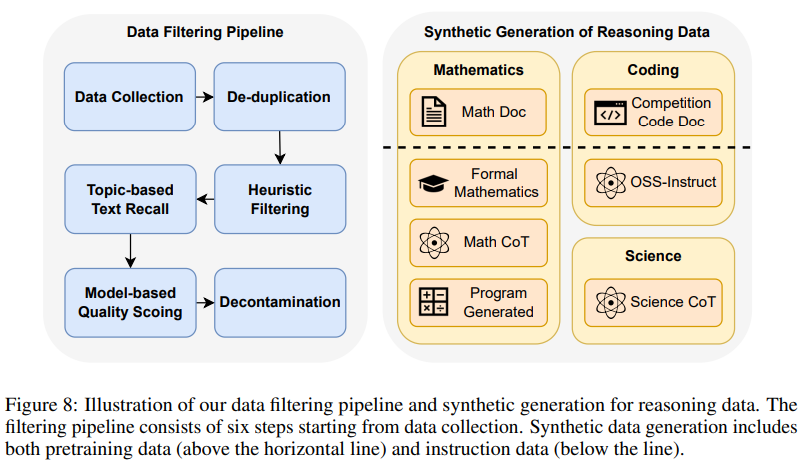

YuLan-Mini's architecture incorporates several elements designed to improve training efficiency. Its decoder-only transformer design employs embedding tying to reduce parameter count and improve training stability. The model uses Rotary Positional Embedding (RoPE) to handle long contexts effectively, extending its context length to 28,672 tokens, longer than that of typical models of its size. Other key features include SwiGLU activation functions for better data representation and a carefully designed annealing strategy that stabilizes training while maximizing learning efficiency. Synthetic data was also critical, complementing the 1.08 trillion tokens of training data drawn from open web pages, code repositories, and mathematical datasets. Together, these features allow YuLan-Mini to deliver solid performance on a limited computing budget.
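The following is a minimal pure-Python sketch of three components named above: weight sharing between the input embedding and the output projection (embedding tying), SwiGLU-style gating, and rotary positional embedding. It is not YuLan-Mini's implementation. The function names, the vocabulary and hidden sizes, and the RoPE base of 10000 are all assumptions chosen for illustration.

```python
import math


def tied_embedding_savings(vocab_size: int, hidden_size: int) -> int:
    """Parameters saved when the output projection reuses the input embedding matrix."""
    return vocab_size * hidden_size


def silu(x: float) -> float:
    """SiLU (Swish) activation: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def swiglu_gate(gate: list[float], value: list[float]) -> list[float]:
    """Elementwise SwiGLU gating: SiLU(gate) * value."""
    return [silu(g) * v for g, v in zip(gate, value)]


def rope(vec: list[float], position: int, base: float = 10000.0) -> list[float]:
    """Rotate each consecutive pair of `vec` by a position-dependent angle."""
    dim = len(vec)
    assert dim % 2 == 0, "RoPE needs an even dimension"
    out = []
    for i in range(0, dim, 2):
        theta = position * base ** (-i / dim)  # lower frequency for later pairs
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])
    return out


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


# Hypothetical sizes, not YuLan-Mini's real configuration.
print(tied_embedding_savings(vocab_size=100_000, hidden_size=2_048))

# RoPE attention scores depend only on the relative offset between positions.
q = [1.0, 0.0, 0.5, -0.5]
k = [0.3, 0.7, -0.2, 0.1]
score_a = dot(rope(q, 5), rope(k, 3))    # offset 2
score_b = dot(rope(q, 12), rope(k, 10))  # offset 2, shifted by 7 positions
print(abs(score_a - score_b) < 1e-9)
```

The last check illustrates why rotary embeddings suit context extension: the query-key score is a function of the distance between tokens, not of their absolute positions.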

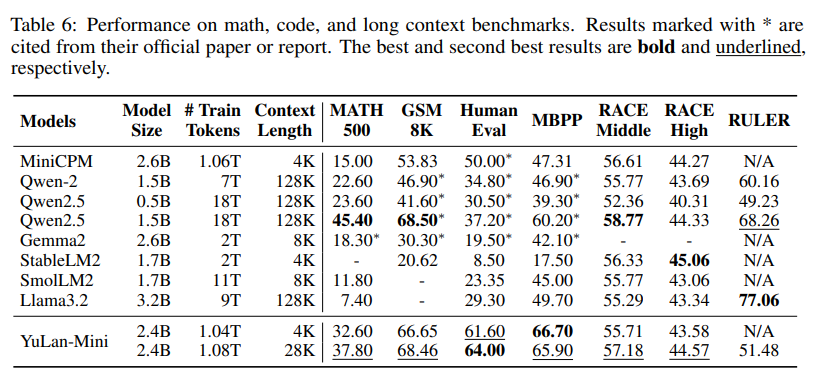

YuLan-Mini scored 64.00 on HumanEval in the zero-shot setting, 37.80 on MATH-500 in the four-shot setting, and 49.10 on MMLU in the five-shot setting. These results underline its competitiveness: the model's performance is comparable to that of much larger, more resource-intensive counterparts. Extending the context length to 28K tokens allowed YuLan-Mini to perform well in long-text scenarios while maintaining high accuracy on short-text tasks. This dual capability sets it apart from many existing models, which often sacrifice one for the other.

Key findings from the research include:

- Using a meticulously designed data pipeline, YuLan-Mini reduces dependence on massive datasets while ensuring high-quality learning.

- Techniques such as systematic optimization and annealing prevent common problems, including loss spikes and gradient explosions.

- Expanding the context length to 28,672 tokens improves the model's applicability to complex long-text tasks.

- Despite its modest computational requirements, YuLan-Mini achieves results comparable to those of much larger models, demonstrating the effectiveness of its design.

- Synthetic data integration improves training results and reduces the need for proprietary data sets.
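The annealing and stability measures mentioned in the findings above can be illustrated with a small sketch. The article does not specify the exact schedule, so the warmup-stable-decay shape, the linear decay, the default fractions, and the clipping threshold below are all assumptions. Gradient clipping is included as one widely used guard against gradient explosions, not as a confirmed detail of YuLan-Mini's training recipe.

```python
import math


def wsd_lr(step: int, total_steps: int, peak_lr: float = 1e-2,
           warmup_frac: float = 0.01, decay_frac: float = 0.1,
           min_lr: float = 0.0) -> float:
    """Warmup-stable-decay schedule: linear warmup, flat plateau, linear anneal."""
    warmup_steps = max(1, int(total_steps * warmup_frac))
    decay_steps = max(1, int(total_steps * decay_frac))
    decay_start = total_steps - decay_steps
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    if step < decay_start:
        return peak_lr
    progress = (step - decay_start) / decay_steps  # 0.0 at start of annealing
    return peak_lr + (min_lr - peak_lr) * progress


def clip_by_global_norm(grads: list[float], max_norm: float) -> list[float]:
    """Rescale gradients so their L2 norm does not exceed `max_norm`."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


total = 1_000
for step in (0, 500, 950, 999):
    print(step, wsd_lr(step, total))

# A gradient with norm 5 is scaled down to norm 1; its direction is unchanged.
print(clip_by_global_norm([3.0, 4.0], max_norm=1.0))
```

Keeping the learning rate flat for most of training and annealing only at the end makes it easy to resume or extend a run, while clipping bounds the size of any single update when a loss spike occurs.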

In conclusion, YuLan-Mini is a strong new addition to the growing family of efficient LLMs. Its ability to deliver high performance with limited resources addresses critical barriers to AI accessibility. The research team's focus on techniques ranging from data efficiency to training stability highlights the potential for smaller-scale research to contribute significantly to the field. Trained on only 1.08T tokens, YuLan-Mini sets a benchmark for resource-efficient LLMs.

Check out the Paper and GitHub page. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an AI media platform, Marktechpost, which stands out for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable to a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity.