Specialization becomes necessary

A hospital is packed with experts and doctors, each with their own specialization, solving unique problems. Surgeons, cardiologists, pediatricians—experts of all kinds come together to provide care, often collaborating to get patients the care they need. We can do the same with ai.

Mixture of Experts (MoE) architecture in ai is defined as a combination of different “expert” models working together to address or respond to complex data inputs. When it comes to ai, each expert in a MoE model specializes in a much larger problem, just like each doctor specializes in their medical field. This improves efficiency and increases the effectiveness and accuracy of the system.

Mistral ai offers open source basic LLMs that rival those of OpenAI. They have formally discussed using a MoE architecture in their Mixtral 8x7B model, a revolutionary advancement in the form of a cutting-edge Large Language Model (LLM). We will delve into why Mistral ai’s Mixtral stands out among other basic LLMs and why current LLMs now employ the MoE architecture highlighting its speed, size, and accuracy.

Common ways to update large language models (LLMs)

To better understand how MoE architecture improves our LLMs, let’s look at common methods for improving LLM efficiency. ai practitioners and developers improve models by increasing parameters, tuning the architecture, or fine-tuning it.

- Increasing parameters: By feeding in more information and interpreting it, the model's ability to learn and represent complex patterns increases. However, this can lead to overfitting and hallucinations, which requires reinforcement learning from human feedback (RLHF).

- Modifying the architecture: The introduction of new layers or modules allows to adapt to the growing number of parameters and improve performance in specific tasks. However, changes in the underlying architecture are difficult to implement.

- Fine tuning: Pre-trained models can be fine-tuned with specific data or through transfer learning, allowing existing LLMs to handle new tasks or domains without starting from scratch. This is the easiest method and does not require significant changes to the model.

What is MoE architecture?

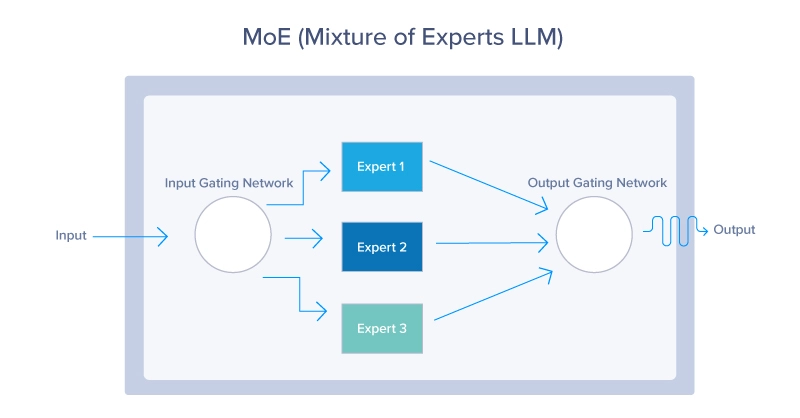

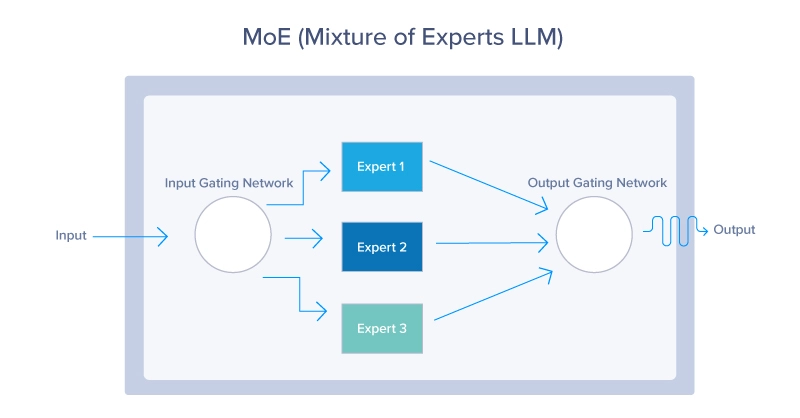

Mixture of Experts (MoE) architecture is a neural network design that improves efficiency and performance by dynamically activating a subset of specialized networks, called experts, for each input. A gate network determines which experts to activate, resulting in sparse activation and reduced computational cost. The MoE architecture consists of two critical components: the gate network and the experts. Let’s break this down:

In essence, the MoE architecture works like an efficient traffic system that routes each vehicle (or in this case, data) to the best route based on real-time conditions and the desired destination. Each task is dispatched to the most appropriate expert or sub-model, specialized in handling that particular task. This dynamic routing ensures that the most capable resources are employed for each task, improving the overall efficiency and effectiveness of the model. The MoE architecture leverages all three ways to improve the fidelity of a model.

- By implementing multiple experts, MoE inherently augments the model.

- parameter size by adding more parameters per expert.

- MoE changes the classical neural network architecture that incorporates a gated network to determine which experts to employ for a designated task.

- Every ai model has some degree of fine-tuning, so every expert in an MoE is primed to perform as expected thanks to an additional layer of fine-tuning that traditional models could not leverage.

MoE Gate Network

The control network acts as the decision maker or controller within the MoE model. It evaluates incoming tasks and determines which expert is appropriate to handle them. This decision is typically based on learned weights, which are fine-tuned over time through training, further improving its ability to associate tasks with experts. The control network can employ a variety of strategies, from probabilistic methods where flexible tasks are assigned to multiple experts, to deterministic methods that assign each task to a single expert.

Experts from the Ministry of Education

Each expert in the MoE model represents a smaller neural network, machine learning model, or LLM optimized for a specific subset of the problem domain. For example, in Mistral, different experts can specialize in understanding certain languages, dialects, or even query types. Specialization ensures that each expert is competent in their niche, which, when combined with contributions from other experts, will lead to superior performance across a wide range of tasks.

MoE loss function

Although not considered a core component of the MoE architecture, the loss function plays a critical role in the future performance of the model as it is designed to optimize both the individual experts and the control network.

Typically, it combines the losses calculated for each expert, weighted by the probability or importance assigned to them by the control network. This helps to tune the experts for their specific tasks, while also tuning the control network to improve routing accuracy.

The Ministry of Education process from start to finish

Now let's summarize the whole process, adding more details.

Here is a brief explanation of how the routing process works from start to finish:

- Input Processing: Initial handling of incoming data. Mainly our Prompt in the case of LLMs.

- Feature Extraction: Transforming raw data for analysis.

- Gating network evaluation: Assessing the suitability of experts using probabilities or weights.

- Weighted routing: Assigning data based on calculated weights. This completes the process of selecting the most suitable LLM. In some cases, multiple LLMs are chosen to respond to a single data item.

- Task execution: Processing the information assigned by each expert.

- Integration of expert results: combining individual expert results to obtain a final result.

- Feedback and adaptation: Using performance feedback to improve models.

- Iterative optimization: Continuous refinement of routing and model parameters.

Popular models using MoE architecture

- OpenAI's GPT-4 and GPT-4o: GPT-4 and GPT4o power the premium version of ChatGPT. These multimodal models use MoE to be able to ingest different source media such as images, text, and speech. It is rumored and mildly confirmed that GPT-4 has 8 experts, each with 220 billion parameters, which adds up to a total of over 1.7 trillion parameters in the entire model.

- Mistral ai Mixtral 8x7b: Mistral ai offers very powerful open-source ai models and has claimed that its Mixtral model is a sMoE model or a sparse mixture of experts model delivered in a small package. Mixtral 8x7b has a total of 46.7 billion parameters but only uses 12.9 billion parameters per token, so it processes inputs and outputs at that cost. Its MoE model consistently outperforms Llama2 (70 billion) and GPT-3.5 (175 billion) while costing less to run.

The benefits of MoE and why it is the preferred architecture

Ultimately, the main goal of the MoE architecture is to introduce a paradigm shift in the way complex machine learning tasks are tackled. It offers unique advantages and demonstrates its superiority over traditional models in several ways.

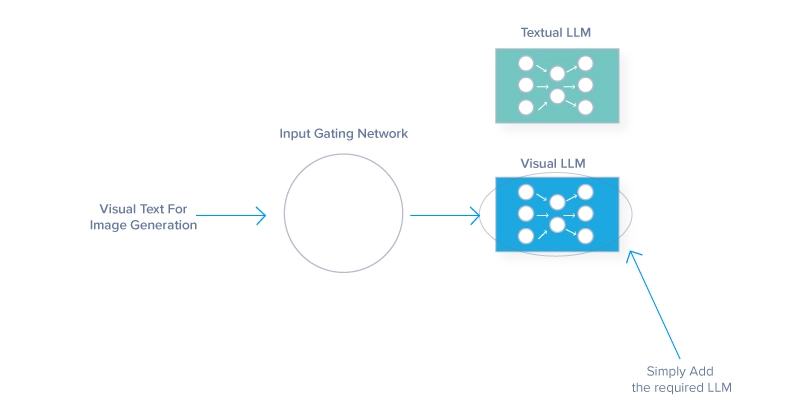

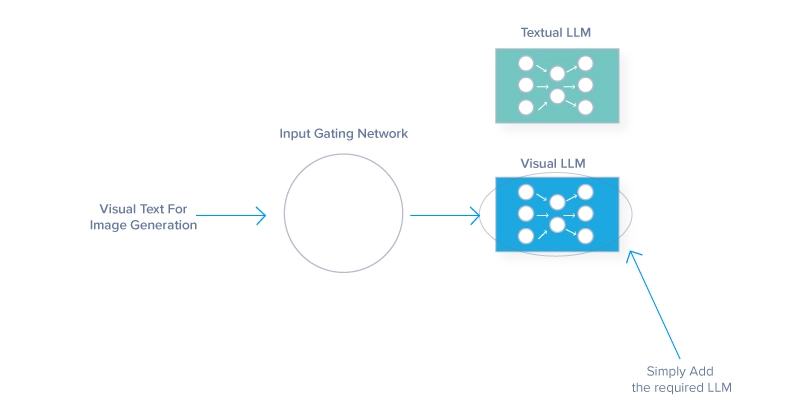

- Improved model scalability

- Each expert is responsible for a portion of a task, so scaling by adding experts will not result in a proportional increase in computational demands.

- This modular approach can handle larger and more diverse data sets and facilitates parallel processing, speeding up operations. For example, adding an image recognition model to a text-based model can integrate an additional LLM expert to interpret images while still being able to generate text. Or

- Versatility allows the model to extend its capabilities across different types of data inputs.

- Greater efficiency and flexibility

- MoE models are extremely efficient and only selectively involve the experts needed to obtain specific insights, unlike conventional architectures that use all their parameters independently.

- The architecture reduces the computational burden for inference, allowing the model to adapt to different data types and specialized tasks.

- Specialization and precision:

- Each expert in an MoE system can be tailored to specific aspects of the overall problem, leading to greater expertise and accuracy in those areas.

- A specialization like this is useful in fields like medical imaging or financial forecasting, where accuracy is key.

- MoE can generate better results from narrow domains due to its nuanced understanding, detailed knowledge, and the ability to outperform generalist models on specialized tasks.

The Disadvantages of MoE Architecture

While MoE architecture offers significant advantages, it also presents challenges that can impact its adoption and effectiveness.

- Model complexity: Managing multiple neural network experts and a control network to direct traffic makes MoE development and operational costs challenging.

- Training stability: The interaction between the control network and the experts introduces unpredictable dynamics that prevent achieving uniform learning rates and require exhaustive tuning of hyperparameters.

- Imbalance: Leaving experts idle is a poor optimization for the MoE model, as resources are wasted on unused experts or certain experts are over-reliant. Balancing the workload distribution and tuning an effective gate is crucial for high-performance MoE ai.

It should be noted that the above-mentioned drawbacks usually diminish over time as the MoE architecture is improved.

The future determined by specialization

Reflecting on the MoE approach and its human parallel, we see that just as specialized teams accomplish more than a generalized workforce, specialized models outperform their monolithic counterparts in ai models. Prioritizing diversity and expertise turns the complexity of large-scale problems into manageable segments that experts can effectively address.

As we look to the future, let’s consider the broader implications of specialized systems on the advancement of other technologies. MoE principles could influence developments in sectors such as healthcare, finance, and autonomous systems, promoting more efficient and accurate solutions.

MoE’s journey is just beginning, and its continued evolution promises to drive further innovation in ai and beyond. As high-performance hardware continues to advance, this mix of expert AIs may reside in our smartphones, capable of delivering even smarter experiences. But first, someone will have to train one.

Kevin Vu manages Exxact Corp Blog and works with many of its talented authors who write about different aspects of deep learning.

NEWSLETTER

NEWSLETTER