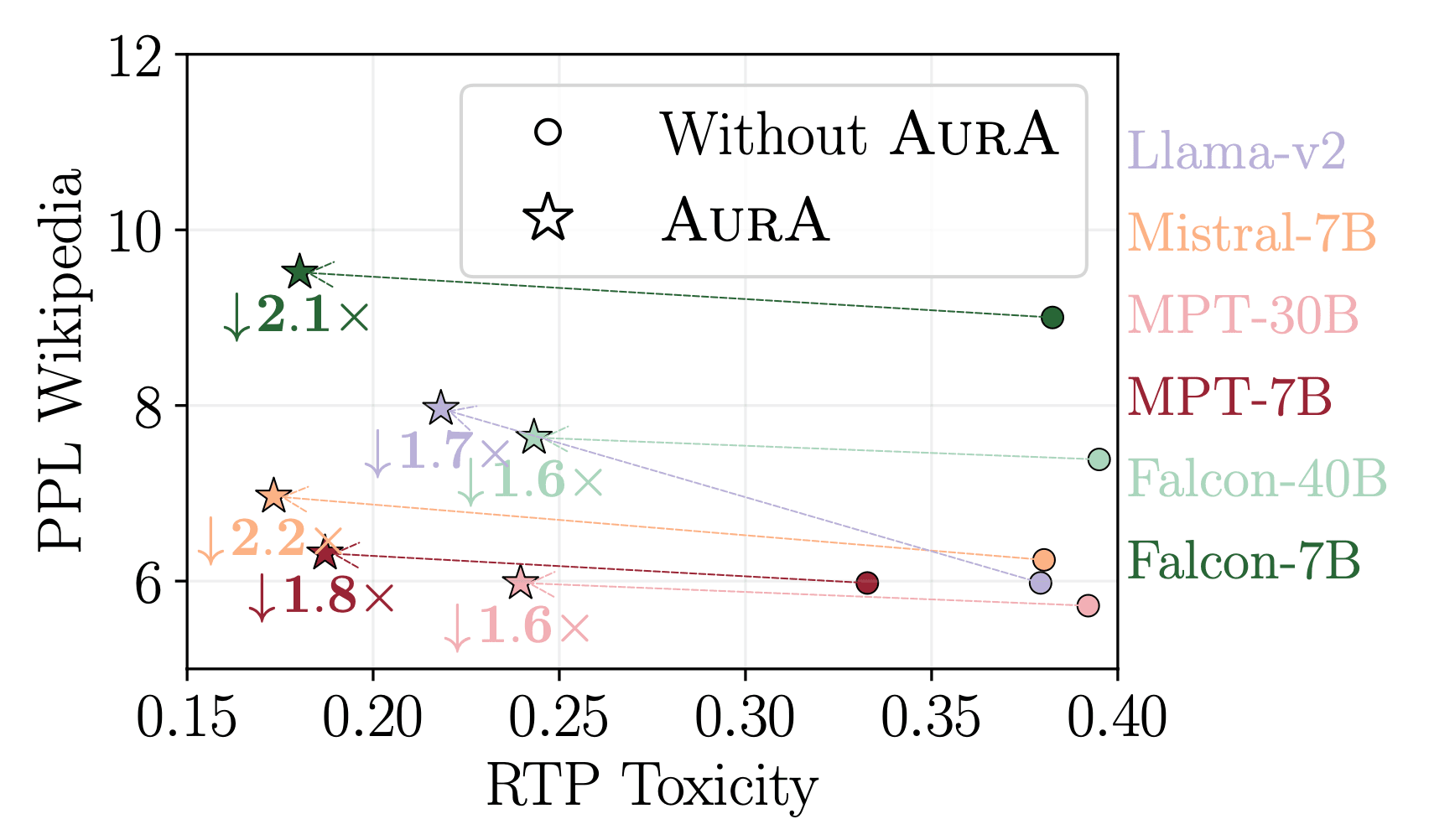

A major problem with large language models (LLMs) is their unintended ability to generate toxic language. In this work, we show that the neurons responsible for toxicity can be determined by their ability to discriminate toxic sentences, and that toxic language can be mitigated by reducing their activation levels proportionally to this ability. We propose AUROC adaptation (AURA), an intervention that can be applied to any pre-trained LLM to mitigate toxicity. As the intervention is proportional to each neuron’s ability to discriminate toxic content, it is free from any model-dependent hyperparameters. We show that AURA can achieve up to a 2.2× reduction in toxicity with only a 0.72 perplexity increase. We also show that AURA is effective with models of different scales (from 1.5B to 40B parameters), and its effectiveness in mitigating toxic language, while preserving zero-firing commonsense capabilities, is maintained across all scales. AURA can be combined with pre-warning strategies, increasing its average mitigation potential from 1.28x to 2.35x. Additionally, AURA can counter adversarial pre-warnings that maliciously cause toxic content, making it an effective method for implementing safer, less toxic models.

Whispering experts: mitigating toxicity in pre-trained language models by attenuating expert neurons

ADVERTISEMENT

NEWSLETTER

NEWSLETTER