Our previous guide discussed the Reflection Pattern, the first of the four Agentic AI design patterns (Reflection, Tool Use, Planning, and Multi-Agent). This guide covers the second: the Tool Use Pattern in Agentic AI.

First, a brief recap of the Reflection Pattern article. It explains how LLMs can improve output quality through iterative generation and self-assessment. The core idea is that the AI, much like a course creator revising lesson plans, generates content, critiques it, and refines it, improving with each cycle. In the Reflection Pattern, the AI plays dual roles: creator and critic. The cycle repeats until a stopping criterion is met, such as a fixed number of iterations or an acceptable level of quality. The pattern is particularly useful in complex tasks like text generation, problem-solving, and code development. The comprehensive article is here: What is Agentic AI Reflection Pattern?

Now, let’s talk about the Tool Use Pattern in Agentic AI, a crucial mechanism that enables AI to interact with external systems, APIs, or resources beyond its internal capabilities.

Also, here are the four Agentic AI design patterns: Top 4 Agentic AI Design Patterns for Architecting AI Systems.

Overview

- The Tool Use Pattern in Agentic AI enables language models to interact with external systems, going beyond their built-in limitations by accessing real-time information and specialized tools.

- It addresses a traditional constraint of LLMs, which are limited to the (often outdated) data they were pre-trained on, by allowing dynamic integration with external resources.

- This design pattern uses modularization, where tasks are assigned to specialized tools, enhancing efficiency, flexibility, and scalability.

- Agentic AI can autonomously select, use, and coordinate multiple tools to perform complex tasks without constant human input.

- Examples include agents that conduct real-time web searches, perform sentiment analysis, and fetch trending news, all enabled by tool integration.

- The pattern highlights key agentic features such as decision-making, adaptability, and the ability to learn from tool usage, paving the way for more autonomous and versatile AI systems.

Tool Use is a key design pattern that changes how large language models (LLMs) operate. It lets LLMs go beyond their built-in limitations by calling external functions to gather information, perform actions, or manipulate data. With Tool Use, LLMs are no longer confined to producing text from their pre-trained knowledge; they can access external resources such as web searches, code execution environments, and productivity tools. This opens up many possibilities for AI-driven agentic workflows.

Traditional Limitations of LLMs

The initial development of LLMs focused on using pre-trained transformer models to predict the next token in a sequence. While this was groundbreaking, the model’s knowledge was limited to its training data, which became outdated and constrained its ability to interact dynamically with real-world data.

For instance, take the query: “What are the latest stock market trends for 2024?” A language model trained solely on static data would likely provide outdated information. However, by incorporating a web search tool, the model can perform a live search, gather recent data, and deliver an up-to-date analysis. This highlights a key shift from merely referencing preexisting knowledge to accessing and integrating fresh, real-time information into the AI’s workflow.

The diagram above represents a conceptual Agentic AI tool use pattern, where an AI system interacts with multiple specialised tools to process user queries efficiently by accessing various information sources. This approach is part of an evolving methodology in AI called Agentic AI, designed to enhance the AI’s ability to handle complex tasks by leveraging external resources.

Core Idea Behind the Tool Use Pattern

- Modularization of Tasks: Instead of relying on a monolithic AI model that tries to handle everything, the system breaks down user prompts and assigns them to specific tools (represented as Tool A, Tool B, Tool C). Each tool specializes in a distinct capability, which makes the overall system more efficient and scalable.

- Specialized Tools for Diverse Tasks:

  - Tool A: This could be, for example, a fact-checker tool that queries databases or the internet to validate information.

  - Tool B: This might be a mathematical solver or a code execution environment designed to handle calculations or run simulations.

  - Tool C: Another specialized tool, possibly for language translation or image recognition.

- Each tool in the diagram is shown querying information sources (e.g., databases or web APIs) as needed, suggesting a modular architecture where different sub-agents or specialized components handle different tasks.

- Sequential Processing: The model likely runs sequential queries through the tools, which means that multiple prompts can be processed one by one, and each tool independently queries its respective data sources. This allows for fast, responsive results, especially when combined with tools that excel in their specific domain.

End-to-End Process:

- Input: User asks “What is 2 times 3?”

- Interpretation: The LLM recognizes this as a mathematical operation.

- Tool Selection: The LLM selects the multiply tool.

- Payload Creation: It extracts relevant arguments (a: 2 and b: 3), prepares a payload, and calls the tool.

- Execution: The tool performs the operation (2 * 3 = 6), and the result is passed back to the LLM to present to the user.
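The steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not the mechanism of any particular framework: the `TOOLS` registry, the `call_tool` helper, and the payload layout (`name` plus `arguments`) are assumptions made for the example, and the payload is written by hand where an LLM would normally produce it.

```python
import json


def multiply(a: float, b: float) -> float:
    """Multiply two numbers."""
    return a * b


# Hypothetical registry mapping tool names to Python callables.
TOOLS = {"multiply": multiply}


def call_tool(payload_json: str):
    """Parse a payload of the form {"name": ..., "arguments": {...}} and run the tool."""
    payload = json.loads(payload_json)
    tool_fn = TOOLS[payload["name"]]          # tool selection
    return tool_fn(**payload["arguments"])    # execution with extracted arguments


# Payload an LLM might emit for "What is 2 times 3?" (hand-written here).
payload = json.dumps({"name": "multiply", "arguments": {"a": 2, "b": 3}})
result = call_tool(payload)
print(result)  # 6
```

Keeping the payload as JSON means the model only has to produce text, while the surrounding program stays in control of which functions can actually be executed.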

Why This Matters in Agentic AI

This diagram captures a central feature of agentic AI, where models can autonomously decide which external tools to use based on the user’s query. Instead of merely providing a static response, the LLM acts as an agent that dynamically selects tools, formats data, and returns processed results, which is a core part of tool-use patterns in agentic AI systems. This type of tool integration allows LLMs to extend their capabilities far beyond simple language processing, making them more versatile agents capable of performing structured tasks efficiently.

We will implement the tool use pattern in 3 ways:

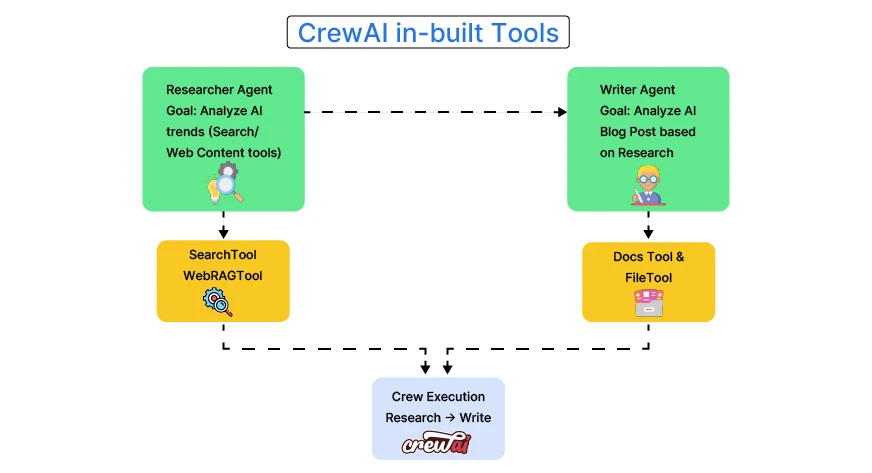

CrewAI in-built Tools (Blog Research and Content Generation Agent (BRCGA))

We are building a Blog Research and Content Generation Agent (BRCGA) that automates the process of researching the latest trends in the AI industry and crafting high-quality blog posts. This agent leverages specialised tools to gather information from web searches, directories, and files, ultimately producing engaging and informative content.

The BRCGA is divided into two core roles:

- Researcher Agent: Focused on gathering insights and market analysis.

- Writer Agent: Responsible for creating well-written blog posts based on the research.

Here’s the code:

import os
from crewai import Agent, Task, Crew

- os module: This is a standard Python module that provides functions to interact with the operating system. It’s used here to set environment variables for API keys.

- crewai: The Python package of the CrewAI framework. It provides the Agent, Task, and Crew classes, which are used to define AI agents, assign tasks to them, and organize a team of agents (a crew) to accomplish those tasks.

# Importing crewAI tools
from crewai_tools import (
    DirectoryReadTool,
    FileReadTool,
    SerperDevTool,
    WebsiteSearchTool,
)

Explanations

- crewai_tools: CrewAI’s companion package of ready-made tools for reading files, searching directories, and performing web searches. The tools are:

- DirectoryReadTool: Used to read content from a specified directory.

- FileReadTool: Used to read individual files.

- SerperDevTool: An integration tool for performing web searches through the serper.dev API, which is a Google Search API.

- WebsiteSearchTool: Used to search and retrieve content from websites.

os.environ["SERPER_API_KEY"] = "Your Key"  # serper.dev API key
os.environ["OPENAI_API_KEY"] = "Your Key"

These lines set environment variables for the API keys of the Serper API and OpenAI API. These keys are necessary for accessing external services (e.g., for web searches or using GPT models).
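As an alternative to writing keys into the script, they can be read from the environment and checked up front. The sketch below is only one possible approach; `require_env` is a hypothetical helper name, and the placeholder value is set purely so the example runs end to end.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable, or fail with a clear message."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value


# Demo only: provide a placeholder if the variable is not already set.
os.environ.setdefault("SERPER_API_KEY", "placeholder-key")
serper_key = require_env("SERPER_API_KEY")
```

Failing early with a named variable tends to be easier to debug than an authentication error raised later from inside a tool call.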

# Instantiate tools
docs_tool = DirectoryReadTool(directory='/home/xy/VS_Code/Ques_Ans_Gen/blog-posts')
file_tool = FileReadTool()
search_tool = SerperDevTool()
web_rag_tool = WebsiteSearchTool()

Explanations

- docs_tool: Reads files from the specified directory (/home/xy/VS_Code/Ques_Ans_Gen/blog-posts). This could be used for reading past blog posts to help in writing new ones.

- file_tool: Reads individual files, which could come in handy for retrieving research materials or drafts.

- search_tool: Performs web searches using the Serper API to gather data on market trends in AI.

- web_rag_tool: Searches for specific website content to assist in research.

# Create agents
researcher = Agent(
    role="Market Research Analyst",
    goal="Provide 2024 market analysis of the AI industry",
    backstory='An expert analyst with a keen eye for market trends.',
    tools=[search_tool, web_rag_tool],
    verbose=True
)

Explanations

Researcher: This agent conducts market research. It uses the search_tool (for online searches) and the web_rag_tool (for specific website queries). The verbose=True setting ensures that the agent provides detailed logs during task execution.

writer = Agent(
    role="Content Writer",
    goal="Craft engaging blog posts about the AI industry",
    backstory='A skilled writer with a passion for technology.',
    tools=[docs_tool, file_tool],
    verbose=True
)

Writer: This agent is responsible for creating content. It uses the docs_tool (to gather inspiration from previous blog posts) and the file_tool (to access files). Like the researcher, it is set to verbose=True for detailed task output.

# Define tasks
research = Task(
    description=(
        "Research the latest trends in the AI industry and provide a "
        "summary."
    ),
    expected_output=(
        "A summary of the top 3 trending developments in the AI industry "
        "with a unique perspective on their significance."
    ),
    agent=researcher
)

Research task: The researcher agent is tasked with identifying the latest trends in AI and producing a summary of the top three developments.

write = Task(
    description=(
        "Write an engaging blog post about the AI industry, based on the "
        "research analyst's summary. Draw inspiration from the latest blog "
        "posts in the directory."
    ),
    expected_output=(
        "A 4-paragraph blog post formatted in markdown with engaging, "
        "informative, and accessible content, avoiding complex jargon."
    ),
    agent=writer,
    output_file="blog-posts/new_post.md"  # The final blog post will be saved here
)

Write task: The writer agent is responsible for writing a blog post based on the researcher’s findings. The final post will be saved to ‘blog-posts/new_post.md’.

# Assemble a crew with planning enabled
crew = Crew(
    agents=[researcher, writer],
    tasks=[research, write],
    verbose=True,
    planning=True,  # Enable planning feature
)

Crew: This is the team of agents (researcher and writer) tasked with completing the research and writing jobs. The planning=True option enables CrewAI’s planning feature, so the crew drafts a plan for how to approach the tasks before executing them.

# Execute tasks
result = crew.kickoff()

This line kicks off the execution of the tasks. The crew (agents) will carry out their assigned tasks in the order they planned, with the researcher doing the research first and the writer crafting the blog post afterwards.

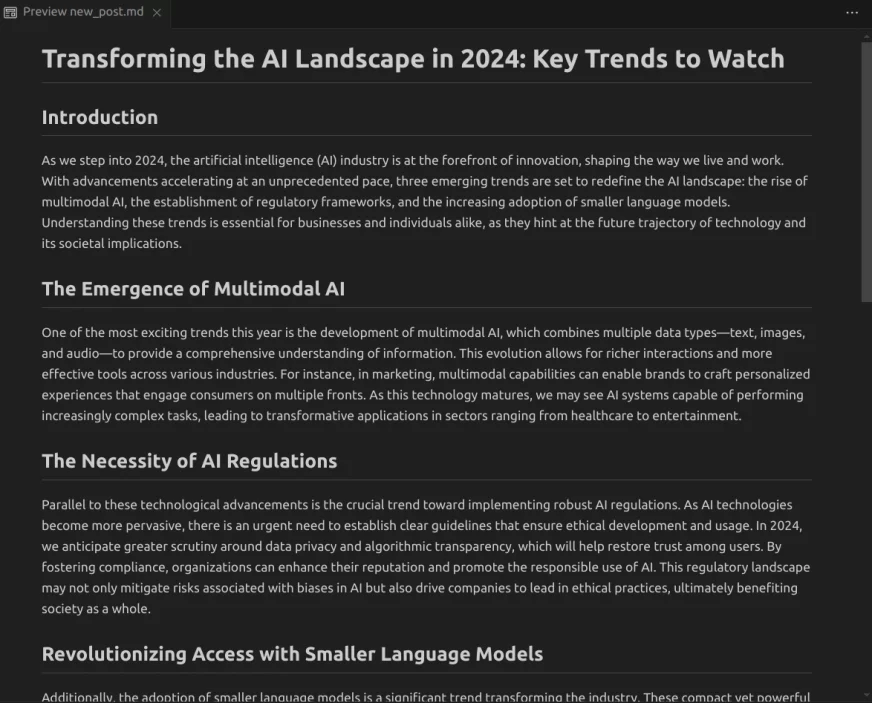

Here’s the article:

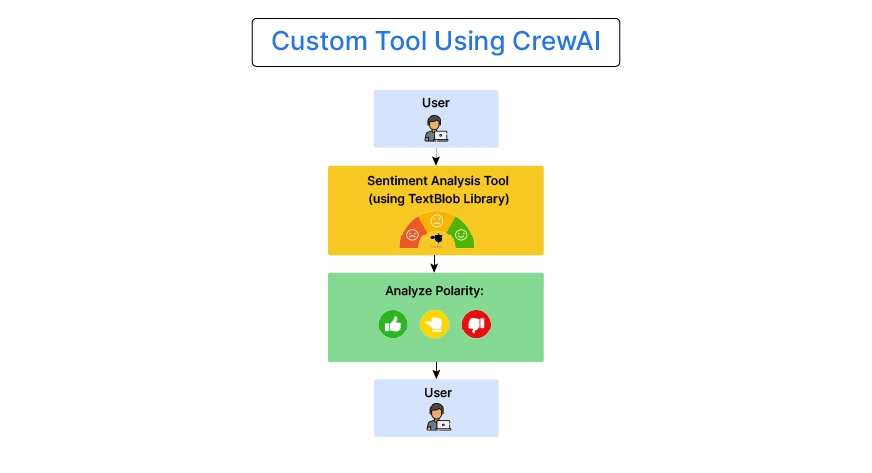

Custom Tool Using CrewAI (SentimentAI)

The agent we’re building, SentimentAI, is designed to act as a powerful assistant that analyses text content, evaluates its sentiment, and ensures positive and engaging communication. This tool could be used in various fields, such as customer service, marketing, or even personal communication, to gauge the emotional tone of the text and ensure it aligns with the desired communication style.

The agent could be deployed in customer service, social media monitoring, or brand management to help companies understand how their customers feel about them in real time. For example, a company can use SentimentAI to analyse incoming customer support requests and route negative sentiment cases for immediate resolution. This helps companies maintain positive customer relationships and address pain points quickly.

Agent’s Purpose:

- Text Analysis: Evaluate the tone of messages, emails, social media posts, or any other form of written communication.

- Sentiment Monitoring: Identify positive, negative, or neutral sentiments to help users maintain a positive engagement.

- User Feedback: Provide actionable insights for improving communication by suggesting tone adjustments based on sentiment analysis.

Implementation Using CrewAI Tools

Here’s the link: CrewAI

import os

from crewai import Agent, Task, Crew
from dotenv import load_dotenv

load_dotenv()  # Load API keys from a local .env file

# from utils import get_openai_api_key, pretty_print_result
# from utils import get_serper_api_key
# openai_api_key = get_openai_api_key()
os.environ["OPENAI_MODEL_NAME"] = 'gpt-4o'
# os.environ["SERPER_API_KEY"] = get_serper_api_key()

Here are the agents:

- sales_rep_agent

- lead_sales_rep_agent

sales_rep_agent = Agent(
    role="Sales Representative",
    goal="Identify high-value leads that match "
         "our ideal customer profile",
    backstory=(
        "As a part of the dynamic sales team at CrewAI, "
        "your mission is to scour "
        "the digital landscape for potential leads. "
        "Armed with cutting-edge tools "
        "and a strategic mindset, you analyze data, "
        "trends, and interactions to "
        "unearth opportunities that others might overlook. "
        "Your work is crucial in paving the way "
        "for meaningful engagements and driving the company's growth."
    ),
    allow_delegation=False,
    verbose=True
)

lead_sales_rep_agent = Agent(
    role="Lead Sales Representative",
    goal="Nurture leads with personalized, compelling communications",
    backstory=(
        "Within the vibrant ecosystem of CrewAI's sales department, "
        "you stand out as the bridge between potential clients "
        "and the solutions they need. "  # trailing space keeps sentences apart
        "By creating engaging, personalized messages, "
        "you not only inform leads about our offerings "
        "but also make them feel seen and heard. "
        "Your role is pivotal in converting interest "
        "into action, guiding leads through the journey "
        "from curiosity to commitment."
    ),
    allow_delegation=False,
    verbose=True
)

from crewai_tools import DirectoryReadTool, FileReadTool, SerperDevTool

directory_read_tool = DirectoryReadTool(directory='/home/badrinarayan/Downloads/instructions')
file_read_tool = FileReadTool()
search_tool = SerperDevTool()

from crewai_tools import BaseTool
from textblob import TextBlob

class SentimentAnalysisTool(BaseTool):
    name: str = "Sentiment Analysis Tool"
    description: str = ("Analyzes the sentiment of text "
                        "to ensure positive and engaging communication.")

    def _run(self, text: str) -> str:
        # Perform sentiment analysis using TextBlob
        analysis = TextBlob(text)
        polarity = analysis.sentiment.polarity

        # Determine sentiment based on polarity
        if polarity > 0:
            return "positive"
        elif polarity < 0:
            return "negative"
        else:
            return "neutral"

sentiment_analysis_tool = SentimentAnalysisTool()

Explanation

This code demonstrates a tool use pattern within an agentic AI framework, where a specific tool, the Sentiment Analysis Tool, is implemented for sentiment analysis using the TextBlob library. Let’s break down the components and understand the flow:

Imports

- BaseTool: This is imported from the crewai_tools module and provides the foundation for creating custom tools in the system.

- TextBlob: A popular Python library used for processing textual data, particularly to perform tasks like sentiment analysis, part-of-speech tagging, and more. In this case, it’s used to assess the sentiment of a given text.

Class Definition: SentimentAnalysisTool

The class SentimentAnalysisTool inherits from BaseTool, meaning it will have the behaviours and properties of BaseTool, and it’s customised for sentiment analysis. Let’s break down each section:

Attributes

- Name: The string “Sentiment Analysis Tool” is assigned as the tool’s name, which gives it an identity when invoked.

- Description: A brief description explains what the tool does, i.e., it analyses text sentiment to maintain positive communication.

Method: _run()

The _run() method is the core logic of the tool. In agentic AI frameworks, methods like _run() are used to define what a tool will do when it’s executed.

- Input Parameter (text: str): The method takes a single argument text (of type string), which is the text to be analyzed.

- Sentiment Analysis Logic:

- The code creates a TextBlob object using the input text: analysis = TextBlob(text).

- The sentiment analysis itself is performed by accessing the sentiment.polarity attribute of the TextBlob object. Polarity is a float value between -1 (negative sentiment) and 1 (positive sentiment).

- Conditional Sentiment Determination: Based on the polarity score, the sentiment is determined:

- Positive Sentiment: If the polarity > 0, the method returns the string “positive”.

- Negative Sentiment: If polarity < 0, the method returns “negative”.

- Neutral Sentiment: If the polarity == 0, it returns “neutral”.
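The threshold logic can be isolated into a small function that runs without TextBlob. In this sketch, `label_sentiment` and its `neutral_band` parameter are hypothetical additions for illustration; with the default of 0.0 the function mirrors the comparison used in the tool, while a wider band treats weak scores as neutral.

```python
def label_sentiment(polarity: float, neutral_band: float = 0.0) -> str:
    """Map a polarity score in [-1, 1] to a sentiment label.

    Scores within +/- neutral_band count as neutral (hypothetical extension).
    The default of 0.0 reproduces the strict > 0 / < 0 comparison.
    """
    if polarity > neutral_band:
        return "positive"
    if polarity < -neutral_band:
        return "negative"
    return "neutral"


for score in (0.6, -0.3, 0.0, 0.05):
    print(score, label_sentiment(score), label_sentiment(score, neutral_band=0.1))
```

A strict zero threshold labels even a slightly positive score such as 0.05 as "positive"; whether a neutral band is worthwhile depends on how noisy the polarity scores are for the texts being analysed.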

Instantiating the Tool

At the end of the code, sentiment_analysis_tool = SentimentAnalysisTool() creates an instance of the SentimentAnalysisTool. This instance can now be used to run sentiment analysis on any input text by calling its _run() method.

For the full code implementation, refer to this link: Google Colab.

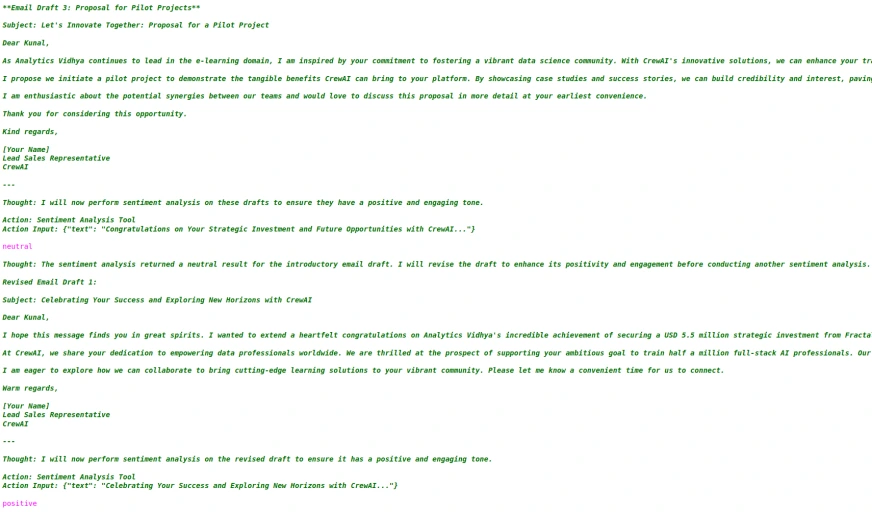

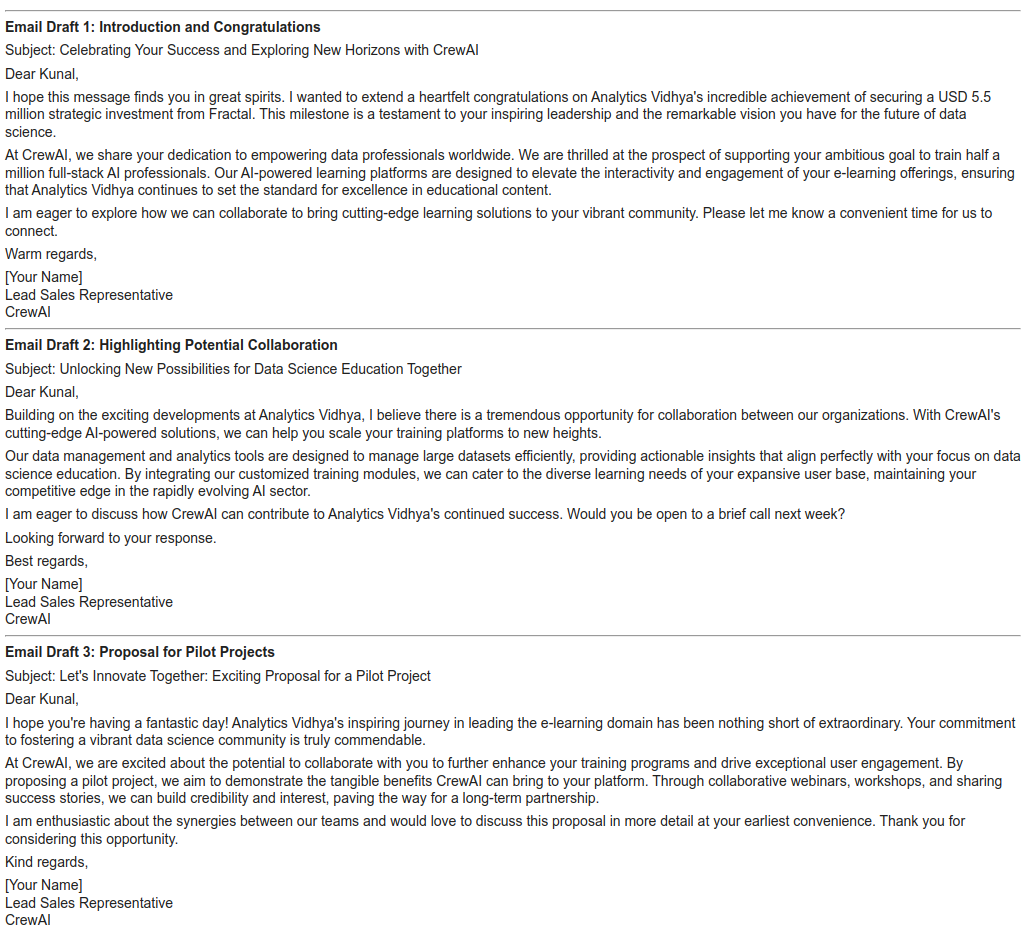

Output

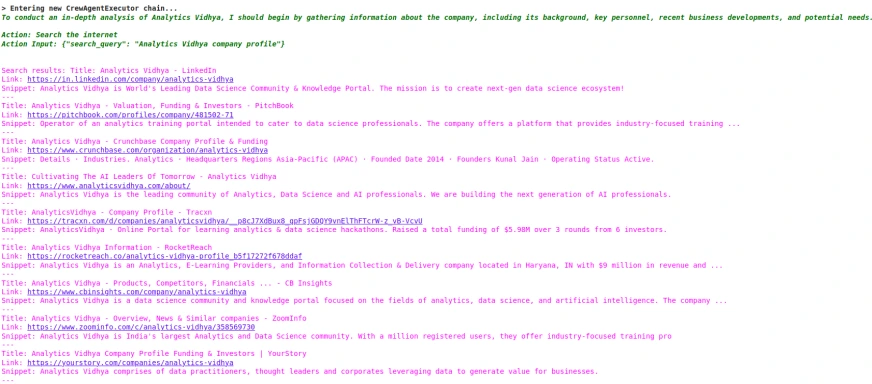

Here, the agent retrieves information from the internet based on the “search_query”: “Analytics Vidhya company profile.”

Here’s the output with sentiment analysis:

Here, we are displaying the final result as Markdown:

You can find the full code and output here: Colab Link

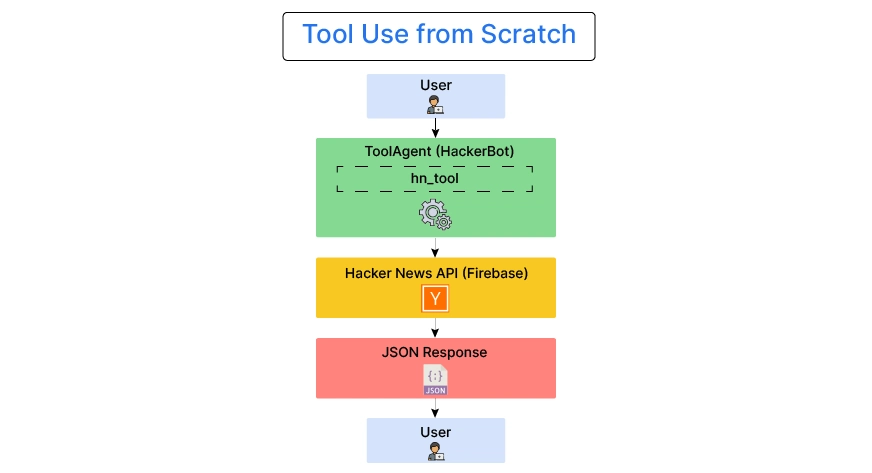

Tool Use from Scratch (HackerBot)

HackerBot is an AI agent designed to fetch and present the latest top stories from Hacker News, a popular news platform focused on technology, startups, and software development. By leveraging the Hacker News API, HackerBot can quickly retrieve and deliver information about the top trending stories, such as their titles and URLs. It serves as a useful tool for developers, tech enthusiasts, and anyone interested in staying updated on the latest tech news.

The agent can interact with users based on their requests and fetch news in real time. With the ability to integrate more tools, HackerBot can be extended to provide other tech-related functionalities, such as summarizing articles, providing developer resources, or answering questions related to the latest tech trends.

Note: For tool use from scratch, we are referring to the research and implementation of the Agentic AI design pattern by Michaelis Trofficus.

Here is the code:

import json

import requests

from agentic_patterns.tool_pattern.tool import tool
from agentic_patterns.tool_pattern.tool_agent import ToolAgent

- json: Provides functions to encode and decode JSON data.

- requests: A popular HTTP library used to make HTTP requests to APIs.

- tool and ToolAgent: These are imported from the agentic_patterns package. They allow the definition of “tools” that an agent can use to perform specific tasks based on user input.

For the implementation behind “from agentic_patterns.tool_pattern.tool import tool” and “from agentic_patterns.tool_pattern.tool_agent import ToolAgent”, you can refer to this repo.

def fetch_top_hacker_news_stories(top_n: int):
    """
    Fetch the top stories from Hacker News.

    This function retrieves the top `top_n` stories from Hacker News using the
    Hacker News API. Each returned story contains its title and URL. The data
    is fetched from the official Firebase Hacker News API, which returns story
    details in JSON format.

    Args:
        top_n (int): The number of top stories to retrieve.
    """
    top_stories_url = "https://hacker-news.firebaseio.com/v0/topstories.json"

    try:
        response = requests.get(top_stories_url)
        response.raise_for_status()  # Check for HTTP errors

        # Get the top story IDs
        top_story_ids = response.json()[:top_n]

        top_stories = []

        # For each story ID, fetch the story details
        for story_id in top_story_ids:
            story_url = f'https://hacker-news.firebaseio.com/v0/item/{story_id}.json'
            story_response = requests.get(story_url)
            story_response.raise_for_status()  # Check for HTTP errors
            story_data = story_response.json()

            # Append the story title and URL (or other relevant info) to the list
            top_stories.append({
                'title': story_data.get('title', 'No title'),
                'url': story_data.get('url', 'No URL available'),
            })

        return json.dumps(top_stories)

    except requests.exceptions.RequestException as e:
        print(f"An error occurred: {e}")
        return []

- fetch_top_hacker_news_stories: This function is designed to fetch the top stories from Hacker News. Here’s a detailed breakdown of the function:

- top_n (int): The number of top stories the user wants to retrieve (for example, top 5 or top 10).

Main Process

- URL Definition:

- top_stories_url is set to Hacker News’ top stories API endpoint (https://hacker-news.firebaseio.com/v0/topstories.json).

- Request Top Stories IDs:

- requests.get(top_stories_url) fetches the IDs of the top stories from the API.

- response.json() converts the response to a list of story IDs.

- The top top_n story IDs are sliced from this list.

- Fetch Story Details:

- For each story_id, a second API call is made to retrieve the details of each story using the item URL: https://hacker-news.firebaseio.com/v0/item/{story_id}.json.

- story_response.json() returns the data of each individual story.

- From this data, the story title and URL are extracted using .get() (with default values if the fields are missing).

- These story details (title and URL) are appended to a list top_stories.

- Return JSON String:

- Finally, json.dumps(top_stories) converts the list of story dictionaries into a JSON-formatted string and returns it.

- Error Handling:

- requests.exceptions.RequestException is caught in case of HTTP errors, and the function prints an error message and returns an empty list.
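One detail worth noticing in the process above is that the success path returns a JSON string while the error path returns a Python list. The sketch below shows one way to keep the return type consistent. It is an illustrative variant rather than the original implementation: `top_stories_json`, the injected `get_json` callable, and the `FAKE_API` data are assumptions introduced so the example runs offline.

```python
import json
from typing import Any, Callable

HN_BASE = "https://hacker-news.firebaseio.com/v0"


def top_stories_json(top_n: int, get_json: Callable[[str], Any]) -> str:
    """Return the top stories as a JSON string; on any failure, return "[]".

    get_json is a hypothetical injected callable that fetches a URL and
    returns the decoded JSON body, raising an exception on failure.
    """
    try:
        story_ids = get_json(f"{HN_BASE}/topstories.json")[:top_n]
        stories = []
        for story_id in story_ids:
            data = get_json(f"{HN_BASE}/item/{story_id}.json")
            stories.append({
                "title": data.get("title", "No title"),
                "url": data.get("url", "No URL available"),
            })
        return json.dumps(stories)
    except Exception as exc:  # deliberately broad for this sketch
        print(f"An error occurred: {exc}")
        return json.dumps([])


# Offline stand-in for the API so the sketch runs without network access.
FAKE_API = {
    f"{HN_BASE}/topstories.json": [101, 102, 103],
    f"{HN_BASE}/item/101.json": {"title": "Story A", "url": "https://example.com/a"},
    f"{HN_BASE}/item/102.json": {"title": "Story B"},
}


def fake_get_json(url: str) -> Any:
    return FAKE_API[url]  # raises KeyError for unknown URLs


print(json.loads(top_stories_json(2, fake_get_json)))
print(json.loads(top_stories_json(3, fake_get_json)))  # item 103 is missing, so []
```

In real use, `get_json` could wrap `requests.get(url, timeout=10)` followed by `raise_for_status()` and `.json()`, so that a slow or failing endpoint does not hang the agent.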

json.loads(fetch_top_hacker_news_stories(top_n=5))

hn_tool = tool(fetch_top_hacker_news_stories)

hn_tool.name

json.loads(hn_tool.fn_signature)

Tool Definition

- hn_tool = tool(fetch_top_hacker_news_stories): This line registers the fetch_top_hacker_news_stories function as a “tool” by wrapping it inside the tool object.

- hn_tool.name: This returns the name of the tool. The name is not set explicitly in this code, so it is presumably derived from the wrapped function.

- json.loads(hn_tool.fn_signature): This decodes the function signature of the tool (likely describing the function’s input and output structure).
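To make the idea of a function signature concrete, here is a rough sketch of how a wrapper could derive one with the standard `inspect` module. This is not the code from the agentic_patterns package; `build_signature` and the JSON layout are assumptions chosen for illustration.

```python
import inspect
import json


def build_signature(fn) -> str:
    """Describe a function's name, docstring, and parameter types as a JSON string."""
    params = inspect.signature(fn).parameters
    return json.dumps({
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {
            # Use the annotation's class name, e.g. int -> "int".
            name: {"type": getattr(p.annotation, "__name__", str(p.annotation))}
            for name, p in params.items()
        },
    })


def add(a: int, b: int) -> int:
    """Add two integers."""
    return a + b


print(json.loads(build_signature(add)))
```

A description like this is what gets placed in the prompt so the model knows which tools exist, what they do, and which arguments they expect, which is why accurate docstrings and type hints matter for tool functions.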

The ToolAgent

tool_agent = ToolAgent(tools=[hn_tool])
output = tool_agent.run(user_msg="Tell me your name")
print(output)

I don't have a personal name. I am an AI assistant designed to provide information and assist with tasks.

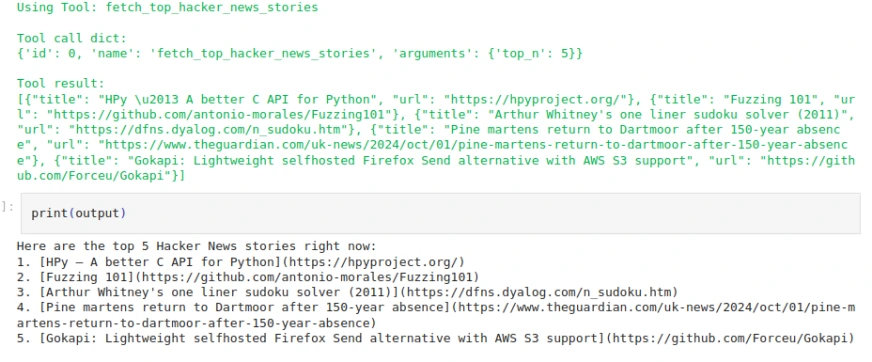

output = tool_agent.run(user_msg="Tell me the top 5 Hacker News stories right now")

- tool_agent = ToolAgent(tools=[hn_tool]): A ToolAgent instance is created with the Hacker News tool (hn_tool). The agent is designed to manage tools and interact with users based on their requests.

- output = tool_agent.run(user_msg="Tell me your name"): The run method takes the user message (in this case, “Tell me your name”) and decides whether any of the available tools is needed to respond. No tool applies here, so the agent answers directly: “I don’t have a personal name. I am an AI assistant designed to provide information and assist with tasks.”

- output = tool_agent.run(user_msg="Tell me the top 5 Hacker News stories right now"): This user message requests the top 5 Hacker News stories. The agent uses the hn_tool to fetch and return the requested stories.
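The two calls above show the two paths an agent can take: answer directly, or call a tool first. The sketch below imitates that branching with a rule-based stand-in for the LLM. Everything in it (`stub_model`, `run_agent`, the `get_time_of_day` tool, and the JSON call format) is hypothetical and is not how ToolAgent is implemented.

```python
import json


def get_time_of_day() -> str:
    """Hypothetical tool; returns a fixed value so the example is deterministic."""
    return "morning"


TOOLS = {"get_time_of_day": get_time_of_day}


def stub_model(user_msg: str) -> str:
    """Stand-in for an LLM: emits a JSON tool call or a plain-text answer."""
    if "time" in user_msg.lower():
        return json.dumps({"tool": "get_time_of_day", "arguments": {}})
    return "I am an assistant without a personal name."


def run_agent(user_msg: str) -> str:
    """Route the model's reply: run the requested tool, or pass plain text through."""
    reply = stub_model(user_msg)
    try:
        call = json.loads(reply)
    except json.JSONDecodeError:
        return reply  # plain text, so no tool is needed
    if not isinstance(call, dict) or call.get("tool") not in TOOLS:
        return reply  # valid JSON, but not a call to a known tool
    result = TOOLS[call["tool"]](**call.get("arguments", {}))
    return f"Tool {call['tool']} returned: {result}"


print(run_agent("Tell me your name"))
print(run_agent("What time of day is it?"))
```

A real agent would usually send the tool result back to the model to phrase the final answer; that second model call is omitted here to keep the sketch short.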

Output

Code Flow

- The user asks the agent to get the top stories from Hacker News.

- The fetch_top_hacker_news_stories function is called to make requests to the Hacker News API and retrieve story details.

- The tool (wrapped function) is registered in the ToolAgent, which handles user requests.

- When the user asks for the top stories, the agent triggers the tool and returns the result.

- Efficiency and Speed: Because each tool is specialized, queries can be processed faster than if a single AI model were responsible for everything. The modular nature means updates or improvements can be applied to specific tools without affecting the entire system.

- Scalability: As the system grows, more tools can be added to handle an expanding range of tasks without compromising the efficiency or reliability of the system.

- Flexibility: The AI system can switch between tools dynamically depending on the user’s needs, allowing for highly flexible problem-solving. This modularity also enables the integration of new technologies as they emerge, improving the system over time.

- Real-time Adaptation: By querying real-time information sources, the tools remain current with the latest data, offering up-to-date responses for knowledge-intensive tasks.

The way an agentic AI uses tools reveals key aspects of its autonomy and problem-solving capabilities. Here’s how they connect:

1. Pattern Recognition and Decision-Making

Agentic AI systems often rely on tool selection patterns to make decisions. For instance, based on the problem it faces, the AI needs to recognise which tools are most appropriate. This requires pattern recognition, decision-making, and a level of understanding of both the tools and the task at hand. Tool use patterns indicate how well the AI can analyze and break down a task.

Example: A natural language AI assistant might decide to use a translation tool if it detects a language mismatch or a calendar tool when a scheduling task is required.

2. Autonomous Execution of Actions

One hallmark of agentic AI is its ability to autonomously execute actions after selecting the right tools. It doesn’t need to wait for human input to choose the correct tool. The pattern in which these tools are used demonstrates how autonomous the AI is in completing tasks.

For instance, if a weather forecasting AI autonomously selects a data scraping tool to gather up-to-date weather reports and then uses an internal modeling tool to generate predictions, it’s demonstrating a high degree of autonomy through its tool use pattern.

3. Learning from Tool Usage

Agentic AI systems may employ reinforcement learning or similar techniques to refine their tool use patterns over time. By tracking which tools successfully achieved goals or solved problems, the AI can adapt and optimise its future behaviour. This self-improvement cycle is essential for increasingly agentic systems.

For instance, an AI system might learn that a certain computation tool is more effective for solving specific types of optimisation problems and will adapt its future behaviour accordingly.
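The idea of tracking which tools succeed can be illustrated with simple bookkeeping. The sketch below is far simpler than reinforcement learning and is only meant to show the feedback loop; the `ToolStats` class and the tool names `solver_a` and `solver_b` are hypothetical.

```python
from collections import defaultdict


class ToolStats:
    """Track per-tool outcomes and prefer the tool with the best success rate."""

    def __init__(self):
        self.successes = defaultdict(int)
        self.attempts = defaultdict(int)

    def record(self, tool_name: str, succeeded: bool) -> None:
        """Log one use of a tool and whether it achieved the goal."""
        self.attempts[tool_name] += 1
        if succeeded:
            self.successes[tool_name] += 1

    def success_rate(self, tool_name: str) -> float:
        """Fraction of successful uses; 0.0 for a tool that was never tried."""
        tried = self.attempts[tool_name]
        return self.successes[tool_name] / tried if tried else 0.0

    def best_tool(self, candidates):
        """Pick the candidate with the highest observed success rate."""
        return max(candidates, key=self.success_rate)


stats = ToolStats()
history = [("solver_a", True), ("solver_a", False), ("solver_b", True), ("solver_b", True)]
for name, ok in history:
    stats.record(name, ok)

print(stats.best_tool(["solver_a", "solver_b"]))  # solver_b
```

Always picking the current best tool never revisits the alternatives, so a practical system would likely add some exploration; that is left out here for brevity.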

4. Multi-Tool Coordination

An advanced agentic AI doesn’t just use a single tool at a time but may coordinate the use of multiple tools to achieve more complex objectives. This pattern of multi-tool use reflects a deeper understanding of task complexity and how to manage resources effectively.

Example: An AI performing medical diagnosis might pull data from a patient’s health records (using a database tool), run symptoms through a diagnostic AI model, and then use a communication tool to report findings to a healthcare provider.
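The coordination in this example amounts to passing each tool’s output to the next. Here is a toy sketch of that chaining, with three hypothetical stand-in functions and canned data; the rule inside `suggest_condition` is a placeholder and carries no medical meaning.

```python
def fetch_record(patient_id: str) -> dict:
    """Hypothetical database tool; returns canned data."""
    return {"patient_id": patient_id, "symptoms": ["fever", "cough"]}


def suggest_condition(symptoms: list) -> str:
    """Hypothetical diagnostic tool; a toy rule, not medical logic."""
    return "possible flu" if {"fever", "cough"} <= set(symptoms) else "unclear"


def format_report(patient_id: str, finding: str) -> str:
    """Hypothetical communication tool; formats a message for a clinician."""
    return f"Patient {patient_id}: {finding}"


def run_pipeline(patient_id: str) -> str:
    """Chain the three tools: retrieve the record, analyse it, then report."""
    record = fetch_record(patient_id)
    finding = suggest_condition(record["symptoms"])
    return format_report(record["patient_id"], finding)


print(run_pipeline("p-001"))  # Patient p-001: possible flu
```

In an agentic system the order of these calls would be chosen by the model rather than fixed in code, but the data flow between tools looks much the same.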

The more diverse an AI’s tool use patterns, the more flexible and generalisable it tends to be. Systems that can effectively apply various tools across domains are more agentic because they aren’t limited to a predefined task. These patterns also suggest the AI’s capability to abstract and generalise knowledge from its tool-based interactions, which is key to achieving true agency.

As AI systems evolve, their ability to dynamically acquire and integrate new tools will further enhance their agentic qualities. Current AI systems usually have a pre-defined set of tools. Future agentic AI might autonomously find, adapt, or even create new tools as needed, further deepening the connection between tool use and agency.


Conclusion

The Tool Use Pattern in Agentic AI allows large language models (LLMs) to go beyond their inherent limitations by interacting with external tools, enabling them to perform tasks beyond simple text generation based on pre-trained knowledge. This pattern shifts AI from relying solely on static data to dynamically accessing real-time information and performing specialised actions, such as running simulations, retrieving live data, or executing code.

The core idea is to modularise tasks by assigning them to specialised tools (e.g., fact-checking, solving equations, or language translation), which results in greater efficiency, flexibility, and scalability. Instead of a monolithic AI handling all tasks, Agentic AI leverages multiple tools, each designed for specific functionalities, leading to faster processing and more effective multitasking.

The Tool Use Pattern highlights key features of Agentic AI, such as decision-making, autonomous action, learning from tool usage, and multi-tool coordination. These capabilities enhance the AI’s autonomy and problem-solving potential, allowing it to handle increasingly complex tasks independently. The system can even adapt its behaviour over time by learning from successful tool usage and optimizing its performance. As AI continues to evolve, its ability to integrate and create new tools will further deepen its autonomy and agentic qualities.

I hope you found this article informative. In the next article of the “Agentic AI Design Pattern” series, we will talk about the Planning Pattern.


Frequently Asked Questions

Ans. The Agentic AI Tool Use Pattern refers to the way AI tools are designed to operate autonomously, taking initiative to complete tasks without requiring constant human intervention. It involves AI systems acting as “agents” that can independently decide the best actions to achieve specified goals.

Ans. Unlike traditional AI that follows pre-programmed instructions, agentic AI can make decisions, adapt to new information, and execute tasks based on goals rather than fixed scripts. This autonomy allows it to handle more complex and dynamic tasks.

Ans. A common example is using a web search tool to answer real-time queries. For instance, if asked about the latest stock market trends, an LLM can use a tool to perform a live web search, retrieve current data, and provide accurate, timely information, instead of relying solely on static, pre-trained data.

Ans. Modularization allows tasks to be broken down and assigned to specialized tools, making the system more efficient and scalable. Each tool handles a specific function, like fact-checking or mathematical computations, ensuring tasks are processed faster and more accurately than a single, monolithic model.

Ans. The pattern enhances efficiency, scalability, and flexibility by enabling AI to dynamically select and use different tools. It also allows for real-time adaptation, helping keep responses up-to-date and accurate. Furthermore, it promotes learning from tool usage, leading to continuous improvement and better problem-solving abilities.