A major challenge in AI-powered game simulation is accurately simulating complex interactive environments in real time with neural models. Traditional game engines rely on hand-written loops that collect user input, update the game state, and render frames at high frame rates, which is crucial to maintaining the illusion of an interactive virtual world. Replicating this process with neural models is particularly difficult: the model must preserve visual fidelity, remain stable over long sequences, and still meet real-time performance requirements. Addressing these challenges is essential to advancing AI capabilities in game development, and could pave the way for a new paradigm in which game engines are powered by neural networks rather than manually written code.
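For contrast, the hand-written loop that a traditional engine runs can be sketched in a few lines. This is a minimal illustration, not code from any real engine: `GameState`, `read_input`, `update`, `render`, and `run_game_loop` are hypothetical names, input is replayed from a scripted list instead of being polled from a device, and "rendering" just draws a character on a text track. A real engine would also pace the loop to a target frame rate.

```python
from dataclasses import dataclass


@dataclass
class GameState:
    x: int = 0      # hypothetical player position on a 1-D track
    frame: int = 0  # number of frames simulated so far


def read_input(scripted_actions, t):
    # Stand-in for polling a keyboard or controller: replay a scripted action list.
    return scripted_actions[t] if t < len(scripted_actions) else "noop"


def update(state, action):
    # Hand-written game logic: move left or right, otherwise stay put.
    dx = {"left": -1, "right": 1}.get(action, 0)
    return GameState(x=state.x + dx, frame=state.frame + 1)


def render(state):
    # Stand-in for rasterizing a frame: draw the player on a 10-cell track,
    # clamping the drawn position to the visible cells.
    track = ["."] * 10
    track[max(0, min(9, state.x))] = "@"
    return "".join(track)


def run_game_loop(actions, n_frames):
    # The classic input -> update -> render cycle, repeated once per frame.
    state = GameState()
    frames = []
    for t in range(n_frames):
        action = read_input(actions, t)
        state = update(state, action)
        frames.append(render(state))
    return state, frames
```

Calling `run_game_loop(["right", "right", "left"], 3)` leaves the player at position 1 and returns three rendered frames. Roughly speaking, a neural game engine aims to replace the `update` and `render` steps with a learned model that maps past frames and actions directly to the next frame.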

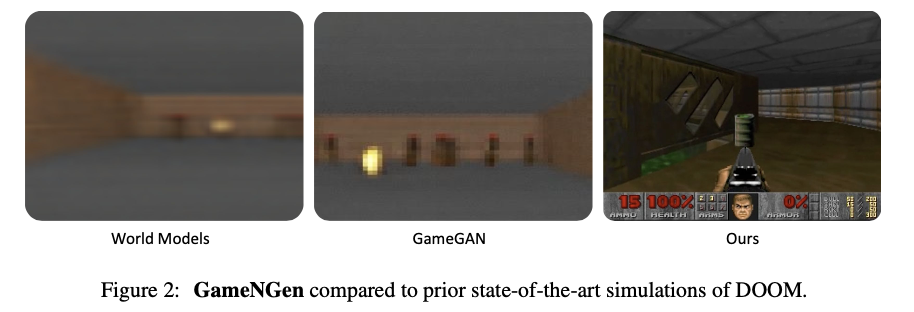

Current approaches to simulating interactive environments with neural models include methods based on reinforcement learning (RL) and diffusion models. Techniques such as World Models by Ha and Schmidhuber (2018) and GameGAN by Kim et al. (2020) have been developed to simulate game environments with neural networks. However, these methods face significant limitations, including high computational costs, instability over long trajectories, and poor visual quality. GameGAN, for example, is effective for simpler games but struggles with complex environments such as DOOM, often producing blurry, low-quality images. These shortcomings make such methods less suitable for real-time applications and limit their usefulness in more demanding game simulations.

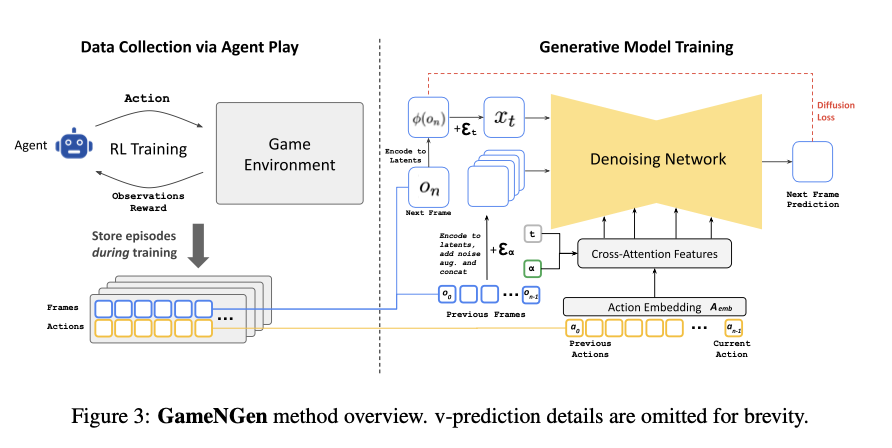

Researchers from Google and Tel Aviv University present GameNGen, a novel approach that uses an augmented version of the Stable Diffusion v1.4 model to simulate complex interactive environments, such as the game DOOM, in real time. GameNGen overcomes the limitations of existing methods with a two-phase training process: first, an RL agent is trained to play the game, generating a dataset of gameplay trajectories; second, a generative diffusion model is trained on these trajectories to predict the next frame of gameplay from past actions and observations. This approach applies diffusion models to game simulation, enabling high-quality, stable, real-time interactive experiences. GameNGen represents a significant advancement in AI-powered game engines, demonstrating that a neural model can closely match the visual quality of the original game while running interactively.
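At inference time, the second phase amounts to an autoregressive loop: the model receives a window of recent frames and actions, predicts the next frame, and that prediction is fed back as context. The sketch below illustrates only this control flow. `dummy_predictor` is a hypothetical stand-in for the diffusion model (it simply shifts the latest frame by the latest action), and `CONTEXT_LEN = 4` is an arbitrary illustrative value, not the context length used by GameNGen.

```python
from collections import deque

import numpy as np

CONTEXT_LEN = 4  # hypothetical context length, chosen only for illustration


def dummy_predictor(past_frames, past_actions):
    # Hypothetical stand-in for the diffusion model: shift the most recent
    # frame horizontally by the most recent action (-1, 0, or +1),
    # wrapping around at the edges.
    return np.roll(past_frames[-1], shift=past_actions[-1], axis=1)


def simulate(first_frame, actions, predictor=dummy_predictor, context_len=CONTEXT_LEN):
    # Bounded windows: only the last `context_len` frames/actions are kept.
    frames = deque([first_frame], maxlen=context_len)
    past_actions = deque(maxlen=context_len)
    rollout = []
    for action in actions:
        past_actions.append(action)
        next_frame = predictor(list(frames), list(past_actions))
        frames.append(next_frame)  # the model's own output becomes future context
        rollout.append(next_frame)
    return rollout
```

Because every predicted frame re-enters the context window, any error the model makes is carried forward into later predictions, which is why autoregressive drift becomes a concern for this kind of loop.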

The development of GameNGen involves a two-stage training process. First, an RL agent is trained to play DOOM, producing a diverse set of gameplay trajectories. These trajectories are then used to train a generative diffusion model, a modified version of Stable Diffusion v1.4, to predict subsequent frames from action sequences and past observations. Training uses velocity parameterization to minimize the diffusion loss and optimize frame-sequence predictions. To counter autoregressive drift, in which errors accumulate and frame quality degrades over time as the model conditions on its own outputs, noise augmentation is applied to the context frames during training. Additionally, the researchers fine-tuned the latent decoder to improve image quality, particularly for the game's HUD (heads-up display). The setup uses a VizDoom environment and a dataset of 900 million frames, with a batch size of 128 and a learning rate of 2e-5.
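Two of the training ingredients above can be written out concretely. The sketch assumes a variance-preserving noise schedule (noisy latent `z_t = alpha * x0 + sigma * eps` with `alpha**2 + sigma**2 == 1`), under which the velocity target is `v = alpha * eps - sigma * x0` and the clean latent can be recovered as `alpha * z_t - sigma * v`. The noise-augmentation helper corrupts the context frames with Gaussian noise at a randomly sampled level and returns that level's bucket index so the model can be conditioned on it; `max_level=0.7` and `n_buckets=10` are illustrative assumptions, and all function names are hypothetical rather than taken from GameNGen's code.

```python
import numpy as np


def v_target(x0, eps, alpha, sigma):
    # Velocity (v) parameterization target, assuming a variance-preserving
    # schedule where z_t = alpha * x0 + sigma * eps and alpha**2 + sigma**2 == 1.
    return alpha * eps - sigma * x0


def x0_from_v(z_t, v, alpha, sigma):
    # Recover the clean latent from the noisy latent and a v prediction
    # (exact under the same variance-preserving assumption).
    return alpha * z_t - sigma * v


def augment_context(context, rng, max_level=0.7, n_buckets=10):
    # Noise augmentation for past-frame context (illustrative values):
    # sample a discrete noise level, corrupt the context with Gaussian noise
    # of that scale, and return the bucket index so the model can be told
    # how noisy its inputs are.
    bucket = int(rng.integers(0, n_buckets))
    level = max_level * bucket / (n_buckets - 1)
    noisy = context + level * rng.standard_normal(context.shape)
    return noisy, bucket
```

In training, the model's output would be compared with `v_target(...)` through a mean-squared-error loss, while `augment_context` teaches the model to tolerate imperfect context, such as the slightly wrong frames it sees when it conditions on its own predictions.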

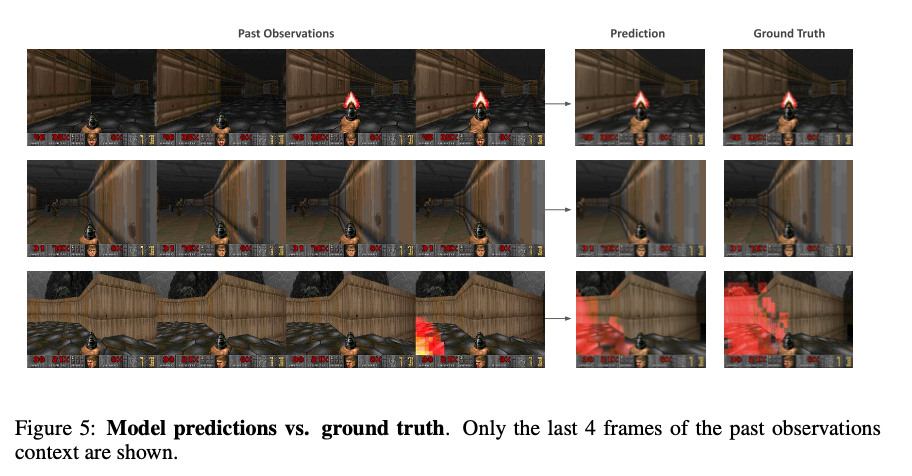

GameNGen demonstrates impressive simulation quality, producing frames nearly indistinguishable from the original DOOM game, even over long sequences. The model achieves a peak signal-to-noise ratio (PSNR) of 29.43 dB, comparable to lossy JPEG compression, and a low learned perceptual image patch similarity (LPIPS) score of 0.249, indicating high visual fidelity. Output quality holds up across long trajectories, with minimal degradation over time. The approach also preserves game logic and visual consistency, simulating complex gameplay scenarios in real time at 20 frames per second. These results underscore the model's ability to deliver stable, high-quality real-time game simulation, a significant advancement in the use of AI for interactive environments.

GameNGen represents a major advancement in AI-driven game simulation, demonstrating that complex interactive environments like DOOM can be simulated by a real-time neural model while maintaining high visual quality. The method addresses critical challenges in the field by combining RL and diffusion models to overcome the limitations of previous approaches. Running at 20 frames per second on a single TPU while delivering visuals on par with the original game, GameNGen signals a potential shift toward a new era of game development in which games are created and controlled by neural models instead of traditional code-based engines. This innovation could make game development more accessible and cost-effective.

Take a look at the Paper and Project. All credit for this research goes to the researchers of this project.

Aswin AK is a Consulting Intern at MarkTechPost. He is pursuing a dual degree at the Indian Institute of Technology, Kharagpur. He is passionate about data science and machine learning and brings a strong academic background and hands-on experience in solving real-world interdisciplinary challenges.
