Since prehistoric times, humans have used sketches to convey and document ideas. Even in the presence of language, his capacity for expressiveness remains unmatched. Consider the times when you feel the need to turn to pen and paper (or a Zoom whiteboard) to sketch out an idea.

In the last decade, sketch research has experienced significant growth. A wide range of studies have covered various aspects, including traditional tasks such as classification and synthesis, as well as more sketch-specific topics such as visual abstraction modeling, style transfer, and continuous stroke adjustment. In addition, there have been fun and practical applications, such as turning sketches into photo sorters.

However, exploration of sketch expressiveness has mainly focused on sketch-based image retrieval (SBIR), particularly the fine-grained variant (FGSBIR). For example, let’s say you’re looking for the image of a specific dog in his collection, sketching its image in your mind can help you find it faster.

Notable progress has been made and recent systems have reached a level of maturity suitable for commercial use.

In the research paper reported in this article, the authors explore the potential of human sketches to improve fundamental vision tasks, with a particular focus on object detection. The summary of the proposed approach is presented in the following figure.

The goal is to develop a sketch-enabled object detection framework that detects objects based on sketch content, allowing users to express themselves visually. For example, when a person sketches a scene such as a “zebra eating grass”, the proposed framework should be able to detect that specific zebra among a herd of zebras, using instance-aware detection. In addition, it will allow users to be specific about the parts of objects, thus enabling part-aware detection. Therefore, if someone wants to focus solely on the “head” of the “zebra”, they can draw the head of the zebra to achieve the desired result.

Rather than developing a sketch-enabled object detection model from scratch, the researchers demonstrate seamless integration between basic models, such as CLIP, and readily available SBIR models, which elegantly address the problem. This approach takes advantage of the strengths of CLIP for model generalization and SBIR for bridging the gap between sketches and photos.

To achieve this, the authors adapt CLIP to create sketch and photo coders (branches within a shared SBIR model) by training independent warning vectors separately for each modality. During training, these request vectors are added to the input sequence of the first layer of the CLIP ViT backbone transformer, while the remaining parameters are kept frozen. This integration introduces the generalization of the model in the learned photo and sketch distributions.

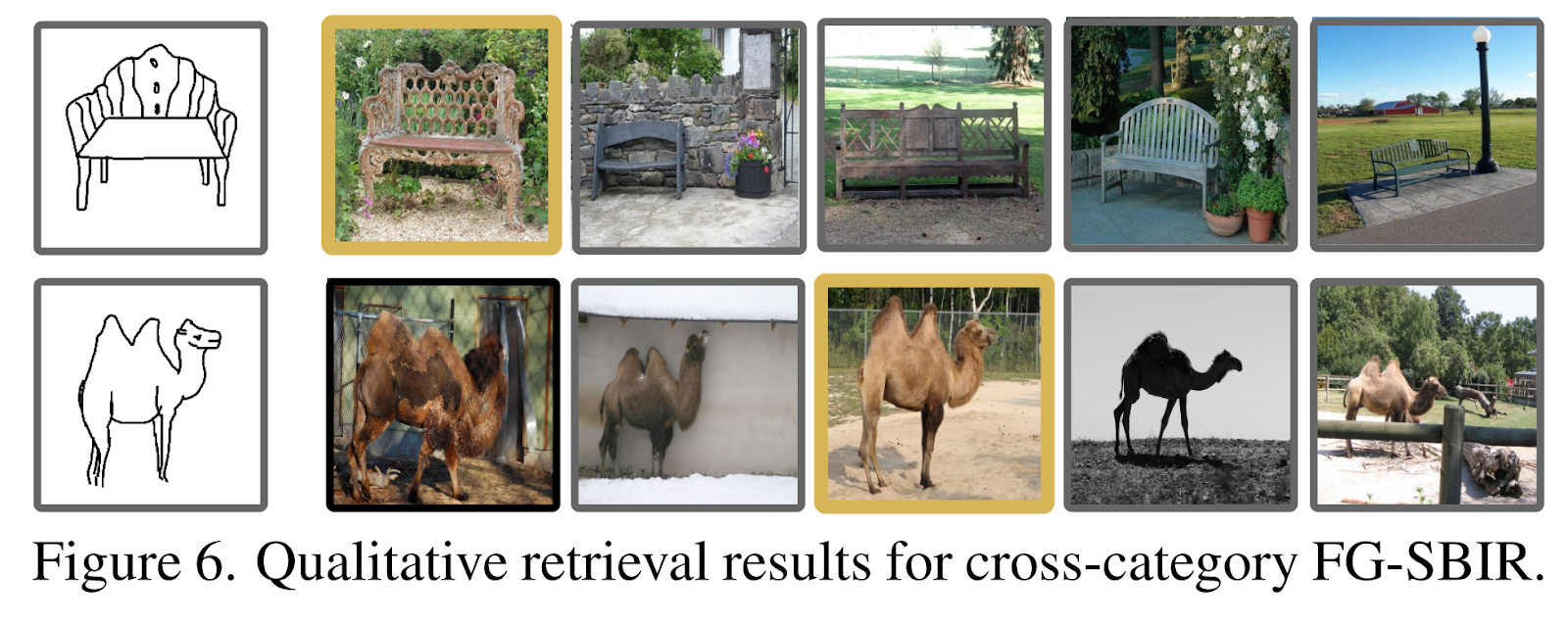

Some specific results of the retrieval task for cross-category FG-SBIR are reported below.

This was the brief for a new AI technique for sketch-based image retrieval. If you are interested and would like to learn more about this job, you can find out more by clicking on the links below.

review the Paper. Don’t forget to join our 26k+ ML SubReddit, discord channel, and electronic newsletter, where we share the latest AI research news, exciting AI projects, and more. If you have any questions about the article above or if we missed anything, feel free to email us at [email protected]

🚀 Check out 900+ AI Tools at the AI Tools Club

![]()

Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He currently works at the Christian Doppler ATHENA Laboratory and his research interests include adaptive video streaming, immersive media, machine learning and QoS / QoE evaluation.