Transformers are neural network architectures that learn context by tracking relationships in sequential data, such as the words in a sentence. They were developed to solve the problem of sequence transduction, that is, transforming an input sequence into an output sequence, as when translating from one language into another.

Before Transformers, recurrent neural networks (RNNs) were the usual deep learning approach to understanding text. Suppose we want to translate the following sentence into Japanese: “Linkin Park is an American rock band. The band was formed in 1996.” An RNN would take this sentence as input, process it word by word, and sequentially output the Japanese counterpart of each word. This can lead to grammatical errors, because word order matters and differs from one language to another.

Another problem with RNNs is that they are difficult to train and hard to parallelize, since they process words sequentially. This is where Transformers came into the picture. The first model was developed by researchers at Google and the University of Toronto in 2017 for text translation. Transformers can be efficiently parallelized and trained on very large data sets (GPT-3 was trained on 45TB of data).

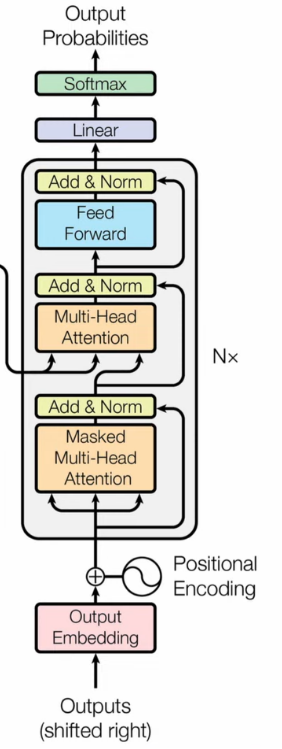

In the figure above, the left side shows the encoder block and the right side shows the decoder block.

The original Transformer consists of six structurally identical encoders and six structurally identical decoders. Each encoder has two layers: a self-attention layer and a feed-forward neural network. Each decoder has both of these layers, but between them sits an additional attention layer that helps the decoder focus on the relevant parts of the input.

Let’s understand how a Transformer works by considering the translation of a sentence from English to French.

Encoder block

Before the words are passed in as input, an input embedding converts each word into an n-dimensional vector, and positional encodings are added to it. Positional encodings help the model understand the position of each word in the sentence.
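As a rough illustration of this step, here is a minimal NumPy sketch. It assumes the sinusoidal positional encodings used in the original 2017 Transformer (other schemes exist), and the embedding values, the sentence length of 5, and the vector size of 8 are made-up toy numbers.

```python
import numpy as np

def positional_encoding(seq_len: int, d_model: int) -> np.ndarray:
    """Sinusoidal positional encodings, shape (seq_len, d_model).

    Assumes d_model is even (sine on even indices, cosine on odd ones).
    """
    positions = np.arange(seq_len)[:, None]        # (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]  # (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

# Toy stand-in for learned word embeddings: 5 words, 8 dimensions each.
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(5, 8))

pe = positional_encoding(5, 8)
encoder_input = embeddings + pe  # what the first encoder layer receives
```

Because every position gets a different pattern of sines and cosines, two identical words at different positions end up with different input vectors.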

The self-attention part focuses on how relevant a word is to the other words in the sentence. For each word, we can create an attention vector that captures its relationship to every other word in the sentence.

In the figure above, the lighter the color of a square, the more attention the model pays to that word. Suppose the model has to translate the sentence “The agreement on the European Economic Area was signed in August 1992” into French. When it translates the word “agreement,” it focuses on the French word “accord.” The model also attends correctly when translating “European Economic Area”: in French, the order of these words is reversed (“zone économique européenne”) compared to English.
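The attention weights described above can be sketched in a few lines of NumPy. This is a minimal sketch that assumes the scaled dot-product form of attention from the original Transformer; the matrices `w_q`, `w_k`, and `w_v` are random stand-ins for learned weights, and the sizes are toy values.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over one sentence.

    x: (seq_len, d_model) word vectors. Returns (output, weights).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # relevance of every word pair
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))  # 4 words, 8 dimensions (toy sizes)
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))
output, weights = self_attention(x, w_q, w_k, w_v)
```

Row `i` of `weights` is the attention vector for word `i`: it says how much each word in the sentence contributes to that word's new representation.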

With a single attention vector, the model tends to weight each word much more heavily on itself than on its relationships with the other words in the sentence. To address this, several attention vectors are computed for each word and then combined, much like a weighted average, into the final attention vector for that word. This is known as a multi-headed attention block, since it uses multiple attention vectors to capture the meaning of a sentence.
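The idea of several attention vectors per word can be sketched as follows. Note one assumption: in the original Transformer the heads are concatenated and passed through a learned linear projection (called `w_o` here), which plays the role of the weighted combination. All weights below are random toy values, and `num_heads=2` is arbitrary.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Multi-head self-attention for one sentence of shape (seq_len, d_model)."""
    seq_len, d_model = x.shape
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads

    def split_heads(m):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return m.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, seq, seq)
    heads = softmax(scores, axis=-1) @ v                 # (heads, seq, d_head)
    # Concatenate the heads and mix them with the output projection.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))
w_q, w_k, w_v, w_o = (rng.normal(size=(8, 8)) for _ in range(4))
mha_out = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads=2)
```

Each head works on its own slice of the vector, so different heads are free to pick up different relationships between words.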

The next step is the feed-forward neural network. It is applied to each attention vector to transform it into a form acceptable to the next encoder or decoder layer. The feed-forward network processes the attention vectors independently: unlike in an RNN, no vector has to wait for the previous one. This means the computation can be parallelized, which makes a big difference.
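To make the independence claim concrete, here is a small sketch of a position-wise feed-forward network. It assumes the common two-layer form with a ReLU in between; the weights and the sizes `d_model = 8` and `d_ff = 32` are toy values chosen for illustration.

```python
import numpy as np

def feed_forward(x, w1, b1, w2, b2):
    """Two linear layers with a ReLU in between, applied per word vector."""
    hidden = np.maximum(0.0, x @ w1 + b1)  # ReLU activation
    return hidden @ w2 + b2

rng = np.random.default_rng(0)
d_model, d_ff = 8, 32
w1, b1 = rng.normal(size=(d_model, d_ff)), np.zeros(d_ff)
w2, b2 = rng.normal(size=(d_ff, d_model)), np.zeros(d_model)

x = rng.normal(size=(4, d_model))  # 4 attention vectors

# Processing all vectors in one matrix multiplication...
all_at_once = feed_forward(x, w1, b1, w2, b2)
# ...gives the same result as processing them one at a time.
one_by_one = np.stack([feed_forward(row, w1, b1, w2, b2) for row in x])
```

Since the two results match, the vectors can be handled in a single batched operation instead of a word-by-word loop, which is what makes this step easy to parallelize.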

Decoder block

To train the model, we feed the French translation into the decoder block. The embedding and positional encoding layers transform each word into its respective vector.

The input is then passed through the masked multi-headed attention block, where attention vectors are generated for each word in the French sentence to determine the relevance of each word to the other words in the sentence. The model compares its own translation of the English words so far with the French translation fed into the decoder. By comparing the two, the model updates its weight matrices and continues to learn over multiple iterations.

To ensure that the model is learning effectively, the next French word is hidden. The model has to predict it from the previous words instead of seeing the correct translation. That is why it is called a “masked” multi-headed attention block.
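One common way to hide the upcoming words is a causal mask applied to the attention scores before the softmax. The sketch below assumes that approach; the all-zero `scores` matrix is a toy input chosen so the effect of the mask is easy to read.

```python
import numpy as np

def causal_mask(seq_len: int) -> np.ndarray:
    """True where position i would look at a later position j > i."""
    return np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)

def masked_softmax(scores: np.ndarray, mask: np.ndarray) -> np.ndarray:
    # Masked positions get -inf, so they receive exactly zero weight.
    scores = np.where(mask, -np.inf, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)
    e = np.exp(scores)
    return e / e.sum(axis=-1, keepdims=True)

# Toy scores for a 4-word French sentence: every pair equally relevant.
scores = np.zeros((4, 4))
weights = masked_softmax(scores, causal_mask(4))
```

With these toy scores, the first word can only attend to itself, the second word splits its attention evenly between the first two words, and so on. No word ever receives weight from a word that comes after it.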

Next, the vectors from the first attention block and the vectors from the encoder block are passed through another multi-headed attention block. This block is where the actual mapping of English words to French words happens. The output is an attention vector for each word in the English and French sentences.
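This second attention block can be sketched as follows, assuming the usual arrangement in which the queries come from the decoder and the keys and values come from the encoder output. The weights are random stand-ins, and the sentence lengths (6 English words, 3 French words so far) are made up for the example.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(decoder_states, encoder_states, w_q, w_k, w_v):
    """Each decoder (French) position attends over all encoder (English) positions."""
    q = decoder_states @ w_q  # queries from the French side
    k = encoder_states @ w_k  # keys from the English side
    v = encoder_states @ w_v  # values from the English side
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]), axis=-1)
    return weights @ v, weights

rng = np.random.default_rng(0)
encoder_states = rng.normal(size=(6, 8))  # 6 English words (toy)
decoder_states = rng.normal(size=(3, 8))  # 3 French words so far (toy)
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))
context, weights = cross_attention(decoder_states, encoder_states, w_q, w_k, w_v)
```

The `weights` matrix has one row per French word and one column per English word, which is the kind of English-to-French alignment this block learns.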

Each attention vector then goes to a feed-forward unit, which converts it into a form readily acceptable to a linear layer or to another decoder block. A linear layer then expands the vector to as many dimensions as there are words in the French vocabulary.

The output is then passed through a softmax layer that transforms it into a probability distribution, which is human interpretable. The word with the highest probability is produced as the output.

The input runs through all six encoder layers, and the final output is sent to the multi-headed attention layer of every decoder. The masked multi-headed attention layer takes the output of the previous decoder blocks as input. In this way, the decoders take into account both the word from the previous time step and the context of the word from the encoding process.

All decoders work together to create an output vector, which a linear transformation turns into a logits vector. The logits vector has a size equal to the number of words in the vocabulary. This vector is then passed through a softmax function, which tells us how likely each word is to be the next word in the generated sentence. The most likely word is then chosen as the next word.
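The last two steps can be sketched like this. Everything here is hypothetical toy data: the four-word `vocab`, the random `decoder_output`, and the random linear-layer weights `w_vocab` and `b_vocab` only illustrate the shapes involved, and picking the top word (greedy decoding) is just one possible choice.

```python
import numpy as np

# Hypothetical toy vocabulary; a real model has tens of thousands of entries.
vocab = ["<eos>", "le", "chat", "dort"]

def next_word_distribution(decoder_output, w_vocab, b_vocab):
    """Linear layer to logits, then softmax to probabilities."""
    logits = decoder_output @ w_vocab + b_vocab  # one logit per vocabulary word
    exp = np.exp(logits - logits.max())          # stable softmax
    return exp / exp.sum()

rng = np.random.default_rng(0)
decoder_output = rng.normal(size=8)         # final decoder vector (toy)
w_vocab = rng.normal(size=(8, len(vocab)))  # stand-in for learned weights
b_vocab = np.zeros(len(vocab))

probs = next_word_distribution(decoder_output, w_vocab, b_vocab)
predicted = vocab[int(np.argmax(probs))]    # greedy choice of the next word
```

The probabilities sum to 1 across the vocabulary, and the predicted word is simply the entry with the highest probability.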

Transformers are mainly used in natural language processing (NLP) and computer vision (CV). In fact, any sequential text, image, or video data is a candidate for transformer models. Over the years, transformers have been highly successful in language translation, speech recognition, speech translation, and time series prediction. Pretrained models such as GPT-3, BERT, and RoBERTa have demonstrated the potential of transformers in real-world applications such as document summarization, document generation, biological sequence analysis, and video understanding.

In 2020, GPT-2 was shown to be capable of being fine-tuned to play chess. Transformers have also been applied to image processing, with results competitive with convolutional neural networks (CNNs).

Due to their wide adoption in computer vision and language modeling, transformers have begun to be adopted in new domains such as medical imaging and speech recognition.

Researchers are using transformers to gain a deeper understanding of the relationships among genes in DNA and amino acids in proteins, which allows for faster drug design and development. Transformers are also employed in various fields to identify patterns and detect unusual activity in order to prevent fraud, optimize manufacturing processes, suggest personalized recommendations, and improve healthcare. These powerful tools are also commonly used in everyday applications, such as the search engines Google and Bing.

The following figure shows how the different transformers are related to each other and to which family they belong.

Chronological Timeline of Transformers:

Timeline of transformers with y-axis representing their size (in millions of parameters):

The following are some of the more common transformers:

| TRANSFORMER NAME | FAMILY | APPLICATION | YEAR OF PUBLICATION | NUMBER OF PARAMETERS | DEVELOPED BY |
| --- | --- | --- | --- | --- | --- |
| BERT | BERT | General question answering and language understanding | 2018 | Base = 110M, Large = 340M | Google |
| RoBERTa | BERT | General question answering and language understanding | 2019 | 356M | UW/Meta |
| Transformer-XL | – | General language tasks | 2019 | 151M | CMU/Google |
| BART | BERT for encoder and GPT for decoder | Text generation and comprehension | 2019 | 10% more than BERT | Meta |
| T5 | – | General language tasks | 2019 | Up to 11B | Google |
| CTRL | – | Controllable text generation | 2019 | 1.63B | Salesforce |
| GPT-3 | GPT | Text generation, code generation, as well as image and audio generation | 2020 | 175B | OpenAI |
| CLIP | CLIP | Object classification | 2021 | – | OpenAI |
| GLIDE | Diffusion models | Text to image | 2021 | 5B (3.5B diffusion model + 1.5B upsampling model) | OpenAI |
| HTLM | BART | General purpose language model that allows structured HTML prompting | 2021 | 400M | Meta |
| ChatGPT | GPT | Dialog agent | 2022 | 175B | OpenAI |
| DALL-E 2 | CLIP, GLIDE | Text to image | 2022 | 3.5B | OpenAI |
| PaLM | – | General purpose language model | 2022 | 540B | Google |
| DQ-BART | BART | Text generation and comprehension | 2022 | Up to 30 times fewer parameters compared to BART | Amazon |

I am a civil engineering graduate (2022) from Jamia Millia Islamia, New Delhi, and I have a strong interest in data science, especially in neural networks and their applications in various areas.
