Weather is a complex system: small variations at any moment can lead to significant and sometimes unpredictable changes over time, so cracking this chaotic system is no easy feat. For centuries, people have tried all sorts of things to predict the weather, from listening to cricket chirps to looking to the stars for answers. Were those methods practical? Never mind. What if I told you that technology can now tell you when to pack an umbrella or prepare for a hurricane 10-15 days in advance? Sounds great, right? GenCast by Google DeepMind does exactly that.

Technology has given us the upper hand over the natural unpredictability of weather patterns. With the rise of artificial intelligence, we have moved far beyond traditional methods like observing animal behaviour, folklore, or the position of celestial bodies. Google DeepMind has introduced GenCast, an AI weather model that is setting new standards in weather prediction and risk assessment. Published in Nature, this advanced model is designed to provide accurate and detailed weather forecasts, including predictions of extreme conditions, up to 15 days in advance. GenCast’s probabilistic approach and AI-driven architecture mark a significant leap forward in weather forecasting, addressing crucial societal needs ranging from daily life planning to disaster management and renewable energy production.

The Need for Advanced Weather Forecasting

Weather impacts nearly every aspect of human life. From daily decisions at home to agricultural practices to renewable energy production, understanding and predicting weather patterns is critical. Traditionally, weather forecasting has relied on complex physics-based models that require massive computational power to run simulations. These models often take hours on supercomputers to produce predictions. Furthermore, traditional forecasting typically offers a single, deterministic estimate of future weather conditions, which, while useful, cannot express uncertainty and often struggles with extreme weather events. That’s why advanced weather forecasting is crucial.

Google DeepMind’s GenCast: The AI Revolution in Weather Prediction

Google’s GenCast adopts a probabilistic ensemble forecasting approach to address these limitations. Unlike traditional models that provide a single forecast, GenCast generates multiple potential scenarios (over 50 in some cases) to provide a range of possible outcomes, so that the share of scenarios in which an event occurs indicates how likely that event is. This approach not only delivers more accurate predictions but also gives decision-makers a fuller picture of potential weather outcomes, including the level of uncertainty involved.
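To make the idea of an ensemble concrete, here is a minimal sketch of how a set of scenarios can be turned into a probability for an event. The rainfall numbers and the `event_probability` helper are made up for illustration; they are not GenCast output or part of its code.

```python
def event_probability(members, threshold):
    """Fraction of ensemble members whose value exceeds the threshold."""
    if not members:
        raise ValueError("ensemble must contain at least one member")
    exceed = sum(1 for value in members if value > threshold)
    return exceed / len(members)


# Hypothetical 10-member ensemble of rainfall totals (mm) for one location.
rainfall_mm = [0.0, 1.2, 0.4, 6.8, 12.5, 0.0, 3.1, 9.7, 0.2, 5.5]

p_rain = event_probability(rainfall_mm, threshold=5.0)
print(f"P(rain > 5 mm) = {p_rain:.0%}")
```

A single deterministic forecast could only say "rain" or "no rain" here, while the ensemble supports a statement such as "4 of 10 scenarios exceed 5 mm".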

How GenCast Works

GenCast is a diffusion model, a type of machine learning model that also powers recent advances in generative AI, such as image, video, and music generation. However, unlike these applications, GenCast has been specifically adapted to account for the spherical geometry of the Earth, allowing it to predict weather patterns in a globally relevant way.

At its core, GenCast learns from historical weather data and generates predictions of future weather conditions based on this learned knowledge. The model was trained on 40 years of weather data from the European Centre for Medium-Range Weather Forecasts (ECMWF), utilizing variables such as temperature, wind speed, and pressure at various altitudes. This allows GenCast to learn and model global weather patterns at a high resolution (0.25°), which significantly enhances its forecasting ability.

GenCast is a probabilistic weather model designed to generate high-accuracy, global 15-day ensemble forecasts. It operates at a fine resolution of 0.25° and outperforms traditional operational ensemble systems, such as the ECMWF’s ENS, in terms of forecasting accuracy. GenCast works by modeling the conditional probability distribution of future weather states based on the current and past weather conditions.


Key Features of GenCast

Here are the features:

1. Forecast Resolution and Speed:

- Global Coverage at 0.25° resolution: GenCast produces forecasts at a fine-grained resolution of 0.25° latitude-longitude, offering detailed global weather predictions.

- Fast Forecast Generation: Each 15-day forecast is generated in about 8 minutes using a Cloud TPUv5 device. Moreover, an ensemble of such forecasts can be generated in parallel.

2. Probabilistic Approach:

- GenCast models the conditional probability distribution to predict future weather states.

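- The forecast trajectory is generated by conditioning on the initial and previous weather states, and the model iteratively factors the joint distribution over successive states: $p(X^{1:T} \mid X^{0}, X^{-1}) = \prod_{t=1}^{T} p(X^{t} \mid X^{t-1}, X^{t-2})$, where $X^{t}$ is the global weather state at step $t$ and $T = 30$ for a 15-day forecast.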

3. Model Representation:

- Weather State Representation: The global weather state is represented by six surface variables and six atmospheric variables on the 0.25° latitude-longitude grid, with each atmospheric variable defined at 13 vertical pressure levels.

- Forecast Horizon: GenCast generates 15-day forecasts, with predictions made every 12 hours, yielding a total of 30 time steps.

4. Diffusion Model Architecture:

- Generative Model: GenCast is implemented as a conditional diffusion model, which iteratively refines predictions from random noise. This type of model has recently gained traction in generative AI, particularly in tasks involving images, sounds, and videos.

- Autoregressive Process: Starting with an initial noise sample, GenCast refines it step by step, conditioned on the previous two states of the atmosphere, to produce a forecast. The process is autoregressive, meaning each step depends on the output of the previous one. This enables the generation of an entire forecast trajectory.

5. Neural Network Architecture:

- Encoder-Processor-Decoder Design: GenCast applies a sophisticated neural network architecture consisting of three main components:

- Encoder: Maps the input (a noisy candidate state) and the conditioning information from the latitude-longitude grid to an internal representation on an icosahedral mesh.

- Processor: A graph transformer processes the encoded data, where each node in the graph attends to its neighbors on the mesh, helping to capture complex spatial dependencies.

- Decoder: Maps the processed information back to a refined forecast state on the latitude-longitude grid.

6. Training:

- Data: GenCast is trained on 40 years of ERA5 reanalysis data (1979-2018) from the European Centre for Medium-Range Weather Forecasts (ECMWF). This data includes a comprehensive set of atmospheric and surface variables used to train the model.

- Diffusion Model Training: The model uses a standard diffusion model denoising objective, which helps in refining noisy forecast samples into more accurate predictions.

7. Ensemble Forecasting:

- Uncertainty Modeling: When generating forecasts, GenCast incorporates uncertainty in the initial conditions. This is done by perturbing the initial ERA5 reanalysis data with ensemble perturbations from the ERA5 Ensemble of Data Assimilations (EDA). This allows GenCast to generate multiple forecast trajectories, capturing the range of possible future weather scenarios.

8. Evaluation:

- Reanalysis Initialization: For evaluation, GenCast is initialized with ERA5 reanalysis data along with the perturbations from the EDA, ensuring that the initial uncertainty is accounted for. This enables the model to generate a robust ensemble of forecasts, providing a more comprehensive outlook of potential future weather conditions.
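The autoregressive and ensemble ideas from the list above can be sketched in a few lines. This is a toy illustration only: the state is a single number rather than a global grid, and `toy_sample_next` is a hypothetical stand-in for the diffusion sampler, with made-up dynamics and noise levels.

```python
import random

NUM_STEPS = 30   # 15 days at 12-hour steps, as described above
STEP_HOURS = 12


def toy_sample_next(prev, prev_prev, rng):
    """Stand-in for the diffusion sampler: draws X(t) given X(t-1) and X(t-2).

    Hypothetical dynamics (persistence plus a damped trend plus noise),
    used only so the loop has something to call.
    """
    trend = prev - prev_prev
    return prev + 0.5 * trend + rng.gauss(0.0, 0.3)


def rollout(x_prev_prev, x_prev, num_steps, rng):
    """Generate one trajectory autoregressively from two initial states."""
    trajectory = []
    for _ in range(num_steps):
        x_next = toy_sample_next(x_prev, x_prev_prev, rng)
        trajectory.append(x_next)
        x_prev_prev, x_prev = x_prev, x_next  # slide the two-state window
    return trajectory


def ensemble_rollout(x_prev_prev, x_prev, num_members, num_steps, seed=0):
    """Run several rollouts, each from slightly perturbed initial states."""
    rng = random.Random(seed)
    members = []
    for _ in range(num_members):
        eps = rng.gauss(0.0, 0.1)  # toy initial-condition perturbation
        members.append(rollout(x_prev_prev + eps, x_prev + eps, num_steps, rng))
    return members


ensemble = ensemble_rollout(14.0, 15.0, num_members=8, num_steps=NUM_STEPS)
final_values = [member[-1] for member in ensemble]
print(f"{len(ensemble)} members x {len(ensemble[0])} steps")
print(f"spread at day 15: {max(final_values) - min(final_values):.2f}")
```

Each member feeds its own previous outputs back in as inputs, which is why small differences at the start grow into a spread of outcomes by the end of the forecast.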

GenCast represents a significant leap forward in weather forecasting by combining the power of diffusion models, advanced neural networks, and ensemble techniques to produce highly accurate, probabilistic 15-day forecasts. Its autoregressive design, coupled with a detailed representation of atmospheric and surface variables, allows it to generate realistic and diverse weather predictions at a global scale.
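The figures quoted in the feature list imply how large a single forecast is. The back-of-the-envelope calculation below uses only those figures, plus one assumption that is not stated in the article: that the 0.25° grid includes a row at each pole, giving 721 latitude rows.

```python
SURFACE_VARS = 6
ATMOS_VARS = 6
PRESSURE_LEVELS = 13
RESOLUTION_DEG = 0.25
FORECAST_DAYS = 15
STEP_HOURS = 12

# Each atmospheric variable contributes one channel per pressure level.
channels = SURFACE_VARS + ATMOS_VARS * PRESSURE_LEVELS

# Assumption: latitude rows at both poles; longitude wraps, so no duplicate column.
n_lat = int(180 / RESOLUTION_DEG) + 1
n_lon = int(360 / RESOLUTION_DEG)

time_steps = FORECAST_DAYS * 24 // STEP_HOURS
values_per_state = channels * n_lat * n_lon

print(f"channels per grid point: {channels}")
print(f"grid: {n_lat} x {n_lon}")
print(f"time steps per forecast: {time_steps}")
print(f"values per weather state: {values_per_state:,}")
```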

AI-Powered Speed and Accuracy

One of GenCast’s most impressive features is its speed. As mentioned earlier, using just one Google Cloud TPU v5 chip, GenCast can generate a 15-day forecast in 8 minutes. This is a dramatic improvement over traditional physics-based models, which typically require large supercomputing resources and take hours to generate similar forecasts. GenCast achieves this by running all ensemble predictions in parallel, delivering fast, high-resolution forecasts that traditional models cannot match.

In terms of accuracy, GenCast has been rigorously tested against ENS, the operational ensemble system of the European Centre for Medium-Range Weather Forecasts (ECMWF) and one of the most widely used forecasting systems. GenCast outperformed ENS on 97.2% of the evaluated targets (combinations of variable and lead time), demonstrating superior accuracy, especially when predicting extreme weather events.

Handling Extreme Weather with Precision

Extreme weather events, such as heatwaves, cold spells, and high wind speeds, are among the most critical factors that need precise forecasting. GenCast excels in this area, providing reliable predictions for extreme conditions that enable timely preventive actions to safeguard lives, reduce damage, and save costs. Whether it’s preparing for a heatwave or high winds, GenCast consistently outperforms traditional systems in delivering accurate forecasts.

Moreover, GenCast shows superior accuracy in predicting the path of tropical cyclones (hurricanes and typhoons). The model can predict storms’ trajectory with much greater confidence, offering more advanced warnings that can significantly improve disaster preparedness.

GenCast Mini Demo

Here are the links to read more:

To run the other models using Google Cloud Compute, refer to gencast_demo_cloud_vm.ipynb.

Note: This package provides four pretrained models:

| Model Name | Resolution | Mesh Refinement | Training Data | Evaluation Period |
|---|---|---|---|---|
| GenCast 0p25deg <2019 | 0.25 deg | 6 times refined icosahedral | ERA5 (1979-2018) | 2019 and later |
| GenCast 0p25deg Operational <2019 | 0.25 deg | 6 times refined icosahedral | ERA5 (1979-2018), fine-tuned on HRES-fc0 (2016-2021) | 2022 and later |
| GenCast 1p0deg <2019 | 1 deg | 5 times refined icosahedral | ERA5 (1979-2018) | 2019 and later |
| GenCast 1p0deg Mini <2019 | 1 deg | 4 times refined icosahedral | ERA5 (1979-2018) | 2019 and later |

Also Read: GenCast: Diffusion-based ensemble forecasting for medium-range weather

Here we are using:

GenCast 1p0deg Mini <2019, a GenCast model at 1deg resolution, with 13 pressure levels and a 4 times refined icosahedral mesh. It is trained on ERA5 data from 1979 to 2018, and can be causally evaluated on 2019 and later years.

The reason: This model has the smallest memory footprint of those provided and is the only one runnable with the freely provided TPUv2-8 configuration in Colab.
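One way to see why the Mini model is lighter is to count mesh nodes. Splitting every triangle of an icosahedron into four, r times, gives 10 · 4^r + 2 vertices; this is standard geometry. How node count translates into memory use is an assumption here, since memory also depends on latent size and attention settings.

```python
def icosahedral_vertices(refinements):
    """Vertex count of an icosahedron after `refinements` 4-way triangle splits."""
    if refinements < 0:
        raise ValueError("refinements must be non-negative")
    return 10 * 4 ** refinements + 2


for refinements in (4, 5, 6):
    print(f"{refinements} times refined: {icosahedral_vertices(refinements):,} nodes")
# 4 times refined: 2,562 nodes
# 5 times refined: 10,242 nodes
# 6 times refined: 40,962 nodes
```

The 4 times refined mesh used by the Mini model has 16 times fewer nodes than the 6 times refined mesh of the 0.25 deg models.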

To get the weights:

You can access the Cloud Storage bucket provided by Google DeepMind. It contains all the data for GenCast, including the stats and model parameters.

The GenCast Mini Implementation

Upgrade packages (kernel needs to be restarted after running this cell)

# @title Upgrade packages (kernel needs to be restarted after running this cell).
%pip install -U importlib_metadata

Pip Install Repo and Dependencies

# @title Pip install repo and dependencies
%pip install --upgrade https://github.com/deepmind/graphcast/archive/master.zip

Imports

import dataclasses
import datetime
import math
from google.cloud import storage
from typing import Optional
import haiku as hk
from IPython.display import HTML
from IPython import display
import ipywidgets as widgets
import jax
import matplotlib
import matplotlib.pyplot as plt
from matplotlib import animation
import numpy as np
import xarray

from graphcast import rollout
from graphcast import xarray_jax
from graphcast import normalization
from graphcast import checkpoint
from graphcast import data_utils
from graphcast import xarray_tree
from graphcast import gencast
from graphcast import denoiser
from graphcast import nan_cleaning

Plotting functions

def select(
    data: xarray.Dataset,
    variable: str,
    level: Optional[int] = None,
    max_steps: Optional[int] = None
) -> xarray.Dataset:
    # Pick one variable, then optionally trim the batch, time, and level axes.
    data = data[variable]
    if "batch" in data.dims:
        data = data.isel(batch=0)
    if max_steps is not None and "time" in data.sizes and max_steps < data.sizes["time"]:
        data = data.isel(time=range(0, max_steps))
    if level is not None and "level" in data.coords:
        data = data.sel(level=level)
    return data

def scale(
    data: xarray.Dataset,
    center: Optional[float] = None,
    robust: bool = False,
) -> tuple[xarray.Dataset, matplotlib.colors.Normalize, str]:
    # Robust mode clips the color range to the 2nd-98th percentiles.
    vmin = np.nanpercentile(data, (2 if robust else 0))
    vmax = np.nanpercentile(data, (98 if robust else 100))
    if center is not None:
        # Make the range symmetric around `center` for a diverging colormap.
        diff = max(vmax - center, center - vmin)
        vmin = center - diff
        vmax = center + diff
    return (data, matplotlib.colors.Normalize(vmin, vmax),
            ("RdBu_r" if center is not None else "viridis"))

def plot_data(
    data: dict[str, xarray.Dataset],
    fig_title: str,
    plot_size: float = 5,
    robust: bool = False,
    cols: int = 4
) -> HTML:
    # Each value in `data` is the (data, norm, cmap) tuple returned by scale().
    first_data = next(iter(data.values()))[0]
    max_steps = first_data.sizes.get("time", 1)
    assert all(max_steps == d.sizes.get("time", 1) for d, _, _ in data.values())

    cols = min(cols, len(data))
    rows = math.ceil(len(data) / cols)
    figure = plt.figure(figsize=(plot_size * 2 * cols,
                                 plot_size * rows))
    figure.suptitle(fig_title, fontsize=16)
    figure.subplots_adjust(wspace=0, hspace=0)
    figure.tight_layout()

    images = []
    for i, (title, (plot_data, norm, cmap)) in enumerate(data.items()):
        ax = figure.add_subplot(rows, cols, i+1)
        ax.set_xticks([])
        ax.set_yticks([])
        ax.set_title(title)
        im = ax.imshow(
            plot_data.isel(time=0, missing_dims="ignore"), norm=norm,
            origin="lower", cmap=cmap)
        plt.colorbar(
            mappable=im,
            ax=ax,
            orientation="vertical",
            pad=0.02,
            aspect=16,
            shrink=0.75,
            cmap=cmap,
            extend=("both" if robust else "neither"))
        images.append(im)

    def update(frame):
        if "time" in first_data.dims:
            # Dividing by 1000 converts the nanosecond time offset to microseconds.
            td = datetime.timedelta(microseconds=first_data["time"][frame].item() / 1000)
            figure.suptitle(f"{fig_title}, {td}", fontsize=16)
        else:
            figure.suptitle(fig_title, fontsize=16)
        for im, (plot_data, norm, cmap) in zip(images, data.values()):
            im.set_data(plot_data.isel(time=frame, missing_dims="ignore"))

    ani = animation.FuncAnimation(
        fig=figure, func=update, frames=max_steps, interval=250)
    plt.close(figure.number)
    return HTML(ani.to_jshtml())

Load the Data and initialize the model

Authenticate with Google Cloud Storage

# An anonymous client is enough for this public bucket.
# Use an authenticated client (storage.Client()) if you want to read from a private bucket.
gcs_client = storage.Client.create_anonymous_client()
gcs_bucket = gcs_client.get_bucket("dm_graphcast")
dir_prefix = "gencast/"

Load the model params

Choose one of the two ways of getting model params:

- random: You’ll get random predictions, but you can change the model architecture, which may run faster or fit on your device.

- checkpoint: You’ll get sensible predictions, but are limited to the model architecture that it was trained with, which may not fit on your device.

Choose the model

params_file_options = [
    name for blob in gcs_bucket.list_blobs(prefix=(dir_prefix+"params/"))
    if (name := blob.name.removeprefix(dir_prefix+"params/"))]  # Drop empty string.

latent_value_options = [int(2**i) for i in range(4, 10)]
random_latent_size = widgets.Dropdown(
    options=latent_value_options, value=512, description="Latent size:")
random_attention_type = widgets.Dropdown(
    options=["splash_mha", "triblockdiag_mha", "mha"], value="splash_mha", description="Attention:")
random_mesh_size = widgets.IntSlider(
    value=4, min=4, max=6, description="Mesh size:")
random_num_heads = widgets.Dropdown(
    options=[int(2**i) for i in range(0, 3)], value=4, description="Num heads:")
random_attention_k_hop = widgets.Dropdown(
    options=[int(2**i) for i in range(2, 5)], value=16, description="Attn k hop:")

def update_latent_options(*args):
    def _latent_valid_for_attn(attn, latent, heads):
        head_dim, rem = divmod(latent, heads)
        if rem != 0:
            return False
        # Required for splash attn.
        if head_dim % 128 != 0:
            return attn != "splash_mha"
        return True

    attn = random_attention_type.value
    heads = random_num_heads.value
    random_latent_size.options = [
        latent for latent in latent_value_options
        if _latent_valid_for_attn(attn, latent, heads)]

# Observe changes to only allow for valid combinations.
random_attention_type.observe(update_latent_options, "value")
random_latent_size.observe(update_latent_options, "value")
random_num_heads.observe(update_latent_options, "value")

params_file = widgets.Dropdown(
    options=[f for f in params_file_options if "Mini" in f],
    description="Params file:",
    layout={"width": "max-content"})

source_tab = widgets.Tab([
    widgets.VBox([
        random_attention_type,
        random_mesh_size,
        random_num_heads,
        random_latent_size,
        random_attention_k_hop
    ]),
    params_file,
])
source_tab.set_title(0, "Random")
source_tab.set_title(1, "Checkpoint")
widgets.VBox([
    source_tab,
    widgets.Label(value="Run the next cell to load the model. Rerunning this cell clears your selection.")
])
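The validity rule inside `update_latent_options` can be tried on its own, without widgets. The sketch below copies that rule into a standalone function (renamed without the leading underscore) and lists which latent sizes remain selectable for a given attention type and head count.

```python
def latent_valid_for_attn(attn, latent, heads):
    """Same rule as the widget callback: latent must split evenly across heads,
    and splash attention additionally needs a per-head size divisible by 128."""
    head_dim, rem = divmod(latent, heads)
    if rem != 0:
        return False
    if head_dim % 128 != 0:
        return attn != "splash_mha"
    return True


latent_sizes = [2**i for i in range(4, 10)]  # 16 ... 512, as in the dropdown

valid_splash = [s for s in latent_sizes if latent_valid_for_attn("splash_mha", s, heads=4)]
valid_mha = [s for s in latent_sizes if latent_valid_for_attn("mha", s, heads=4)]

print("splash_mha, 4 heads:", valid_splash)
print("mha, 4 heads:", valid_mha)
```

With the default of 4 heads, only a latent size of 512 satisfies the splash attention constraint, which matches the dropdown's default value.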

Load the model

source = source_tab.get_title(source_tab.selected_index)

if source == "Random":
    params = None  # Filled in below
    state = {}
    task_config = gencast.TASK
    # Use default values.
    sampler_config = gencast.SamplerConfig()
    noise_config = gencast.NoiseConfig()
    noise_encoder_config = denoiser.NoiseEncoderConfig()
    # Configure, otherwise use default values.
    denoiser_architecture_config = denoiser.DenoiserArchitectureConfig(
        sparse_transformer_config=denoiser.SparseTransformerConfig(
            attention_k_hop=random_attention_k_hop.value,
            attention_type=random_attention_type.value,
            d_model=random_latent_size.value,
            num_heads=random_num_heads.value
        ),
        mesh_size=random_mesh_size.value,
        latent_size=random_latent_size.value,
    )
else:
    assert source == "Checkpoint"
    with gcs_bucket.blob(dir_prefix + f"params/{params_file.value}").open("rb") as f:
        ckpt = checkpoint.load(f, gencast.CheckPoint)
    params = ckpt.params
    state = {}

    task_config = ckpt.task_config
    sampler_config = ckpt.sampler_config
    noise_config = ckpt.noise_config
    noise_encoder_config = ckpt.noise_encoder_config
    denoiser_architecture_config = ckpt.denoiser_architecture_config
    print("Model description:\n", ckpt.description, "\n")
    print("Model license:\n", ckpt.license, "\n")

Load the example data

- Example ERA5 datasets are available at 0.25 degree and 1 degree resolution.

- Example HRES-fc0 datasets are available at 0.25 degree resolution.

- Some transformations were done from the base datasets:

- We accumulated precipitation over 12 hours instead of the default 1 hour.

- For HRES-fc0 sea surface temperature, we assigned NaNs to grid cells in which sea surface temperature was NaN in the ERA5 dataset (this remains fixed at all times).

The data resolution must match the loaded model. Since we are running GenCast Mini, this will be 1 degree.

Get and filter the list of available example datasets

dataset_file_options = [
    name for blob in gcs_bucket.list_blobs(prefix=(dir_prefix + "dataset/"))
    if (name := blob.name.removeprefix(dir_prefix+"dataset/"))]  # Drop empty string.

def parse_file_parts(file_name):
    # Split "key-value_key-value" file names into a dict.
    return dict(part.split("-", 1) for part in file_name.split("_"))

def data_valid_for_model(file_name: str, params_file_name: str):
    """Check data type and resolution matches."""
    if source == "Random":
        return True
    data_file_parts = parse_file_parts(file_name.removesuffix(".nc"))
    res_matches = data_file_parts["res"].replace(".", "p") in params_file_name.lower()
    source_matches = "Operational" in params_file_name
    if data_file_parts["source"] == "era5":
        source_matches = not source_matches
    return res_matches and source_matches

dataset_file = widgets.Dropdown(
    options=[
        (", ".join([f"{k}: {v}" for k, v in parse_file_parts(option.removesuffix(".nc")).items()]), option)
        for option in dataset_file_options
        if data_valid_for_model(option, params_file.value)
    ],
    description="Dataset file:",
    layout={"width": "max-content"})
widgets.VBox([
    dataset_file,
    widgets.Label(value="Run the next cell to load the dataset. Rerunning this cell clears your selection and refilters the datasets that match your model.")
])
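To see what the file-name filter does, here is a small standalone sketch. The two file names are hypothetical examples that follow the `key-value_key-value` pattern the parser expects; the real names in the bucket may differ.

```python
def parse_file_parts(file_name):
    """Split "key-value_key-value" names into a dict (same logic as above)."""
    return dict(part.split("-", 1) for part in file_name.split("_"))


def resolution_matches(data_file_name, params_file_name):
    """Check that the dataset resolution appears in the params file name."""
    parts = parse_file_parts(data_file_name.removesuffix(".nc"))
    return parts["res"].replace(".", "p") in params_file_name.lower()


# Hypothetical names, for illustration only.
data_name = "source-era5_date-2019-03-29_res-1.0_levels-13_steps-12.nc"
params_name = "GenCast 1p0deg Mini <2019.npz"

parts = parse_file_parts(data_name.removesuffix(".nc"))
print(parts)
print(resolution_matches(data_name, params_name))
```

Splitting each part on the first hyphen only (`split("-", 1)`) is what keeps a value such as `2019-03-29` in one piece.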

Load weather data

with gcs_bucket.blob(dir_prefix+f"dataset/{dataset_file.value}").open("rb") as f:
    example_batch = xarray.load_dataset(f).compute()

assert example_batch.sizes["time"] >= 3  # 2 for input, >=1 for targets

print(", ".join([f"{k}: {v}" for k, v in parse_file_parts(dataset_file.value.removesuffix(".nc")).items()]))

example_batch

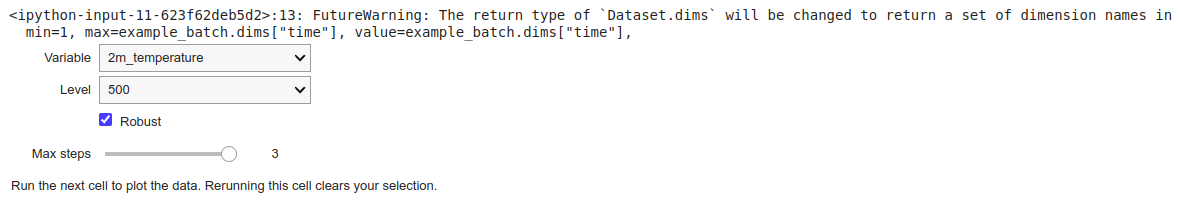

Choose data to plot

plot_example_variable = widgets.Dropdown(
    options=example_batch.data_vars.keys(),
    value="2m_temperature",
    description="Variable")
plot_example_level = widgets.Dropdown(
    options=example_batch.coords["level"].values,
    value=500,
    description="Level")
plot_example_robust = widgets.Checkbox(value=True, description="Robust")
plot_example_max_steps = widgets.IntSlider(
    min=1, max=example_batch.sizes["time"], value=example_batch.sizes["time"],
    description="Max steps")

widgets.VBox([
    plot_example_variable,
    plot_example_level,
    plot_example_robust,
    plot_example_max_steps,
    widgets.Label(value="Run the next cell to plot the data. Rerunning this cell clears your selection.")
])

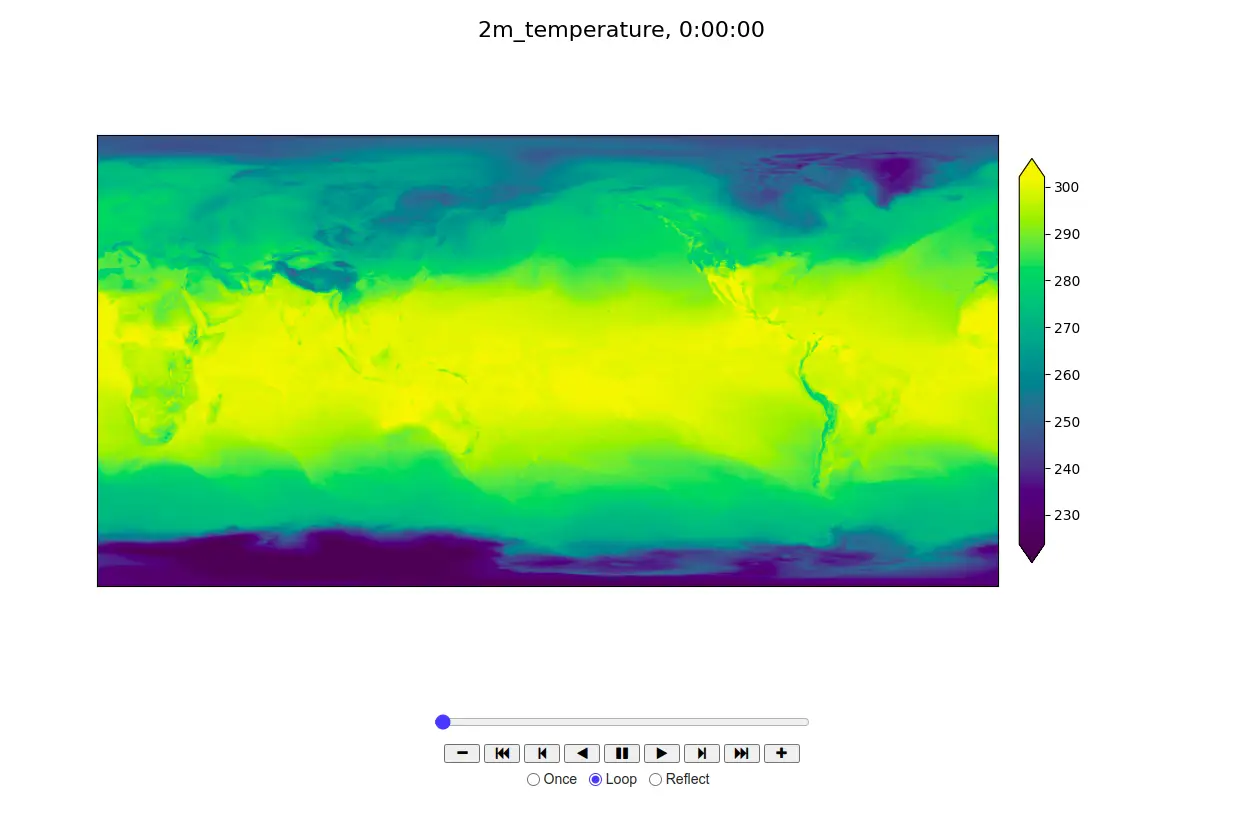

Plot example data

plot_size = 7

data = {
    " ": scale(select(example_batch, plot_example_variable.value, plot_example_level.value, plot_example_max_steps.value),
               robust=plot_example_robust.value),
}
fig_title = plot_example_variable.value
if "level" in example_batch[plot_example_variable.value].coords:
    fig_title += f" at {plot_example_level.value} hPa"

plot_data(data, fig_title, plot_size, plot_example_robust.value)

Note that the temperature values in this output are in kelvin.

The remaining steps are: extracting the training and evaluation data, loading the normalization data, building the jitted functions (and possibly initializing random weights), running the model as an autoregressive rollout (with the loop in Python), plotting prediction samples and diffs, plotting the ensemble mean and CRPS, and training the model, including the loss and gradient computation. For all of these, check out the notebook in the repo: gencast_mini_demo.ipynb
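Since the notebook's evaluation step reports the ensemble mean and CRPS (continuous ranked probability score), here is a minimal sketch of both for a single location. It uses the standard empirical estimator E|X - y| - 0.5 · E|X - X'|; the exact estimator used in the notebook may differ, and the sample values are made up.

```python
def ensemble_mean(members):
    """Average of the ensemble members."""
    return sum(members) / len(members)


def crps(members, observation):
    """Empirical CRPS for one observation; lower is better.

    The first term rewards members close to the observation, the second
    credits the ensemble for its spread.
    """
    n = len(members)
    skill = sum(abs(x - observation) for x in members) / n
    spread = sum(abs(a - b) for a in members for b in members) / (n * n)
    return skill - 0.5 * spread


# Hypothetical 3-member temperature forecast (kelvin) and the observed value.
forecast = [287.0, 288.0, 289.0]
observed = 288.0

print(f"ensemble mean: {ensemble_mean(forecast):.1f} K")
print(f"CRPS: {crps(forecast, observed):.3f} K")
```

For a single-member ensemble the spread term vanishes and CRPS reduces to the absolute error, which is why it is a convenient way to compare probabilistic and deterministic forecasts on the same scale.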

Real-World Applications and Benefits

GenCast’s capabilities extend beyond disaster management. Its high-accuracy forecasts can aid in various other sectors of society, most notably renewable energy. For example, better predictions of wind power generation can improve the reliability of wind energy, a crucial part of the renewable energy transition. A proof-of-principle test showed that GenCast was more accurate than ENS in predicting the total wind power generated by groups of wind farms worldwide. This opens the door to more efficient energy planning and, potentially, faster adoption of renewable sources.

GenCast’s enhanced weather predictions will also play a role in food security, agriculture, and public safety, where accurate weather forecasts are essential for decision-making. Whether it’s planning for crop planting or disaster response, GenCast’s forecasts can help guide critical actions.

Also read: GenCast: Our new AI model provides more accurate weather results, faster.

Advancing Climate Understanding

GenCast is part of Google’s larger vision for AI-powered weather forecasting, which includes other models developed by Google DeepMind and Google Research, such as NeuralGCM and SEEDS, as well as models for forecasting floods and wildfires. These models aim to provide even more detailed weather predictions, covering a broader range of environmental factors, including precipitation and extreme heat.

Through its collaborative efforts with meteorological agencies, Google intends to continue advancing AI-based weather models while ensuring that traditional models remain integral to forecasting. Traditional models provide the essential training data and initial weather conditions for systems like GenCast, ensuring that AI and classical meteorology complement each other for the best possible outcomes.

In a move to foster further research and development, Google has decided to release GenCast’s model code, weights, and forecasts to the wider community. This decision aims to empower meteorologists, data scientists, and researchers to integrate GenCast’s advanced forecasting capabilities into their own models and research workflows.

By making GenCast open-source, Google hopes to encourage collaboration across the weather and climate science communities, including partnerships with academic researchers, renewable energy companies, disaster response organizations, and agencies focused on food security. The open release of GenCast is expected to drive faster advancements in weather prediction technology, helping to improve resilience to climate change and extreme weather events.

Conclusion

GenCast represents a new frontier in weather prediction: combining AI and traditional meteorology to offer faster, more accurate, and probabilistic forecasts. With its open-source model, rapid processing, and superior forecasting abilities, GenCast is poised to change the way we approach weather forecasting, risk management, and climate adaptation.

As we move forward, the ongoing collaboration between AI models like GenCast and traditional forecasting systems will be critical in enhancing the accuracy and speed of weather predictions. This partnership should benefit millions of people worldwide, empowering societies to better prepare for the challenges posed by extreme weather events and climate change.
