Speech synthesis has become a transformative area of research, focused on generating natural, synchronized audio from a variety of inputs. Integrating text, video, and audio data offers a more comprehensive way to mimic human communication. Advances in machine learning, particularly transformer-based architectures, have driven this progress and enabled applications such as multilingual dubbing and personalized speech synthesis.

A persistent challenge in this field is aligning speech precisely with visual and textual cues. Traditional methods, such as speech generation from cropped lip regions or text-to-speech (TTS) models, have limitations. These approaches often struggle to maintain synchronization and naturalness in varied scenarios, such as multilingual environments or complex visual contexts. This bottleneck limits their usability in real-world applications that require high fidelity and contextual understanding.

Existing tools rely heavily on single-modality inputs or complex architectures for multi-modal fusion. For example, lip detection models use pre-trained systems to crop input videos, while some text-based systems process only linguistic features. Despite these efforts, the performance of these models remains suboptimal, as they often fail to capture broader visual and textual dynamics that are critical for natural speech synthesis.

Researchers from Apple and the University of Guelph have presented a novel multimodal transformer model called Visatronic. This unified model processes video, text, and speech data through a shared embedding space, leveraging the capabilities of an autoregressive transformer. Unlike traditional multimodal architectures, Visatronic eliminates lip-detection preprocessing, offering a streamlined solution for generating speech aligned with textual and visual inputs.

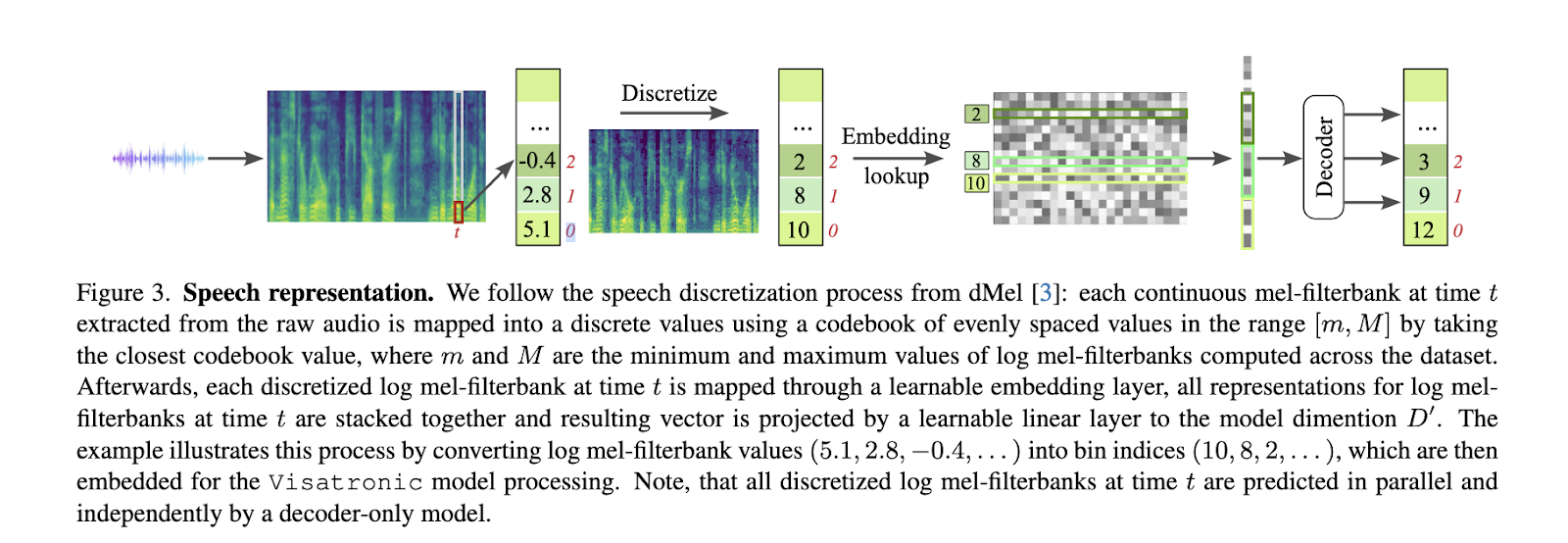

The methodology behind Visatronic is based on embedding and discretizing multimodal inputs. A vector-quantized variational autoencoder (VQ-VAE) encodes video inputs into discrete tokens, while speech is represented as mel spectrograms that are discretized with dMel, a simplified discretization approach. Text inputs undergo character-level tokenization, which improves generalization by capturing linguistic subtleties. These modalities are integrated into a single transformer architecture that lets the inputs interact through self-attention. The model employs time-alignment strategies to synchronize data streams with different resolutions, such as video at 25 frames per second and speech sampled at 25 ms intervals. Additionally, the system uses relative positional embeddings to maintain temporal coherence between inputs. During training, cross-entropy loss is applied only to the speech representations, so the model is optimized to predict speech while conditioning on the video and text tokens.
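To make the resolution mismatch concrete, here is a minimal sketch of one way to pair the two streams. Video at 25 frames per second gives one frame every 40 ms, while speech frames arrive every 25 ms, so one second holds 25 video frames but 40 speech frames. The mapping rule below (each speech frame is paired with the video frame on screen when it starts) and the function names are assumptions for illustration; the article does not specify the alignment strategy Visatronic actually uses.

```python
VIDEO_FPS = 25       # from the article: video at 25 frames per second
SPEECH_HOP_MS = 25   # from the article: speech sampled at 25 ms intervals


def video_frame_for_speech_frame(speech_idx: int) -> int:
    """Index of the video frame showing when speech frame `speech_idx` starts.

    Assumed rule (not from the paper): floor(start_time * fps).
    """
    start_ms = speech_idx * SPEECH_HOP_MS
    # Integer arithmetic avoids floating-point rounding at frame boundaries.
    return start_ms * VIDEO_FPS // 1000


def align(num_speech_frames: int) -> list:
    """Video frame index for each of the first `num_speech_frames` speech frames."""
    return [video_frame_for_speech_frame(i) for i in range(num_speech_frames)]


if __name__ == "__main__":
    print(align(8))  # [0, 0, 1, 1, 2, 3, 3, 4]
```

Under this rule some video frames are shared by two consecutive speech frames and others by one, which is the kind of uneven pairing any alignment scheme for these two rates has to handle.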

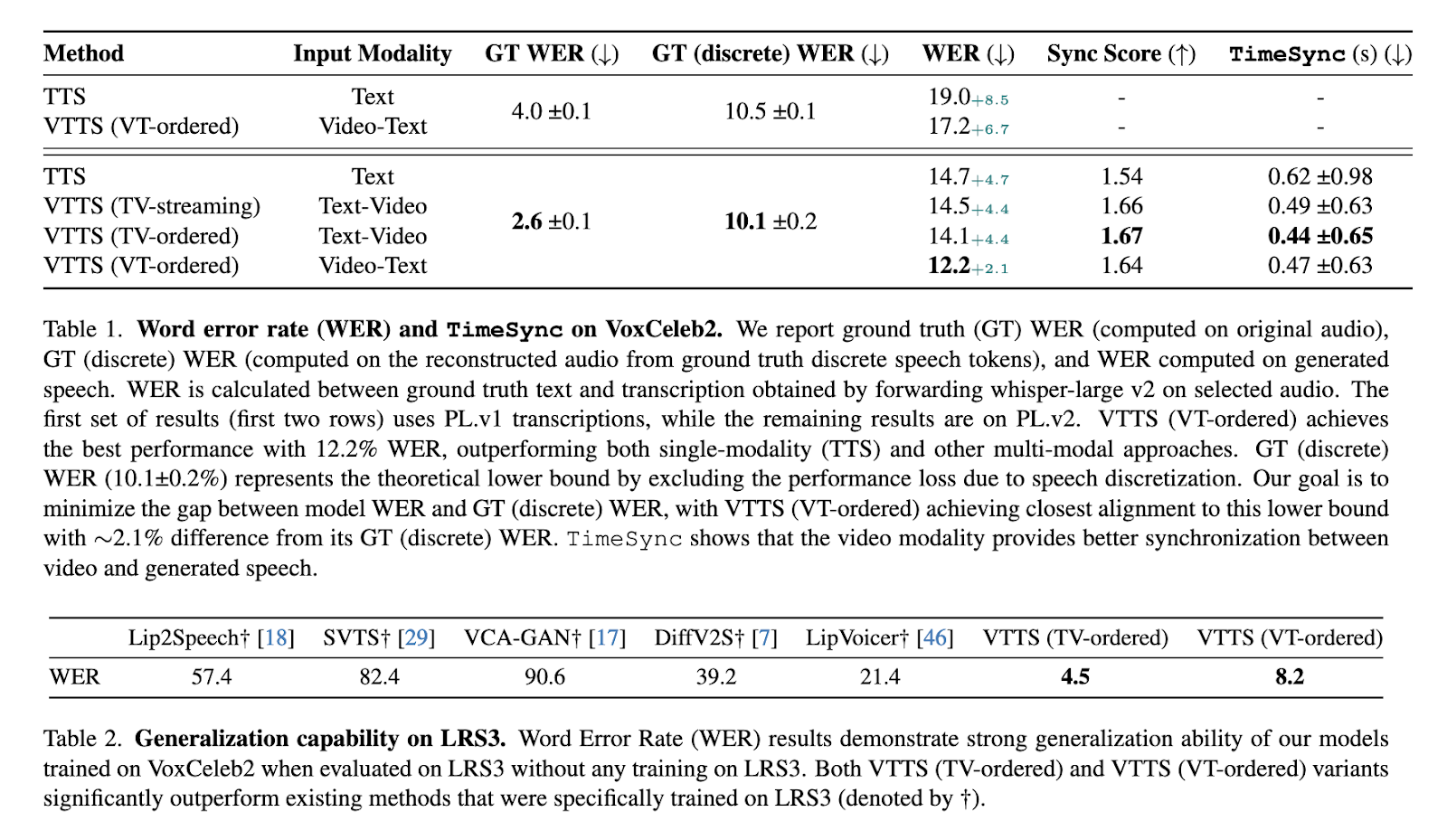
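The idea of applying cross-entropy only to speech positions can be sketched as a masked loss over a mixed token sequence. This is a simplified illustration in plain Python, not the authors' implementation: it assumes one discrete target token per sequence position and a hypothetical `is_speech` mask, whereas dMel-style speech frames may carry several discrete values each.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over one list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def speech_only_cross_entropy(logits, targets, is_speech):
    """Mean cross-entropy over positions flagged as speech.

    logits:    one list of class scores per sequence position
    targets:   the correct class index per position
    is_speech: True where the position holds a speech token (assumed mask)
    Video and text positions are skipped, so they add nothing to the loss.
    """
    total, count = 0.0, 0
    for step_logits, target, speech in zip(logits, targets, is_speech):
        if not speech:
            continue
        total -= log_softmax(step_logits)[target]
        count += 1
    return total / count if count else 0.0


if __name__ == "__main__":
    # Position 0 is a non-speech token; positions 1 and 2 are speech tokens.
    logits = [[5.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]]
    loss = speech_only_cross_entropy(logits, [1, 2, 3], [False, True, True])
    print(loss)  # uniform predictions over 4 classes give log(4)
```

Although video and text positions are excluded from the loss, they still shape the speech predictions through self-attention, which is how a speech-only objective can drive cross-modal learning.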

Visatronic demonstrated significant performance gains on challenging datasets. On the VoxCeleb2 dataset, which includes diverse and noisy conditions, the model achieved a word error rate (WER) of 12.2%, outperforming previous approaches. It also achieved a WER of 4.5% on the LRS3 dataset without additional training, demonstrating strong generalization ability. In contrast, traditional TTS systems achieved higher WERs and lacked the timing accuracy needed for complex tasks. Subjective evaluations further confirmed these findings: Visatronic was rated higher for intelligibility, naturalness, and synchronization than baseline systems. The VTTS (video-text-to-speech) ordered variant achieved a mean opinion score (MOS) of 3.48 for intelligibility and 3.20 for naturalness, outperforming models trained with textual inputs alone.
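For readers unfamiliar with the metric, WER is the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and a hypothesis, divided by the number of reference words. The sketch below shows that standard definition; for speech synthesis the hypothesis is typically a transcript of the generated audio, and the exact transcription and text-normalization setup behind the reported numbers is not described in this article. The function name is illustrative.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = edit distance between the reference words processed so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One dropped word out of six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

A WER of 12.2% therefore means roughly 12 word-level errors per 100 reference words.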

The integration of the video modality not only improved content generation but also reduced training time. For example, Visatronic variants achieved comparable or better performance after two million training steps, compared to three million for text-only models. This efficiency highlights the complementary value of combining modalities, as text brings precision to the content while video improves contextual and temporal alignment.

In conclusion, Visatronic represents a breakthrough in multimodal speech synthesis by addressing key naturalness and synchronization challenges. Its unified transformer architecture seamlessly integrates video, text and audio data, delivering superior performance in various conditions. This innovation, developed by researchers at Apple and the University of Guelph, sets a new standard for applications ranging from video dubbing to accessible communication technologies, paving the way for future advances in this field.

Check out the paper. All credit for this research goes to the researchers of this project.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
