Introduction

Generative AI has gained immense popularity in recent years for its ability to create data that closely resembles real-world examples. One of the lesser-explored but highly practical applications of generative AI is anomaly detection using Variational Autoencoders (VAEs). This guide provides a hands-on approach to building and training a Variational Autoencoder for anomaly detection using TensorFlow. Its learning objectives are:

- Discover how VAEs can be leveraged for anomaly detection tasks, including both one-class and multi-class anomaly detection scenarios.

- Gain a solid grasp of the concept of anomaly detection and its significance in various real-world applications.

- Learn to distinguish between normal and anomalous data points and appreciate the challenges associated with anomaly detection.

- Explore the architecture and components of a Variational Autoencoder, including the encoder and decoder networks.

- Develop practical skills in using TensorFlow, a popular deep learning framework, to build and train VAE models.

This article was published as a part of the Data Science Blogathon.

Variational Autoencoders (VAEs)

A Variational Autoencoder (VAE) is a sophisticated neural network architecture that combines elements of generative modeling and variational inference to learn complex data distributions, particularly in unsupervised machine learning tasks. VAEs have gained prominence for their ability to capture and represent high-dimensional data in a compact, continuous latent space, making them especially valuable in applications like image generation, anomaly detection, and data compression.

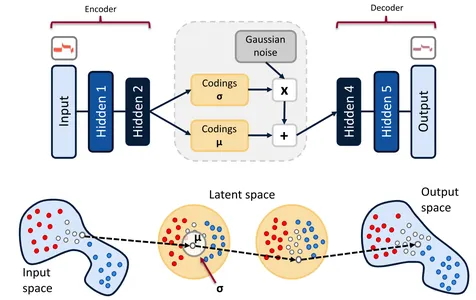

At its core, a VAE comprises two main components: an encoder and a decoder. These components work in tandem to transform input data into a latent space and then back into a reconstructed form.

How Variational Autoencoders Operate

Here’s a brief overview of how VAEs operate:

- Encoder Network: The encoder takes raw input data and maps it into a probabilistic distribution in a lower-dimensional latent space. This mapping is essential for capturing meaningful representations of the data. Unlike traditional autoencoders, VAEs do not produce a fixed encoding; instead, they generate a probability distribution characterized by mean and variance parameters.

- Latent Space: The latent space is a continuous, lower-dimensional representation in which data points with similar characteristics lie close together. A prior distribution, typically a standard Gaussian, is placed over this space. New data samples can therefore be generated by drawing points from the prior and passing them through the decoder.

- Decoder Network: The decoder takes a point in the latent space and maps it back to the original data space. It’s responsible for reconstructing the input data as accurately as possible. The decoder architecture is typically symmetrical to the encoder.

- Reconstruction Loss: During training, VAEs aim to minimize a reconstruction loss, which quantifies how well the decoder can recreate the original input from the latent space representation. This loss encourages the VAE to learn meaningful features from the data.

- Regularization Loss: In addition to the reconstruction loss, VAEs include a regularization loss. This term is the Kullback-Leibler (KL) divergence between the encoder's distribution and a standard Gaussian prior, and it keeps the encoded distributions close to that prior. The regularization makes the latent space continuous and well organized, which facilitates data generation and interpolation.
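In symbols, the two losses above combine into the negative evidence lower bound (ELBO). The notation is standard but is not used elsewhere in this guide:

- $x$ is an input and $z$ a latent vector.
- $q_\phi(z \mid x) = \mathcal{N}(\mu, \operatorname{diag}(\sigma^2))$ is the encoder's distribution.
- $p_\theta(x \mid z)$ is the decoder's likelihood.
- $d$ is the latent dimension.

For this Gaussian encoder and a standard Gaussian prior, the KL term has a closed form:

$$
\mathcal{L}(x) = \underbrace{-\,\mathbb{E}_{z \sim q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big]}_{\text{reconstruction loss}} + \underbrace{D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, \mathcal{N}(0, I)\big)}_{\text{regularization loss}}
$$

$$
D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, \mathcal{N}(0, I)\big) = -\frac{1}{2} \sum_{j=1}^{d} \left(1 + \log \sigma_j^2 - \mu_j^2 - \sigma_j^2\right)
$$

Training minimizes the average of $\mathcal{L}(x)$ over the data. For binary or [0, 1]-scaled pixels, $-\log p_\theta(x \mid z)$ is commonly taken to be the binary cross-entropy between the input and the decoder's output.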

Understanding Anomaly Detection with VAEs

Anomaly Detection Overview:

Anomaly detection is a critical task in various domains, from fraud detection in finance to fault detection in manufacturing. It involves identifying data points that deviate significantly from the expected or normal patterns within a dataset. VAEs offer a unique approach to this problem by leveraging generative modeling.

The Role of VAEs:

Variational Autoencoders are a subclass of autoencoders that not only compress data into a lower-dimensional latent space but also learn to generate data that resembles the input distribution. In anomaly detection, the VAE is typically trained on data that is mostly or entirely normal, so it learns to reconstruct normal patterns well. At detection time, we encode each input into the latent space, decode it, and measure the dissimilarity between the original input and the reconstructed output. A reconstruction that deviates significantly from its input suggests an anomaly, because the model has not learned to represent that kind of pattern.
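A minimal NumPy sketch of the scoring idea follows. It assumes the inputs and their reconstructions are arrays of the same shape, with one example per entry along the first axis. The name `reconstruction_error` is hypothetical. Mean squared error is only one possible dissimilarity measure; the cross-entropy used as the training loss later in this guide could serve as a score as well.

```python
import numpy as np

def reconstruction_error(x, x_hat):
    """Per-example mean squared error between inputs and reconstructions."""
    x = np.asarray(x, dtype=np.float64)
    x_hat = np.asarray(x_hat, dtype=np.float64)
    # Flatten everything except the batch axis, then average per example.
    diff = (x - x_hat).reshape(len(x), -1)
    return np.mean(diff ** 2, axis=1)

# Toy batch: the first example is reconstructed almost perfectly and the
# second one poorly, so the second receives a much higher anomaly score.
x = np.array([[0.0, 1.0, 1.0, 0.0],
              [0.0, 1.0, 1.0, 0.0]])
x_hat = np.array([[0.0, 0.9, 1.0, 0.0],
                  [1.0, 0.0, 0.0, 1.0]])
scores = reconstruction_error(x, x_hat)
print(scores)
```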

Setting Up Your Environment

Installing TensorFlow and Dependencies:

Before diving into VAE implementation, ensure you have TensorFlow and the required dependencies installed. You can use pip to install TensorFlow and other libraries like NumPy and Matplotlib to assist with data manipulation and visualization.
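After installing the packages (for example with `pip install tensorflow numpy matplotlib`), a quick check can confirm which versions are present. The sketch below uses only the standard library's `importlib.metadata`. The helper name `installed_versions` is hypothetical.

```python
from importlib import metadata

def installed_versions(packages=("tensorflow", "numpy", "matplotlib")):
    """Return {package: version or None} for the given distribution names."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Not installed in the current environment.
            versions[name] = None
    return versions

for name, version in installed_versions().items():
    print(f"{name}: {version or 'NOT INSTALLED'}")
```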

Preparing the Dataset:

Select an appropriate dataset for your anomaly detection task. Preprocessing steps may include normalizing data, splitting it into training and testing sets, and ensuring it is in a format compatible with your VAE architecture.
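As an illustration, suppose the data are 28x28 grayscale images such as MNIST digits, and that one class (here digit 0) plays the role of "normal" data. This shape is an assumption, chosen to match the input shape used in the code later in this guide. The following NumPy sketch does three things:

- Scales pixel values to [0, 1] and adds a channel axis.
- Keeps only the normal class for training.
- Labels the test set so that every other class counts as an anomaly.

The function name `prepare_one_class_split` and the choice of normal class are hypothetical. Random arrays stand in for real images in the usage lines.

```python
import numpy as np

def prepare_one_class_split(x_train, y_train, x_test, y_test, normal_class=0):
    """Return (train_normal, test_images, test_is_anomaly).

    The training set contains only `normal_class`; in the test labels,
    1 marks an anomaly (any other class) and 0 marks the normal class.
    """
    def to_float(images):
        # uint8 pixels in [0, 255] -> float32 in [0, 1], plus a channel axis.
        return (np.asarray(images, dtype=np.float32) / 255.0)[..., np.newaxis]

    x_train, y_train = np.asarray(x_train), np.asarray(y_train)
    train_normal = to_float(x_train[y_train == normal_class])
    test_images = to_float(x_test)
    test_is_anomaly = (np.asarray(y_test) != normal_class).astype(np.int32)
    return train_normal, test_images, test_is_anomaly

# With real data, one option is:
# (x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()
# Here, random stand-in images are used instead.
rng = np.random.default_rng(0)
x_train = rng.integers(0, 256, size=(6, 28, 28)).astype(np.uint8)
y_train = np.array([0, 1, 0, 2, 0, 3])
x_test = rng.integers(0, 256, size=(4, 28, 28)).astype(np.uint8)
y_test = np.array([0, 7, 0, 9])
train_normal, test_images, test_is_anomaly = prepare_one_class_split(
    x_train, y_train, x_test, y_test)
print(train_normal.shape, test_images.shape, test_is_anomaly)
```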

Building the Variational Autoencoder (VAE)

Architecture of the VAE:

VAEs consist of two main components: the encoder and the decoder. The encoder compresses the input data into a lower-dimensional latent space, while the decoder reconstructs it. The architecture choices, such as the number of layers and neurons, impact the VAE’s capacity to capture features and anomalies effectively.

Encoder Network:

The encoder network learns to map input data to a probability distribution in the latent space. In practice, it outputs the mean and log-variance of a Gaussian. For image data, it typically comprises convolutional layers followed by dense layers, gradually reducing the input's dimensionality.

Latent Space:

The latent space holds a lower-dimensional representation of the input data. For each input, the encoder outputs a mean and a variance, and the latent vector passed to the decoder is sampled from the Gaussian they define.
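The sampling step can be sketched in NumPy with the reparameterization trick, which is also what the `reparameterize` method in the code later in this guide does. The trick works as follows:

- Draw ε from a standard normal distribution.
- Compute z = μ + σ·ε, where σ = exp(0.5·log σ²).

Writing the sample this way keeps z differentiable with respect to μ and log σ², so the encoder can be trained by gradient descent. The sketch assumes the encoder outputs the log-variance rather than the variance, as that code does.

```python
import numpy as np

def reparameterize(mean, logvar, rng):
    """Sample z ~ N(mean, exp(logvar)) as a deterministic function of noise."""
    eps = rng.standard_normal(size=np.shape(mean))
    return mean + np.exp(0.5 * logvar) * eps

rng = np.random.default_rng(42)
n = 20000
mean = np.tile([1.0, -2.0], (n, 1))
logvar = np.tile(np.log([0.25, 4.0]), (n, 1))  # variances 0.25 and 4.0
z = reparameterize(mean, logvar, rng)
print(z.mean(axis=0), z.var(axis=0))  # close to [1, -2] and [0.25, 4]
```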

Decoder Network:

The decoder network reconstructs data from the latent space. Its architecture is often symmetric to the encoder, gradually expanding back to the original data dimensions.
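To see how the encoder and decoder mirror each other, the short calculation below traces spatial sizes through the convolutional layers used in the code later in this guide. It relies on the standard output-size rules for `padding='same'`:

- A stride-s convolution maps a size n to ceil(n/s).
- A stride-s transposed convolution maps n to n·s.

The helper names and the latent size are hypothetical.

```python
import math

def conv_same(n, stride):
    # Output size of a 'same'-padded convolution.
    return math.ceil(n / stride)

def conv_transpose_same(n, stride):
    # Output size of a 'same'-padded transposed convolution.
    return n * stride

latent_dim = 2  # hypothetical value for illustration

# Encoder: 28 -> 14 -> 7, then 7*7*64 features are flattened into a Dense layer
h = conv_same(conv_same(28, 2), 2)
flat = h * h * 64
encoder_out = 2 * latent_dim  # mean and log-variance, concatenated

# Decoder: Dense -> reshape to (7, 7, 32) -> 14 -> 28; the final stride-1 layer keeps 28
d = conv_transpose_same(conv_transpose_same(7, 2), 2)
print(h, flat, encoder_out, d)
```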

Training the VAE

Loss Functions:

The training process of a VAE involves optimizing two loss terms: the reconstruction loss and the regularization loss. The reconstruction loss measures the dissimilarity between the input and the reconstructed output. The regularization loss is the KL divergence between the encoder's distribution and a prior, usually a standard Gaussian distribution, and it encourages the latent space to follow that prior.
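A NumPy sketch of both terms for one batch follows. It assumes [0, 1]-scaled inputs modeled by a Bernoulli decoder that outputs logits, and a diagonal Gaussian encoder that outputs a mean and a log-variance.

- The reconstruction term is binary cross-entropy, summed over pixels.
- The regularization term is the closed-form KL divergence to a standard Gaussian.

This mirrors the structure of `compute_loss` in the code later in this guide, but it uses the analytic KL instead of a sampled estimate. The function name `vae_loss` is hypothetical.

```python
import numpy as np

def vae_loss(x, x_logits, mean, logvar):
    """Batch-average negative ELBO, plus per-example reconstruction and KL terms."""
    batch = len(x)
    x = np.asarray(x, dtype=np.float64).reshape(batch, -1)
    logits = np.asarray(x_logits, dtype=np.float64).reshape(batch, -1)
    mean = np.asarray(mean, dtype=np.float64)
    logvar = np.asarray(logvar, dtype=np.float64)
    # Numerically stable sigmoid cross-entropy with logits, summed over features.
    bce = np.maximum(logits, 0) - logits * x + np.log1p(np.exp(-np.abs(logits)))
    reconstruction = bce.sum(axis=1)
    # KL(N(mean, exp(logvar)) || N(0, I)), summed over latent dimensions.
    kl = -0.5 * np.sum(1 + logvar - mean**2 - np.exp(logvar), axis=1)
    return np.mean(reconstruction + kl), reconstruction, kl

x = np.array([[1.0, 0.0]])
total, rec, kl = vae_loss(x, np.zeros((1, 2)), np.zeros((1, 3)), np.zeros((1, 3)))
# Logits of 0 predict p = 0.5 per pixel, and a standard-normal encoder output has zero KL,
# so the total is 2*ln(2), about 1.386.
print(total)
```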

Custom Loss Functions:

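Depending on your anomaly detection task, you might need to customize the loss functions. For instance, you can weight individual examples differently in the reconstruction loss. Because detection relies on anomalies being reconstructed poorly, choose the direction of such weights carefully. Up-weighting known anomalies in a loss that the model minimizes teaches it to reconstruct them well. Down-weighting suspected anomalies in the training data is usually the safer choice.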
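A minimal sketch of per-example weighting in NumPy follows. It assumes the per-example loss values (for example, reconstruction plus KL) have already been computed. The weights are hypothetical inputs, and how to choose them is application-specific. In a TensorFlow training step, the same formula could be written with `tf.reduce_sum`.

```python
import numpy as np

def weighted_batch_loss(per_example_loss, weights):
    """Weighted average of per-example losses (weights must be non-negative)."""
    per_example_loss = np.asarray(per_example_loss, dtype=np.float64)
    weights = np.asarray(weights, dtype=np.float64)
    # Normalizing by the weight sum keeps the loss scale comparable across batches.
    return np.sum(weights * per_example_loss) / np.sum(weights)

losses = np.array([1.0, 2.0, 10.0])
print(weighted_batch_loss(losses, [1.0, 1.0, 1.0]))  # 13/3, the plain mean
print(weighted_batch_loss(losses, [1.0, 1.0, 0.0]))  # 1.5, third example ignored
```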

Training Loop:

The training loop involves feeding data through the VAE, calculating the loss, and adjusting the model’s weights using an optimizer. Training continues until the model converges or a predefined number of epochs is reached.

Anomaly Detection

Defining Thresholds:

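Thresholds play a pivotal role in classifying anomalies: a data point is flagged when its anomaly score exceeds the threshold. The score is usually the reconstruction loss or another relevant metric. Careful threshold selection is crucial because it controls the trade-off between false positives and false negatives. Lowering the threshold catches more true anomalies but also flags more normal points, while raising it does the opposite.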
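One common heuristic is to compute reconstruction errors on held-out normal data and place the threshold at a high percentile of that distribution. Roughly the chosen tail fraction of normal points will then be flagged as false positives. The NumPy sketch below illustrates this. The percentiles, the function name `percentile_threshold`, and the exponential stand-in scores are illustrative assumptions, not values from this guide.

```python
import numpy as np

def percentile_threshold(normal_scores, percentile=99.0):
    """Threshold that about (100 - percentile)% of normal scores exceed."""
    return float(np.percentile(normal_scores, percentile))

# Hypothetical validation scores computed on normal data only.
rng = np.random.default_rng(0)
normal_scores = rng.exponential(scale=0.02, size=10_000)
for p in (95.0, 99.0):
    t = percentile_threshold(normal_scores, p)
    false_positive_rate = np.mean(normal_scores > t)
    print(f"p={p}: threshold={t:.4f}, false-positive rate on normal data={false_positive_rate:.3f}")
```

Raising the percentile lowers the false-positive rate on normal data, but mild anomalies are then more likely to fall below the threshold.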

Evaluating Anomalies:

Once we train the VAE and define thresholds, we can evaluate anomalies. We encode input data into the latent space, reconstruct it, and then compare it to the original input. We flag data points with reconstruction errors surpassing the defined thresholds as anomalies.
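Once scores and a threshold are available, flagging and evaluation take only a few lines. The NumPy sketch below assumes a labeled test set in which 1 marks an anomaly. It reports precision, recall, and false-positive rate. The function name and the toy numbers are hypothetical.

```python
import numpy as np

def evaluate_flags(scores, threshold, is_anomaly):
    """Flag scores above threshold and compare with ground-truth labels (1 = anomaly)."""
    flags = np.asarray(scores) > threshold
    truth = np.asarray(is_anomaly).astype(bool)
    tp = int(np.sum(flags & truth))    # anomalies correctly flagged
    fp = int(np.sum(flags & ~truth))   # normal points wrongly flagged
    fn = int(np.sum(~flags & truth))   # anomalies missed
    tn = int(np.sum(~flags & ~truth))  # normal points correctly passed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    return {"precision": precision, "recall": recall, "false_positive_rate": fpr}

scores = np.array([0.01, 0.02, 0.30, 0.05, 0.40, 0.03])
labels = np.array([0, 0, 1, 0, 1, 1])
result = evaluate_flags(scores, threshold=0.04, is_anomaly=labels)
print(result)
```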

Python Code Implementation

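```python
# Import necessary libraries
import math

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers


# Define the VAE architecture (for 28x28 grayscale images, e.g. MNIST)
class VAE(tf.keras.Model):
    def __init__(self, latent_dim):
        super().__init__()
        self.latent_dim = latent_dim
        # Encoder: image -> concatenated [mean, log-variance] of q(z|x)
        self.encoder = keras.Sequential([
            keras.Input(shape=(28, 28, 1)),
            layers.Conv2D(32, 3, activation='relu', strides=2, padding='same'),
            layers.Conv2D(64, 3, activation='relu', strides=2, padding='same'),
            layers.Flatten(),
            layers.Dense(latent_dim + latent_dim),  # no activation
        ])
        # Decoder: latent vector -> per-pixel logits of p(x|z)
        self.decoder = keras.Sequential([
            keras.Input(shape=(latent_dim,)),
            layers.Dense(7 * 7 * 32, activation='relu'),
            layers.Reshape(target_shape=(7, 7, 32)),
            layers.Conv2DTranspose(64, 3, activation='relu', strides=2, padding='same'),
            layers.Conv2DTranspose(32, 3, activation='relu', strides=2, padding='same'),
            # No activation: the outputs are logits, because compute_loss uses
            # sigmoid_cross_entropy_with_logits and decode() applies the sigmoid itself.
            layers.Conv2DTranspose(1, 3, padding='same'),
        ])

    def sample(self, eps=None):
        # Generate images by decoding draws from the standard Gaussian prior.
        if eps is None:
            eps = tf.random.normal(shape=(100, self.latent_dim))
        return self.decode(eps, apply_sigmoid=True)

    def encode(self, x):
        mean, logvar = tf.split(self.encoder(x), num_or_size_splits=2, axis=1)
        return mean, logvar

    def reparameterize(self, mean, logvar):
        # z = mean + sigma * eps; tf.shape() also works when the batch size is unknown.
        eps = tf.random.normal(shape=tf.shape(mean))
        return eps * tf.exp(logvar * 0.5) + mean

    def decode(self, z, apply_sigmoid=False):
        logits = self.decoder(z)
        if apply_sigmoid:
            return tf.sigmoid(logits)
        return logits


def log_normal_pdf(sample, mean, logvar, raxis=1):
    # Log-density of a diagonal Gaussian, summed over the latent dimensions.
    log2pi = math.log(2.0 * math.pi)
    return tf.reduce_sum(
        -0.5 * ((sample - mean) ** 2.0 * tf.exp(-logvar) + logvar + log2pi),
        axis=raxis)


# Custom loss function for VAE: single-sample Monte Carlo estimate of the negative ELBO
@tf.function
def compute_loss(model, x):
    mean, logvar = model.encode(x)
    z = model.reparameterize(mean, logvar)
    x_logit = model.decode(z)
    # Reconstruction term: log p(x|z) under a Bernoulli decoder (x must lie in [0, 1])
    cross_ent = tf.nn.sigmoid_cross_entropy_with_logits(logits=x_logit, labels=x)
    logpx_z = -tf.reduce_sum(cross_ent, axis=[1, 2, 3])
    # Regularization term: log p(z) - log q(z|x) estimates -KL(q(z|x) || N(0, I))
    logpz = log_normal_pdf(z, 0.0, 0.0)
    logqz_x = log_normal_pdf(z, mean, logvar)
    return -tf.reduce_mean(logpx_z + logpz - logqz_x)


# Training step function
@tf.function
def train_step(model, x, optimizer):
    with tf.GradientTape() as tape:
        loss = compute_loss(model, x)
    gradients = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(gradients, model.trainable_variables))
    return loss


# Training loop: reports the average loss over each epoch's batches
def train_vae(model, dataset, optimizer, epochs):
    for epoch in range(epochs):
        total_loss, num_batches = 0.0, 0
        for train_x in dataset:
            total_loss += float(train_step(model, train_x, optimizer))
            num_batches += 1
        print('Epoch: {}, Loss: {:.4f}'.format(epoch + 1, total_loss / max(num_batches, 1)))


# Example usage (hypothetical: `train_images` is a float32 array of shape
# (N, 28, 28, 1) with values in [0, 1], containing only normal examples):
# model = VAE(latent_dim=2)
# optimizer = tf.keras.optimizers.Adam(1e-4)
# dataset = tf.data.Dataset.from_tensor_slices(train_images).shuffle(10000).batch(32)
# train_vae(model, dataset, optimizer, epochs=10)
```

Conclusion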

This guide has explored the application of Variational Autoencoders (VAEs) to anomaly detection. VAEs identify outliers within a dataset in three steps: they compress each data point into a lower-dimensional latent space, reconstruct it from there, and flag the points that are reconstructed poorly. Step by step, we covered setting up your environment, building a VAE architecture, training it, and defining thresholds for anomaly detection.

Key Takeaways:

- VAEs are powerful tools for anomaly detection, capable of capturing complex data patterns and identifying outliers effectively.

- Customizing loss functions and threshold values is often necessary to fine-tune anomaly detection models for specific use cases.

- Experimentation with different VAE architectures and hyperparameters can significantly impact the detection performance.

- Regularly evaluate and update your anomaly detection thresholds to adapt to changing data patterns.

Frequently Asked Questions

Q: Can VAEs be used for real-time anomaly detection?

A: Real-time anomaly detection with VAEs is feasible, but it depends on factors like the complexity of your model and dataset size. Optimization and efficient architecture design are key.

Q: How should I choose the threshold for anomaly detection with VAEs?

A: Threshold selection is often empirical. You can start with a threshold that balances false positives and false negatives, then adjust it based on your specific application's needs.

Q: Are there other generative models that can be used for anomaly detection?

A: Yes, other models like Generative Adversarial Networks (GANs) and Normalizing Flows can also be used for anomaly detection, each with its own advantages and challenges.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.
