Your contact center serves as a vital link between your company and your customers. Every call to your contact center is an opportunity to learn more about your customers’ needs and how well you’re meeting them.

Most contact centers require their agents to summarize their conversation after each call. Call summary is a valuable tool that helps contact centers understand and gain insights from customer calls. Additionally, accurate call summaries improve the customer journey by eliminating the need for customers to repeat information when transferring to another agent.

In this post, we explain how to use the power of generative AI to reduce the effort and improve the accuracy of creating call summaries and call dispositions. We also show how to get started quickly with the latest version of our open source solution, Live Call Analytics with Agent Assist.

Challenges with call summaries

As contact centers collect more voice data, the need for efficient call summarization has increased significantly. However, most summaries are empty or inaccurate because creating them manually is time-consuming and hurts key agent metrics such as average handle time (AHT). Agents report that summarizing can take up to a third of the total call time, so they skip it or fill in incomplete information. This hurts the customer experience: long waits frustrate customers while the agent types, and incomplete summaries force customers to repeat information when they are transferred between agents.

The good news is that generative AI now makes it possible to automate call summarization.

Generative AI helps summarize customer calls accurately and efficiently

Generative AI is powered by very large machine learning (ML) models, called foundation models (FMs), that are pre-trained on vast amounts of data. A subset of these FMs focused on natural language understanding are called large language models (LLMs), and they can generate contextually relevant, human-like summaries. The best LLMs can process even complex, non-linear sentence structures with ease and determine various aspects of a conversation, including topic, intent, next steps, and outcomes. Using LLMs to automate call summarization lets you summarize customer conversations accurately and in a fraction of the time required for manual summarization. This, in turn, allows contact centers to deliver a superior customer experience while reducing the documentation burden on their agents.

The following screenshot shows an example of the Live Call Analytics with Agent Assist call details page, which contains information about each call.

The following video shows an example of Live Call Analytics with Agent Assist that summarizes a call in progress, summarizes after the call has ended, and generates a follow-up email.

Solution Overview

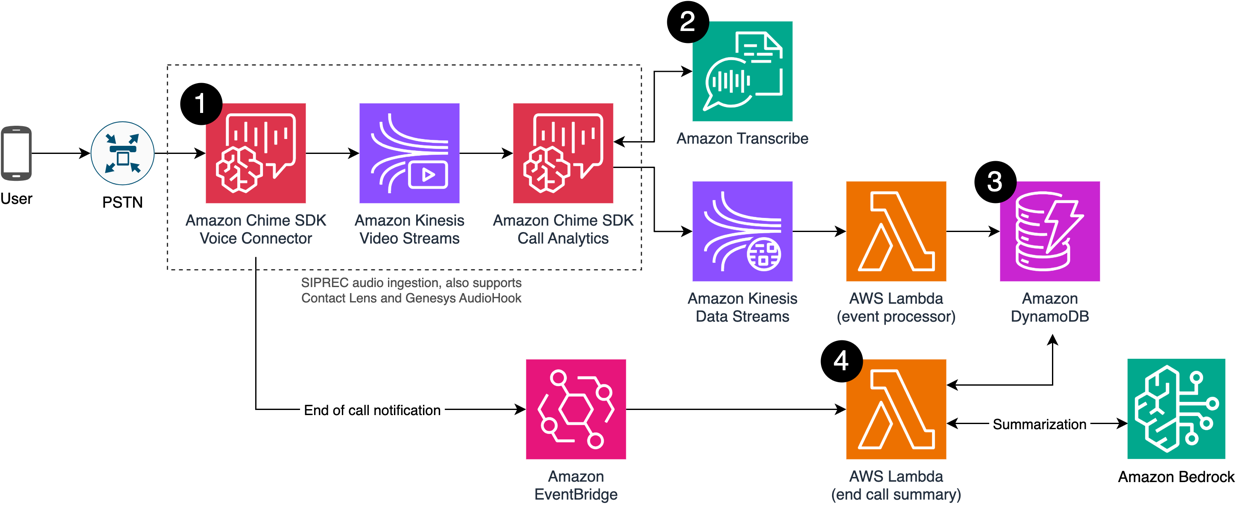

The following diagram illustrates the solution workflow.

The first step in generating abstractive call summaries is to transcribe the customer call. Accurate, ready-to-use transcripts are critical to generating accurate and effective call summaries. Amazon Transcribe can help you create highly accurate transcripts of your contact center calls. Amazon Transcribe is a feature-rich speech-to-text API with next-generation speech recognition models that are fully managed and continually trained. Customers like New York Times, Slack, Zillow, Wix, and thousands of others use Amazon Transcribe to generate highly accurate transcripts and improve their business results. A key differentiator of Amazon Transcribe is its ability to protect customer data by redacting sensitive information from audio and text. While protecting customer privacy and security is important for contact centers in general, it is even more important to mask sensitive information such as bank account details and Social Security numbers before generating automated call summaries, so that it does not end up in the summaries.
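
As a rough illustration of the redaction step, the following Python sketch builds a request for the Amazon Transcribe batch `StartTranscriptionJob` API with PII redaction limited to bank account numbers and Social Security numbers. It is a minimal sketch, not how LCA itself calls Amazon Transcribe (LCA transcribes streaming audio in real time); the function names, job name, and S3 URI are assumptions made for this example.

```python
from typing import Any


def build_redacted_transcription_request(job_name: str, media_uri: str) -> dict[str, Any]:
    """Build keyword arguments for StartTranscriptionJob with PII redaction enabled."""
    return {
        "TranscriptionJobName": job_name,
        "LanguageCode": "en-US",
        "Media": {"MediaFileUri": media_uri},
        "ContentRedaction": {
            "RedactionType": "PII",
            # "redacted" returns only the masked transcript.
            "RedactionOutput": "redacted",
            # Restrict redaction to the entity types named in the text above.
            "PiiEntityTypes": ["BANK_ACCOUNT_NUMBER", "SSN"],
        },
    }


def start_redacted_transcription(job_name: str, media_uri: str) -> dict[str, Any]:
    """Submit the job. Requires boto3 and AWS credentials, so it is kept separate."""
    import boto3  # imported lazily so the request builder can be inspected offline

    client = boto3.client("transcribe")
    return client.start_transcription_job(
        **build_redacted_transcription_request(job_name, media_uri)
    )
```

Keeping the request builder separate from the network call makes it easy to review exactly which entity types will be masked before any audio is submitted.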

For customers already using Amazon Connect, our omnichannel cloud contact center, Contact Lens for Amazon Connect natively offers real-time transcription and analytics capabilities. However, if you want to use generative AI with your existing contact center, we have developed solutions that do most of the heavy lifting associated with transcribing conversations in real time or post-call from your existing contact center, and generating automated call summaries using generative AI. Additionally, the solution detailed in this section allows you to integrate with your customer relationship management (CRM) system to automatically update your CRM of choice with generated call summaries. In this example, we use our Live Call Analytics with Agent Assist (LCA) solution to generate call transcripts and call summaries in real time with LLMs hosted on Amazon Bedrock. To use the LLM of your choice instead, you can write an AWS Lambda function and provide LCA with the function's Amazon Resource Name (ARN) in the AWS CloudFormation parameters.

The following simplified LCA architecture illustrates call summary with Amazon Bedrock.

LCA is provided as a CloudFormation template that implements the above architecture and allows you to transcribe calls in real time. The workflow steps are as follows:

- Call audio can be streamed via SIPREC from your telephony system to the Amazon Chime SDK Voice Connector, which stores the audio in Amazon Kinesis Video Streams. LCA also supports other audio ingest mechanisms, such as Genesys Cloud AudioHook.

- Amazon Chime SDK Call Analytics then streams the audio from Kinesis Video Streams to Amazon Transcribe and writes the JSON output to Amazon Kinesis Data Streams.

- A Lambda function processes the transcript segments and persists them in an Amazon DynamoDB table.

- After the call completes, Amazon Chime SDK Voice Connector publishes an Amazon EventBridge notification that triggers a Lambda function. This function reads the persisted transcript from DynamoDB, generates an LLM prompt (more on this in the next section), and runs an LLM inference with Amazon Bedrock. The generated summary is persisted in DynamoDB and can be used by the agent in the LCA user interface. Optionally, you can provide a Lambda function ARN that will run after the summary is generated to integrate with third-party CRM systems.
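
The post-call step in the last bullet can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not LCA's actual Lambda code: `load_segments` and `save_summary` are hypothetical stand-ins for the DynamoDB reads and writes, the segment shape and prompt wording are invented for the example, and the model ID is just one example of a model you might have enabled. The only AWS call used is the Amazon Bedrock Runtime Converse API, reached through a client you would create with `boto3.client("bedrock-runtime")`.

```python
from typing import Any, Callable

# Example model ID (assumption); substitute any text model enabled in your account.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"

Segment = dict[str, str]  # hypothetical shape: {"speaker": ..., "text": ...}


def build_prompt(segments: list[Segment]) -> str:
    """Flatten transcript segments into 'SPEAKER: text' lines under an instruction."""
    lines = [f'{seg["speaker"]}: {seg["text"]}' for seg in segments]
    return (
        "Summarize the following call transcript in a few sentences.\n\n"
        + "\n".join(lines)
    )


def summarize_call(
    call_id: str,
    load_segments: Callable[[str], list[Segment]],
    bedrock_client: Any,
    save_summary: Callable[[str, str], None],
) -> str:
    """Read the persisted transcript, run one LLM inference, persist the summary."""
    prompt = build_prompt(load_segments(call_id))
    response = bedrock_client.converse(
        modelId=MODEL_ID,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 512, "temperature": 0.0},
    )
    summary = response["output"]["message"]["content"][0]["text"].strip()
    save_summary(call_id, summary)
    return summary
```

Because the routine only needs whatever transcript segments have been persisted so far, the same function could in principle be invoked while a call is still in progress.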

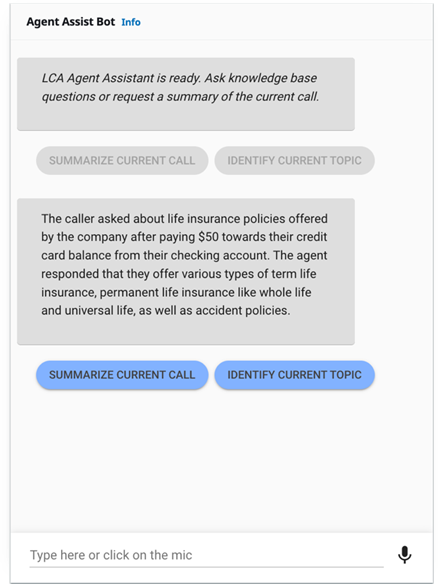

LCA also lets you invoke the summarization Lambda function during the call, because the transcript can be retrieved and a prompt created at any time, even while the call is in progress. This can be useful when a call is transferred to another agent or escalated to a supervisor. Instead of putting the customer on hold and explaining the call, the new agent can quickly read an automatically generated summary, which can include the current problem and what the previous agent tried to do to resolve it.

Call summary prompt example

You can run LLM inferences with prompt engineering to generate and improve your call summaries. You can modify the prompt templates to see what works best for the LLM you select. The following is an example of the default prompt for summarizing a transcript with LCA. We replace the {transcript} placeholder with the actual transcript of the call.
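
The placeholder substitution described above can be sketched in a few lines of Python. The template text here is a hypothetical stand-in written for this example and is not LCA's default; only the `{transcript}` placeholder comes from the description above. The helper names and the `(speaker, text)` segment shape are likewise assumptions.

```python
# Hypothetical template for illustration; it is not LCA's default text.
PROMPT_TEMPLATE = (
    "Below is a transcript of a contact center call.\n"
    "<transcript>\n{transcript}\n</transcript>\n"
    "Write a concise summary of the call."
)


def format_transcript(segments: list[tuple[str, str]]) -> str:
    """Render (speaker, text) pairs as one 'SPEAKER: text' line each."""
    return "\n".join(f"{speaker}: {text}" for speaker, text in segments)


def render_prompt(template: str, segments: list[tuple[str, str]]) -> str:
    """Substitute the transcript into the template.

    str.replace is used instead of str.format so that literal braces in the
    template or in the transcript itself are left untouched.
    """
    return template.replace("{transcript}", format_transcript(segments))
```

Keeping the template as plain data makes it straightforward to try alternative wordings for different LLMs without touching the code that assembles the transcript.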

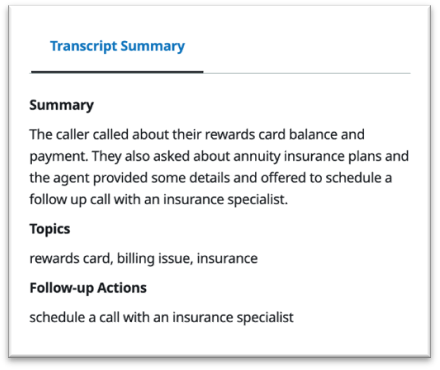

LCA runs the prompt and stores the generated summary. In addition to the summary, you can direct the LLM to generate almost any text that is important to agent productivity. For example, you can have it choose from a set of topics that were covered during the call (agent disposition), generate a list of required follow-up tasks, or even write an email to the caller thanking them for the call.

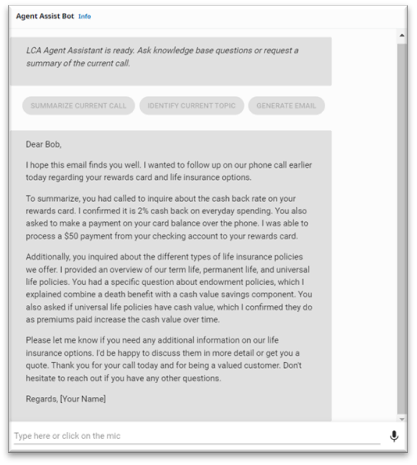

The following screenshot is an example of generating an agent’s follow-up email in the LCA UI.

With a well-designed prompt, some LLMs can generate all of this information in a single inference, reducing inference cost and processing time. The agent can then use the response, generated within a few seconds of the call ending, for their post-contact work. You can also integrate the automatically generated response into your CRM system.
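
One way to get several outputs from a single inference is to ask the model for a JSON object and parse it afterward. The following Python sketch illustrates that idea only; the instruction wording, the field names (`summary`, `topic`, `follow_ups`), and the `CallInsights` type are assumptions for this example and do not describe how LCA structures its output.

```python
import json
from dataclasses import dataclass, field

# Hypothetical instruction block to append to a summarization prompt.
JSON_INSTRUCTIONS = (
    "Respond with a single JSON object containing the keys "
    '"summary" (string), "topic" (string), and "follow_ups" (list of strings).'
)


@dataclass
class CallInsights:
    summary: str
    topic: str
    follow_ups: list[str] = field(default_factory=list)


def parse_insights(model_output: str) -> CallInsights:
    """Extract the JSON object from a model response and validate required keys."""
    # Models sometimes wrap JSON in extra prose; keep only the outermost {...} span.
    start, end = model_output.find("{"), model_output.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in model output")
    data = json.loads(model_output[start : end + 1])
    return CallInsights(
        summary=data["summary"],
        topic=data["topic"],
        follow_ups=list(data.get("follow_ups", [])),
    )
```

A structured result like this is also convenient to pass on to a CRM integration, because each field can be mapped to its own record attribute.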

The following screenshot shows an example summary in the LCA user interface.

It is also possible to generate a summary while the call is still in progress (see screenshot below), which can be especially useful for long customer calls.

Before generative AI, agents had to pay close attention to the customer while also taking notes and performing other tasks as needed. By automatically transcribing the call and using LLMs to create summaries, we can reduce the mental load on the agent, so they can focus on delivering a superior customer experience. This also leads to more accurate post-call work, because the transcript is an accurate representation of what happened during the call, not just what the agent noted down or remembered.

Summary

The sample LCA application is provided as open source. Use it as a starting point for your own solution, and help us improve it by contributing fixes and features through GitHub pull requests. To learn about deploying LCA, see Live call analytics and agent assist for your contact center with Amazon language AI services. Browse the LCA GitHub repository to explore the code, sign up to be notified of new releases, and check out the README for the latest documentation updates. For customers already on Amazon Connect, you can learn more about generative AI with Amazon Connect by reading How contact center leaders can prepare for generative AI.

About the authors

Christopher Lott is a Senior Solutions Architect on the AWS AI Language Services team. He has 20 years of experience in enterprise software development. Chris lives in Sacramento, California and enjoys gardening, aerospace, and traveling the world.

Smriti Ranjan is a Principal Product Manager on the AWS AI/ML team, focusing on language and search services. Prior to joining AWS, Smriti worked at Amazon Devices and other technology startups, leading product and growth functions. Smriti lives in Boston, MA and enjoys hiking, attending concerts, and traveling the world.
