Generative artificial intelligence (AI) models have opened up new possibilities for automating and improving software development workflows. In particular, the emerging ability of generative models to produce code from natural language prompts has changed how developers and DevOps professionals approach their work and improve their efficiency. In this post, we provide an overview of how to take advantage of advances in large language models (LLMs) using Amazon Bedrock to support developers at various stages of the software development lifecycle (SDLC).

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

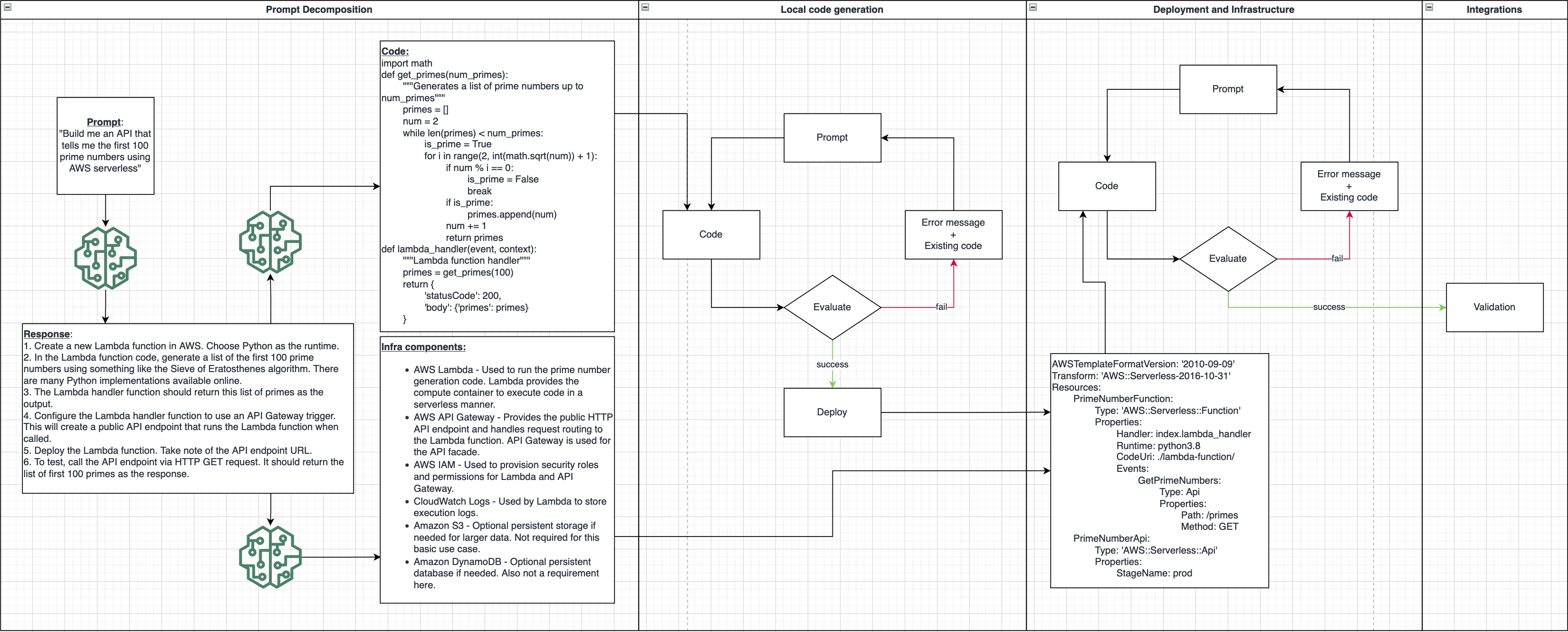

The following process architecture proposes an example SDLC flow that incorporates generative AI in key areas to improve development efficiency and speed.

This post focuses on how developers can build their own systems to augment, write, and audit code by using models within Amazon Bedrock instead of relying on off-the-shelf coding assistants. We discuss the following topics:

- A coding assistant use case to help developers write code faster by providing them with suggestions

- How to use LLM code comprehension capabilities to gain insights and recommendations

- An automated application generation use case to generate working code and automatically deploy changes to a working environment

Considerations

A few technical choices deserve attention when selecting the model and approach for each step. One is the foundation model to use for the task. Because each model has been trained on a different corpus of data, task performance inherently differs from model to model. For example, Anthropic’s Claude 3 models on Amazon Bedrock write code effectively out of the box in many common programming languages, whereas other models may not reach that performance without further customization. Customization is itself another technical choice. If your use case involves a less common language or framework, customizing the model through fine-tuning or Retrieval Augmented Generation (RAG) may be necessary to achieve production-quality performance, but it adds complexity and engineering effort to implement effectively.

A large body of literature discusses these trade-offs. In this post, we only note that they should be explored in their own right, and we lay out the context for a developer’s first steps toward a generative AI-powered SDLC.

Coding assistant

Coding assistants are a very popular use case, with a wealth of examples to choose from. AWS offers several services that can assist developers, either through inline completion from tools like Amazon CodeWhisperer, or through natural language interaction using Amazon Q. Amazon Q Developer offers several implementations of this functionality.

Almost all of the use cases described can be integrated with a chat interface and assistants. The use cases here focus on direct code generation from natural language prompts. This should not be confused with inline generation tools, which focus on autocompleting a coding task.

The main advantage of an assistant over inline generation is that you can start new projects from simple descriptions. For example, you might describe that you want a serverless website that allows users to post in a blog format, and Amazon Q can start building out the project by providing sample code and recommending frameworks to use. This natural language entry point gives you a template and framework to work from, so you can spend more time on the differentiating logic of your application rather than on setting up repeatable, off-the-shelf components.
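For teams building their own assistant on Amazon Bedrock rather than using Amazon Q, the same entry point can be sketched with the Bedrock Converse API. The following is a minimal illustration, not a reference implementation: the model ID, the system prompt, and the helper names (`build_request`, `scaffold_project`, `make_bedrock_client`) are assumptions chosen for this example.

```python
from typing import Any

# Assumed model ID for illustration; use any text model enabled in your account.
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"

# Hypothetical system prompt describing the assistant's role.
SYSTEM_PROMPT = (
    "You are a coding assistant. Given a project description, recommend "
    "frameworks and return starter code with a short explanation."
)


def build_request(description: str, model_id: str = MODEL_ID) -> dict[str, Any]:
    """Build the keyword arguments for a Bedrock Converse API call."""
    return {
        "modelId": model_id,
        "system": [{"text": SYSTEM_PROMPT}],
        "messages": [{"role": "user", "content": [{"text": description}]}],
        "inferenceConfig": {"maxTokens": 2048, "temperature": 0.2},
    }


def scaffold_project(client: Any, description: str) -> str:
    """Send the project description to the model and return its text reply."""
    response = client.converse(**build_request(description))
    return response["output"]["message"]["content"][0]["text"]


def make_bedrock_client(region: str = "us-east-1") -> Any:
    """Create a Bedrock runtime client (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helpers above work without it

    return boto3.client("bedrock-runtime", region_name=region)
```

Calling `scaffold_project(make_bedrock_client(), "A serverless website that lets users post in a blog format")` would return the model's suggested starter code as a string. Passing the client in as an argument keeps the helper easy to exercise with a stub during development.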

Understanding the code

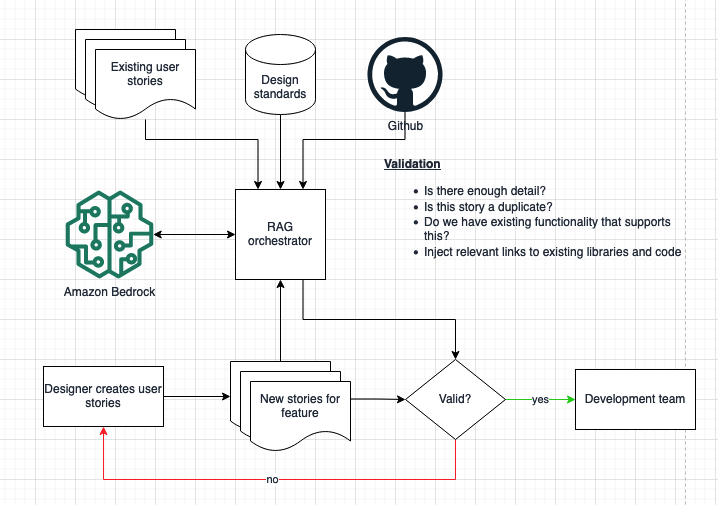

Companies that begin experimenting with generative AI to raise individual developer productivity commonly start by using LLMs to infer the meaning and functionality of code, which improves the reliability, efficiency, security, and speed of the development process. Human understanding of code is a core part of the SDLC: it underpins creating documentation, performing code reviews, and applying best practices. Onboarding new developers can be challenging even for mature teams. Instead of a more experienced developer taking the time to answer questions, an LLM with knowledge of the team’s code base and coding standards can explain sections of code and design decisions to the new team member. The onboarding developer gets the answers they need with a fast turnaround, and the senior developer can focus on building. Beyond user-facing behaviors, the same mechanism can be repurposed to work entirely behind the scenes, augmenting existing continuous integration and continuous delivery (CI/CD) processes as an additional reviewer.

For example, you can use prompt engineering techniques to guide and automate the application of coding standards, or include the existing code base as reference material for using custom APIs. As a proactive measure, you can prefix each prompt with a reminder to follow coding standards, fetch those standards from document storage, and pass them to the model as context with the prompt. As a retroactive measure, you can add a step to the review process that checks written code against the standards to enforce compliance, similar to how a team code review would work. For example, suppose one of the team's standards is to reuse components. During the review step, the model can read a new code submission, notice that the component already exists in the code base, and suggest to the reviewer that they reuse the existing component instead of recreating it.
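The proactive and retroactive steps can be sketched as two small helpers. This is an illustrative outline under stated assumptions: `STANDARDS_STORE` is a hypothetical in-memory stand-in for document storage, the prompt wording is invented for the example, and `ask_model` is any callable that sends a prompt to a model (for example, a wrapper around Amazon Bedrock) and returns its text reply.

```python
from typing import Callable

# Hypothetical stand-in for a document store holding the team's standards.
STANDARDS_STORE = {
    "python": [
        "Reuse existing components instead of re-implementing them.",
        "Every public function has a docstring.",
    ]
}


def fetch_standards(language: str) -> list[str]:
    """Fetch coding standards; a real system would query document storage."""
    return STANDARDS_STORE.get(language, [])


def with_standards(prompt: str, language: str) -> str:
    """Proactive step: prefix the prompt with the team's coding standards."""
    standards = fetch_standards(language)
    if not standards:
        return prompt
    rules = "\n".join(f"- {rule}" for rule in standards)
    return f"Follow these coding standards:\n{rules}\n\n{prompt}"


def review_submission(
    ask_model: Callable[[str], str],
    new_code: str,
    existing_components: list[str],
    language: str,
) -> str:
    """Retroactive step: ask the model to check a submission against standards."""
    components = "\n".join(f"- {name}" for name in existing_components)
    prompt = (
        "Review the submission below against the coding standards. "
        "Point out any code that duplicates an existing component.\n\n"
        f"Existing components:\n{components}\n\n"
        f"Submission:\n{new_code}"
    )
    return ask_model(with_standards(prompt, language))
```

In a CI/CD pipeline, `review_submission` could run on each pull request and post the model's reply as a review comment. How the list of existing components is produced (a static index, a search over the repository, or a RAG lookup) is left open here.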

The following diagram illustrates this type of workflow.

Application generation

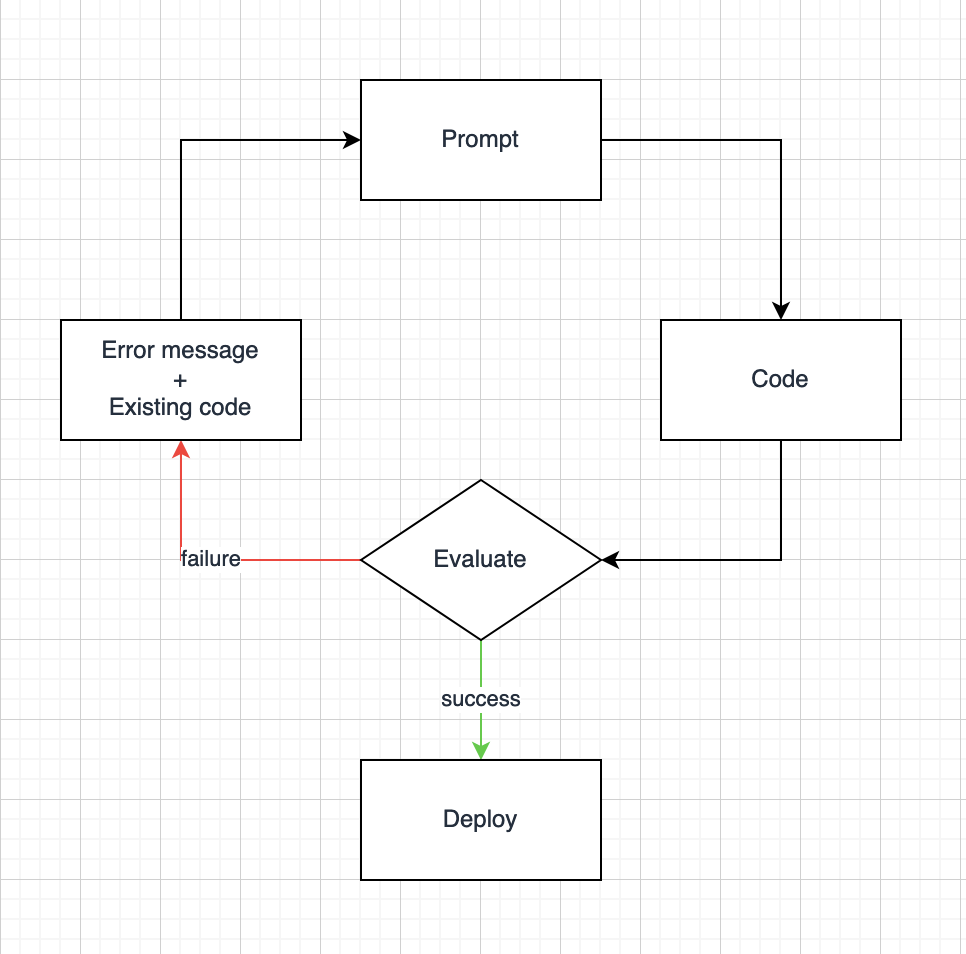

You can extend the concepts from the use cases described in this post to create a complete application generation implementation. In the traditional SDLC, a human creates a set of requirements, designs the application, writes code to implement that design, creates tests, and receives feedback on the system from external sources or people, and then the process repeats. The bottleneck in this cycle typically occurs in the implementation and testing phases. An application developer needs substantial technical skills to write code effectively, and numerous iterations are typically required to debug and refine code, even for the most skilled developers. Additionally, a foundational understanding of a company’s existing code base, APIs, and intellectual property is critical to implementing an effective solution, and that knowledge can take a long time for people to acquire. This can slow the time to innovation for new teammates or teams with technical skills gaps. As mentioned earlier, models that can both create and interpret code make it possible to build pipelines that perform the developer's iterations of the SDLC by feeding the model's outputs back in as inputs.

The following diagram illustrates this type of workflow.

For example, you can use natural language to ask a model to write an application that prints all prime numbers between 1 and 100. The model returns a block of code that can be run against applicable, predefined tests. If the program fails to run or some tests fail, the error and the failing code can be fed back into the model, asking it to diagnose the problem and suggest a solution. The next step is to take the original code, along with the diagnosis and suggested solution, and stitch the code fragments together to form a new program. The SDLC then restarts at the testing phase to obtain new results, and the pipeline either iterates again or produces a working application. Using this basic framework, an increasing number of components can be added in the same way as in a traditional human-based workflow. This modular approach can be continually improved until there is a robust and powerful application generation process that simply takes a natural language prompt and outputs a working application, handling all bug fixing and best practice enforcement behind the scenes.
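The generate, test, and repair cycle can be sketched as a short loop. This is a simplified illustration rather than a production pipeline: it folds the diagnosis and stitching steps into a single repair prompt, the function names and prompt wording are assumptions, and `ask_model` is any callable that sends a prompt to a model and returns code as text. It also executes generated code directly in a subprocess, so a real system would need to run this step in an isolated sandbox.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Callable, Optional


def run_candidate(code: str, test_code: str, timeout: int = 10) -> Optional[str]:
    """Run generated code plus its tests; return None on success, else the error."""
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        script.write_text(code + "\n\n" + test_code)
        try:
            result = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                text=True,
                timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            return f"Timed out after {timeout} seconds"
    return None if result.returncode == 0 else result.stderr


def generate_application(
    ask_model: Callable[[str], str],
    task: str,
    test_code: str,
    max_iterations: int = 3,
) -> str:
    """Ask for code, test it, and feed failures back until the tests pass."""
    prompt = f"Write a Python program for this task. Return only code.\n\nTask: {task}"
    for _ in range(max_iterations):
        code = ask_model(prompt)
        error = run_candidate(code, test_code)
        if error is None:
            return code  # all tests passed
        # Feed the failing code and its error back to the model.
        prompt = (
            "The program below failed. Diagnose the problem and return a "
            "corrected, complete program. Return only code.\n\n"
            f"Task: {task}\n\nProgram:\n{code}\n\nError:\n{error}"
        )
    raise RuntimeError(f"No passing program after {max_iterations} iterations")
```

For the prime number example, `test_code` could assert that the generated function returns 25 primes up to 100, starting with 2, 3, 5, and 7. Capping the loop with `max_iterations` keeps a model that never converges from running indefinitely.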

The following diagram illustrates this advanced workflow.

Conclusion

We are at a point in the generative AI adoption curve where teams can realize real productivity gains from the variety of techniques and tools available. In the near future, it will be imperative to take advantage of these productivity gains to remain competitive. One thing we do know is that the landscape will continue to progress and change rapidly, so building a system that is flexible and tolerant of change is critical. Developing components in a modular way provides stability in the face of an ever-changing technical landscape while leaving you ready to adopt the latest technology at each step.

For more information on how to get started developing with LLMs, check out these resources:

About the authors

Ian Lenora Ian is an experienced software development leader focused on building high-quality cloud-native software and exploring the potential of artificial intelligence. He has successfully led teams in delivering complex projects across multiple industries, optimizing efficiency and scalability. With a strong understanding of the software development lifecycle and a passion for innovation, Ian seeks to leverage AI technologies to solve complex problems and create intelligent, adaptable software solutions that drive business value.


Cody Collins Cody is a New York-based Solutions Architect at Amazon Web Services, where he collaborates with ISV customers to build cutting-edge cloud solutions. He has extensive experience delivering complex projects across a variety of industries, optimizing efficiency and scalability. Cody specializes in AI/ML technologies, enabling customers to build ML capabilities and integrate AI into their cloud applications.


Samit Kumbhani Samit is a Senior Solutions Architect at AWS in the New York City area with over 18 years of experience. He currently collaborates with Independent Software Vendors (ISVs) to build highly scalable, innovative, and secure cloud solutions. Outside of work, Samit enjoys playing cricket, traveling, and cycling.

