Anomaly detection (AD), the task of distinguishing anomalies from normal data, plays a vital role in many real-world applications, such as detecting defective products from vision sensors in manufacturing, fraudulent behavior in financial transactions, or network security threats. Depending on which types of data are available (negative (normal) vs. positive (abnormal)) and whether labels are available, the AD task involves different challenges.

|

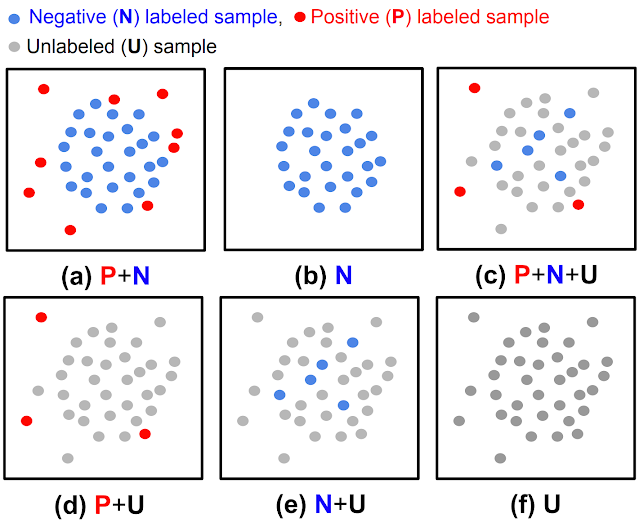

| (a) Fully supervised anomaly detection, (b) only normal anomaly detection, (c, d, e) semi-supervised anomaly detection, (f) unsupervised anomaly detection. |

While most previous work has proven effective for cases with fully labeled data (either (a) or (b) in the figure above), such settings are less common in practice because labels are particularly tedious to obtain. In most scenarios, users have a limited labeling budget, and sometimes there are no labeled samples at all during training. Furthermore, even when labeled data are available, the way samples are labeled can be biased, causing the labeled and unlabeled data to follow different distributions. Such real-world data challenges limit the achievable accuracy of previous methods in detecting anomalies.

This post covers two of our recent AD papers, published in Transactions on Machine Learning Research (TMLR), that address the above challenges in unsupervised and semi-supervised settings. Using data-centric approaches, we show state-of-the-art results in both. In “Self-supervise, Refine, Repeat: Improving Unsupervised Anomaly Detection”, we propose a novel unsupervised AD framework that relies on the principles of self-supervised learning without labels and iterative data refinement based on the agreement of one-class classifier (OCC) outputs. In “SPADE: Semi-supervised Anomaly Detection under Distribution Mismatch”, we propose a novel semi-supervised AD framework that yields robust performance even under distribution mismatch with limited labeled samples.

Unsupervised Anomaly Detection with SRR: Self-supervise, Refine, Repeat

Discovering a decision boundary for a one-class (normal) distribution (i.e., OCC training) is challenging in fully unsupervised settings, as the unlabeled training data include two classes (normal and abnormal). The challenge grows further as the anomaly ratio of the unlabeled data increases. To build a robust OCC from unlabeled data, it is critical to exclude likely-positive (anomalous) samples from the unlabeled data, a process referred to as data refinement. Refined data, with a lower anomaly ratio, are shown to yield superior anomaly detection models.
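To make the effect of data refinement concrete, here is a minimal NumPy sketch on synthetic 2-D data. It is our illustration, not code from the paper: the stand-in OCC (a standardized squared distance to the data mean), the synthetic Gaussian clusters, and the 15% drop fraction are all assumptions chosen for clarity.

```python
import numpy as np

def anomaly_scores(train, x):
    # Stand-in OCC (assumption): squared distance to the mean of `train`,
    # scaled per feature by its std. Higher score = more anomalous.
    mu = train.mean(axis=0)
    sd = train.std(axis=0) + 1e-8
    return (((x - mu) / sd) ** 2).sum(axis=1)

def refine(x, drop_frac):
    # Score every sample with an OCC fit on all unlabeled data, then drop
    # the `drop_frac` highest-scoring (likely anomalous) samples.
    scores = anomaly_scores(x, x)
    cutoff = np.quantile(scores, 1.0 - drop_frac)
    return scores < cutoff  # boolean mask of kept samples

# Synthetic unlabeled data (assumption): 900 normal + 100 anomalous points.
rng = np.random.default_rng(0)
normal = rng.normal(0.0, 1.0, size=(900, 2))
anomalous = rng.normal(5.0, 1.0, size=(100, 2))
x = np.vstack([normal, anomalous])
is_anomaly = np.r_[np.zeros(900, bool), np.ones(100, bool)]

keep = refine(x, drop_frac=0.15)
before = is_anomaly.mean()       # 0.10 by construction
after = is_anomaly[keep].mean()  # anomaly ratio of the refined data
print(f"anomaly ratio: {before:.3f} -> {after:.3f}")
```

Dropping the highest-scoring samples removes anomalies much faster than normal samples, so the refined set has a lower anomaly ratio than the original 10%.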

SRR first refines data from an unlabeled dataset, then iteratively trains deep representations using the refined data while improving the refinement of the unlabeled data by excluding likely-positive samples. For data refinement, an ensemble of OCCs is employed, each trained on a disjoint subset of the unlabeled training data. A sample is included in the refined data only if all OCCs in the ensemble predict it to be negative (normal). Finally, the refined training data are used to train the final OCC that generates the anomaly predictions.
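The consensus-based refinement loop can be sketched as follows. This is a simplified illustration under stated assumptions, not the SRR implementation: the stand-in OCC is a diagonal-Gaussian distance score, each OCC flags a fixed fraction of samples (`drop_frac`, a hypothetical hyperparameter), and the representation-learning step that SRR also repeats is omitted, so here only the OCCs' training data is refreshed each round.

```python
import numpy as np

def fit_occ(train):
    # Stand-in OCC (assumption): per-feature Gaussian fit; the score is the
    # standardized squared distance to the mean, so higher = more anomalous.
    mu, sd = train.mean(axis=0), train.std(axis=0) + 1e-8
    return lambda x: (((x - mu) / sd) ** 2).sum(axis=1)

def refine_by_consensus(x, train_mask, n_models=5, drop_frac=0.15, rng=None):
    # One refinement round: fit one OCC per disjoint subset of the current
    # training data, then keep a sample only if *every* OCC calls it normal.
    if rng is None:
        rng = np.random.default_rng(0)
    idx = rng.permutation(np.flatnonzero(train_mask))
    keep = np.ones(len(x), dtype=bool)
    for part in np.array_split(idx, n_models):
        scores = fit_occ(x[part])(x)            # score all unlabeled samples
        cutoff = np.quantile(scores, 1.0 - drop_frac)
        keep &= scores < cutoff                 # this OCC votes "normal"
    return keep

# Synthetic unlabeled data (assumption): 900 normal + 100 anomalous points.
rng = np.random.default_rng(1)
x = np.vstack([rng.normal(0.0, 1.0, (900, 2)), rng.normal(5.0, 1.0, (100, 2))])
is_anomaly = np.r_[np.zeros(900, bool), np.ones(100, bool)]

train_mask = np.ones(len(x), dtype=bool)        # round 0: all unlabeled data
for rnd in range(3):                            # "repeat"
    train_mask = refine_by_consensus(x, train_mask, rng=rng)
    print(f"round {rnd}: kept={train_mask.sum()}, "
          f"anomaly ratio={is_anomaly[train_mask].mean():.3f}")

final_occ = fit_occ(x[train_mask])              # final OCC on refined data
```

Requiring unanimity makes the refinement conservative: a sample that any single OCC finds suspicious is excluded, which trades away some normal samples for a cleaner training set.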

SRR training with a data refinement module (ensemble of OCCs), representation learner, and final OCC. (Green/red dots represent normal/abnormal samples, respectively.)

SRR results

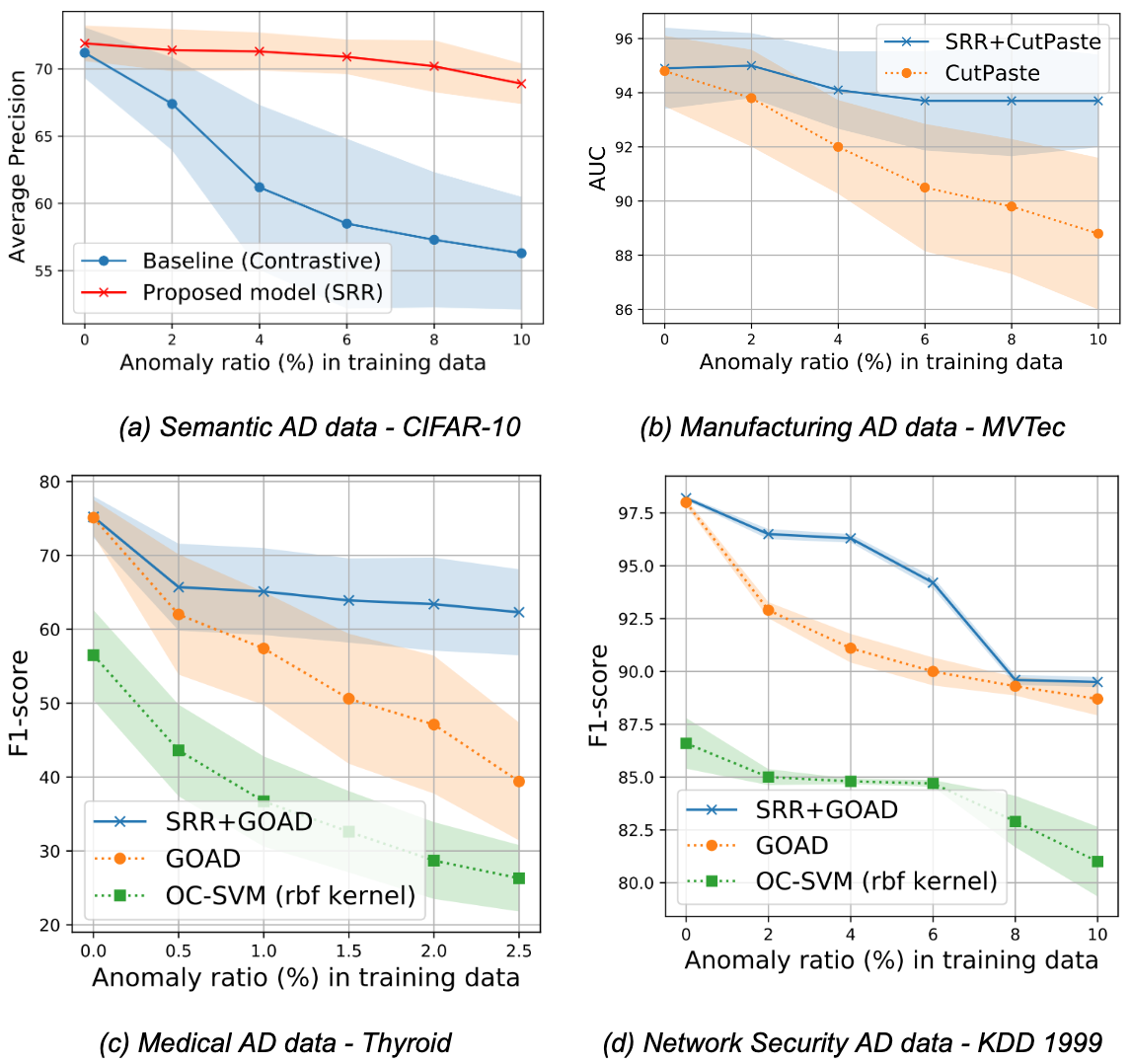

We conducted extensive experiments across various datasets from different domains, including semantic AD (CIFAR-10, Dog vs. Cat), real-world manufacturing visual AD (MVTec), and real-world tabular AD benchmarks such as detecting medical (Thyroid) or network security (KDD 1999) anomalies. We consider methods with both shallow (e.g., OC-SVM) and deep (e.g., GOAD, CutPaste) models. Since the anomaly ratio of real-world data can vary, we evaluate models at different anomaly ratios of the unlabeled training data and show that SRR significantly boosts AD performance. For example, SRR improves average precision (AP) by more than 15.0 at a 10% anomaly ratio compared to a state-of-the-art one-class deep model on CIFAR-10. Similarly, on MVTec, SRR retains solid performance, dropping less than 1.0 AUC at a 10% anomaly ratio, while the best existing OCC drops more than 6.0 AUC. Lastly, on Thyroid (tabular data), SRR outperforms a state-of-the-art one-class classifier by 22.9 F1 score at a 2.5% anomaly ratio.
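The comparisons above are reported in AUC and average precision (AP). As a refresher, the following NumPy sketch computes both from anomaly scores (higher = more anomalous) and binary labels (1 = anomaly). It is a plain illustration of the standard metric definitions, not the papers' evaluation code; the AUC uses the pairwise (Mann-Whitney) formulation, and this AP does not special-case tied scores.

```python
import numpy as np

def roc_auc(scores, labels):
    # AUC = P(score of a random anomaly > score of a random normal sample),
    # counting ties as 1/2. O(P*N) pairwise form, fine for small examples.
    pos, neg = scores[labels == 1], scores[labels == 0]
    diff = pos[:, None] - neg[None, :]
    return (diff > 0).mean() + 0.5 * (diff == 0).mean()

def average_precision(scores, labels):
    # AP = mean of precision@k over the ranks k where an anomaly is retrieved.
    order = np.argsort(-scores, kind="stable")
    hits = labels[order] == 1
    precision_at_k = np.cumsum(hits) / np.arange(1, len(hits) + 1)
    return precision_at_k[hits].mean()

scores = np.array([0.9, 0.8, 0.3, 0.1])
labels = np.array([1, 0, 1, 0])
print(roc_auc(scores, labels))                      # 0.75
print(round(average_precision(scores, labels), 4))  # 0.8333
```

AUC summarizes ranking quality over all thresholds, while AP emphasizes how cleanly the top of the ranking is populated by anomalies, which matters more when anomalies are rare.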

Across multiple domains, SRR (blue line) significantly boosts AD performance at various anomaly ratios in fully unsupervised settings.

SPADE: Semi-supervised Pseudo-labeler Anomaly Detection with Ensembling

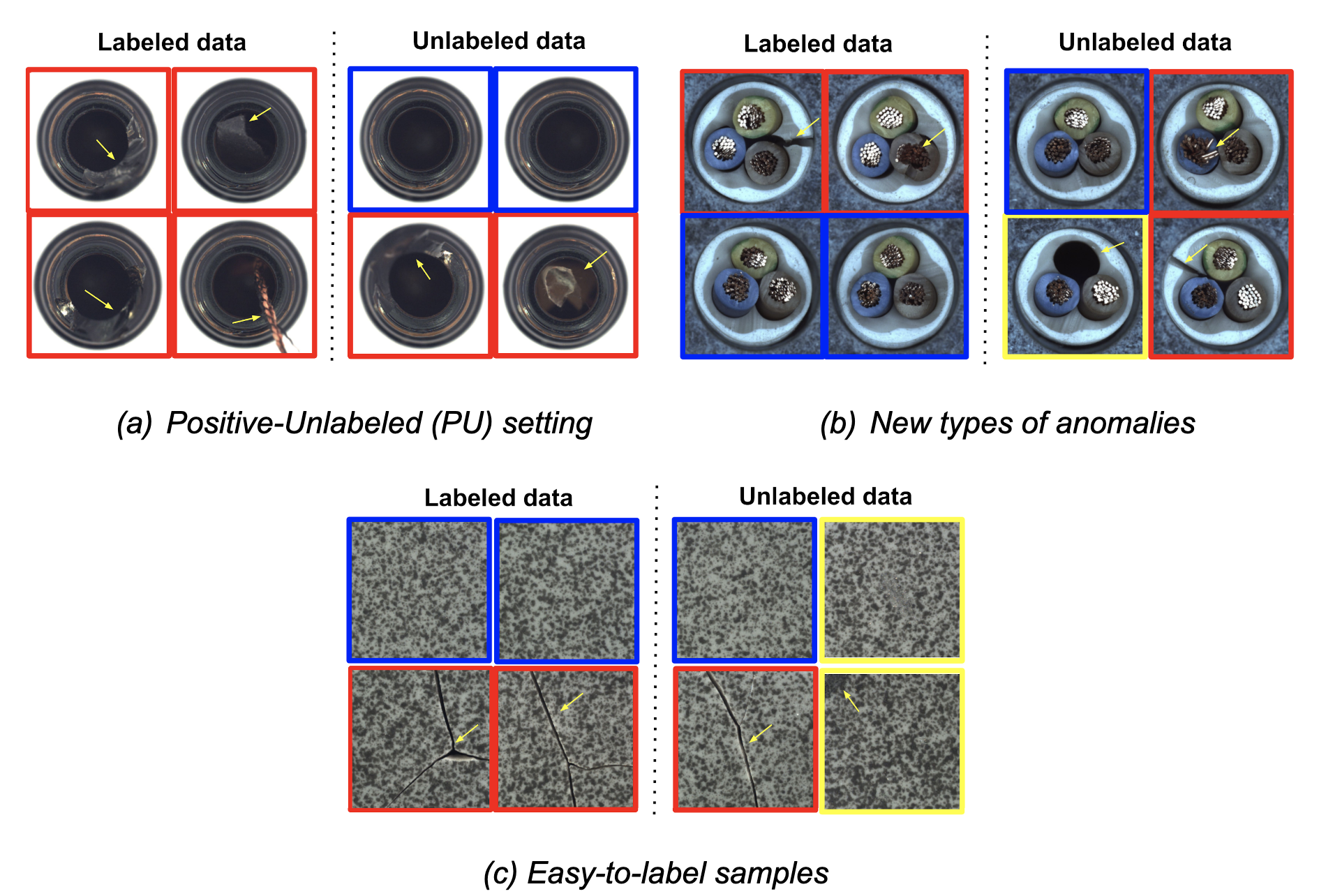

Most semi-supervised learning methods (e.g., FixMatch, VIME) assume that the labeled and unlabeled data come from the same distribution. However, in practice, distribution mismatch commonly occurs, with labeled and unlabeled data coming from different distributions. One such case is the positive and unlabeled (PU) or negative and unlabeled (NU) setting, where the distributions of the labeled (positive or negative) and unlabeled (both positive and negative) samples differ. Another cause of distribution shift is additional unlabeled data being gathered after labeling. For example, manufacturing processes may keep evolving, causing the corresponding defects to change, so that the defect types in the labeled data differ from those in the unlabeled data. Also, in applications like financial fraud detection and anti-money laundering, new anomalies can appear after the data labeling process, as criminal behavior may adapt. Lastly, labelers are more confident on easy samples when they label them; thus, easy/difficult samples are more likely to be included in the labeled/unlabeled data. For example, with some crowd-sourced labeling, only samples with some consensus on the labels (as a measure of confidence) are included in the labeled set.
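To make the "new anomaly types" mismatch concrete, the sketch below builds a labeled/unlabeled split in which labeled anomalies are drawn only from types known at labeling time, while the unlabeled pool also contains later, unseen types. The helper name, the integer type ids, and the 10% labeling fraction are hypothetical choices for illustration; they are not taken from the SPADE paper.

```python
import numpy as np

def split_new_anomaly_types(y, anomaly_type, known_types, label_frac=0.1, seed=0):
    """Hypothetical helper for the 'new anomaly types' mismatch scenario.

    y: 0 = normal, 1 = anomaly; anomaly_type: type id per sample (-1 for normals);
    known_types: anomaly types that existed at labeling time.
    Returns (labeled_idx, unlabeled_idx).
    """
    rng = np.random.default_rng(seed)
    normal = np.flatnonzero(y == 0)
    known = np.flatnonzero((y == 1) & np.isin(anomaly_type, known_types))
    # Only normals and *known* anomaly types are eligible for labeling.
    labeled = np.concatenate([
        rng.choice(normal, int(label_frac * len(normal)), replace=False),
        rng.choice(known, int(label_frac * len(known)), replace=False),
    ])
    # Everything else, including every new-type anomaly, stays unlabeled.
    unlabeled = np.setdiff1d(np.arange(len(y)), labeled)
    return np.sort(labeled), unlabeled

y = np.r_[np.zeros(900, int), np.ones(100, int)]
anomaly_type = np.r_[np.full(900, -1), np.repeat([0, 1, 2, 3], 25)]
labeled, unlabeled = split_new_anomaly_types(y, anomaly_type, known_types=[0, 1])
print(len(labeled), len(unlabeled))  # 95 905
```

A model that trusts the labeled anomalies as representative of all anomalies would never see types 2 and 3 as positives, which is exactly the bias described above.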

Three common real-world scenarios with distribution mismatch (blue box: normal samples, red box: known/easy anomaly samples, yellow box: new/hard anomaly samples).

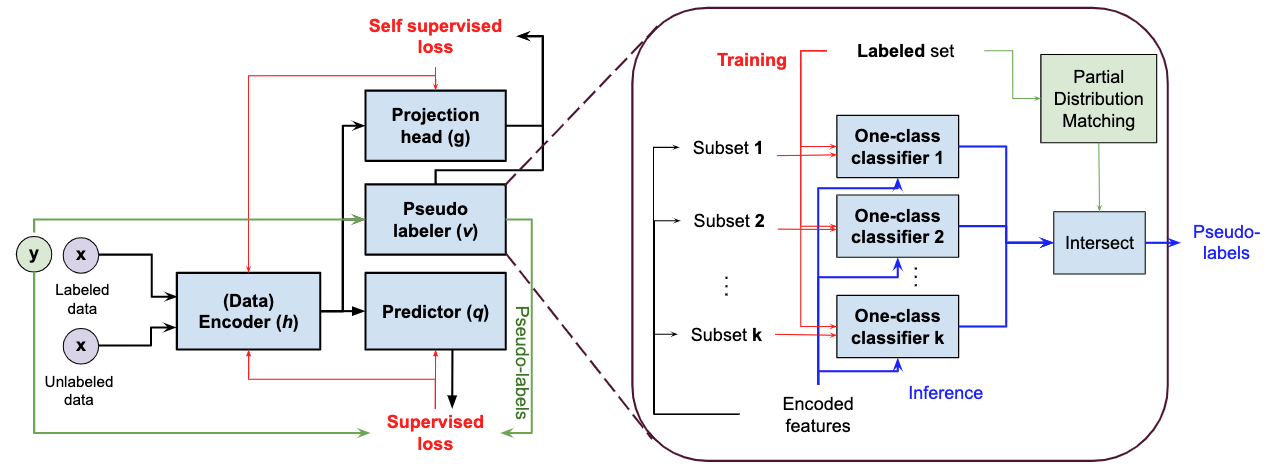

Standard semi-supervised learning methods assume that labeled and unlabeled data come from the same distribution, so they are suboptimal for semi-supervised AD under distribution mismatch. SPADE uses an ensemble of OCCs to estimate pseudo-labels of the unlabeled data; it does this independently of the given positive labeled data, thus reducing its dependency on labels. This is especially beneficial when there is a distribution mismatch. In addition, SPADE employs partial matching to automatically select the critical hyperparameters for pseudo-labeling without relying on labeled validation data, a crucial capability given the limited amount of labeled data.
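A minimal sketch of ensemble-based pseudo-labeling in the spirit described above: each OCC is fit on the labeled negatives plus one disjoint subset of the unlabeled data (never on the labeled positives), and an unlabeled sample receives a pseudo-label only when all OCCs agree. The stand-in OCC, the fixed quantile thresholds `q_neg`/`q_pos` (which SPADE instead selects automatically), and the synthetic data are assumptions, so treat this as an illustration rather than SPADE's implementation.

```python
import numpy as np

def fit_occ(train):
    # Stand-in OCC (assumption): standardized squared distance to the mean.
    mu, sd = train.mean(axis=0), train.std(axis=0) + 1e-8
    return lambda x: (((x - mu) / sd) ** 2).sum(axis=1)

def ensemble_pseudo_labels(x_neg, x_unl, n_models=5, q_neg=0.5, q_pos=0.95, seed=0):
    """Pseudo-labels for x_unl: 0 = normal, 1 = anomaly, -1 = left unlabeled."""
    rng = np.random.default_rng(seed)
    parts = np.array_split(rng.permutation(len(x_unl)), n_models)
    all_neg = np.ones(len(x_unl), dtype=bool)
    all_pos = np.ones(len(x_unl), dtype=bool)
    for part in parts:
        # Each OCC sees labeled negatives + one disjoint unlabeled subset;
        # labeled positives are deliberately not used.
        s = fit_occ(np.vstack([x_neg, x_unl[part]]))(x_unl)
        all_neg &= s <= np.quantile(s, q_neg)  # this OCC votes "normal"
        all_pos &= s >= np.quantile(s, q_pos)  # this OCC votes "anomaly"
    pseudo = np.full(len(x_unl), -1)
    pseudo[all_neg] = 0                        # unanimous "normal"
    pseudo[all_pos] = 1                        # unanimous "anomaly"
    return pseudo

rng = np.random.default_rng(2)
x_neg = rng.normal(0.0, 1.0, (100, 2))                 # labeled normals
x_unl = np.vstack([rng.normal(0.0, 1.0, (800, 2)),     # unlabeled normals
                   rng.normal(5.0, 1.0, (80, 2))])     # unlabeled anomalies
is_anomaly = np.r_[np.zeros(800, bool), np.ones(80, bool)]

pseudo = ensemble_pseudo_labels(x_neg, x_unl)
print((pseudo == 0).sum(), (pseudo == 1).sum(), (pseudo == -1).sum())
```

Samples on which the OCCs disagree stay at -1, so only high-agreement pseudo-labels would be passed on to a downstream supervised learner.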

Block diagram of SPADE, with a zoomed-in view of the proposed pseudo-labelers.
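SPADE's partial matching chooses pseudo-labeling thresholds without a labeled validation set. The sketch below is only our simplified reading of that idea, with hypothetical function names, and the paper's formulation may differ in its details: among candidate cut-offs, it picks the one whose upper tail of unlabeled OCC scores is closest, in 1-D Wasserstein distance, to the scores of the few labeled positives.

```python
import numpy as np

def w1_1d(a, b, n_grid=200):
    # 1-D Wasserstein-1 distance, approximated by comparing matched quantiles.
    u = (np.arange(n_grid) + 0.5) / n_grid
    return np.abs(np.quantile(a, u) - np.quantile(b, u)).mean()

def select_pos_threshold(s_unl, s_pos, candidate_q=None):
    # Hypothetical partial matching: only the *upper part* of the unlabeled
    # score distribution is matched against the labeled-positive scores.
    if candidate_q is None:
        candidate_q = np.linspace(0.5, 0.99, 50)
    best_t, best_d = None, np.inf
    for q in candidate_q:
        t = np.quantile(s_unl, q)
        d = w1_1d(s_unl[s_unl >= t], s_pos)
        if d < best_d:
            best_t, best_d = t, d
    return best_t

# Synthetic OCC scores (assumption): 900 normal + 100 anomalous unlabeled
# samples, plus 100 labeled positives from the same anomalous distribution.
rng = np.random.default_rng(3)
s_unl = np.r_[rng.normal(0.0, 1.0, 900), rng.normal(6.0, 1.0, 100)]
s_pos = rng.normal(6.0, 1.0, 100)

t = select_pos_threshold(s_unl, s_pos)
print(f"threshold={t:.2f}, pseudo-positive fraction={(s_unl >= t).mean():.3f}")
```

In this toy setup the selected cut-off flags roughly the true 10% anomalous tail, without any labeled validation data being used.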

SPADE results

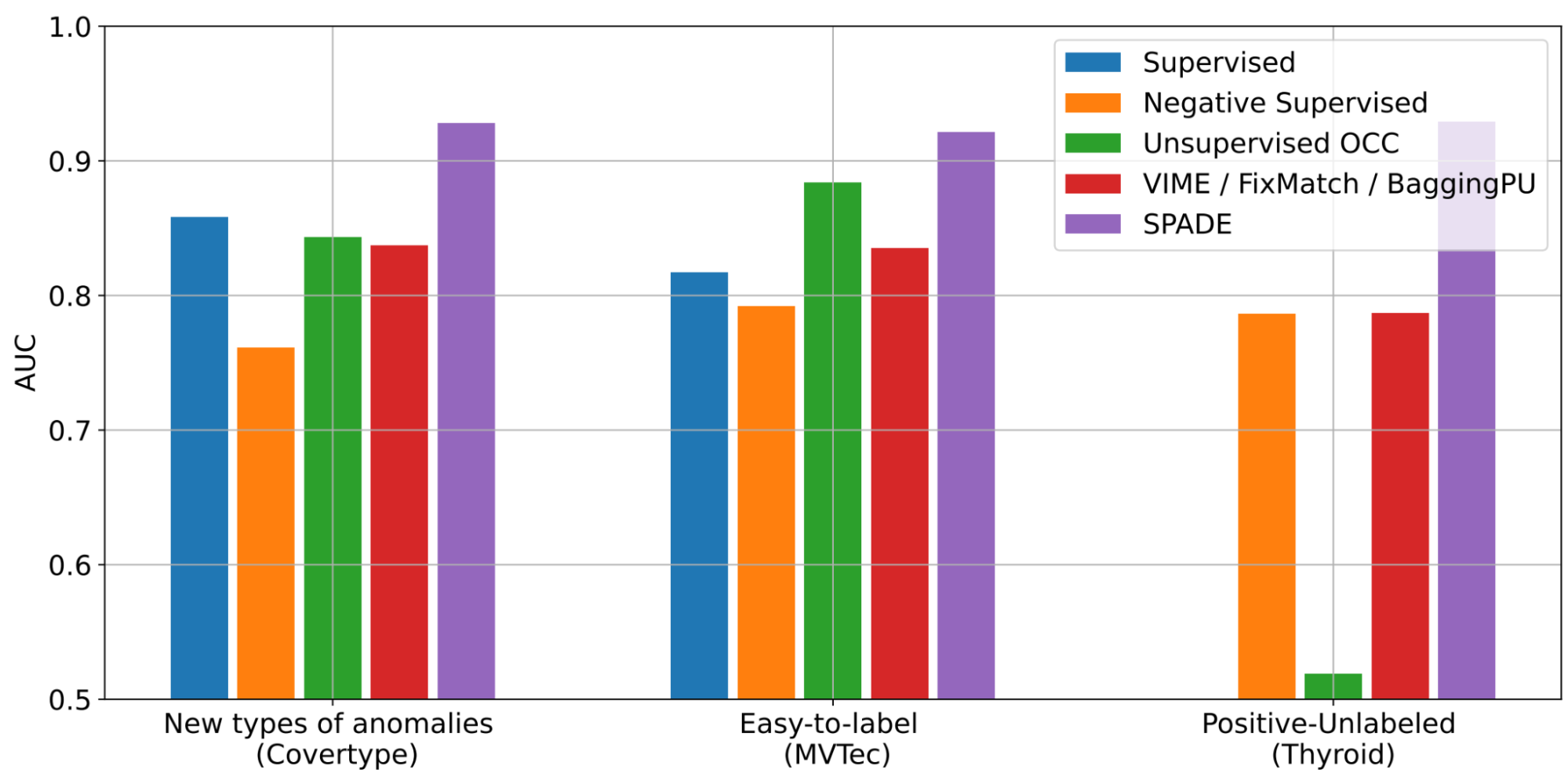

We conducted extensive experiments to show the benefits of SPADE in various real-world settings of semi-supervised learning under distribution mismatch. We consider multiple AD data sets for image (including MVTec) and tabular (including Covertype, Thyroid) data.

SPADE shows state-of-the-art semi-supervised anomaly detection performance across a wide range of scenarios: (i) new anomaly types, (ii) easy-to-label samples, and (iii) positive and unlabeled (PU) data. As shown below, with new anomaly types, SPADE outperforms the state-of-the-art alternatives by 5% AUC on average.

AD performance in three different scenarios across various data sets (Covertype, MVTec, Thyroid) in terms of AUC. Some baselines are only applicable to some scenarios. More results with other baselines and data sets can be found in the paper.

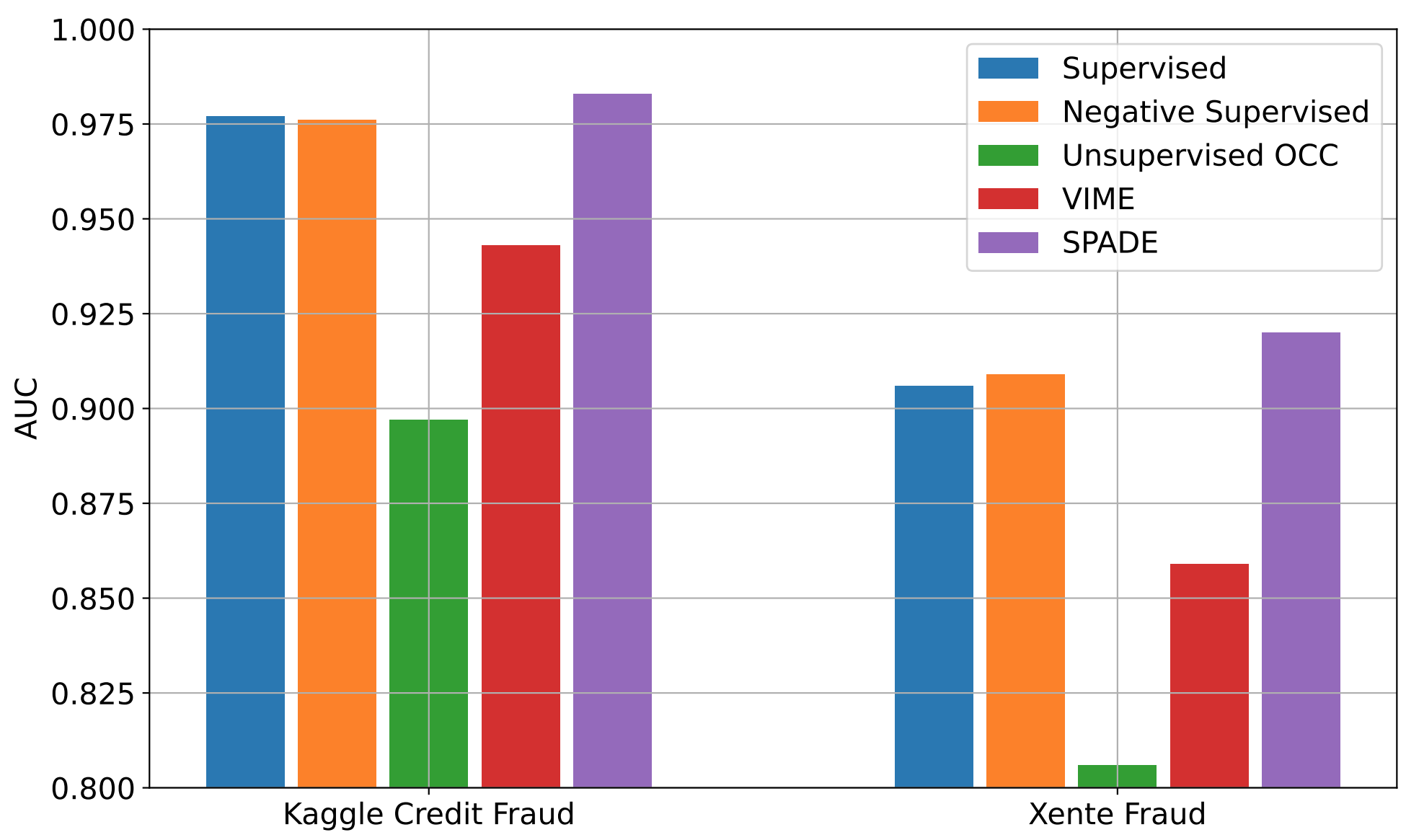

We also evaluate SPADE on real-world financial fraud detection data sets: Kaggle Credit Card Fraud and Xente fraud detection. For these, anomalies evolve (i.e., their distributions change over time), and to identify evolving anomalies, we would need to keep labeling new anomalies and retraining the AD model. However, labeling would be costly and time consuming. Even without additional labeling, SPADE can improve AD performance using both the existing labeled data and newly collected unlabeled data.

AD performance with time-varying distributions on two real-world fraud detection data sets with a 10% labeling ratio. More baselines can be found in the paper.

As shown above, SPADE consistently outperforms the alternatives in both data sets, taking advantage of unlabeled data and showing robustness to evolving distributions.

Conclusions

AD has a wide range of use cases with significant importance in real-world applications, from detecting security threats in financial systems to identifying faulty behaviors in manufacturing machines.

A challenging and costly aspect of building an AD system is that anomalies are rare and not easily detected by people. To this end, we have proposed SRR, a canonical AD framework that enables high-performance AD without the need for manual labels for training. SRR can be flexibly integrated with any OCC and applied either on raw data or on trainable representations.

Semi-supervised AD is another highly important challenge: in many scenarios, the distributions of labeled and unlabeled samples do not match. SPADE introduces a robust pseudo-labeling mechanism using an ensemble of OCCs and a judicious way of combining supervised and self-supervised learning. In addition, SPADE offers an efficient approach to selecting critical hyperparameters without a validation set, a crucial component for data-efficient AD.

Overall, we show that SRR and SPADE consistently outperform the alternatives in various scenarios across multiple types of data sets.

Acknowledgements

We are grateful for the contributions of Kihyuk Sohn, Chun-Liang Li, Chen-Yu Lee, Kyle Ziegler, Nate Yoder, and Tomas Pfister.