In our rapidly evolving digital world, the demand for instant gratification has never been higher. Whether we are looking for information, products or services, we expect our queries to be answered at lightning speed and with pinpoint accuracy. However, the pursuit of speed and accuracy often presents a formidable challenge to modern search engines.

Traditional retrieval models face a fundamental trade-off: the more accurate they are, the higher their computational cost and latency. This latency can be a deal-breaker, hurting user satisfaction, revenue, and energy efficiency. Researchers have been grappling with this conundrum, looking for ways to deliver effectiveness and efficiency in one package.

In a new study, a team of researchers from the University of Glasgow presents a solution that uses smaller, more efficient transformer models to achieve ultra-fast retrieval without sacrificing accuracy. Meet Shallow Cross-Encoders: a novel AI approach that promises to reshape the search experience.

Shallow Cross-Encoders are transformer-based rerankers with fewer layers and lower computational requirements. Like any cross-encoder, they score a query and a candidate document jointly in a single forward pass. Unlike their larger counterparts such as BERT or T5, however, these compact models can estimate the relevance of more documents within the same time budget, which can lead to better overall effectiveness in low-latency scenarios.
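To make the time-budget argument concrete, here is a minimal sketch, assuming a fixed per-document scoring cost and a first-stage candidate list (for example, from BM25) that is re-ranked from the top down. The function `rerank_within_budget`, the toy scorer, and the millisecond figures are hypothetical illustrations, not measurements or code from the paper.

```python
from typing import Callable, List, Sequence

# Hypothetical scorer signature: (query, document) -> relevance score.
Scorer = Callable[[str, str], float]


def rerank_within_budget(
    query: str,
    candidates: Sequence[str],
    score: Scorer,
    per_doc_ms: float,
    budget_ms: float,
) -> List[str]:
    """Re-rank as many top candidates as the latency budget allows.

    ASSUMPTION: every document costs the same `per_doc_ms` to score (no
    batching effects). Candidates beyond the budget keep their first-stage order.
    """
    k = min(len(candidates), int(budget_ms // per_doc_ms))
    head = sorted(candidates[:k], key=lambda doc: score(query, doc), reverse=True)
    return head + list(candidates[k:])


def toy_score(query: str, doc: str) -> float:
    # Hypothetical stand-in for a cross-encoder: document "dN" gets score N.
    return float(doc[1:])


candidates = [f"d{i}" for i in range(100)]
deep = rerank_within_budget("q", candidates, toy_score, per_doc_ms=2.5, budget_ms=25)
shallow = rerank_within_budget("q", candidates, toy_score, per_doc_ms=0.5, budget_ms=25)
print(deep[:3], shallow[:3])  # ['d9', 'd8', 'd7'] ['d49', 'd48', 'd47']
```

In this toy setup the shallow model is five times cheaper per document, so it reorders 50 candidates instead of 10 within the same 25 ms. That is the mechanism by which a weaker per-document scorer can come out ahead under a tight latency budget.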

Training these smaller models effectively is no easy task, however. Conventional techniques often produce overconfident and unstable models, which makes strong performance hard to reach. To overcome this challenge, the researchers introduced a training scheme built around gBCE (generalized Binary Cross-Entropy), which is designed to mitigate the overconfidence problem and keep training stable.

The gBCE training scheme combines two key components: (1) a larger number of negative samples per positive instance and (2) the gBCE loss function, which counteracts the effects of negative sampling. By balancing these elements, the researchers trained highly effective shallow Cross-Encoders that outperformed much larger models in low-latency scenarios.
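For readers who want to see the shape of the loss, here is a minimal sketch in plain Python for one positive document and its sampled negatives. It assumes the gBCE formulation from the authors' earlier gSASRec work, as we understand it: the positive's sigmoid is raised to a power β = α(t(1 − 1/α) + 1/α), which simplifies to 1 − t(1 − α), where α is the negative-sampling rate and t ∈ [0, 1] is a calibration parameter (t = 0 recovers ordinary binary cross-entropy). The names `pool_size` and `t`, and how α is defined for document ranking, are assumptions; the paper gives the exact settings.

```python
import math
from typing import Sequence


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def gbce_beta(num_negatives: int, pool_size: int, t: float) -> float:
    """beta = alpha * (t * (1 - 1/alpha) + 1/alpha), simplified to 1 - t * (1 - alpha).

    ASSUMPTION: alpha is the negative-sampling rate num_negatives / (pool_size - 1),
    and t in [0, 1] is a calibration knob (t = 0 gives plain BCE, t = 1 gives beta = alpha).
    """
    alpha = num_negatives / (pool_size - 1)
    return 1.0 - t * (1.0 - alpha)


def gbce_loss(pos_score: float, neg_scores: Sequence[float], beta: float) -> float:
    """gBCE for one positive logit and its sampled negative logits (assumed form)."""
    # log(sigmoid(s_pos) ** beta) == beta * log(sigmoid(s_pos))
    pos_term = beta * log_sigmoid(pos_score)
    # log(1 - sigmoid(s_neg)) == log(sigmoid(-s_neg))
    neg_term = sum(log_sigmoid(-s) for s in neg_scores)
    # Average over the positive plus all negatives.
    return -(pos_term + neg_term) / (len(neg_scores) + 1)


beta = gbce_beta(num_negatives=8, pool_size=1001, t=0.75)
print(gbce_loss(2.0, [0.5, -1.0, 0.0], beta) < gbce_loss(2.0, [0.5, -1.0, 0.0], 1.0))  # True
```

Because β < 1 shrinks the penalty on the positive, the model is pushed less hard toward predicting probabilities near 1, which is how gBCE is meant to counteract the overconfidence introduced by negative sampling. In a real training loop the same computation would run on batched tensors in a deep-learning framework.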

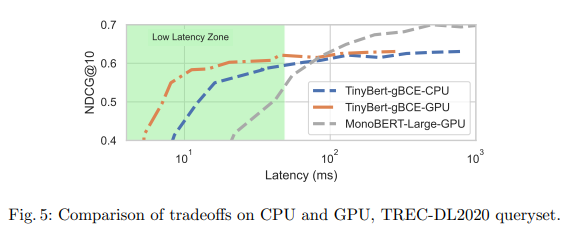

In a series of experiments, the researchers evaluated several shallow Cross-Encoder models, including TinyBERT (2 layers), MiniBERT (4 layers), and SmallBERT (4 layers), against full-size baselines such as MonoBERT-Large and MonoT5-Base. The results were striking.

On the TREC DL 2019 dataset, the TinyBERT-gBCE model achieved an NDCG@10 of 0.652 with latency limited to just 25 milliseconds, a 51% improvement over the much larger MonoBERT-Large model (NDCG@10 of 0.431) under the same latency constraint.
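NDCG@10, the metric behind these numbers, rewards placing highly relevant documents near the top of the ranking. The sketch below assumes linear gains and a log2 rank discount, one common convention; the relevance labels in the example are hypothetical. It also checks the 51% figure quoted above.

```python
import math
from typing import Sequence


def dcg_at_k(relevances: Sequence[float], k: int = 10) -> float:
    """Discounted cumulative gain over the top k ranks (linear gain, log2 discount)."""
    # The document at 1-based rank r is discounted by log2(r + 1); enumerate is 0-based.
    return sum(rel / math.log2(i + 2) for i, rel in enumerate(relevances[:k]))


def ndcg_at_k(ranked_rels: Sequence[float], judged_rels: Sequence[float], k: int = 10) -> float:
    """DCG of the system ranking divided by the DCG of the ideal ranking."""
    # The ideal ranking sorts *all* judged documents for the query by relevance.
    ideal = dcg_at_k(sorted(judged_rels, reverse=True), k)
    return dcg_at_k(ranked_rels, k) / ideal if ideal > 0 else 0.0


def relative_improvement(new: float, old: float) -> float:
    return (new - old) / old


# Hypothetical labels: the only relevant document (grade 3) is ranked second.
print(round(ndcg_at_k([0, 3], [3]), 3))  # 0.631
print(f"{relative_improvement(0.652, 0.431):.1%}")  # 51.3%
```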

The advantages of shallow Cross-Encoders also go beyond raw speed and accuracy. These compact models can offer significant savings in energy and cost. Thanks to their modest memory footprint, they can be deployed on a wide range of hardware, from data-center servers to resource-constrained edge devices, without the need for specialized hardware acceleration.

Imagine a world where your search queries are answered at lightning speed and with pinpoint accuracy, whether you're using a high-end workstation or a modest mobile device. This is the promise of Shallow Cross-Encoders, an approach that could redefine the search experience for billions of users around the world.

As the research team continues to refine and optimize this technology, we can look toward a future where the trade-off between speed and precision becomes a thing of the past. With shallow Cross-Encoders at the forefront, instant and accurate search results are no longer a distant dream but a tangible reality within our reach.

Check out the Paper. All credit for this research goes to the researchers of this project.

Vibhanshu Patidar is a Consulting Intern at MarktechPost. He is currently pursuing a bachelor's degree at the Indian Institute of Technology (IIT) Kanpur. He is a robotics and machine learning enthusiast with a knack for unraveling the complexities of algorithms that bridge theory and practical applications.
