In the dynamic realm of computer vision and artificial intelligence, a new approach challenges the traditional trend of building larger models for advanced visual understanding. The current research approach, supported by the belief that larger models produce more powerful representations, has led to the development of gigantic vision models.

Central to this exploration is a critical examination of the prevailing practice of scaling up model size. This scrutiny brings to light the significant resource expenditure and the diminishing performance returns associated with ever-expanding model architectures. It raises a pertinent question about the sustainability and efficiency of this approach, especially in a domain where computational resources are costly and limited.

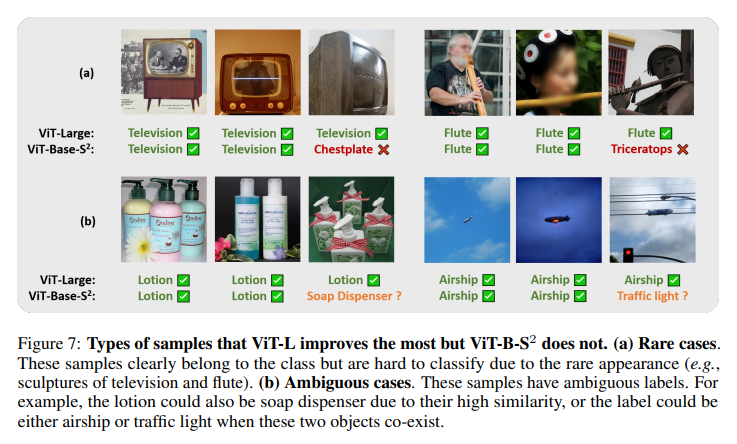

Researchers from UC Berkeley and Microsoft Research introduced a technique called Scaling on Scales (S2). This method represents a paradigm shift, proposing a strategy that diverges from traditional model scaling. By applying a pre-trained smaller vision model at multiple image scales, S2 extracts multi-scale representations, offering a new lens through which visual understanding can be improved without necessarily increasing model size.

Leveraging multiple image scales produces a composite representation that rivals or exceeds the output of much larger models. The research demonstrates the S2 technique's strength on various benchmarks, where it consistently outperforms its larger counterparts in tasks including, but not limited to, classification, semantic segmentation, and depth estimation. It establishes a new state of the art in multimodal LLM (MLLM) visual detail understanding on the V* benchmark, outperforming even commercial models such as Gemini Pro and GPT-4V, with significantly fewer parameters and comparable or reduced computational demands.
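To make the idea of a composite multi-scale representation more concrete, here is a minimal pure-Python sketch. It is not the researchers' implementation: `toy_encoder` is a hypothetical stand-in for a frozen pre-trained vision model, and the specific steps (splitting each upscaled image into base-size tiles, stitching the tile features, average-pooling back to the base grid, and concatenating channels across scales) are assumptions about how such a pipeline could be arranged, not details stated in this article.

```python
from typing import Callable, List, Sequence

Image = List[List[float]]               # square grayscale image, H x W
FeatureMap = List[List[List[float]]]    # square feature grid, h x w x C


def toy_encoder(tile: Image, patch: int = 2) -> FeatureMap:
    """Hypothetical stand-in for a frozen pre-trained vision model.

    Emits a 2-channel feature (mean, max) for every patch x patch cell.
    """
    n = len(tile) // patch
    fmap = []
    for i in range(n):
        row = []
        for j in range(n):
            cell = [tile[i * patch + di][j * patch + dj]
                    for di in range(patch) for dj in range(patch)]
            row.append([sum(cell) / len(cell), max(cell)])
        fmap.append(row)
    return fmap


def resize_nearest(img: Image, size: int) -> Image:
    """Nearest-neighbour resize of a square image to size x size."""
    src = len(img)
    return [[img[i * src // size][j * src // size] for j in range(size)]
            for i in range(size)]


def avg_pool(fmap: FeatureMap, out: int) -> FeatureMap:
    """Average-pool a square feature map down to out x out cells."""
    k = len(fmap) // out
    channels = len(fmap[0][0])
    pooled = []
    for i in range(out):
        row = []
        for j in range(out):
            block = [fmap[i * k + di][j * k + dj]
                     for di in range(k) for dj in range(k)]
            row.append([sum(v[c] for v in block) / len(block)
                        for c in range(channels)])
        pooled.append(row)
    return pooled


def multiscale_features(img: Image,
                        encoder: Callable[[Image], FeatureMap],
                        base: int = 4,
                        scales: Sequence[int] = (1, 2)) -> FeatureMap:
    """Run one fixed encoder at several image scales and merge the results."""
    n = len(encoder(resize_nearest(img, base)))  # feature grid size at base scale
    per_scale = []
    for s in scales:
        big = resize_nearest(img, base * s)
        # Encode each base-size tile with the same model, then stitch the
        # tile features into one (n*s) x (n*s) feature map.
        stitched = [[None] * (n * s) for _ in range(n * s)]
        for ti in range(s):
            for tj in range(s):
                tile = [r[tj * base:(tj + 1) * base]
                        for r in big[ti * base:(ti + 1) * base]]
                feats = encoder(tile)
                for i in range(n):
                    for j in range(n):
                        stitched[ti * n + i][tj * n + j] = feats[i][j]
        # Pool back to the base grid so every scale has the same spatial size.
        per_scale.append(avg_pool(stitched, n))
    # Concatenate channels across scales at every spatial location.
    return [[sum((fm[i][j] for fm in per_scale), []) for j in range(n)]
            for i in range(n)]


image = [[float((r * 8 + c) % 5) for c in range(8)] for r in range(8)]
features = multiscale_features(image, toy_encoder)
print(len(features), len(features[0]), len(features[0][0]))
```

In this sketch the model itself never changes size. Only the number of channels in the output grows with the number of scales, which is the sense in which the representation is "composite".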

For example, in robotic manipulation tasks, applying S2 scaling to a base-size model improved the success rate by approximately 20%, demonstrating its advantage over simply scaling up model size. With S2 scaling, the detailed understanding ability of LLaVA-1.5 reached notable accuracies, scoring 76.3% on V* Attention and 63.2% on V* Spatial. These figures underline the effectiveness of S2 and highlight its efficiency and its potential to reduce expenditure on computational resources.

This research sheds light on the increasingly pertinent question of whether relentless scaling of model size is really necessary to advance visual understanding. Through the lens of the S2 technique, it is evident that alternative scaling methods, particularly those that exploit the multi-scale nature of visual data, can provide equally compelling, if not superior, performance. This approach challenges the existing paradigm and opens new avenues for the development of scalable and resource-efficient models in computer vision.

In conclusion, the introduction and validation of the Scaling on Scales (S2) method represents a significant advance in computer vision and artificial intelligence. This research convincingly argues for a move away from the predominant expansion of model size toward a more nuanced and efficient scaling strategy that leverages multi-scale image representations. Doing so demonstrates the potential for achieving state-of-the-art performance on visual tasks and underlines the importance of innovative scaling techniques in promoting computational efficiency and resource sustainability in AI development. The S2 method, with its ability to rival or even surpass the output of much larger models, offers a promising alternative to traditional model scaling, highlighting its potential to revolutionize the field.

Review the Paper and GitHub. All credit for this research goes to the researchers of this project.


Sana Hassan, a consulting intern at Marktechpost and a dual degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, she brings a new perspective to the intersection of AI and real-life solutions.
