Natural language processing (NLP) has advanced significantly with deep learning, driven by innovations such as word embeddings and transformer architectures. Self-supervised learning, which builds pre-training tasks from large amounts of unlabeled data, has become a key approach for training models, especially in high-resource languages such as English and Chinese. A wide gap in NLP resources and performance separates these high-resource languages from low-resource ones, such as Portuguese and most of the more than 7,000 languages spoken around the world. This gap keeps NLP applications for low-resource languages from growing and becoming more robust and accessible. Furthermore, monolingual models for low-resource languages remain small-scale and undocumented, and they lack standard benchmarks, making development and evaluation difficult.

Current development methods often rely on the large amounts of data and readily available computational resources that exist for high-resource languages such as English and Chinese. Portuguese NLP mainly uses multilingual models such as mBERT, mT5, and BLOOM, or fine-tunes models trained on English. However, these methods often overlook the unique aspects of Portuguese. Evaluation benchmarks are either outdated or based on English datasets, making them less useful for Portuguese.

To address this, researchers from the University of Bonn have developed GigaVerbo, a large-scale Portuguese text corpus of 200 billion tokens, and trained a series of decoder-transformers called Tucano. These models aim to improve the performance of Portuguese-language models by leveraging a substantial, high-quality dataset.

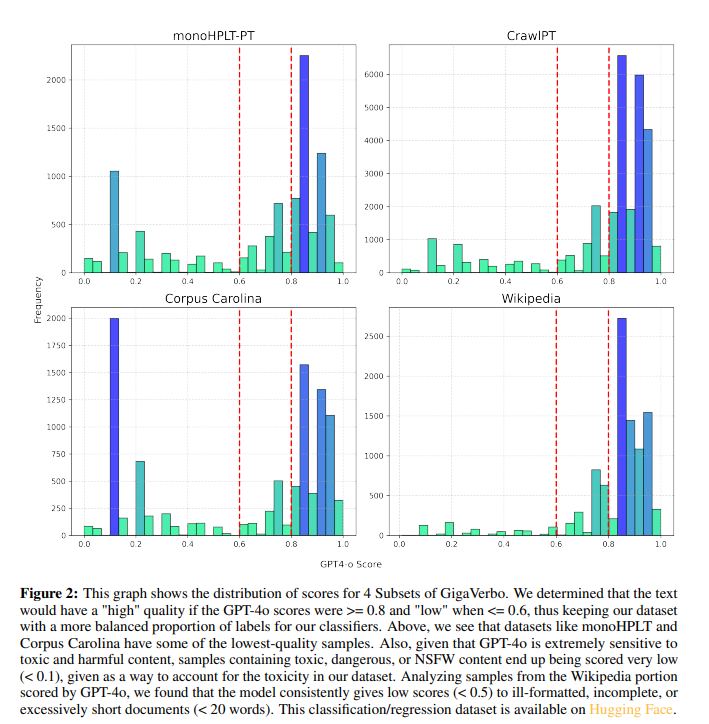

The GigaVerbo dataset is a concatenation of multiple high-quality Portuguese text corpora, refined using custom filtering techniques based on GPT-4 evaluations. The filtering process improved text preprocessing and retained 70% of the dataset for model training. Based on the Llama architecture, the Tucano models were implemented using Hugging Face for easy community access. They use RoPE embeddings, root mean square normalization, and SiLU activations in place of SwiGLU. Training used causal language modeling with a cross-entropy loss. The models range from 160 million to 2.4 billion parameters, with the largest trained on 515 billion tokens.
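To make the building blocks named above more concrete, here is a minimal pure-Python sketch of root mean square normalization, the SiLU activation, and a next-token (causal) cross-entropy loss. It is an illustration under simplifying assumptions, not the Tucano implementation: the function names, the `eps` value, and the list-based inputs are all hypothetical choices made for readability.

```python
import math


def rms_norm(x, weight, eps=1e-6):
    """Root mean square normalization: divide x by its RMS, then apply a learned gain."""
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    return [v * inv_rms * w for v, w in zip(x, weight)]


def silu(v):
    """SiLU activation, v * sigmoid(v), written with tanh for numerical stability."""
    return v * 0.5 * (1.0 + math.tanh(0.5 * v))


def causal_lm_loss(logits, token_ids):
    """Mean cross-entropy for next-token prediction.

    logits[t] holds one score per vocabulary entry, produced after the model
    has seen tokens 0..t; the target for position t is token_ids[t + 1].
    """
    if len(token_ids) < 2:
        raise ValueError("need at least two tokens to form a prediction target")
    total = 0.0
    steps = len(token_ids) - 1
    for t in range(steps):
        row = logits[t]
        target = token_ids[t + 1]
        # log-sum-exp with the max subtracted to avoid overflow
        m = max(row)
        log_z = m + math.log(sum(math.exp(s - m) for s in row))
        total += log_z - row[target]
    return total / steps
```

With uniform logits over a vocabulary of size V, `causal_lm_loss` returns log(V), which is a quick sanity check for any implementation of this loss.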

Evaluation shows that these models perform as well as or better than other Portuguese and multilingual models of similar size on various Portuguese benchmarks. Training loss and validation perplexity curves for the four base models showed that larger models generally reduced loss and perplexity more effectively, and that the effect was amplified with larger batch sizes. Checkpoints were saved every 10.5 billion tokens, and performance was tracked across multiple benchmarks. Pearson correlation coefficients between tokens ingested and benchmark scores gave mixed results: some benchmarks, such as CALAME-PT, LAMBADA, and HellaSwag, improved with scale, while others, such as the OAB exams, showed no correlation with token intake. Inverse scaling was observed in the billion-parameter models, suggesting potential limitations. The benchmarks also show that Tucano outperforms previous multilingual and Portuguese models on native evaluations such as CALAME-PT and on automatically translated tests such as LAMBADA.
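The correlation analysis described above can be sketched as follows. This is a minimal example of computing a Pearson coefficient between tokens ingested at each checkpoint and a benchmark score. The score lists are made-up values for illustration only and are not results from the paper; only the 10.5-billion-token checkpoint spacing comes from the text.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Tokens seen at five consecutive checkpoints, in billions (10.5B apart).
tokens_billions = [10.5 * k for k in range(1, 6)]

# Hypothetical benchmark scores, invented purely to show the two patterns.
scores_improving = [30.0, 32.5, 34.0, 36.5, 38.0]  # rises with training
scores_flat = [25.0, 24.0, 26.0, 24.5, 25.5]       # no clear trend

r_improving = pearson(tokens_billions, scores_improving)
r_flat = pearson(tokens_billions, scores_flat)
```

A coefficient near 1 indicates that the score rises steadily with more training tokens, while a value near 0 indicates that additional tokens did little for that benchmark.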

In conclusion, GigaVerbo and the Tucano series improve the performance of Portuguese language models. The work covers the full development process, including dataset creation, filtering, hyperparameter tuning, and evaluation, with a focus on openness and reproducibility. It also shows the potential to improve low-resource language models through large-scale data collection and advanced training techniques. These resources should help guide future studies.

Check out the Paper and Hugging Face page. All credit for this research goes to the researchers of this project.

Nazmi Syed is a consulting intern at MarktechPost and is pursuing a Bachelor of Science degree at the Indian Institute of Technology (IIT) Kharagpur. He has a deep passion for data science and is actively exploring the broad applications of artificial intelligence in various industries. Fascinated by technological advancements, Nazmi is committed to understanding and implementing cutting-edge innovations in real-world contexts.
