With the rise of language models, enormous attention has been paid to improving the learning of LMs to accelerate the learning speed and achieve a certain model performance with as few training steps as possible. This emphasis helps humans understand the limits of LMs amid their increasing computational requirements. It also promotes the democratization of large language models (LLM), benefiting the research and industry communities.

Previous works such as Pretrained Models, Past, Present, and Future, focus on designing effective architectures, using rich contexts, and improving computational efficiency. In h2oGPT: Democratizing Large Language Models, researchers attempted to create open source alternatives to closed source approaches. In Large Batch Optimization for Deep Learning: Training BERT in 76 Minutes, they attempted to overcome the computational challenge of LLMs. These previous works explore practical acceleration methods at the model, optimizer, or data level.

Researchers from CoAI Group, Tsinghua University and Microsoft Research have proposed a theory to optimize LM learning, starting with maximizing the data compression rate. They derive the Law of Learning Theorem to elucidate the optimal learning dynamics. Validation experiments on linear classification and language modeling tasks confirm the properties of the theorem. The results indicate that optimal LM learning improves the coefficients in the LM scaling laws, offering promising implications for practical learning acceleration methods.

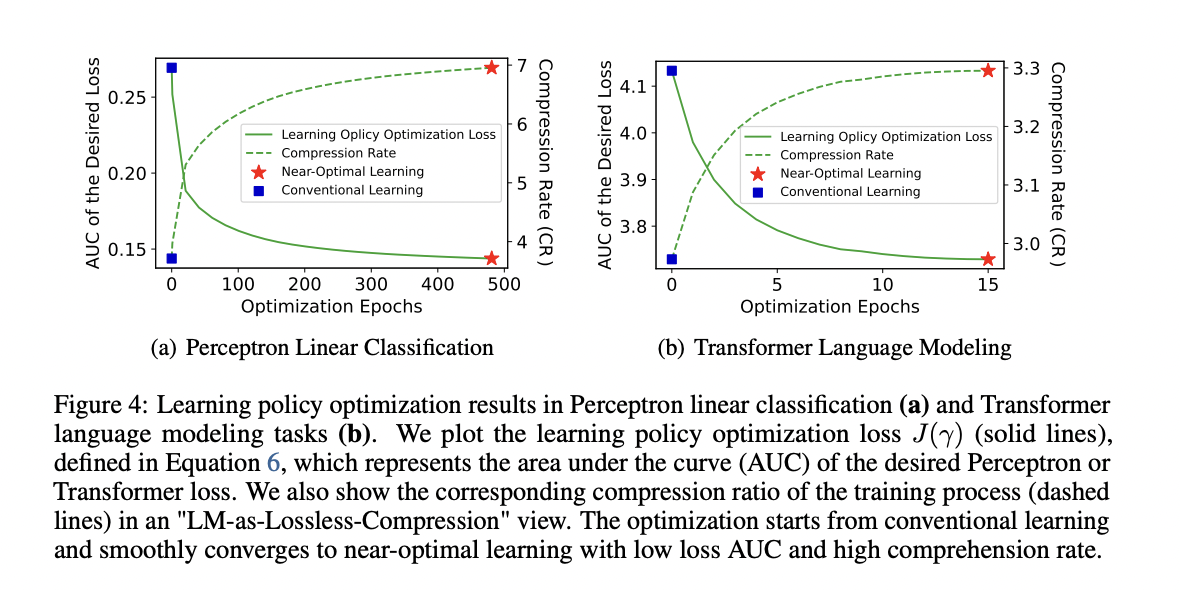

In your method (Optimal language model learning), researchers demonstrated the learning rate optimization principles of LM, including the optimization objective, the property of optimal learning dynamics, and the essential improvement of learning speedup. For the optimization objective, they have proposed to minimize the area under the curve (AUC), a learning process with the lowest AUC loss corresponds to the highest compression ratio. Then, they derived the Learning Law theorem that characterizes the property of dynamics in the LM learning process that achieves the optimality of its objective. Here, a learning policy induces a learning process that determines which data points the LM learns as training progresses.

After conducting experiments on linear classification with Perceptron and language modeling with Transformer, the researchers optimized the learning policies and validated them empirically. Near-optimal policies significantly accelerated learning, improving the loss AUC by 5.50× and 2.41× for Perceptron and Transformer, respectively. The results confirmed the theoretical predictions, demonstrating improved scaling law coefficients by up to 96.6% and 21.2%, promising faster LM training with practical significance.

In conclusion, researchers from CoAI Group, Tsinghua University and Microsoft Research proposed a theory to optimize LM learning to maximize the compression ratio. They derive the Law of Learning theorem, confirming that all examples contribute equally to optimal learning, validated in experiments. The optimal process improves the LM scaling law coefficients, guiding future acceleration methods.

Review the Paper and GitHub. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on Twitter and Google news. Join our 38k+ ML SubReddit, 41k+ Facebook community, Discord channeland LinkedIn Grabove.

If you like our work, you will love our Newsletter..

Don't forget to join our Telegram channel

You may also like our FREE ai Courses….

![]()

Asjad is an internal consultant at Marktechpost. He is pursuing B.tech in Mechanical Engineering at Indian Institute of technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching applications of machine learning in healthcare.

<!– ai CONTENT END 2 –>