A gentle introduction to the latest multimodal Transfusion model

Recently, Meta and Waymo published their latest paper, Transfusion: Predict the Next Token and Diffuse Images with One Multi-Modal Model, which integrates the popular transformer model with the diffusion model for multimodal training and prediction purposes.

Like Meta's previous work, the Transfusion model is based on the Llama architecture with early fusion, which takes both the text token sequence and the image token sequence and uses a single transformer model to generate the prediction. But unlike the prior art, the Transfusion model handles image tokens differently:

- The image token sequence is generated by the encoder part of a pre-trained variational autoencoder (VAE).

- The transformer's attention to the image sequence is bidirectional rather than causal.

Let’s discuss the following in detail. We’ll first go over the basics, such as autoregressive and diffusion models, and then dive deeper into Transfusion’s architecture.

Autoregressive models

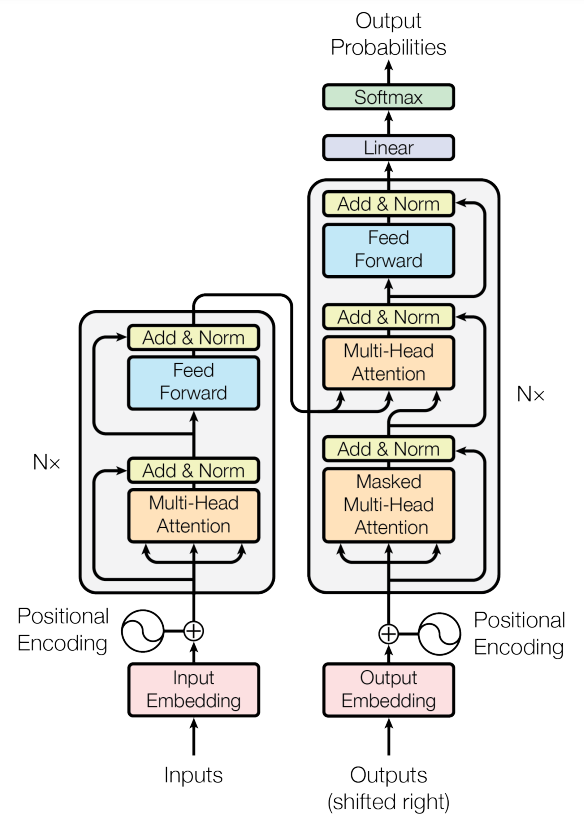

Currently, large language models (LLMs) are mainly based on the transformer architecture, which was proposed in the paper Attention Is All You Need, published in 2017. The transformer architecture contains two parts: the encoder and the decoder.

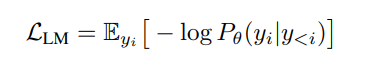

Masked language models like BERT use the encoder part pre-trained with random bidirectional masked token prediction (and next sentence prediction) tasks. For autoregressive models like the later LLMs, the decoder part is usually trained on the next token prediction task, where the LM loss is minimized:

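$$\mathcal{L}_{\text{LM}} = \mathbb{E}_{y_i}\left[-\log P_\theta\left(y_i \mid y_{<i}\right)\right]$$

In the above equation, \theta is the set of model parameters, y_i is the token at index i in a sequence of length n, and y_{<i} denotes all the tokens before y_i.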
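To make the next-token objective concrete, here is a minimal NumPy sketch of the LM loss. It assumes the model has already produced one row of logits per position, computed only from the preceding tokens; the function name `lm_loss` and the toy inputs are illustrative and not taken from the paper.

```python
import numpy as np

def lm_loss(logits, tokens):
    """Average next-token negative log-likelihood.

    logits: (n, vocab) array; row i holds the scores for token i,
            assumed to be computed from the tokens before position i only.
    tokens: (n,) integer array with the observed tokens y_1..y_n.
    """
    # Numerically stable log-softmax over the vocabulary axis.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # Select log P(y_i | y_<i) at every position and average the negatives.
    picked = log_probs[np.arange(len(tokens)), tokens]
    return -picked.mean()

# Toy usage: uniform logits over a 4-token vocabulary.
logits = np.zeros((3, 4))
tokens = np.array([0, 2, 1])
loss = lm_loss(logits, tokens)
```

With uniform logits every token has probability 1/4, so the loss equals log(4), which is a handy sanity check for an untrained model.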

Diffusion models

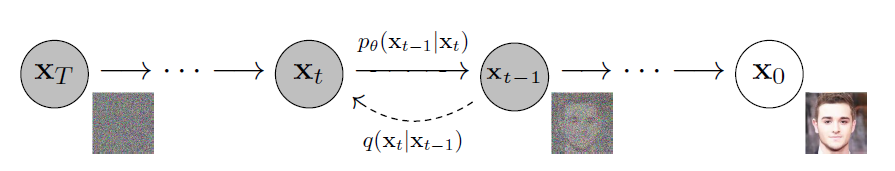

What is a diffusion model? It is a family of deep learning models commonly used in computer vision (especially in medical image analysis) for image denoising, generation, and other purposes. One of the most well-known diffusion models is DDPM, from the paper Denoising Diffusion Probabilistic Models, published in 2020. The model is a parameterized Markov chain with a forward and a reverse transition, as shown below.

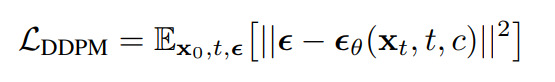

What is a Markov chain? It is a stochastic process in which each step depends only on the step before it; the reverse chain has the same property in the opposite direction. By assuming a Markov process, the model can start with a clean image and iteratively add Gaussian noise in the forward process (right -> left in the figure above), then iteratively “learn” the noise using a UNet-based architecture in the reverse process (left -> right in the figure above). This is why we can sometimes view the diffusion model as a generative model (when the reverse process starts from pure noise) and sometimes as a denoising model (when it starts from a noisy image). The DDPM loss is shown below, where \theta is the set of model parameters, \epsilon is the known noise, and \epsilon_\theta is the noise estimated by a deep learning model (usually a UNet):

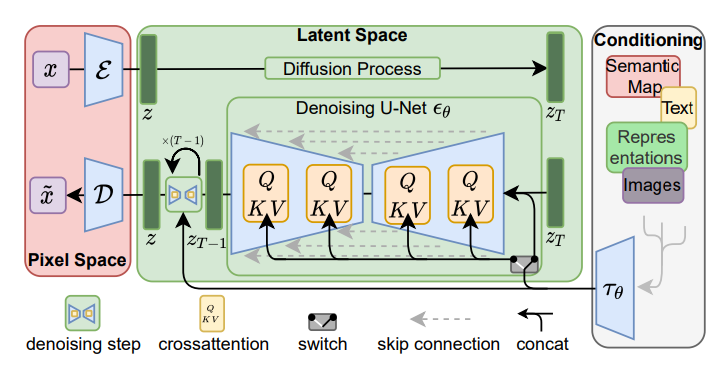
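The forward noising step and the noise-prediction loss can be sketched in a few lines of NumPy. This sketch assumes the linear noise schedule and T = 1000 steps used in the DDPM paper, and it uses the closed form that jumps from x_0 to x_t in a single step. The names `add_noise`, `ddpm_loss`, and `zero_model` are illustrative; `zero_model` is only a placeholder for a trained UNet.

```python
import numpy as np

rng = np.random.default_rng(0)

T = 1000
betas = np.linspace(1e-4, 0.02, T)    # linear schedule (assumed, as in DDPM)
alpha_bars = np.cumprod(1.0 - betas)  # cumulative product of (1 - beta_t)

def add_noise(x0, t, eps):
    """Sample x_t directly from x_0 using the closed-form forward process."""
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps

def ddpm_loss(eps_model, x0, t, eps):
    """Mean squared error between the known noise and the predicted noise.

    The mean differs from the squared L2 norm only by a constant factor.
    """
    x_t = add_noise(x0, t, eps)
    return np.mean((eps - eps_model(x_t, t)) ** 2)

def zero_model(x_t, t):
    """Placeholder noise predictor that always predicts zero noise."""
    return np.zeros_like(x_t)

x0 = rng.standard_normal((8, 8))   # stand-in for a clean image
eps = rng.standard_normal((8, 8))  # the known Gaussian noise
loss = ddpm_loss(zero_model, x0, t=500, eps=eps)
```

A perfect noise predictor would drive this loss to zero, while the zero predictor above simply returns the mean squared value of the noise.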

Latent space diffusion model

The idea of diffusion was further extended to the latent space in the CVPR'22 paper, where images are first “compressed” into the latent space using the encoder part of a pre-trained variational autoencoder (VAE). Then, the diffusion and reverse processes are performed in the latent space and mapped back to the pixel space using the decoder part of the VAE. This can greatly improve learning speed and efficiency, since most of the computation is performed in a lower-dimensional space.

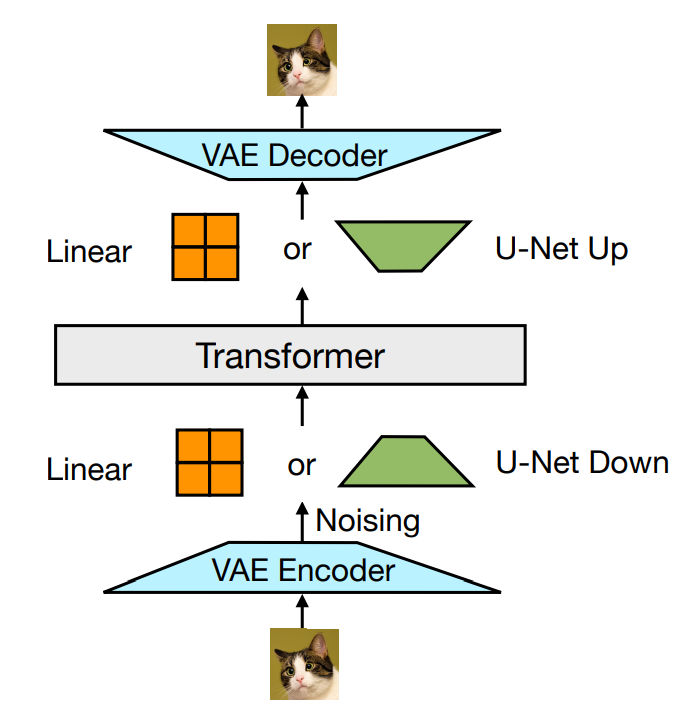

VAE-based image transfusion

The core of the Transfusion model is the fusion of diffusion and the transformer for input images. First, an image is split into a sequence of 8*8 patches, and each patch is passed to a pre-trained VAE encoder that “compresses” it into an 8-element latent vector. Second, noise is added to the latent representation, which is then processed by a linear layer or U-Net encoder to produce the “noisy” x_t. Third, the sequence of noisy latent representations is processed by the transformer model. Finally, the outputs are passed through another linear layer or U-Net decoder, and a VAE decoder then generates the “real” image x_0.
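The first step of this pipeline, as described above, can be illustrated with a small NumPy sketch that cuts an image into 8*8 patches and maps each patch to an 8-element vector. The random linear projection below is only a hypothetical stand-in for the pre-trained VAE encoder, and the names `patchify` and `toy_encoder` are made up for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

def patchify(image, patch=8):
    """Split an (H, W, C) image into a row-major sequence of patches."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)  # (rows, cols, patch, patch, C)
    return grid.reshape(-1, patch, patch, c)

def toy_encoder(patches, latent_dim=8):
    """Hypothetical stand-in for a VAE encoder: a fixed random projection."""
    flat = patches.reshape(len(patches), -1)
    weights = rng.standard_normal((flat.shape[1], latent_dim))
    return flat @ weights

image = rng.standard_normal((32, 32, 3))  # toy image
patches = patchify(image)                 # 16 patches of shape (8, 8, 3)
latents = toy_encoder(patches)            # one 8-element vector per patch
```

The resulting sequence of latent vectors is what would then be noised and handed to the transformer alongside the text tokens.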

In the actual implementation, a beginning-of-image (BOI) token and an end-of-image (EOI) token are added on either side of the image representation sequence before it is concatenated with the text tokens. Self-attention over the image tokens is bidirectional, while self-attention over the text tokens is causal. During training, the loss on the image sequence is the DDPM loss, while the text tokens use the LM loss.

So why bother? Why do we need such a complicated procedure to process image patch tokens? The paper explains that the token spaces for text and images are different. While text tokens are discrete, image tokens/patches are naturally continuous. In the prior art, image tokens need to be “discretized” before being fed into the transformer model, while directly integrating the diffusion model avoids this problem.
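The mixed attention pattern can be sketched as a boolean mask. The sketch below assumes that every position attends causally to everything before it, and that patches belonging to the same image additionally attend to each other in both directions. The function name `transfusion_mask` and the `image_id` encoding (-1 for text positions) are assumptions made for illustration.

```python
import numpy as np

def transfusion_mask(is_image, image_id):
    """Build an (n, n) mask where mask[i, j] is True if i may attend to j.

    is_image: (n,) boolean array, True at image-patch positions.
    image_id: (n,) integer array identifying which image a patch belongs to
              (ignored at text positions; -1 is used there by convention).
    """
    n = len(is_image)
    # Causal part: every position sees itself and everything before it.
    causal = np.tril(np.ones((n, n), dtype=bool))
    # Bidirectional part: two patches of the same image see each other.
    both_image = is_image[:, None] & is_image[None, :]
    same_image = both_image & (image_id[:, None] == image_id[None, :])
    return causal | same_image

# Toy sequence: two text tokens, three patches of one image, one text token.
is_image = np.array([False, False, True, True, True, False])
image_id = np.array([-1, -1, 0, 0, 0, -1])
mask = transfusion_mask(is_image, image_id)
```

In this toy sequence the first image patch can see the two later patches of the same image, but not the text token that follows the image, so text generation stays strictly autoregressive.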

Comparison with the state of the art

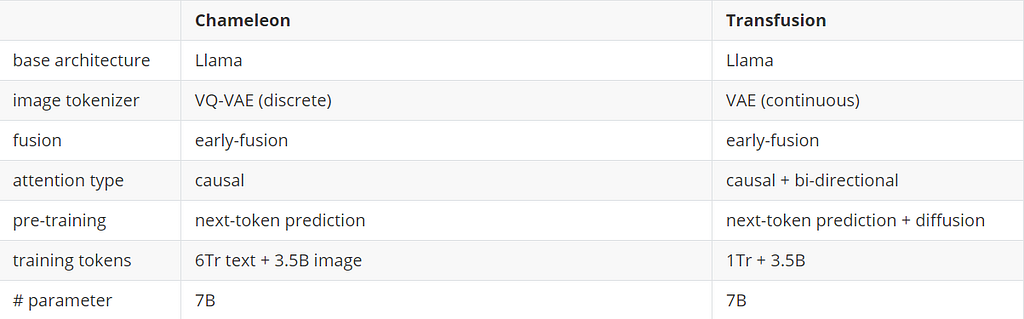

The main multimodal model that the paper compares against is the Chameleon model that Meta proposed earlier this year. Here, we compare the difference in architecture and training set size between Chameleon-7B and Transfusion-7B.

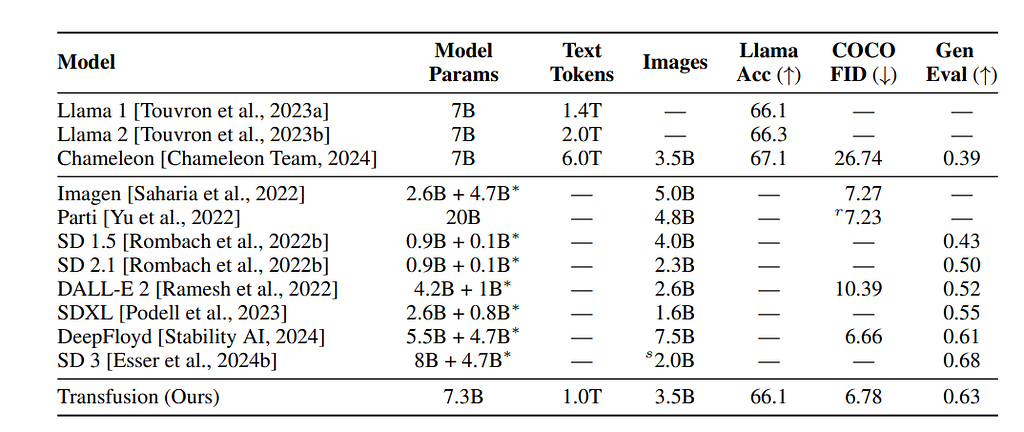

The paper reports a performance comparison in terms of accuracy on the Llama 2 pre-training evaluation suite, COCO zero-shot Fréchet Inception Distance (FID), and the GenEval benchmark. We can see that Transfusion performs much better than Chameleon on the image-related benchmarks (COCO and GenEval) while losing very little margin compared to Chameleon, with the same number of parameters.

Additional comments

Although the idea of the paper is very interesting, the “diffusion” part of Transfusion is not true diffusion, as there are only two time steps in the Markov process. In addition, the pre-trained VAE means the model is no longer strictly end-to-end. The VAE + Linear/UNet + Transformer Encoder + Linear/UNet + VAE design also looks so complicated that one can't help but wonder: is there a more elegant way to implement this idea? Finally, I previously wrote about the latest Apple paper on the generalization benefits of using autoregressive models on images, so it might be interesting to give the “MIM + autoregressive” approach a second thought.

If you find this post interesting and want to discuss it, please leave a comment. I'll be happy to continue the discussion there 🙂

References

- Zhou et al., Transfusion: Predict the Next Token and Diffuse Images with One Multi-Modal Model. arXiv 2024.

- Chameleon Team, Chameleon: Mixed-Modal Early-Fusion Foundation Models. arXiv preprint 2024.

- Touvron et al., LLaMA: Open and Efficient Foundation Language Models. arXiv 2023.

- Rombach et al., High-Resolution Image Synthesis with Latent Diffusion Models. CVPR 2022.

- Ho et al., Denoising Diffusion Probabilistic Models. NeurIPS 2020.

- Vaswani et al., Attention Is All You Need. NeurIPS 2017.

- Kingma and Welling, Auto-Encoding Variational Bayes. arXiv preprint 2013.

Transformer? Diffusion? Transfusion! was originally published in Towards Data Science on Medium.