Introduction

Emotion detection is the most important component of affective computing. It has gained significant momentum in recent years due to its applications in various fields such as psychology, human-computer interaction, and marketing. Critical to the development of effective emotion detection systems are high-quality data sets annotated with emotional labels. In this article, we delve into the six major datasets available for emotion detection. We will explore its characteristics, strengths, and contributions to the advancement of research in the understanding and interpretation of human emotions.

Key factors

When selecting data sets for emotion detection, several critical factors come into play:

- Data quality: Ensure accurate and reliable annotations.

- Emotional Diversity: Representing a wide range of emotions and expressions.

- Data volume: Sufficient samples for robust model training.

- Contextual information: Including relevant context for nuanced understanding.

- Reference status: Recognition within the research community for benchmarking.

- Accessibility: Availability and accessibility for researchers and professionals.

Top 8 Datasets Available for Emotion Detection

Here is the list of top 8 datasets available for emotion detection:

- FER2023

- AffectoNet

- CK+ (Cohn-Kanade extended)

- Make sure

- EMOTIC

- Google Facial Expression Comparison Dataset

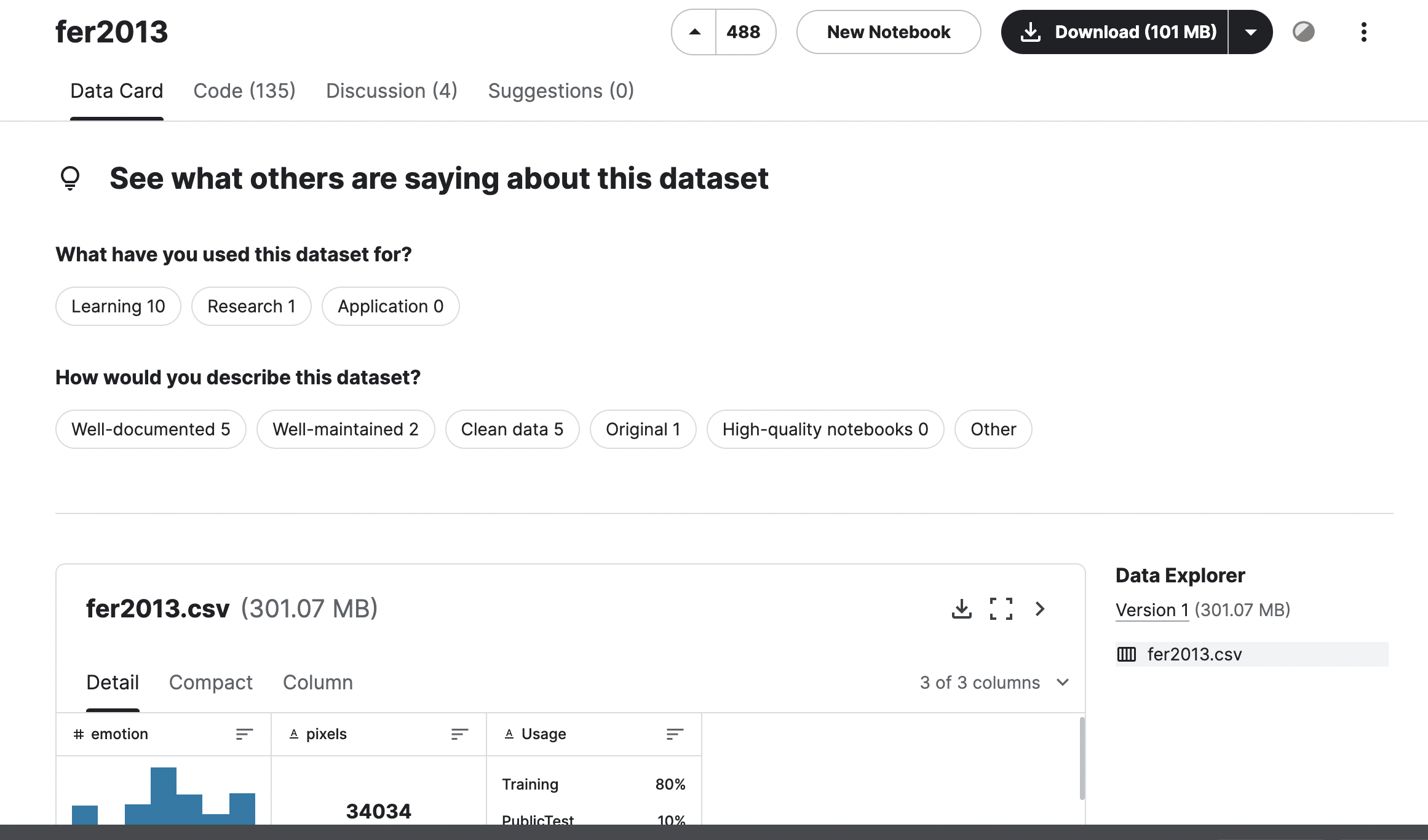

FER2013

The FER2013 dataset is a collection of grayscale facial images. Each image measures 48 × 48 pixels and is marked with one of seven basic emotions: anger, disgust, fear, happiness, sadness, surprise, or neutral. It comprises a total of more than 35,000 images, making it an important resource for emotion recognition research and applications. Originally selected for the Kaggle facial expression recognition challenge in 2013. This dataset has since become a standard benchmark in the field.

Why use FER2013?

FER2013 is a widely used benchmark dataset for evaluating facial expression recognition algorithms. It serves as a reference point for various models and techniques, promoting innovation in emotion recognition. Its extensive data corpus helps machine learning professionals train robust models for various applications. Accessibility promotes transparency and knowledge sharing.

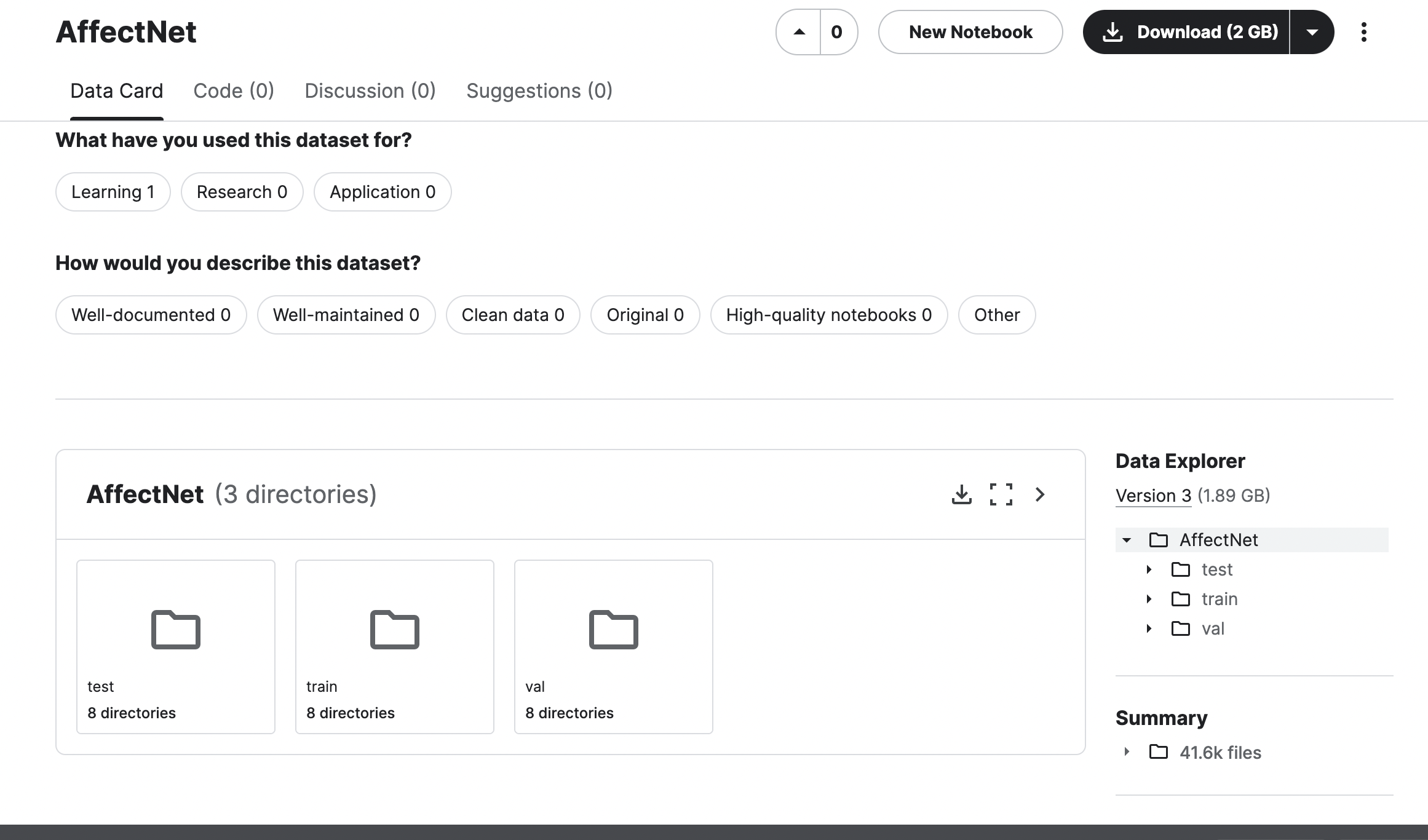

AffectoNet

Anger, disgust, fear, pleasure, sadness, surprise and neutrality are the seven basic emotions annotated in over a million facial photographs on AffectNet. The dataset ensures diversity and inclusion in the representation of emotions by covering a wide range of demographics, including ages, genders, and races. With precise labeling of each image relative to its emotional state, realistic annotations are provided for training and evaluation.

Why use AffectNet?

In facial expression analysis and emotion recognition, AffectNet is essential as it provides a reference data set to evaluate the performance of algorithms and helps academics create new strategies. It is essential for building robust emotion recognition models for use in affective computing and human-computer interaction, among other applications. AffectNet's contextual richness and broad coverage ensure the reliability of trained models in practical environments.

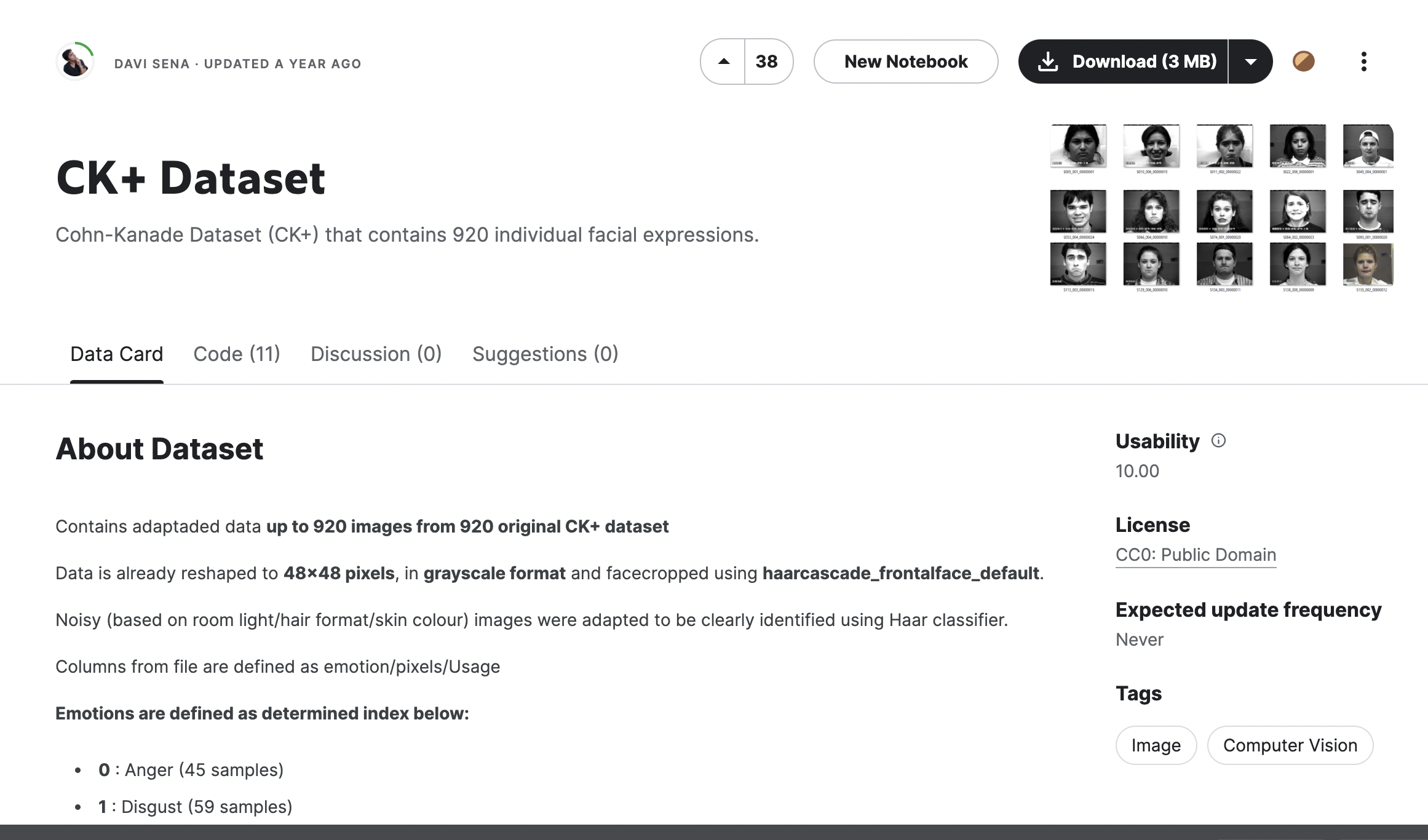

CK+ (Cohn-Kanade extended)

An expansion of the Cohn-Kanade dataset created especially for tasks involving emotion identification and facial expression analysis is called CK+ (Extended Cohn-Kanade). It includes a wide variety of expressions on faces that were photographed in a laboratory under strict guidelines. Emotion recognition algorithms can benefit from the valuable data that CK+ offers as it focuses on spontaneous expressions. CK+, an important resource for affective computing scholars and practitioners, also provides comprehensive annotations such as emotion labels and facial landmark locations.

Why use CK+ (Extended Cohn-Kanade)?

CK+ is a renowned dataset for facial expression analysis and emotion recognition, offering a rich collection of spontaneous facial expressions. Provides detailed annotations for accurate training and evaluation of emotion recognition algorithms. CK+'s standardized protocols ensure consistency and reliability, making it a trusted resource for researchers. It serves as a benchmark for comparing facial expression recognition approaches and opens new research opportunities in affective computing.

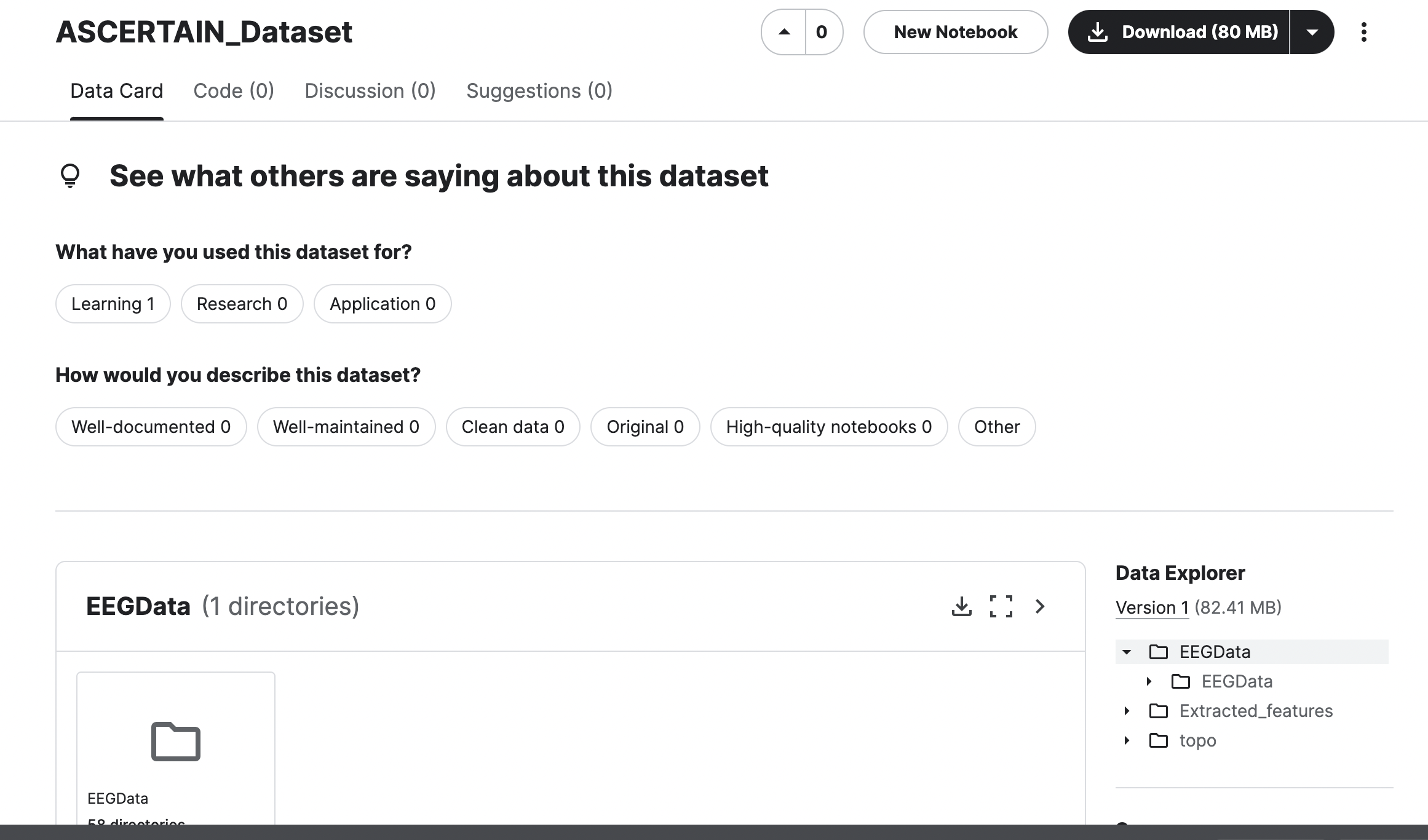

Make sure

Ascertain is a curated dataset for emotion recognition tasks, featuring various facial expressions with detailed annotations. Its inclusiveness and variability make it valuable for training robust models applicable in real-world scenarios. Researchers benefit from its standardized framework to benchmark and advance emotion recognition technology.

Why use Determine?

Ascertain offers several advantages for emotion recognition tasks. Its diverse and well-annotated dataset provides a rich source of facial expressions for training machine learning models. By leveraging Ascertain, researchers can develop more accurate and robust emotion recognition algorithms capable of handling real-world scenarios. Furthermore, its standardized framework makes it easy to benchmark and compare different approaches, driving advances in emotion recognition technology.

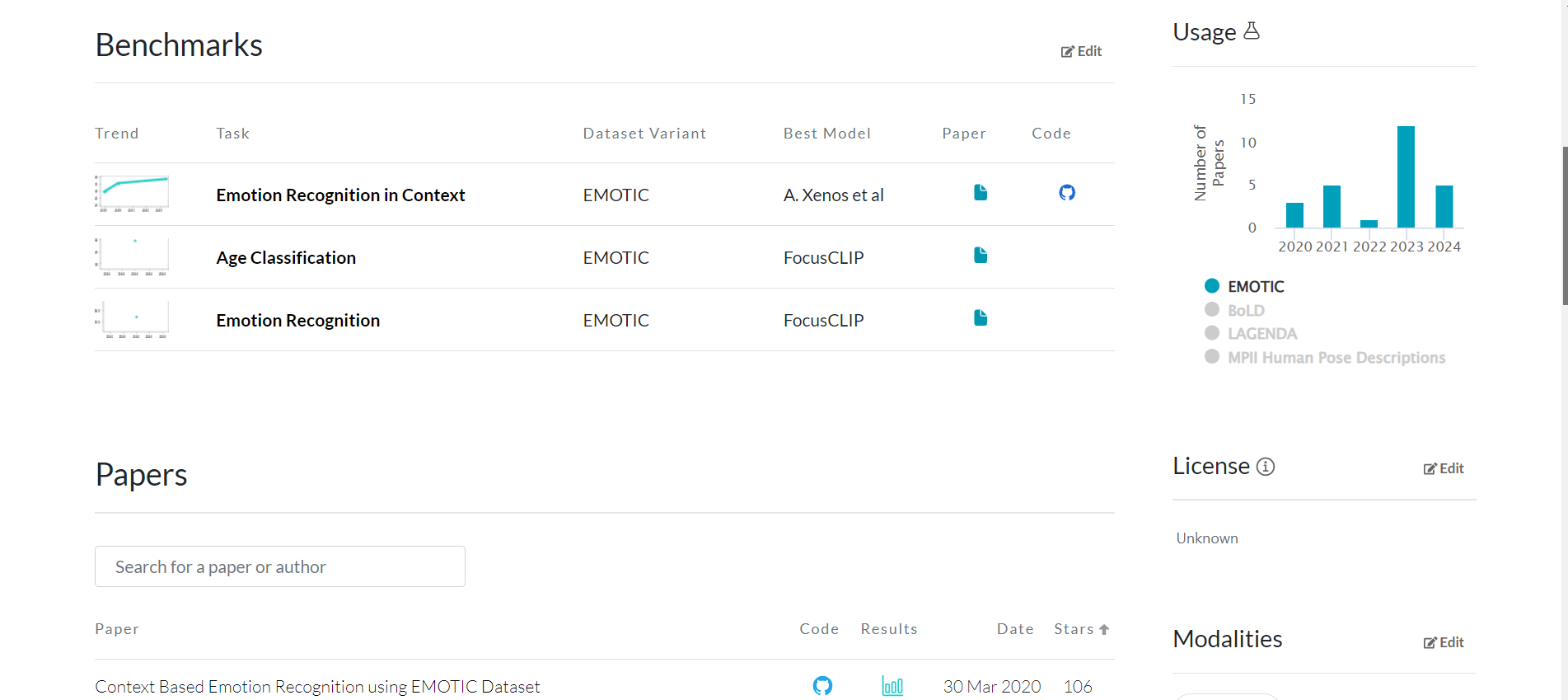

EMOTIC

The EMOTIC dataset was created with contextual understanding of human emotions in mind. It features images of people doing different things and movements. Captures a variety of interactions and emotional states. The dataset is useful for training emotion recognition algorithms in practical situations. Since it is annotated with both thick and fine emotion labels. EMOTIC's contextual understanding approach makes it possible for researchers to create more complex emotion identification algorithms. This improves its usability in real-world applications such as affective computing and human-computer interaction.

Why use EMOTIC?

Because EMOTIC focuses on contextual knowledge, it is useful for training and testing emotion recognition models in real-world situations. This facilitates the creation of more sophisticated and context-aware algorithms, improving their suitability for real-world uses such as affective computing and human-computer interaction.

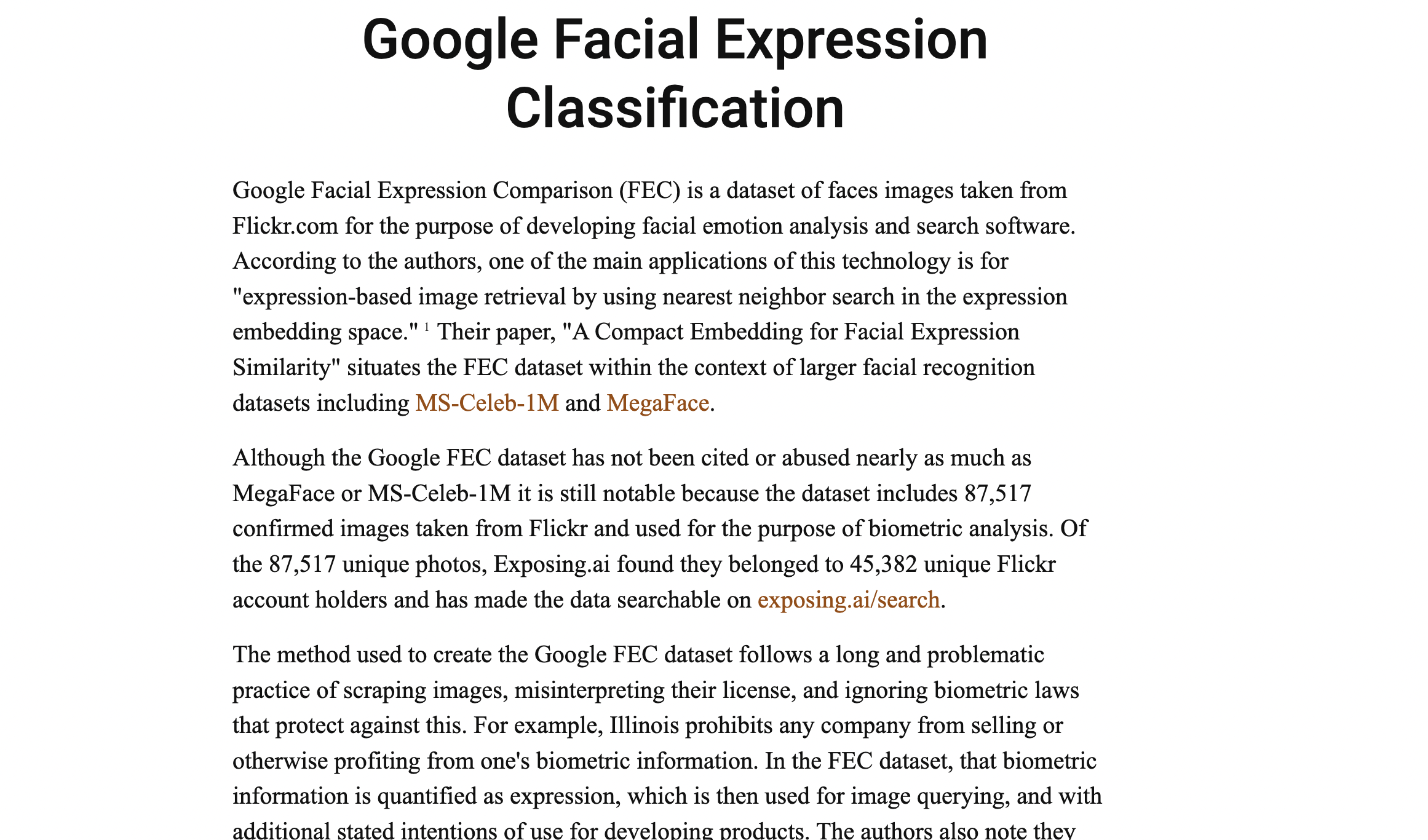

Google Facial Expression Comparison Dataset

A wide range of facial expressions are available for training and testing facial expression recognition algorithms in the Google Facial Expression Comparison (GFEC) dataset. With annotations for different expressions, it allows researchers to create robust models that can accurately recognize and categorize facial expressions. Facial expression analysis is advancing thanks to GFEC, which is a wonderful resource with a wealth of data and annotations.

Why use GFEC?

With its wide variety of expressions and extensive annotations, the Google Facial Expression Comparison (GFEC) dataset is an essential resource for facial expression recognition research. It acts as a standard, facilitating algorithm comparisons and driving improvements in facial expression recognition technology. GFEC is important because it can be used in real-world situations, such as emotional computing and human-computer interaction.

ai/google_fec/” target=”_blank” rel=”noreferrer noopener nofollow”>Get the data set here.

Conclusion

High-quality data sets are crucial for emotion detection and facial expression recognition research. The eight core data sets offer unique features and strengths and meet diverse research needs and applications. These data sets drive innovation in affective computing, improving the understanding and interpretation of human emotions in diverse contexts. As researchers take advantage of these resources, we expect more advances in the field.

You can read more articles from our list here.