Transformers have reshaped artificial intelligence, delivering leading performance in natural language processing (NLP), computer vision, and multimodal data integration. Their attention mechanisms excel at identifying patterns within data, which makes them well suited to complex tasks. However, scaling transformers remains expensive because of the high computational cost of their traditional structure. As these models grow, they demand substantially more hardware and training time, and both rise steeply with model size. Researchers have set out to address these limitations by developing more efficient methods for managing and scaling transformer models without sacrificing performance.

The main obstacle to scaling transformers lies in the fixed number of parameters in their linear projection layers. Because this structure is static, the model cannot grow without being retrained from scratch, which becomes increasingly expensive as model sizes increase. Architectural modifications such as increasing channel dimensions typically require comprehensive retraining. Consequently, the computational cost of each expansion becomes impractical, and the approach lacks flexibility. The inability to add new parameters dynamically hinders growth, making these models less adaptable to evolving AI applications and more costly in time and resources.

Historically, approaches to model scalability have included duplicating weights or restructuring models with methods such as Net2Net, which expands layers by duplicating neurons. However, these approaches often disrupt the balance of pre-trained models, resulting in slower convergence and additional training complexity. While these methods have made incremental progress, they still struggle to preserve model integrity during scaling. Because transformers rely heavily on static linear projections, expanding their parameters is costly and inflexible, and large transformers such as GPT models are often retrained from scratch at each new scaling stage, incurring high computational costs.
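For context, the Net2Net-style widening mentioned above can be sketched in a few lines of plain Python. This is a simplified illustration of the function-preserving idea behind Net2Net's "wider" operation on a hypothetical one-hidden-layer ReLU network, not a reference implementation: each new hidden unit copies the incoming weights of a randomly chosen existing unit, and the outgoing weights of every replicated unit are divided by its copy count, so the widened network initially computes the same function. The helper names (`mlp_forward`, `net2wider`) and all sizes are illustrative; the original method also typically adds small noise to break symmetry, which this sketch omits.

```python
import random


def relu(v):
    return [max(0.0, a) for a in v]


def mlp_forward(x, w1, w2):
    """One-hidden-layer ReLU MLP: w1 is (hidden x in), w2 is (out x hidden)."""
    h = relu([sum(wi * xi for wi, xi in zip(row, x)) for row in w1])
    return [sum(wj * hj for wj, hj in zip(row, h)) for row in w2]


def net2wider(w1, w2, new_width, rng):
    """Widen the hidden layer by duplicating randomly chosen hidden units.

    Copies share the same incoming weights; each replicated unit's outgoing
    weights are divided by its copy count so the output is preserved.
    """
    old_width = len(w1)
    if new_width < old_width:
        raise ValueError("new_width must be >= current width")
    # Existing units map to themselves; extra units map to random existing ones.
    mapping = list(range(old_width)) + [
        rng.randrange(old_width) for _ in range(new_width - old_width)
    ]
    counts = [mapping.count(j) for j in range(old_width)]
    new_w1 = [list(w1[src]) for src in mapping]
    new_w2 = [[row[src] / counts[src] for src in mapping] for row in w2]
    return new_w1, new_w2


# Hypothetical network: 3 inputs, 4 hidden units, 2 outputs.
rng = random.Random(0)
w1 = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(4)]
w2 = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(2)]
x = [0.5, -1.0, 2.0]

before = mlp_forward(x, w1, w2)
w1_wide, w2_wide = net2wider(w1, w2, new_width=6, rng=rng)
after = mlp_forward(x, w1_wide, w2_wide)
```

The output matches before and after widening (up to floating-point rounding); the convergence issues the article describes arise once training resumes from this duplicated, symmetric starting point.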

Researchers from the Max Planck Institute, Google, and Peking University developed a new architecture called Tokenformer. It reinvents the transformer by treating model parameters as tokens, allowing dynamic interactions between input tokens and parameters. Tokenformer introduces a token-parameter attention (Pattention) layer, which enables incremental scaling: the model can add new parameter tokens without being retrained from scratch, sharply reducing training costs. By representing input tokens and parameters within the same framework, Tokenformer scales flexibly, giving researchers a more resource-efficient architecture that preserves high performance.
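To make "parameters as tokens" concrete, here is a minimal plain-Python sketch with hypothetical sizes and values. Input tokens `X` act as queries, and the layer's parameters are stored as a set of key parameter tokens `K_P` and value parameter tokens `V_P`. If the nonlinear normalization that Tokenformer applies to the scores is dropped (a simplification made here purely for intuition), scoring every input token against every key token and mixing the value tokens collapses to an ordinary linear projection with weight matrix `W = K_P^T V_P`. The names `X`, `K_P`, `V_P`, and `pattention_linear` are illustrative, not taken from the Tokenformer code.

```python
def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m):
    return [list(col) for col in zip(*m)]


# Hypothetical sizes: T = 2 input tokens, d_in = 3, d_out = 2, n = 4 parameter tokens.
X = [[1.0, 0.0, 2.0], [0.5, -1.0, 1.0]]  # input tokens (queries), T x d_in
K_P = [[0.1, 0.2, 0.3], [0.0, 1.0, -1.0], [0.5, 0.5, 0.0], [-0.2, 0.1, 0.4]]  # n x d_in
V_P = [[1.0, 0.0], [0.0, 1.0], [0.5, -0.5], [0.2, 0.3]]  # n x d_out


def pattention_linear(x, k_p, v_p):
    """Token-parameter attention with the score normalization removed."""
    scores = matmul(x, transpose(k_p))  # T x n: each input token scores each parameter token
    return matmul(scores, v_p)  # T x d_out: weighted sum of value parameter tokens


out_tokens = pattention_linear(X, K_P, V_P)
W = matmul(transpose(K_P), V_P)  # equivalent d_in x d_out projection matrix
out_linear = matmul(X, W)
```

The two outputs agree up to rounding. The point of the design is that the number of parameter tokens `n` is a free axis: it can grow without changing `d_in` or `d_out`, which a fixed weight matrix cannot do.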

Tokenformer's Pattention layer uses input tokens as queries and model parameters as keys and values, whereas the standard transformer computes these interactions with fixed linear projections. The model is scaled by adding new key-value parameter pairs, which keeps the input and output dimensions constant and avoids complete retraining. The architecture is modular, so researchers can extend the model by incorporating additional parameter tokens. This incremental scaling reuses previously trained weights while allowing rapid adaptation to new datasets or larger models without discarding what the model has already learned.
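The scaling step can be illustrated with a minimal plain-Python sketch for a single input token. The assumptions here are ours: scores are normalized by dividing by their L2 norm, multiplying by a fixed scale factor `tau`, and applying GeLU (our reading of the paper's modified softmax, with `tau` held constant for simplicity); the added key and value parameter tokens are zero-initialized; and the names `pattention` and `grow`, along with all sizes and values, are hypothetical. Under these assumptions a zero key yields a zero score, GeLU(0) = 0, and the new tokens contribute nothing, so the output is unchanged immediately after growth while its dimensions stay fixed.

```python
import math


def gelu(z):
    # Exact GeLU via the Gaussian CDF.
    return 0.5 * z * (1.0 + math.erf(z / math.sqrt(2.0)))


def pattention(x, keys, values, tau):
    """Token-parameter attention for one input token x (assumed normalization).

    Scores against all key parameter tokens are L2-normalized, scaled by a
    fixed tau, and passed through GeLU instead of a softmax.
    """
    scores = [sum(xi * ki for xi, ki in zip(x, k)) for k in keys]
    norm = math.sqrt(sum(s * s for s in scores)) + 1e-12  # epsilon avoids divide-by-zero
    weights = [gelu(s * tau / norm) for s in scores]
    d_out = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(d_out)]


def grow(keys, values, extra):
    """Append `extra` zero-initialized key/value parameter tokens."""
    d_in, d_out = len(keys[0]), len(values[0])
    new_keys = keys + [[0.0] * d_in for _ in range(extra)]
    new_values = values + [[0.0] * d_out for _ in range(extra)]
    return new_keys, new_values


tau = 2.0  # hypothetical fixed scale factor
keys = [[0.3, -0.1, 0.8], [0.5, 0.9, -0.4], [-0.7, 0.2, 0.1]]  # 3 key tokens, d_in = 3
values = [[1.0, -0.5], [0.2, 0.4], [-0.3, 0.9]]  # 3 value tokens, d_out = 2
x = [0.6, -1.2, 0.4]

before = pattention(x, keys, values, tau)
big_keys, big_values = grow(keys, values, extra=5)
after = pattention(x, big_keys, big_values, tau)
```

With an ordinary softmax this would not hold, because zero scores still receive probability mass and would dilute the existing weights; a non-softmax normalization makes zero-initialized tokens a clean starting point for further training.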

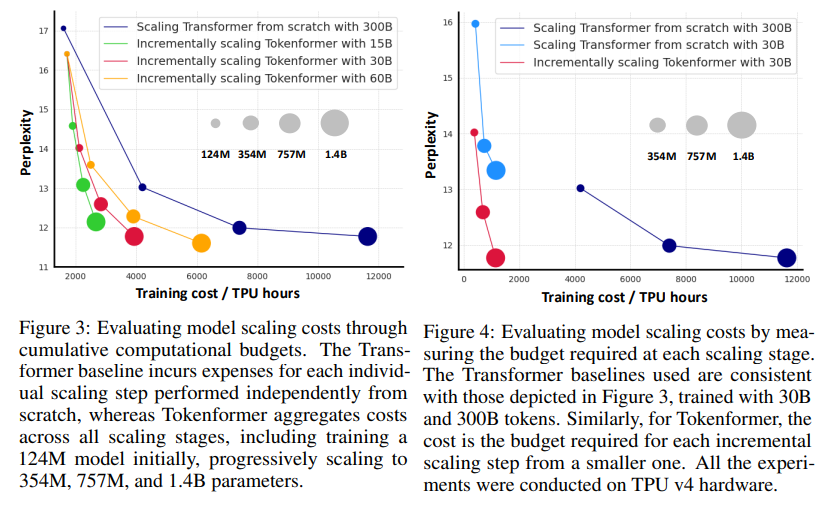

Tokenformer's performance benefits are notable: the model significantly reduces computational costs while maintaining accuracy. For example, Tokenformer was scaled from 124 million to 1.4 billion parameters at roughly half the training cost of a traditional transformer trained from scratch. In one experiment, the 1.4-billion-parameter configuration reached a test perplexity of 11.77, close to the 11.63 achieved by a similarly sized transformer trained from scratch. This efficiency suggests that Tokenformer can perform well across multiple domains, including vision and language modeling, with a fraction of the resources required by traditional models.
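As a quick sanity check on the reported figures, the snippet below computes the gap between the two perplexities. Only the values 11.77 and 11.63 come from the article; the rest is plain arithmetic, assuming perplexity is defined as the exponential of the mean natural-log cross-entropy per token. The gap works out to about 1.2% in relative terms, or roughly 0.012 nats per token.

```python
import math

ppl_tokenformer = 11.77  # reported test perplexity, 1.4B Tokenformer
ppl_transformer = 11.63  # reported test perplexity, 1.4B transformer trained from scratch

relative_gap = (ppl_tokenformer - ppl_transformer) / ppl_transformer
# Perplexity = exp(mean cross-entropy in nats), so the loss gap is a log ratio.
loss_gap_nats = math.log(ppl_tokenformer) - math.log(ppl_transformer)

print(f"relative perplexity gap: {relative_gap:.2%}")
print(f"cross-entropy gap: {loss_gap_nats:.4f} nats per token")
```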

Tokenformer's key findings advance AI research and point to ways of improving transformer-based models. These include:

- Substantial cost savings: Tokenformer's architecture reduced training costs by more than 50% compared to standard transformers. For example, scaling parameters from 124 million to 1.4 billion required only a fraction of the budget for transformers trained from scratch.

- Incremental scaling with high efficiency: The model supports incremental scaling by adding new parameter tokens without modifying the core architecture, allowing for flexibility and lower retraining demands.

- Preservation of learned information: Tokenformer retains knowledge from smaller pre-trained models, which speeds up convergence and prevents loss of learned information during scaling.

- Improved performance in various tasks: In benchmarks, Tokenformer achieved competitive levels of accuracy in visual and language modeling tasks, demonstrating its capabilities as a versatile foundational model.

- Optimized token interaction cost: By decoupling token-token interaction costs from scaling, Tokenformer can more efficiently handle longer sequences and larger models.
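The decoupling claim in the last bullet can be made concrete with a deliberately rough cost model (our assumption, not the paper's accounting). Count multiply-accumulates for token-token attention as about 2·T²·d (scores plus the weighted sum over values) and for one Pattention pass as about 2·T·n·d, where T is the sequence length, d the channel dimension, and n the number of parameter tokens. The function name `per_layer_macs` and all sizes are hypothetical.

```python
def per_layer_macs(seq_len, d_model, n_param_tokens):
    """Rough multiply-accumulate counts for one layer (hypothetical cost model).

    token_token: Q K^T scores plus attention-weighted values, ~2 * T^2 * d.
    token_param: one Pattention pass (scores plus value mix), ~2 * T * n * d.
    """
    token_token = 2 * seq_len * seq_len * d_model
    token_param = 2 * seq_len * n_param_tokens * d_model
    return token_token, token_param


T = 4096
base = per_layer_macs(T, d_model=1024, n_param_tokens=1024)
more_param_tokens = per_layer_macs(T, d_model=1024, n_param_tokens=4096)  # Tokenformer-style growth
wider_channels = per_layer_macs(T, d_model=2048, n_param_tokens=1024)  # widening d instead
```

Growing n leaves the token-token term untouched and raises only the token-parameter term, whereas widening d, the usual way a standard transformer scales, grows the token-token term as well; that term also grows quadratically with sequence length, which is why keeping it fixed matters for long inputs.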

In conclusion, Tokenformer offers a transformative approach to scaling transformer-based models. By treating parameters as tokens, the architecture achieves scalability and resource efficiency, reducing costs while preserving performance across tasks. This flexibility is a major advance in transformer design, yielding a model that can adapt to the demands of evolving AI applications without retraining from scratch. The Tokenformer architecture holds promise for future AI research as a path to developing large-scale models sustainably and efficiently.



Sana Hassan, a consulting intern at Marktechpost and a dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a strong interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.
