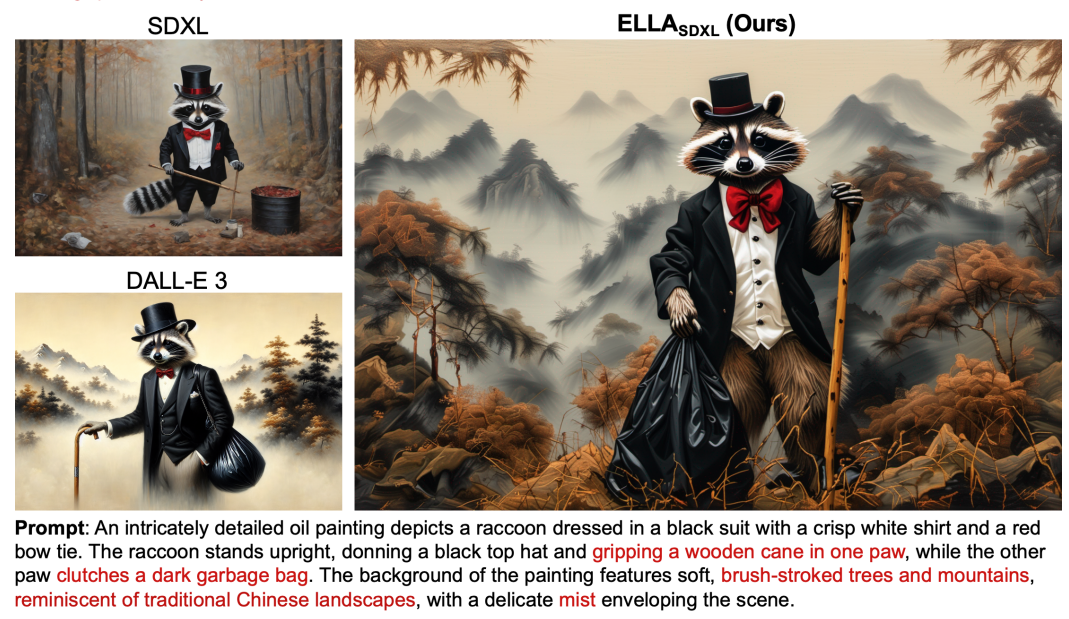

With diffusion models, the field of text-to-image generation has made significant progress. However, current models frequently use CLIP as a text encoder, which restricts their ability to understand complicated prompts with many elements, minute details, complex relationships, and extensive text alignment. To overcome these challenges, this study presents the Efficient Large Language Model Adapter (ELLA), a novel method. By integrating powerful large language models (LLMs) into text-to-image diffusion models, ELLA improves them without requiring U-Net or LLM training. A significant innovation is the Timestep-Aware Semantic Connector (TSC), a module that dynamically extracts conditions that vary with time step from the LLM that has already been trained. ELLA helps interpret long and complex cues by modifying semantic features in several denoising phases.

In recent years, diffusion models have been the main motivation behind text-to-image generation, producing images that are aesthetically pleasing and relevant to the text. However, common models, including CLIP-based variations, struggle with dense prompts, limiting their ability to handle complex connections and exhaustive descriptions of many elements. As a lightweight alternative, ELLA improves upon current models by seamlessly incorporating powerful LLM, eventually increasing fast tracking capabilities and making it possible to understand long, dense texts without the need for LLM or U-Net training.

Pre-trained LLMs, such as T5, TinyLlama or LLaMA-2, are integrated with a TSC in the ELLA architecture to provide semantic alignment throughout the denoising process. TSC automatically adjusts semantic features in multiple denoising stages based on the resampler architecture. Time step information is added to TSC, which improves its ability to extract features from dynamic text and enables better conditioning of the frozen U-Net at different semantic levels.

The paper presents the Dense Prompt Graph Benchmark (DPG-Bench), consisting of 1065 dense and long messages, to evaluate the performance of text-to-image models on dense messages. The dataset provides a more comprehensive evaluation than current benchmarks by evaluating semantic alignment capabilities to address difficult and information-rich signals. Additionally, the suitability of ELLA for use with current community models and subsequent tools is shown, offering a promising avenue for further improvement.

The article provides an insightful summary of relevant research in the fields of compositional models of text-to-image diffusion, text-to-image diffusion models, and their shortcomings when it comes to following complex instructions. It lays the groundwork for ELLA's creative contributions by highlighting the shortcomings of CLIP-based models and the importance of adding powerful LLMs such as T5 and LLaMA-2 to existing models.

Using LLM as text encoders, ELLA's design introduces the TSC for dynamic semantic alignment. In-depth testing is carried out in the research, whereby ELLA is compared to more sophisticated models with dense prompts using DPG-Bench and short composition questions in a subset of T2I-CompBench. The results show that ELLA is superior, especially in following complex directions, compositions with many objects and various attributes and relationships.

The influence of various LLM options and alternative architecture designs on ELLA performance is investigated using ablation research. The robustness of the suggested method is demonstrated by the strong impact of the TSC module design and the selection of LLM on the understanding of the model for both simple and complex indications.

ELLA effectively improves text-to-image creation, allowing models to understand complex prompts without needing to retrain on LLM or U-Net. The paper admits its shortcomings, such as the frozen limitations of U-Net and the sensitivity of MLLM. It recommends directions for future studies, including troubleshooting and investigating further integration of MLLM with diffusion models.

In conclusion, ELLA represents a major advancement in the industry as it opens the door to improved text-to-image generation capabilities without requiring much retraining, which will eventually lead to more efficient and versatile models in this domain.

Review the Paper and GitHub. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on Twitter. Join our Telegram channel, Discord channeland LinkedIn Grabove.

If you like our work, you will love our Newsletter..

Don't forget to join our 38k+ ML SubReddit

![]()

Vibhanshu Patidar is a Consulting Intern at MarktechPost. He is currently pursuing a bachelor's degree at the Indian Institute of technology (IIT) Kanpur. He is a robotics and machine learning enthusiast with a knack for unraveling the complexities of algorithms that bridge theory and practical applications.

<!– ai CONTENT END 2 –>

NEWSLETTER

NEWSLETTER