Large multimodal language models (MLLMs) focus on creating artificial intelligence (ai) systems capable of seamlessly interpreting textual and visual data. These models aim to bridge the gap between natural language understanding and visual understanding, allowing machines to cohesively process diverse forms of input, from text documents to images. Understanding and reasoning across multiple modalities is becoming crucial, especially as ai moves toward more sophisticated applications in areas such as image recognition, natural language processing, and computer vision. By improving the way ai integrates and processes diverse data sources, MLLMs are poised to revolutionize tasks such as image captioning, document understanding, and interactive ai systems.

A major challenge in developing multimodal ai models is ensuring that they perform equally well on text-based and visual language tasks. Often, improvements in one area can lead to a decline in the other. For example, improving a model’s visual understanding can negatively impact its language capabilities, which is problematic for applications that require both, such as optical character recognition (OCR) or complex multimodal reasoning. The key issue is balancing the processing of visual data, such as high-resolution images, while maintaining robust textual reasoning. As ai applications become more advanced, this trade-off becomes a critical bottleneck in the progress of multimodal ai models.

Existing approaches to MLLMs, including models such as GPT-4V and InternVL, have attempted to address this problem using various architectural techniques. These models either freeze the language model during training or employ cross-attention mechanisms to process image and text tokens simultaneously. However, these methods are not without flaws. Freezing the language model during multimodal training often results in poor performance on vision-language tasks. In contrast, open-access models such as LLaVA-OneVision and InternVL have shown marked degradation in text-only performance after multimodal training. This reflects a persistent problem in the field, where advances in one modality come at the expense of another.

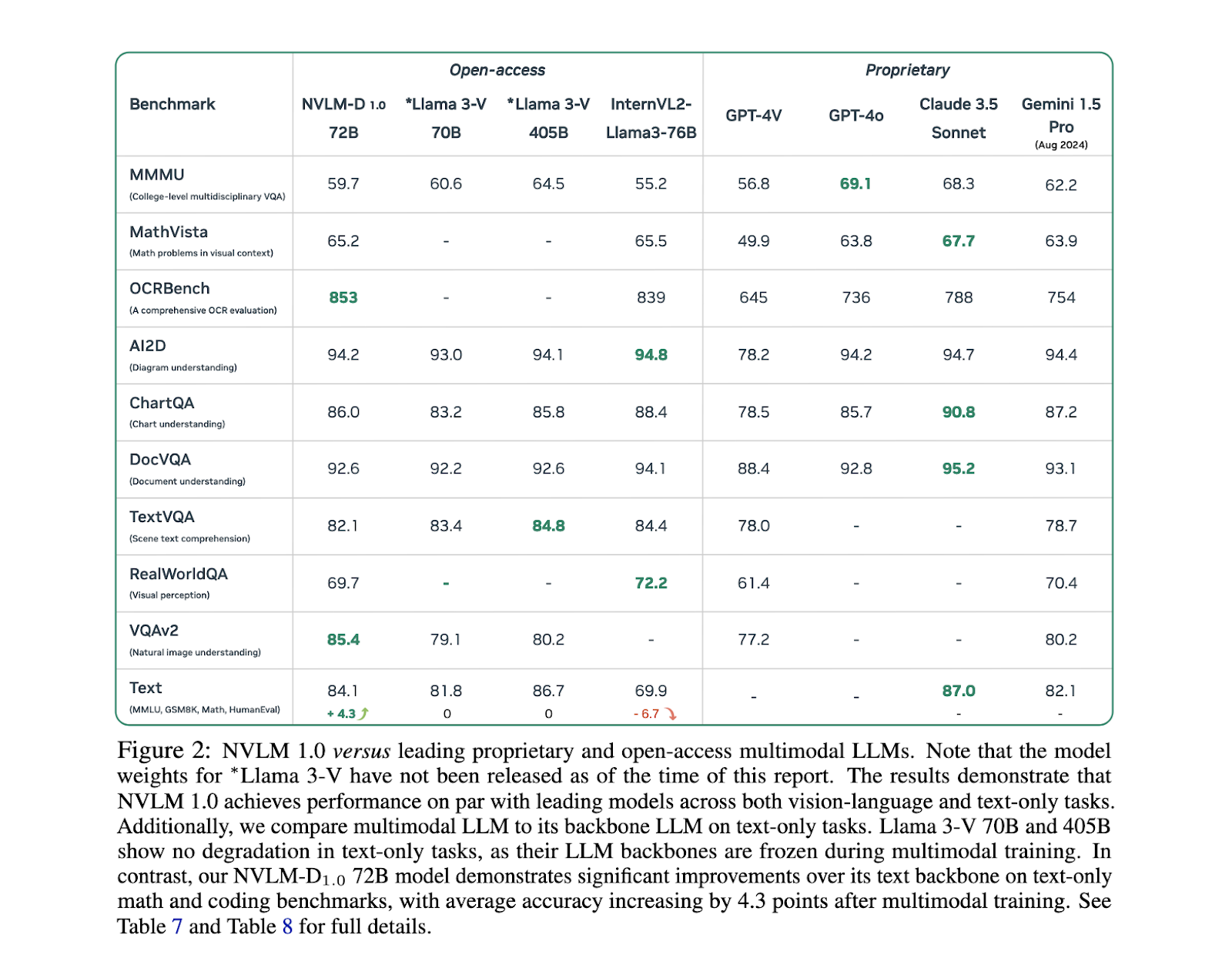

NVIDIA researchers have introduced NVLM 1.0 models, which represent a significant advancement in multimodal language modeling. The NVLM 1.0 family consists of three main architectures: NVLM-D, NVLM-x, and NVLM-H. Each of these models addresses the shortcomings of previous approaches by integrating advanced multimodal reasoning capabilities with efficient text processing. A notable feature of NVLM 1.0 is the inclusion of high-quality text-only supervised fine-tuning (SFT) data during training, allowing these models to maintain and even improve their text-only performance while excelling at vision and language tasks. The research team highlighted that their approach is designed to outperform existing proprietary models like GPT-4V and open-access alternatives like InternVL.

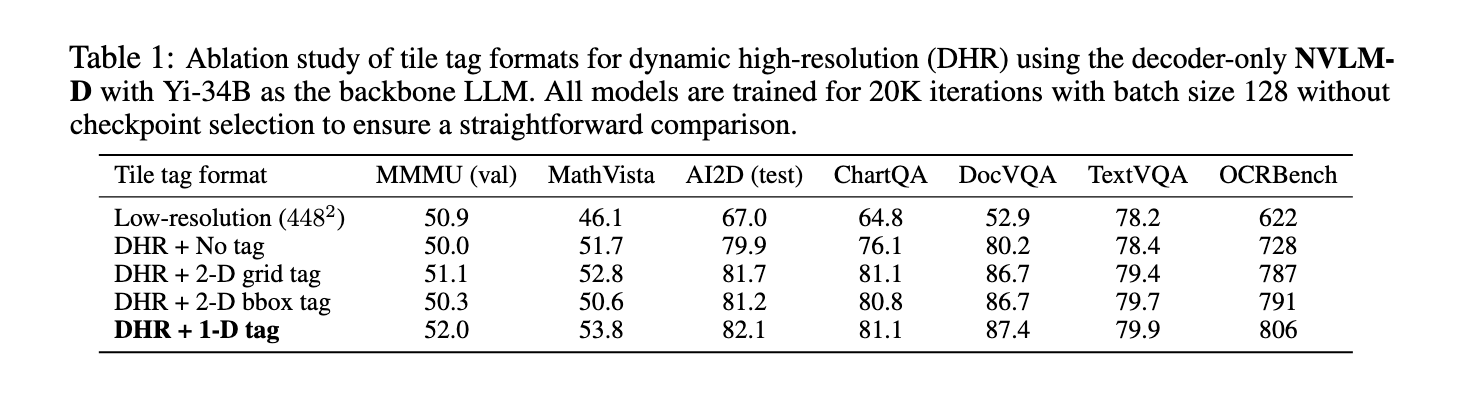

NVLM 1.0 models employ a hybrid architecture to balance text and image processing. NVLM-D, the decoder-only model, handles both modalities in a unified manner, making it particularly well-suited for multimodal reasoning tasks. NVLM-x, on the other hand, is built using cross-attention mechanisms, which improve computational efficiency when processing high-resolution images. The hybrid model, NVLM-H, combines the strengths of both approaches, allowing for more detailed image understanding while retaining the efficiency needed for text reasoning. These models incorporate dynamic tiles for high-resolution photos, significantly improving performance on OCR-related tasks without sacrificing reasoning capabilities. The integration of a 1-D tile labeling system enables accurate processing of image tokens, increasing performance on tasks such as document understanding and reading scene text.

In terms of performance, the NVLM 1.0 models have achieved impressive results in multiple benchmark tests. For example, on text-only tasks such as MATH and GSM8K, the NVLM-D1.0 72B model saw a 4.3-point improvement over its parent text-only version, thanks to the integration of high-quality text datasets during training. The models also demonstrated strong performance in vision and language, with accuracy scores of 93.6% on the VQAv2 dataset and 87.4% on AI2D for visual question answering and reasoning tasks. On OCR-related tasks, the NVLM models significantly outperformed existing systems, scoring 87.4% on DocVQA and 81.7% on ChartQA, highlighting their ability to handle complex visual information. These results were achieved by the NVLM-x and NVLM-H models, which demonstrated superior handling of high-resolution images and multimodal data.

One of the key findings of the research is that NVLM models not only excel at vision-language tasks, but also maintain or improve their performance on text-only tasks, something that other multimodal models struggle to achieve. For example, on text-based reasoning tasks such as MMLU, NVLM models maintained high levels of accuracy, even outperforming their text-only counterparts in some cases. This is particularly important for applications that require robust text understanding alongside visual data processing, such as document analysis and image-text reasoning. The NVLM-H model, in particular, strikes a balance between image processing efficiency and multimodal reasoning accuracy, making it one of the most promising models in this field.

In conclusion, the NVLM 1.0 models developed by NVIDIA researchers represent a significant advancement in large-scale multimodal language models. By integrating high-quality text datasets into multimodal training and employing innovative architectural designs such as dynamic mosaics and tile labeling for high-resolution images, these models address the critical challenge of balancing text and image processing without sacrificing performance. The NVLM family of models not only outperforms leading proprietary systems on vision and language tasks, but also maintains superior text-only reasoning capabilities, marking a new frontier in the development of multimodal ai systems.

Take a look at the PaperAll credit for this research goes to the researchers of this project. Also, don't forget to follow us on twitter.com/Marktechpost”>twitter and join our Telegram Channel and LinkedIn GrAbove!. If you like our work, you will love our fact sheet..

Don't forget to join our SubReddit of over 50,000 ml

FREE ai WEBINAR: 'SAM 2 for Video: How to Optimize Your Data' (Wednesday, September 25, 4:00 am – 4:45 am EST)

Nikhil is a Consultant Intern at Marktechpost. He is pursuing an integrated dual degree in Materials from Indian Institute of technology, Kharagpur. Nikhil is an ai and Machine Learning enthusiast who is always researching applications in fields like Biomaterials and Biomedical Science. With a strong background in Materials Science, he is exploring new advancements and creating opportunities to contribute.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>