Large language models (LLMs) have demonstrated remarkable capabilities in a wide range of natural language processing (NLP) tasks, such as machine translation and question answering. However, the theoretical underpinnings of their performance remain poorly understood. Specifically, there is no comprehensive framework that explains how LLMs generate coherent and contextually relevant text sequences. The challenge is compounded by constraints such as a fixed vocabulary size and a finite context window, which bound the set of token sequences an LLM can process. Addressing this challenge is essential to optimize the efficiency of LLMs and expand their real-world applicability.

Previous studies have focused on the empirical success of LLMs, particularly those built on the transformer architecture. While these models perform well on tasks involving sequential token generation, existing research has simplified their architectures for theoretical analysis or neglected the temporal dependencies inherent in token sequences. This limits the scope of their findings and leaves gaps in our understanding of how LLMs generalize beyond their training data. Furthermore, no framework has successfully derived theoretical generalization bounds for LLMs when handling temporally dependent sequences, which is crucial for their broader application in real-world tasks.

A team of researchers from ENS Paris-Saclay, Inria Paris, Imperial College London and Huawei Noah's Ark Lab presents a novel framework that models LLMs as finite-state Markov chains, where each input sequence of tokens corresponds to a state and transitions between states are determined by the model's prediction of the next token. This formulation captures the full range of possible token sequences, providing a structured way to analyze LLM behavior. By formalizing LLMs through this probabilistic framework, the study offers insights into their inference capabilities, specifically the stationary distribution of token sequences and the speed at which the model converges to this distribution. This approach represents a significant advance in understanding how LLMs work, as it provides a more interpretable and theoretically informed foundation.
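To make the state-space idea concrete, here is a minimal sketch of how token sequences can serve as Markov chain states. The two-token vocabulary, the window size, and the helper names are illustrative assumptions, as is the rule that a full window slides by dropping its oldest token; the article does not spell out these details.

```python
from itertools import product

VOCAB = ("a", "b")  # hypothetical toy vocabulary, T = 2
K = 2               # hypothetical context window size

def all_states(vocab, k):
    """Every non-empty token sequence of length at most k."""
    return [seq for n in range(1, k + 1) for seq in product(vocab, repeat=n)]

def next_state(state, token, k):
    """Append the predicted token; once the window is full, drop the oldest token.

    The sliding-window rule is an assumption made for this sketch.
    """
    return (state + (token,))[-k:]

STATES = all_states(VOCAB, K)
print(len(STATES))                    # 6 states: 2 of length 1, 4 of length 2
print(next_state(("a",), "b", K))     # ('a', 'b')
print(next_state(("a", "b"), "a", K)) # ('b', 'a')
```

With T tokens and a window of K, this enumeration yields T + T^2 + ... + T^K states, which grows on the order of T^K.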

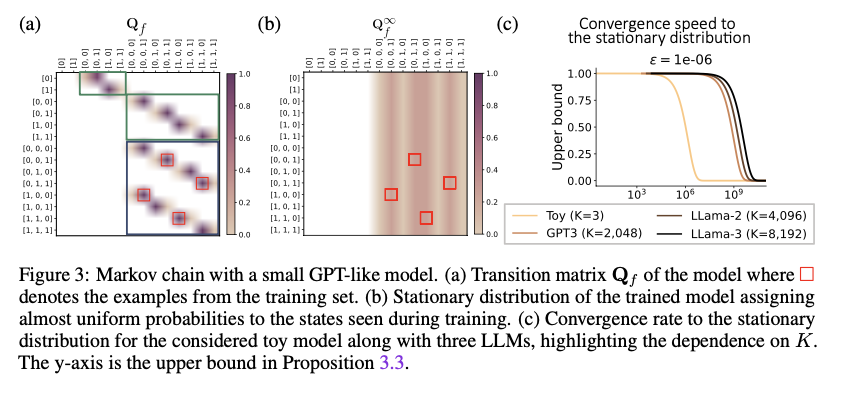

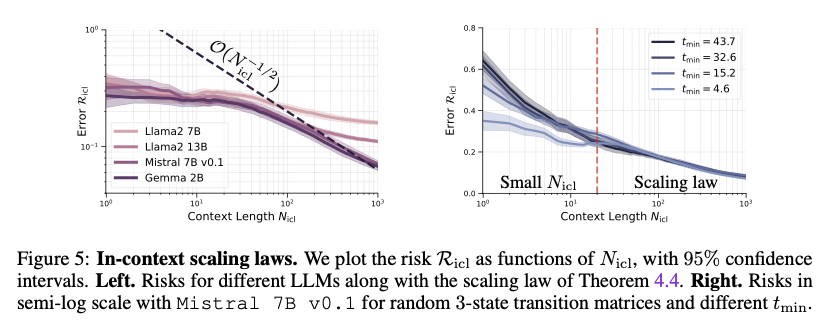

This method constructs a Markov chain representation of an LLM by defining a transition matrix Q_f, which is sparse and block-structured and captures the possible output sequences of the model. The size of the transition matrix is O(T^K), where T is the size of the vocabulary and K is the size of the context window. The stationary distribution derived from this matrix indicates the long-term predictive behavior of the LLM across all input sequences. The researchers also explore the influence of temperature on the LLM's ability to traverse the state space efficiently, showing that higher temperatures lead to faster convergence. These insights were validated through experiments with GPT-like models, which confirmed the theoretical predictions.
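The construction described above can be illustrated on a toy scale. The sketch below builds a small transition matrix from a stand-in "model" and estimates its stationary distribution by power iteration at two temperatures. Everything here is an assumption for illustration: `toy_logits` is a made-up scoring rule (not an LLM), the vocabulary and window are tiny, and convergence is measured by the change between successive iterates rather than by the paper's own criterion.

```python
import math
from itertools import product

VOCAB = ("a", "b")  # hypothetical toy vocabulary (T = 2)
K = 2               # hypothetical context window size

def toy_logits(context):
    """Stand-in for a model: favours repeating the last token (illustrative only)."""
    return [2.0 if tok == context[-1] else 0.0 for tok in VOCAB]

def softmax(logits, temperature):
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def build_transition_matrix(temperature):
    """Rows and columns are token sequences of length 1..K; each row has at most T non-zeros."""
    states = [s for n in range(1, K + 1) for s in product(VOCAB, repeat=n)]
    index = {s: i for i, s in enumerate(states)}
    Q = [[0.0] * len(states) for _ in states]
    for s in states:
        probs = softmax(toy_logits(s), temperature)
        for tok, p in zip(VOCAB, probs):
            target = (s + (tok,))[-K:]  # assumed sliding-window rule
            Q[index[s]][index[target]] += p
    return states, Q

def stationary(Q, tol=1e-9, max_steps=10_000):
    """Power iteration from the first state; returns (distribution, steps used)."""
    n = len(Q)
    mu = [1.0] + [0.0] * (n - 1)
    for step in range(1, max_steps + 1):
        nxt = [sum(mu[i] * Q[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(nxt, mu)) < tol:
            return nxt, step
        mu = nxt
    return mu, max_steps

states, Q_cold = build_transition_matrix(temperature=0.5)
_, Q_hot = build_transition_matrix(temperature=2.0)
pi_cold, steps_cold = stationary(Q_cold)
pi_hot, steps_hot = stationary(Q_hot)
print(steps_cold, steps_hot)
```

In this toy chain the higher temperature needs fewer iterations (`steps_hot < steps_cold`), which matches the trend the article reports but is not a reproduction of the paper's experiments. The length-1 states receive no stationary mass, since the chain never returns to them once the window is full.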

Experimental evaluation of several LLMs confirmed that modeling them as Markov chains leads to more efficient exploration of the state space and faster convergence to a stationary distribution. Higher temperature settings markedly improved convergence speed, while models with larger context windows required more steps to stabilize. Furthermore, the framework outperformed traditional frequentist approaches in learning transition matrices, especially for large state spaces. These results highlight the robustness and efficiency of the approach in providing deeper insights into LLM behavior, particularly in generating coherent sequences for real-world tasks.
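For context, a frequentist approach to learning a transition matrix is commonly understood as estimating each transition probability by its empirical frequency in an observed trajectory. The sketch below assumes that reading; the function name and the toy trajectory are illustrative and not taken from the paper.

```python
def estimate_transitions(trajectory, n_states):
    """Empirical transition matrix from one observed trajectory of state indices.

    Rows for states that were never left stay all-zero, which hints at why
    counting struggles when the state space is large and most states are unseen.
    """
    counts = [[0] * n_states for _ in range(n_states)]
    for src, dst in zip(trajectory, trajectory[1:]):
        counts[src][dst] += 1
    estimate = []
    for row in counts:
        total = sum(row)
        estimate.append([c / total if total else 0.0 for c in row])
    return estimate

# Hypothetical trajectory over 3 states; state 2 is never visited.
est = estimate_transitions([0, 1, 0, 0, 1], n_states=3)
for row in est:
    print(row)
```

Here state 0 is left three times (twice to state 1, once to itself), so its estimated row is one third and two thirds, while the row for the unvisited state 2 carries no information.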

This study presents a theoretical framework that models LLMs as Markov chains, offering a structured approach to understanding their inference mechanisms. By deriving generalization bounds and experimentally validating the framework, the researchers demonstrate that LLMs learn sequences of tokens efficiently. The approach could inform the design and optimization of LLMs, with the aim of better generalization and performance on a variety of NLP tasks. The framework provides a solid foundation for future research, particularly on how LLMs process and generate coherent sequences in real-world applications.

Check out the Paper. All credit for this research goes to the researchers of this project.


Aswin AK is a Consulting Intern at MarkTechPost. He is pursuing his dual degree from the Indian Institute of Technology, Kharagpur. He is passionate about data science and machine learning, and brings a strong academic background and practical experience solving real-life interdisciplinary challenges.
