The development of large language models (LLM) represents a cutting-edge frontier. These models, trained to analyze, generate and interpret human language, are increasingly becoming the backbone of various digital tools and platforms, enhancing everything from simple automated writing assistants to complex conversational agents. Training these sophisticated models is a task that requires significant computational resources and vast data sets. The quest for efficiency in this training process is driven by the need to mitigate environmental impact and manage the increasing computational costs associated with ever-growing data sets.

The traditional method of indiscriminately feeding gigantic data sets to models, in the hope of capturing the vast expanse of linguistic nuances, is inefficient and unsustainable. The brute force approach of this method is being reevaluated in light of new strategies that seek to improve the learning efficiency of LLMs through careful selection of training data. These strategies aim to ensure that each data used in training contains the maximum possible instructional value, thus optimizing training efficiency.

Recent innovations from researchers at Google DeepMind, the University of California, San Diego, and Texas A&M University have led to the development of sophisticated data curation methods that aim to elevate model performance by focusing on the quality and diversity of data from training. These methods employ advanced algorithms to evaluate the potential impact of individual data points on the model's learning path. By prioritizing data that offers a wide variety of linguistic features and selecting examples that are considered to be of high learning value, these strategies seek to make the training process more effective and efficient.

Two prominent techniques in this area are ASK-LLM and DENSITY sampling. ASK-LLM leverages the model's zero-shot reasoning capabilities to evaluate the usefulness of each training example. This innovative approach allows the model to self-select its training data based on a predetermined set of quality criteria. Meanwhile, DENSITY sampling focuses on ensuring a broad representation of linguistic features in the training set, with the goal of exposing the model to as broad a spectrum of language as possible. This method seeks to optimize the data coverage aspect, ensuring that the model encounters a wide range of linguistic scenarios during its training phase.

ASK-LLM, for example, has shown that it can significantly improve model capabilities, even when a large portion of the initial data set is excluded from the training process. This approach speeds up the training schedule and suggests creating high-performance models with substantially less data. The efficiency gains from these techniques suggest a promising direction for the future of LLM training, potentially reducing the environmental footprint and computational demands of developing sophisticated ai models.

The ASK-LLM process involves evaluating training examples through the lens of the model's existing knowledge, effectively allowing the model to prioritize data that it “believes” will most improve its learning. This self-referential data evaluation method marks a significant shift from traditional data selection strategies, emphasizing the intrinsic quality of the data. On the other hand, DENSITY sampling employs a more quantitative measure of diversity, seeking to fill gaps in the model's exposure to different linguistic phenomena by identifying and including underrepresented examples in the training set.

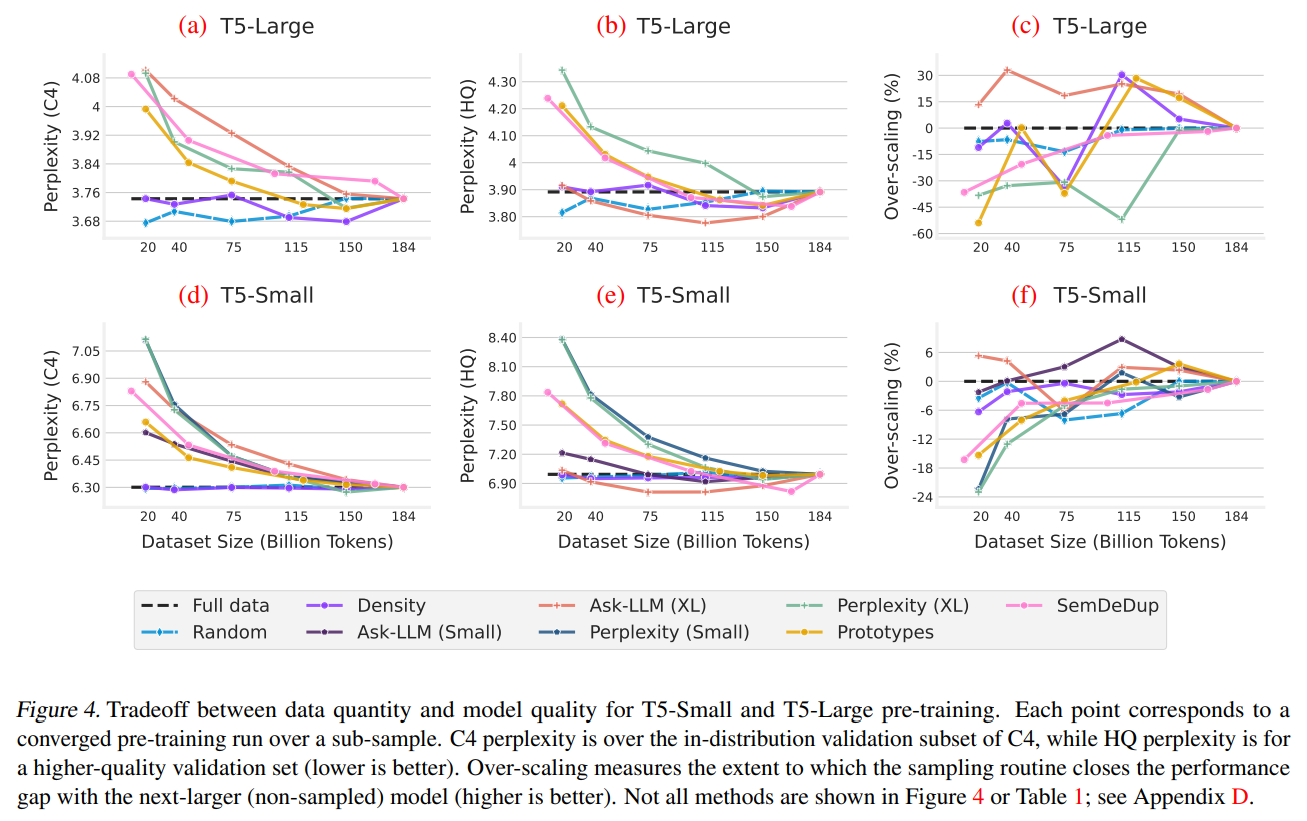

The research results underline the effectiveness of these approaches:

- Models trained on data curated by ASK-LLM consistently outperformed those trained on the full dataset, demonstrating the value of quality-focused data curation.

- DENSITY sampling matched the performance of models trained on full data sets by ensuring diverse linguistic coverage, highlighting the importance of variety in training data.

- The combination of these methods presents a compelling case for a more demanding approach to data selection, capable of achieving superior model performance while potentially reducing resource requirements for LLM training.

In conclusion, exploring data-efficient training methodologies for LLM reveals a promising avenue to improve ai model development. Important findings from this research include:

- The introduction of ASK-LLM and DENSITY sampling as innovative techniques to optimize training data selection.

- Demonstrated improvements in model performance and training efficiency through strategic data curation.

- Potential to reduce computational and environmental costs associated with LLM training, aligning with broader goals of sustainability and efficiency in ai research.

Review the Paper. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on Twitter and Google news. Join our 37k+ ML SubReddit, 41k+ Facebook community, Discord channeland LinkedIn Grabove.

If you like our work, you will love our Newsletter..

Don't forget to join our Telegram channel

![]()

Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a double degree from the Indian Institute of technology, Kharagpur. I am passionate about technology and I want to create new products that make a difference.

<!– ai CONTENT END 2 –>

NEWSLETTER

NEWSLETTER