In an ever-changing world, the importance of sequential decision making (SDM) in machine learning cannot be overstated. Unlike static tasks, SDM reflects the dynamic nature of real-world settings, from robotic manipulation to evolving medical treatments. Just as foundation language models such as BERT and GPT have transformed natural language processing by leveraging vast amounts of text, pretrained foundation models hold promise for SDM. Equipped with a rich understanding of decision sequences, such models can be adapted to specific tasks, much as language models are fine-tuned to capture linguistic nuances.

However, SDM poses unique challenges, distinct from existing pre-training paradigms in vision and language:

- Data distribution shift means the training data follows different distributions at different stages, which hurts performance.

- Task heterogeneity complicates the development of universally applicable representations due to diverse task configurations.

- Data quality and supervision pose challenges, since high-quality data and expert guidance are often scarce in real-world scenarios.

To address these challenges, the paper proposes Premier-TACO, a novel approach for learning a universal and transferable encoder with a reward-free, dynamics-based, temporal contrastive pretraining objective (shown in Figure 2). Because reward signals are excluded during pretraining, the model has the flexibility to generalize across a variety of downstream tasks. The world-model-style approach, which focuses on environment dynamics, encourages the encoder to learn compact representations that adapt to multiple scenarios.
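
To make "reward-free, dynamics-based, temporal contrastive" more concrete, here is a minimal Python sketch of a generic InfoNCE-style temporal contrastive loss. It assumes a setup in the spirit of TACO: a prediction is formed from the current state embedding and an embedding of the actions taken, then scored against the embedding of the true future state (the positive) and of other states (the negatives). The helper names `predict_future` and `info_nce_loss` are hypothetical, and the element-wise sum inside `predict_future` is a placeholder for a learned network, not the authors' architecture. Note that no reward term appears anywhere: the loss depends only on states and actions.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    """Inner product, used here as the similarity score between embeddings."""
    return sum(a * b for a, b in zip(u, v))


def predict_future(state_emb: Vector, action_emb: Vector) -> List[float]:
    """Hypothetical stand-in for a learned projection G(z_t, u_t).

    A real model would use a neural network; the element-wise sum is only
    a placeholder so the example runs without extra dependencies.
    """
    return [s + a for s, a in zip(state_emb, action_emb)]


def info_nce_loss(query: Vector, positive: Vector,
                  negatives: List[Vector], temperature: float = 1.0) -> float:
    """Generic InfoNCE: -log of the softmax score of the positive key.

    query:     prediction built from (state embedding, action embedding)
    positive:  embedding of the true future state
    negatives: embeddings of states that should NOT match the query
    Only states and actions enter the loss; there is no reward term.
    """
    logits = [dot(query, positive) / temperature]
    logits += [dot(query, k) / temperature for k in negatives]
    # log-sum-exp with max subtraction for numerical stability
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_denominator - logits[0]


if __name__ == "__main__":
    query = predict_future([0.5, 0.0], [0.5, 0.0])  # toy embeddings
    print(round(info_nce_loss(query, [1.0, 0.0], [[0.0, 1.0]]), 3))  # 0.313
    print(round(info_nce_loss(query, [0.0, 1.0], [[1.0, 0.0]]), 3))  # 1.313
```

Minimizing this loss pulls the prediction toward the embedding of the state that the actions actually led to and pushes it away from the negatives, so the encoder is pressured to keep whatever information is needed to predict the effect of actions.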

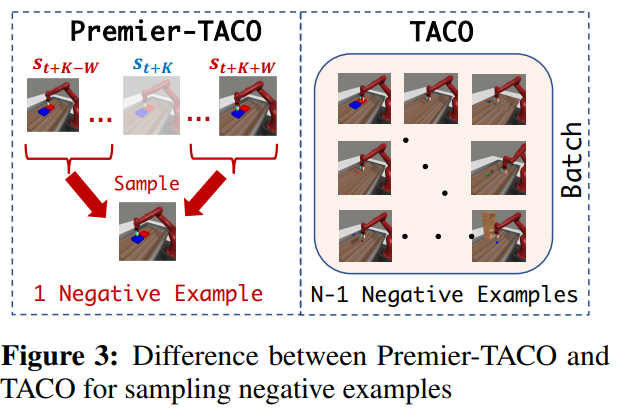

Premier-TACO substantially improves on the temporal action contrastive learning (TACO) objective (the differences are shown in Figure 3), extending it to large-scale multitask offline pretraining. In particular, Premier-TACO samples negative examples strategically, so that the latent representation efficiently captures control-relevant information.
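
The article does not spell out the sampling rule. One plausible reading, assumed here rather than taken from the article, is that for an anchor state at time `t` whose positive is the state at `t + k`, a single negative is drawn from the same episode within a small window of `window` steps around the positive. The function `sample_window_negative` and the parameters `k` and `window` are hypothetical names used only for illustration.

```python
import random
from typing import Optional


def sample_window_negative(t: int, k: int, window: int, episode_len: int,
                           rng: random.Random) -> Optional[int]:
    """Pick one negative time index near the positive index t + k.

    Assumption for illustration: the negative comes from the same episode,
    lies within `window` steps of the positive, and is never the positive
    itself. Returns None if no valid positive or negative exists.
    """
    positive = t + k
    if positive >= episode_len:
        return None  # anchor too close to the episode end to have a positive
    # Clip the window to the episode boundaries.
    lo = max(0, positive - window)
    hi = min(episode_len - 1, positive + window)
    candidates = [i for i in range(lo, hi + 1) if i != positive]
    if not candidates:
        return None  # window of size 0 leaves nothing but the positive
    return rng.choice(candidates)


if __name__ == "__main__":
    rng = random.Random(0)
    # Anchor t=10, positive 13: every negative falls in [11, 15] and is not 13.
    print([sample_window_negative(10, 3, 2, 100, rng) for _ in range(5)])
```

Under this assumption, the intuition is that nearby frames look similar to the positive and differ mainly in details that depend on the actions taken, so telling them apart forces the encoder to keep control-relevant information, while drawing a single negative per anchor keeps the cost low compared with large sets of negatives.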

In empirical evaluations on the DeepMind Control Suite, MetaWorld, and LIBERO, Premier-TACO delivers substantial performance gains (shown in Figure 1) in few-shot imitation learning compared with baseline methods. Specifically, it achieves a relative performance improvement of 101% on the DeepMind Control Suite and 74% on MetaWorld, and it remains robust to low-quality data.
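
For reading these figures, relative improvement is conventionally computed against the baseline score. Assuming that convention (the article does not state the exact definition or which baseline is used as the reference):

```latex
\Delta_{\text{rel}} = \frac{S_{\text{Premier-TACO}} - S_{\text{baseline}}}{S_{\text{baseline}}} \times 100\%
```

Under this definition, a 101% improvement means the Premier-TACO score is about $2.01\times$ the baseline score, and a 74% improvement means about $1.74\times$.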

Furthermore, representations pretrained with Premier-TACO adapt remarkably well to unseen tasks and embodiments, as demonstrated across a range of robotic manipulation and locomotion tasks. Even with novel camera views or low-quality data, Premier-TACO retains a significant advantage over traditional methods.

Finally, the approach demonstrates its versatility in fine-tuning experiments, where it improves the performance of large pretrained models such as R3M, bridging domain gaps and showing strong generalization.

In conclusion, Premier-TACO significantly advances few-shot policy learning by offering a robust and highly generalizable representation pretraining framework. Its adaptability to varied tasks, embodiments, and data imperfections underscores its potential for a wide range of applications in sequential decision making.

Check out the Paper. All credit for this research goes to the researchers of this project.

Vineet Kumar is a Consulting Intern at MarktechPost. He is currently pursuing his bachelor's degree at the Indian Institute of Technology (IIT), Kanpur. He is a machine learning enthusiast, passionate about research and the latest advances in Deep Learning, Computer Vision, and related fields.