Large language models (LLMs) have demonstrated remarkable prowess in various natural language processing tasks. However, applying them to Recover of information IR tasks remain challenging due to the paucity of IR-specific concepts in natural language. To address this, the idea of fine-tuning instruction has emerged as a critical method to elevate the capabilities and control of LLMs. While LLMs with refined instruction have excelled in generalizing to new tasks, there is a gap in their application to IR tasks.

In response, this work presents a new data set, INTEREST (INstructure tyour datamyt foR.S.search), meticulously designed to improve the search capabilities of LLMs. This data set focuses on three fundamental aspects that prevail in search-related tasks: query understanding, document understanding, and the intricate relationship between queries and documents. INTERS is a comprehensive resource that encompasses 43 data sets deck 20 different search-related tasks.

The concept of Setting instructions It involves fitting pre-trained LLMs into formatted instances represented in natural language. It stands out not only for improving performance on directly trained tasks, but also for allowing LLMs to generalize to new, unseen tasks. In the context of search tasks, unlike typical NLP tasks, the focus is on queries and documents. This distinction drives the categorization of tasks into query understanding, document understanding, and query-document relationship understanding.

Tasks and data sets:

Developing a comprehensive instruction tuning dataset for a wide range of tasks is resource intensive. To avoid this, existing datasets from the IR research community are converted to an instructional format. Categories include (shown in Figure 1):

– Understanding the query: It addresses aspects such as query description, expansion, reformulation, intent classification, clarification, matching, subtopic generation, and suggestions.

– Understanding the document: It covers fact checking, summarizing, reading comprehension, and answering conversational questions.

– Understanding the query-document relationship: It mainly focuses on the task of document reclassification.

The construction of INTERS (shown in Figure 2) is a meticulous process that involves manually creating job descriptions and templates and fitting data samples into these templates. It reflects a commitment to creating a complete and instructive data set.

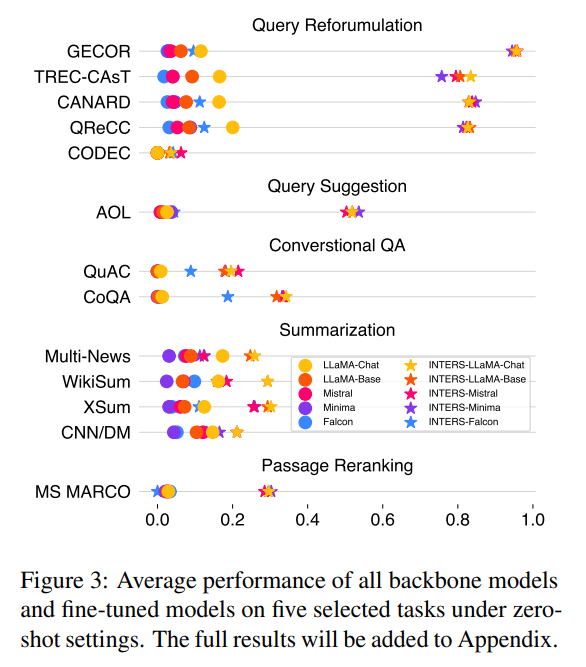

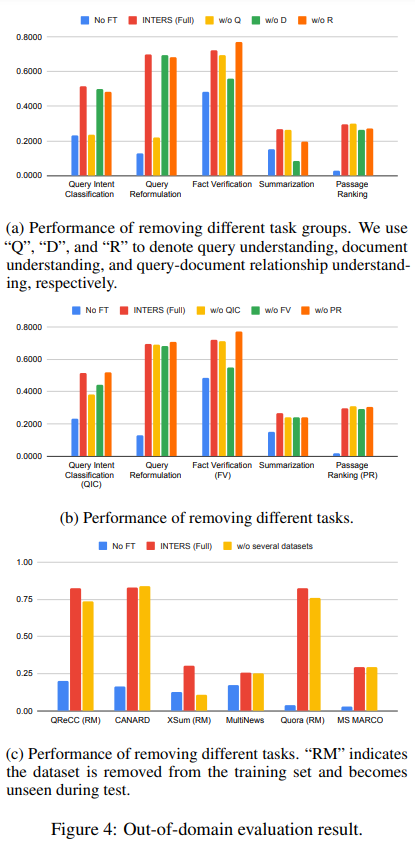

For the evaluation, four LLMs of different sizes are used: Falcon-RW-1B, Minima-2-3B, Mistral-7B and LLaMA-2-7B. In an evaluation in the domain (results shown in Figure 3), where all tasks and data sets are exposed during training, the effectiveness of instruction tuning on search tasks is validated. Beyond assessment in the domain (the results are shown in Figure 4), the authors investigate the generalization of fitted models to new and unseen tasks. Generalization is explored at the group level, task level, and data set level, providing insights into the adaptability of instructionally adjusted LLMs.

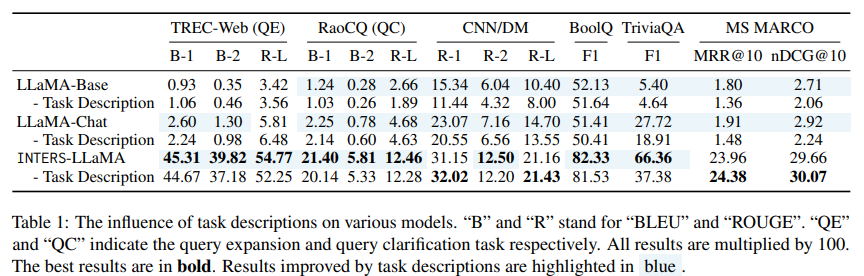

Several experiments aim to understand the impact of different environments within INTERS. In particular, the removal of job descriptions (results present in Table 1) of the data set significantly affects the performance of the model, highlighting the importance of a clear understanding of the task.

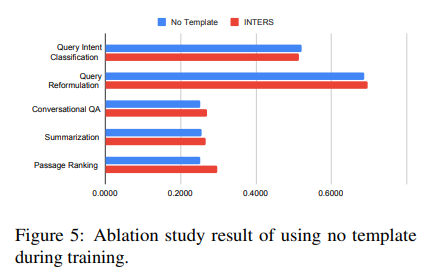

Templates and models that guide the understanding of tasks are essential components of INTERS. Ablation experiments (as in Figure 5) show that the use of instructional templates significantly improves model performance.

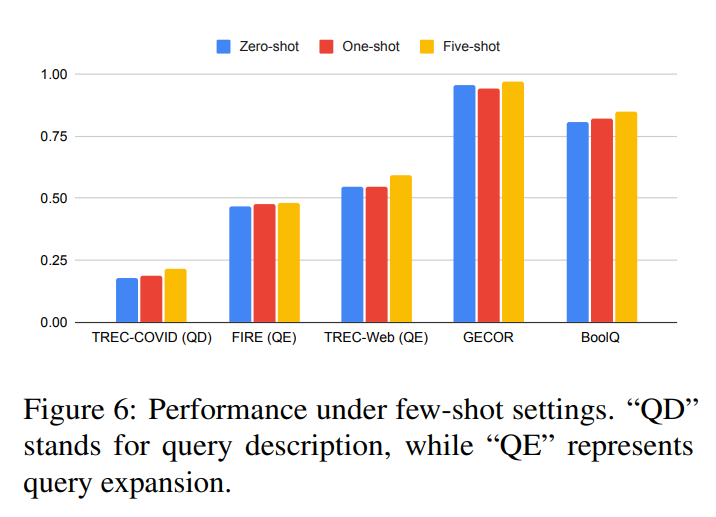

Given the combination of zero-shot and low-shot examples from INTERS, examining low-shot performance is crucial. Testing data sets within the models' input length limits demonstrates (shown in Figure 6) the effectiveness of the data set in facilitating learning in few opportunities.

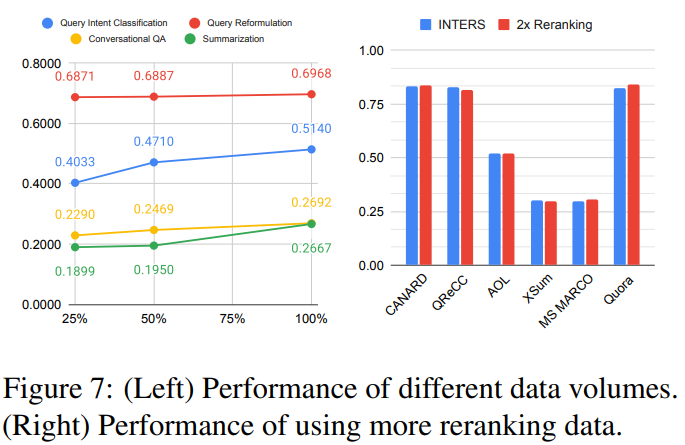

The amount of training data is explored, with experiments (results shown in Figure 7) indicating that increasing the volume of instructional data generally improves model performance, although with different sensitivity across tasks.

In summary, this article presents an exploration of instruction tuning for LLM applied to search tasks, culminating in the creation of the INTERS data set. The dataset proves to be effective in constantly improving the performance of LLMs in various settings. The research delves into critical aspects, shedding light on the structure of instructions, the impact of learning in a few attempts, and the importance of data volumes in the adjustment of instructions. The hope is that this work will catalyze further research in the LLM domain, particularly as it applies to IR tasks, encouraging continued optimization of instruction-based methods to improve model performance.

Review the Paper and GitHub. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on Twitter. Join our 36k+ ML SubReddit, 41k+ Facebook community, Discord channeland LinkedIn Grabove.

If you like our work, you will love our Newsletter..

Don't forget to join our Telegram channel

![]()

Vineet Kumar is a Consulting Intern at MarktechPost. She is currently pursuing her bachelor's degree from the Indian Institute of technology (IIT), Kanpur. He is a machine learning enthusiast. He is passionate about research and the latest advances in Deep Learning, Computer Vision and related fields.

<!– ai CONTENT END 2 –>

NEWSLETTER

NEWSLETTER