Building artificial systems that see and recognize the world in ways similar to the human visual system is a key goal of machine vision. Recent advances in measuring population brain activity, together with improvements in the design and implementation of deep neural network models, have made it possible to directly compare the latent representations of artificial networks with those of biological brains, revealing crucial details about how these systems work. Reconstructing visual images from brain activity, such as that measured by functional magnetic resonance imaging (fMRI), is one such application. This is a fascinating but difficult problem because the underlying brain representations are largely unknown and the sample sizes typical of brain data are small.

Researchers have recently applied deep learning models and techniques, such as generative adversarial networks (GANs) and self-supervised learning, to this challenge. However, these approaches require either fine-tuning on the particular stimuli used in the fMRI experiment or training new generative models on fMRI data from scratch. They have shown strong but limited performance in terms of semantic and pixel-level fidelity, partly because neuroscience data is scarce and partly because complex generative models are difficult to build.

Diffusion models (DMs), particularly latent diffusion models (LDMs) that require fewer computational resources, are a recent alternative to GANs. However, since LDMs are still relatively new, it is difficult to fully understand how they work internally.

By using an LDM called Stable Diffusion to reconstruct visual images from fMRI signals, a research team from Osaka University and CiNet attempted to address the aforementioned issues. They proposed a simple framework that can reconstruct high-resolution images with high semantic fidelity without the need to train or tune complex deep learning models.

The authors used the Natural Scenes Dataset (NSD), which provides fMRI data collected over 30 to 40 scanning sessions during which each subject viewed three repetitions of 10,000 images.

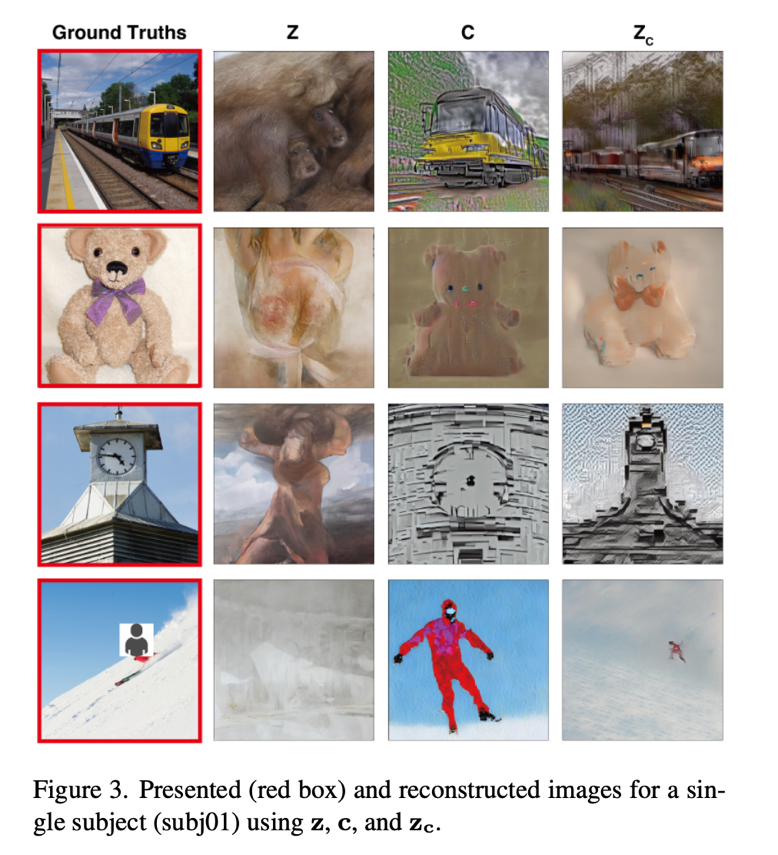

To start with, they used a latent diffusion model to create images from text. In the figure above (top), z is defined as the latent representation of the original image after compression by the autoencoder, c is defined as the latent representation of the texts (which describe the images), and zc is defined as the generated latent representation of z after the model has modified it using c.

To analyze the decoding model, the authors followed three steps (figure above, middle). First, they predicted a latent representation z of the presented image X from fMRI signals within the early visual cortex (blue). Second, z was processed by a decoder to produce a coarse decoded image Xz, which was then encoded again and passed through the diffusion process, which adds noise. Finally, the noisy latent was denoised, conditioned on a latent text representation c decoded from fMRI signals within the higher visual cortex (yellow), to produce zc. Starting from zc, a decoding module produced the final reconstructed image Xzc. It is important to underline that the only training this process requires is fitting linear models that map fMRI signals to the LDM components z, c, and zc.
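The linear mapping and the noising step described above can be sketched in a few lines. This is a minimal illustration on synthetic data, not the authors' code: it assumes ridge (L2-regularized) regression as the linear model, uses the standard closed-form forward noising step from the diffusion-model literature, and all names (`fit_ridge`, `add_noise`, `alpha_bar`) and array sizes are hypothetical. The denoising step itself needs a pretrained LDM and is left out.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    gram = X.T @ X + alpha * np.eye(X.shape[1])
    return np.linalg.solve(gram, X.T @ Y)

def add_noise(latent, alpha_bar, rng):
    """Standard forward diffusion step: scale the latent and mix in Gaussian noise.

    alpha_bar is the cumulative signal fraction at the chosen timestep
    (1.0 means no noise, values near 0 mean almost pure noise).
    """
    noise = rng.normal(size=latent.shape)
    return np.sqrt(alpha_bar) * latent + np.sqrt(1.0 - alpha_bar) * noise

# Synthetic stand-ins: rows are trials, columns are voxels or latent dimensions.
rng = np.random.default_rng(0)
n_train, n_test, n_voxels, latent_dim = 200, 50, 30, 8
true_W = rng.normal(size=(n_voxels, latent_dim))
fmri_train = rng.normal(size=(n_train, n_voxels))
fmri_test = rng.normal(size=(n_test, n_voxels))
z_train = fmri_train @ true_W + 0.1 * rng.normal(size=(n_train, latent_dim))
z_test = fmri_test @ true_W + 0.1 * rng.normal(size=(n_test, latent_dim))

# Step 1: learn the linear map from fMRI signals to the latent z, then predict.
W = fit_ridge(fmri_train, z_train, alpha=1.0)
z_pred = fmri_test @ W

# Step 2 (simplified): add noise to the latent before conditional denoising.
z_noisy = add_noise(z_pred, alpha_bar=0.5, rng=rng)
```

In a real pipeline the same kind of linear model would be fitted separately for c from higher visual cortex voxels, and the regularization strength would be chosen on held-out data.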

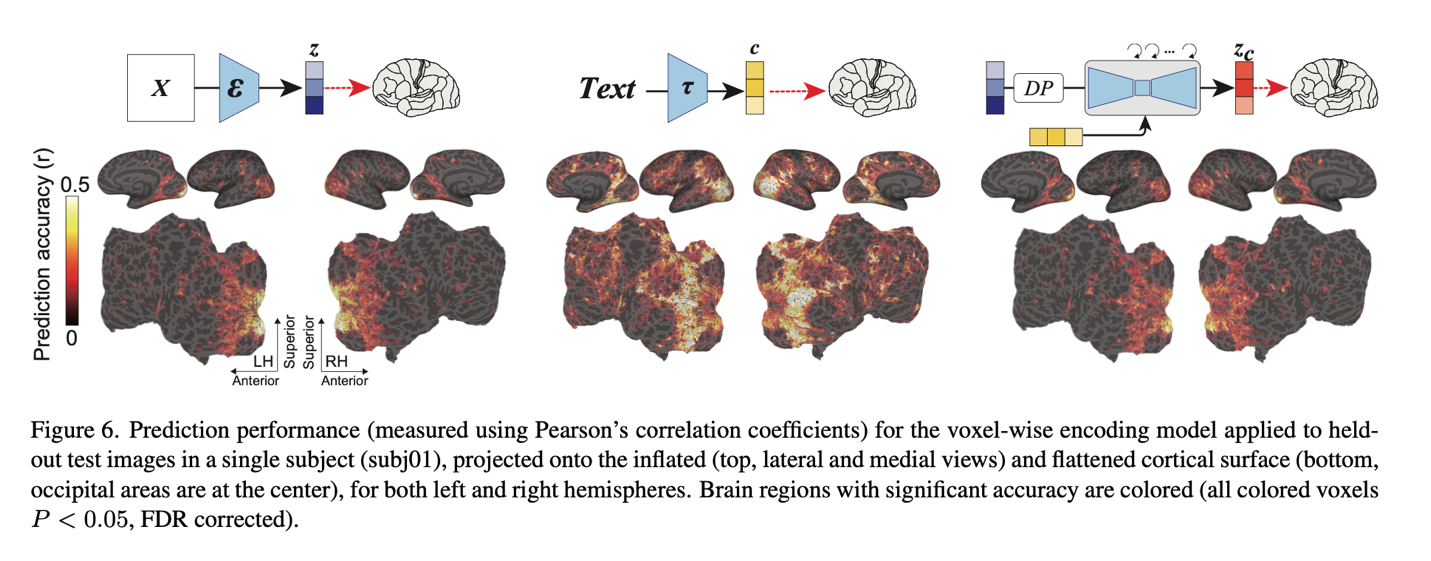

Starting from z, c, and zc, the authors performed an encoding analysis to interpret the internal operations of LDMs by mapping them to brain activity (figure above, bottom). The results of reconstructing images from each representation are shown below.

Images reconstructed using only z were visually consistent with the original images, but their semantic content was lost. Images reconstructed using only c, on the other hand, had high semantic fidelity but were visually inconsistent. Images reconstructed using zc were both high-resolution and semantically faithful, which supports the validity of the method.

Finally, the encoding analysis reveals new information about LDMs. All three components achieved high prediction performance in the visual cortex, at the back of the brain. In particular, z provided strong prediction performance in the early visual cortex, which lies in the posterior part of the visual cortex. It also showed strong prediction values in the higher visual cortex, which is the anterior part of the visual cortex, but smaller values in other regions. In the higher visual cortex, on the other hand, c led to the best prediction performance.
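An encoding analysis of this kind can be illustrated on synthetic data. The sketch below is an assumption-laden example rather than the authors' procedure: it fits a ridge regression from each component to simulated voxel responses and scores each voxel by the Pearson correlation between predicted and held-out responses. The function names, the voxel layout (ten "early" voxels driven by z, ten "higher" voxels driven by c), and the noise level are all hypothetical.

```python
import numpy as np

def fit_ridge(features, responses, alpha=1.0):
    """Closed-form ridge regression from model features to voxel responses."""
    gram = features.T @ features + alpha * np.eye(features.shape[1])
    return np.linalg.solve(gram, features.T @ responses)

def voxelwise_correlation(predicted, measured):
    """Pearson correlation between predicted and measured responses, per voxel."""
    p = predicted - predicted.mean(axis=0)
    m = measured - measured.mean(axis=0)
    denom = np.sqrt((p ** 2).sum(axis=0) * (m ** 2).sum(axis=0))
    return (p * m).sum(axis=0) / denom

rng = np.random.default_rng(1)
n_train, n_test, dim, n_voxels = 300, 100, 10, 20
n_total = n_train + n_test

# Simulated components and responses: voxels 0-9 depend on z, voxels 10-19 on c.
z = rng.normal(size=(n_total, dim))
c = rng.normal(size=(n_total, dim))
responses = np.hstack([
    z @ rng.normal(size=(dim, 10)),
    c @ rng.normal(size=(dim, 10)),
]) + 0.5 * rng.normal(size=(n_total, n_voxels))

train, test = slice(0, n_train), slice(n_train, None)
scores = {}
for name, feats in {"z": z, "c": c}.items():
    W = fit_ridge(feats[train], responses[train])
    scores[name] = voxelwise_correlation(feats[test] @ W, responses[test])

early, higher = slice(0, 10), slice(10, 20)
print("z: early", scores["z"][early].mean(), "higher", scores["z"][higher].mean())
print("c: early", scores["c"][early].mean(), "higher", scores["c"][higher].mean())
```

Comparing the per-voxel scores across components is what lets one say which component best predicts which region; with real data the scores would be mapped back onto the cortical surface.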

Check out the paper and project page. All credit for this research goes to the researchers on this project.

Leonardo Tanzi is currently a Ph.D. Student at the Polytechnic University of Turin, Italy. His current research focuses on human-machine methodologies for intelligent support during complex interventions in the medical field, using Deep Learning and Augmented Reality for 3D assistance.
