In the rapidly advancing field of artificial intelligence, running large language models (LLMs) efficiently on consumer-grade hardware is a significant technical challenge. The difficulty stems from the inherent trade-off between model size and computational efficiency. Compression methods, including direct quantization and multi-codebook quantization (MCQ), have offered partial solutions for reducing the memory footprint of these AI giants. However, these approaches often compromise model performance, leaving room for innovation in extreme model compression.

A pioneering strategy called Additive Quantization for Language Models (AQLM), developed by researchers from HSE University, Yandex Research, Skoltech, IST Austria, and NeuralMagic, targets this trade-off by reducing the bit count per model parameter to a surprisingly low range of 2 to 3 bits. The approach adapts and refines additive quantization, a technique previously confined largely to information retrieval, to the specific challenges of LLM compression.
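To make the "2 to 3 bits per parameter" figure concrete, here is a minimal sketch of the additive idea: a group of consecutive weights is stored as one small integer code per codebook, and the group is reconstructed as the sum of the selected codewords. The function names, the toy codebooks, and the two configurations (one 16-bit codebook, or two 8-bit codebooks, over groups of 8 weights) are illustrative assumptions, not AQLM's actual code or storage format. The greedy encoder is a deliberate simplification; AQLM's own code search is more elaborate.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Codebook = List[Vector]  # 2**code_bits codewords, each of length group_size


def decode_group(codebooks: Sequence[Codebook], codes: Sequence[int]) -> Vector:
    """Reconstruct one weight group as the SUM of one codeword per codebook."""
    out = [0.0] * len(codebooks[0][0])
    for codebook, code in zip(codebooks, codes):
        for i, value in enumerate(codebook[code]):
            out[i] += value
    return out


def greedy_encode_group(codebooks: Sequence[Codebook], group: Sequence[float]) -> Tuple[int, ...]:
    """Choose one codeword per codebook, each matched against the remaining residual.

    Illustration only: a greedy residual pass, not AQLM's own code search.
    """
    residual = list(group)
    codes = []
    for codebook in codebooks:
        best = min(
            range(len(codebook)),
            key=lambda k: sum((r - c) ** 2 for r, c in zip(residual, codebook[k])),
        )
        codes.append(best)
        residual = [r - c for r, c in zip(residual, codebook[best])]
    return tuple(codes)


def bits_per_weight(rows: int, cols: int, num_codebooks: int, code_bits: int,
                    group_size: int, codebook_value_bits: int = 16) -> float:
    """Average storage per weight: the codes plus the shared codebooks.

    Ignores scales and any other metadata a real format would also store.
    """
    num_weights = rows * cols
    num_groups = num_weights // group_size
    code_storage = num_groups * num_codebooks * code_bits
    codebook_storage = num_codebooks * (2 ** code_bits) * group_size * codebook_value_bits
    return (code_storage + codebook_storage) / num_weights


if __name__ == "__main__":
    # Two toy codebooks, each with two 2-dimensional codewords (group_size = 2).
    toy_codebooks = [
        [[0.0, 0.0], [1.0, 1.0]],
        [[0.5, 0.0], [0.0, 0.5]],
    ]
    codes = greedy_encode_group(toy_codebooks, [1.4, 1.1])
    print(codes)                               # (1, 0)
    print(decode_group(toy_codebooks, codes))  # [1.5, 1.0]

    # One 16-bit codebook vs. two 8-bit codebooks, groups of 8 weights.
    print(bits_per_weight(4096, 4096, 1, 16, 8))  # 2.5
    print(bits_per_weight(4096, 4096, 2, 8, 8))   # 2.00390625
```

Both configurations spend 16 bits of codes per group of 8 weights, i.e. 2 bits per weight. The difference comes from the codebooks themselves: a 65,536-entry codebook adds half a bit per weight on a 4096×4096 matrix, while two 256-entry codebooks add almost nothing. Larger matrices shrink that overhead further.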

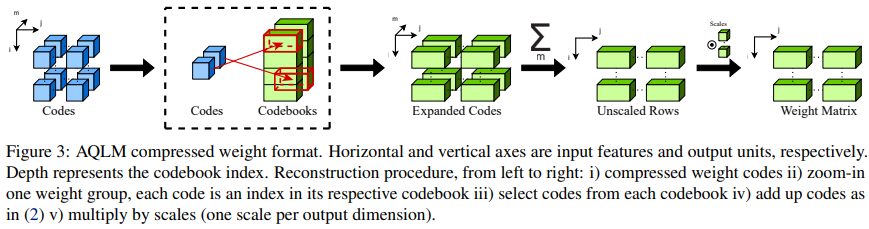

AQLM excels at preserving, and in some cases improving, the accuracy of compressed models, particularly in scenarios that demand extreme compression. It achieves this through a novel dual approach: learned additive quantization of weight matrices that adapts to input variability, and joint optimization of codebook parameters across entire layer blocks. This combination places AQLM at the forefront of LLM compression technologies and sets new standards in the field.
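One way to read "adapts to input variability" is that the quantized weights are chosen to reproduce a layer's outputs on representative calibration inputs, rather than to match the raw weights as closely as possible. The sketch below contrasts those two objectives for a linear layer y = W x. The toy matrices and helper names are hypothetical, and neither the codebook search nor the block-level joint optimization is shown.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def weight_error(w: Matrix, w_hat: Matrix) -> float:
    """Data-free objective: ||W - W_hat||_F^2."""
    return sum((x - y) ** 2 for row, row_hat in zip(w, w_hat) for x, y in zip(row, row_hat))


def output_error(w: Matrix, w_hat: Matrix, x: Matrix) -> float:
    """Input-aware objective: ||W X - W_hat X||_F^2, with X of shape (in_features, samples).

    Computed as ||(W - W_hat) X||_F^2, which is equal by linearity.
    """
    diff = [[a - b for a, b in zip(row, row_hat)] for row, row_hat in zip(w, w_hat)]
    return sum(v * v for row in matmul(diff, x) for v in row)


if __name__ == "__main__":
    w = [[1.0, 1.0]]                # one output, two input features
    x = [[10.0, 10.0], [0.1, 0.1]]  # feature 0 is large, feature 1 is tiny
    candidate_a = [[1.0, 0.5]]      # error on the weakly active feature
    candidate_b = [[0.5, 1.0]]      # same-sized error on the dominant feature

    print(weight_error(w, candidate_a), weight_error(w, candidate_b))         # 0.25 0.25
    print(output_error(w, candidate_a, x) < output_error(w, candidate_b, x))  # True
```

Both candidates look equally good to the data-free objective, but only the input-aware objective notices that candidate B corrupts the feature that actually drives the layer's output.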

One of AQLM's notable features is its practical applicability across hardware platforms. The researchers provide implementations that demonstrate the method's effectiveness on both GPU and CPU architectures, which makes it usable in real-world applications. A detailed evaluation against contemporary compression techniques supports this practicality: AQLM consistently outperforms its competitors. It shines especially in extreme compression settings, where it shrinks models substantially without significantly degrading performance. The evidence comes from AQLM's strong results on metrics such as perplexity and zero-shot task accuracy, which show how well it preserves the quality of the compressed model.
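Perplexity, the first metric mentioned above, is the exponential of the average per-token negative log-likelihood, so lower is better. The following minimal sketch assumes per-token log-probabilities (natural log) have already been obtained from a model. The probabilities used here are made-up illustrations, not results from the paper.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """exp of the average negative log-likelihood per token (natural log)."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


if __name__ == "__main__":
    # Hypothetical model assigning probability 0.25 to every observed token ...
    original = [math.log(0.25)] * 8
    # ... and a hypothetical compressed model assigning 0.2 to the same tokens.
    compressed = [math.log(0.2)] * 8
    print(perplexity(original), perplexity(compressed))  # roughly 4.0 and 5.0
```

A compressed model that assigns lower probability to the same tokens has a higher perplexity. A small perplexity gap to the uncompressed model is therefore the usual sign that compression preserved quality.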

Comparing AQLM with other leading compression methods highlights its distinctive position in the LLM compression landscape. Unlike approaches that force a trade-off between model size and accuracy, AQLM maintains or improves performance across a range of metrics. This advantage is most evident under extreme compression, where AQLM sets new benchmarks for efficiency and effectiveness. Its success in this regime reflects the researchers' innovative combination of learned additive quantization with joint optimization techniques.

In conclusion, AQLM emerges as an innovative approach to the efficient compression of LLMs. By tackling the critical challenge of reducing model size without sacrificing accuracy, it paves the way for deploying advanced AI capabilities on a broader range of devices. Its use of additive quantization tailored to LLMs, together with practical implementations for multiple hardware platforms, marks a significant step toward making AI more accessible. AQLM's strong performance, validated through rigorous evaluations, positions it as a model of innovation in LLM compression.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.


Muhammad Athar Ganaie, a consulting intern at MarktechPost, is a proponent of efficient deep learning with a focus on sparse training. Pursuing an M.Sc. in Electrical Engineering with a specialization in Software Engineering, he combines advanced technical knowledge with practical applications. His current endeavor is his thesis on “Improving Efficiency in Deep Reinforcement Learning,” which reflects his commitment to improving AI capabilities. Athar's work lies at the intersection of “Sparse DNN Training” and “Deep Reinforcement Learning.”