The advancement of artificial intelligence depends on the availability and quality of training data, particularly as multimodal foundation models gain importance. These models rely on diverse datasets spanning text, speech, and video to support tasks such as language processing, speech recognition, and video generation. However, the lack of transparency about the origins and attributes of these datasets creates significant barriers. Training data that is geographically and linguistically biased, inconsistently licensed, or poorly documented introduces ethical, legal, and technical challenges. Understanding the gaps in data provenance is therefore essential to advancing responsible and inclusive AI technologies.

AI systems face a critical problem in the representation and traceability of datasets, which limits the development of unbiased and legally sound technologies. Current datasets often rely heavily on a few web-based or synthetically generated sources. These include platforms such as YouTube, which accounts for a significant portion of voice and video datasets, and Wikipedia, which dominates text data. This dependence means that underrepresented languages and regions are not adequately covered. In addition, unclear licensing practices create legal ambiguities: more than 80% of widely used datasets carry some form of implicit or undocumented restriction, even though only 33% are explicitly licensed for non-commercial use.

Attempts to address these challenges have traditionally focused on specific aspects of data curation, such as removing harmful content or mitigating bias in text datasets. However, these efforts are often limited to a single modality and lack a comprehensive framework for evaluating datasets across modalities such as voice and video. The platforms that host these datasets, such as Hugging Face or OpenSLR, often lack mechanisms to ensure metadata accuracy or enforce consistent documentation practices. This fragmented approach underscores the urgent need for a systematic audit of multimodal datasets that considers their sourcing, licensing, and representation together.

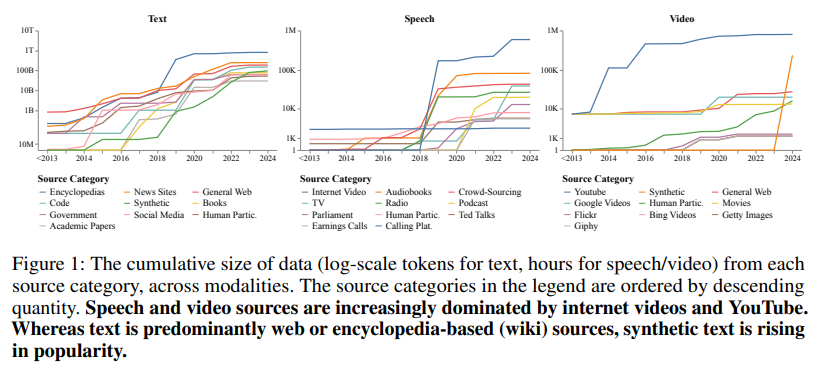

To close this gap, researchers from the Data Provenance Initiative conducted the largest longitudinal audit of multimodal datasets, examining nearly 4,000 public datasets created between 1990 and 2024. The audit spanned 659 organizations from 67 countries, covering 608 languages and nearly 1.9 million hours of voice and video data. The analysis revealed that web and social media platforms now account for the majority of training data and that synthetic sources are growing rapidly. It also found that while only 25% of text datasets carry explicitly restrictive licenses, almost all content sourced from platforms such as YouTube or OpenAI carries implicit non-commercial restrictions, raising questions about legal compliance and ethical use.

The researchers applied a meticulous methodology to annotate the datasets, tracing their lineage back to the original sources. This process uncovered significant inconsistencies in how data is licensed and documented. For example, while 96% of text datasets carry commercial licenses, more than 80% of their source materials impose restrictions that are not reflected in the dataset documentation. Similarly, video datasets rely heavily on proprietary or restricted platforms, with 71% of video data originating from YouTube alone. These findings underscore the challenges practitioners face in accessing data responsibly, particularly when datasets are repackaged or relicensed without preserving their original terms.
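To make the lineage problem concrete, here is a minimal sketch, assuming a toy registry in which each dataset records a declared license and its upstream sources. The dataset names, the three-level `Restriction` ordering (which treats undocumented terms as stricter than commercial-friendly ones), and the helpers `effective_restriction` and `undocumented_restrictions` are all hypothetical illustrations, not the audit's actual annotation pipeline. The idea is simply that a derived dataset inherits the strictest term found anywhere in its lineage, so a permissive declared license on a repackaged corpus can hide a restriction upstream.

```python
from __future__ import annotations

from enum import IntEnum


class Restriction(IntEnum):
    """Hypothetical ordering of license terms, least to most restrictive."""
    COMMERCIAL = 0      # commercial use permitted
    UNSPECIFIED = 1     # terms undocumented; treated cautiously (assumption)
    NON_COMMERCIAL = 2  # commercial use prohibited


# Hypothetical registry: dataset name -> (declared license, upstream sources).
REGISTRY: dict[str, tuple[Restriction, list[str]]] = {
    "video_clips": (Restriction.NON_COMMERCIAL, []),
    "llm_dialogues": (Restriction.NON_COMMERCIAL, []),
    "wiki_text": (Restriction.COMMERCIAL, []),
    "podcast_audio": (Restriction.UNSPECIFIED, []),
    "repackaged_corpus": (Restriction.COMMERCIAL, ["video_clips", "wiki_text"]),
    "instruction_mix": (Restriction.COMMERCIAL, ["repackaged_corpus", "llm_dialogues"]),
    "speech_mix": (Restriction.COMMERCIAL, ["podcast_audio"]),
}


def effective_restriction(
    name: str, registry: dict[str, tuple[Restriction, list[str]]]
) -> Restriction:
    """Strictest term across a dataset and all of its transitive sources."""
    strictest = Restriction.COMMERCIAL
    seen: set[str] = set()
    stack = [name]
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # skip shared ancestors and guard against cycles
        seen.add(current)
        declared, sources = registry[current]
        strictest = max(strictest, declared)
        stack.extend(sources)
    return strictest


def undocumented_restrictions(
    registry: dict[str, tuple[Restriction, list[str]]]
) -> list[str]:
    """Datasets whose declared license is looser than their lineage implies."""
    return sorted(
        name
        for name, (declared, _sources) in registry.items()
        if effective_restriction(name, registry) > declared
    )


if __name__ == "__main__":
    print(undocumented_restrictions(REGISTRY))
    # ['instruction_mix', 'repackaged_corpus', 'speech_mix']
    print(effective_restriction("instruction_mix", REGISTRY).name)
    # NON_COMMERCIAL
```

The script flags all three derived datasets: each declares a commercial license, but walking its sources reaches a stricter term (non-commercial for the first two, undocumented for `speech_mix`). A real provenance tool would also need to handle terms such as attribution, share-alike, or platform terms of service, which do not collapse cleanly onto a single ordered scale.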

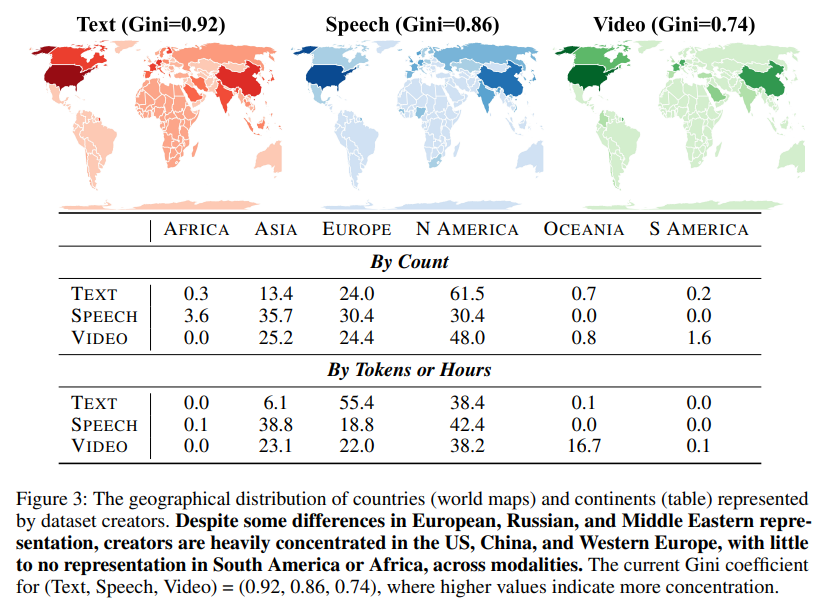

Notable findings from the audit include the predominance of web-sourced data, particularly for voice and video. YouTube emerged as the largest single source, contributing nearly 1 million hours of speech and video content and surpassing other sources such as audiobooks and movies. Synthetic datasets, while still a smaller share of the overall data, have grown rapidly, with models such as GPT-4 contributing significantly. The audit also revealed stark geographic imbalances: North American and European organizations accounted for 93% of text data, 61% of voice data, and 60% of video data. By contrast, organizations in Africa and South America together accounted for less than 0.2% across all modalities.

Geographic and linguistic representation remains a persistent challenge despite nominal increases in diversity. Over the past decade, the number of languages represented in training datasets has grown to over 600, yet measures of representational equality have not improved significantly. The Gini coefficient, a standard measure of inequality that ranges from 0 (perfectly even distribution) to 1 (all contributions concentrated in one group), remains above 0.7 for geographic distribution and above 0.8 for linguistic representation in text datasets, highlighting the disproportionate concentration of contributions from Western countries. For speech datasets, the representation of Asian countries such as China and India has improved, but African and South American organizations continue to lag far behind.
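To show how a Gini coefficient is computed from a distribution of contributions, here is a minimal Python sketch using the standard rank-based formula on values sorted in ascending order. The `gini` function and the per-language token counts in the example are hypothetical illustrations, not data or code from the audit; they only show why adding many small languages need not lower the coefficient.

```python
from typing import Sequence


def gini(values: Sequence[float]) -> float:
    """Gini coefficient of non-negative values.

    0.0 means every group contributes equally; values approach 1.0 as
    contributions concentrate in a single group. Uses the rank-based
    formula on ascending values x_1 <= ... <= x_n:
        G = 2 * sum(i * x_i) / (n * sum(x_i)) - (n + 1) / n
    """
    if not values:
        raise ValueError("values must be non-empty")
    if any(v < 0 for v in values):
        raise ValueError("values must be non-negative")
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if total == 0:
        return 0.0  # no contributions at all: treat as perfectly equal
    # Rank-weighted sum, with ranks starting at 1 for the smallest value.
    weighted = sum(rank * x for rank, x in enumerate(xs, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


if __name__ == "__main__":
    # Hypothetical per-language token counts (illustrative only):
    # one dominant language plus three small ones...
    few_languages = [1000, 10, 10, 10]
    # ...versus the same dominant language plus twenty small ones.
    many_languages = [1000] + [10] * 20
    print(round(gini(few_languages), 3))   # 0.721
    print(round(gini(many_languages), 3))  # 0.786
```

In this toy example, growing the number of languages from 4 to 21 raises the coefficient from about 0.72 to about 0.79, because each new language receives only a sliver of the data. More languages on paper do not by themselves make the distribution more equal.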

The research offers several key takeaways for developers and policymakers:

- More than 70% of voice and video datasets are derived from web platforms such as YouTube, while synthetic sources are increasingly popular and account for almost 10% of all text data tokens.

- While only 33% of datasets are explicitly non-commercial, more than 80% of the source content is restricted. This mismatch complicates legal compliance and ethical use.

- North American and European organizations dominate dataset creation, while African and South American contributions remain below 0.2%. Linguistic diversity has nominally grown but remains concentrated in a few dominant languages.

- GPT-4, ChatGPT, and other models have contributed significantly to the rise of synthetic datasets, which now make up a growing share of training data, particularly for creative and generative tasks.

- The lack of transparency and persistent Western-centric biases call for more rigorous audits and more equitable practices in dataset curation.

In conclusion, this comprehensive audit sheds light on the growing reliance on synthetic and web-crawled data, persistent inequalities in representation, and the complexities of licensing across multimodal datasets. By identifying these challenges, the researchers provide a roadmap for building more transparent, equitable, and accountable AI systems. Their work underscores the need for continued vigilance and concrete measures to ensure that AI serves diverse communities fairly and effectively. The study is a call to action for practitioners, policymakers, and researchers to address structural inequalities in the AI data ecosystem and to prioritize transparency in data provenance.

Check out the Paper. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and a dual-degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.
