Researchers have identified a critical need for large language models designed specifically for Chinese applications. YAYI2-30B addresses this need by refining existing approaches, with the goal of overcoming limitations found in models such as MPT-30B, Falcon-40B and LLaMA 2-34B. The central challenge is developing a model that understands knowledge across domains and also excels at mathematical reasoning and programming tasks.

Existing models such as MPT-30B, Falcon-40B and LLaMA 2-34B represent the recent state of large language models. A team of researchers from Beijing Wenge Technology Co., Ltd. and the Institute of Automation of the Chinese Academy of Sciences has introduced YAYI2-30B, a multilingual model designed for Chinese applications. YAYI2-30B adopts a decoder-only architecture and incorporates FlashAttention 2 and multi-query attention (MQA) to accelerate training and inference. This design is intended to improve on its predecessors in efficiency and performance.
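
To make the inference benefit of MQA concrete, here is a minimal sketch of the key/value cache arithmetic. In standard multi-head attention every query head has its own key and value head, while in MQA all query heads share a single key/value head, so the cache kept during decoding shrinks accordingly. The layer count, head count, head dimension and sequence length below are illustrative assumptions, not figures taken from this article.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_value=2):
    """Bytes needed to cache keys and values while decoding one batch."""
    # The leading factor 2 covers the two cached tensors: keys and values.
    return (2 * n_layers * n_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


# Hypothetical model shape, chosen only for illustration.
N_LAYERS = 64
N_QUERY_HEADS = 64
HEAD_DIM = 112
SEQ_LEN = 4096

# Multi-head attention: one key/value head per query head.
mha_bytes = kv_cache_bytes(N_LAYERS, N_QUERY_HEADS, HEAD_DIM, SEQ_LEN)

# Multi-query attention: a single key/value head shared by all query heads.
mqa_bytes = kv_cache_bytes(N_LAYERS, 1, HEAD_DIM, SEQ_LEN)

print(f"MHA cache: {mha_bytes / 2**30:.2f} GiB")
print(f"MQA cache: {mqa_bytes / 2**20:.0f} MiB")
print(f"Reduction: {mha_bytes // mqa_bytes}x")
```

Under these assumptions the MQA cache is smaller by a factor equal to the number of query heads, which reduces the memory traffic of each decoding step.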

The features that set YAYI2-30B's architecture apart are its decoder-only design combined with FlashAttention 2 and MQA, both of which target efficiency. Training is distributed and uses the Zero Redundancy Optimizer (ZeRO) stage 3, gradient checkpointing and the AdamW optimizer, which allows a model of this size to be trained efficiently.
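
As a rough illustration of why ZeRO stage 3 matters at this scale, the sketch below estimates per-GPU memory for model states under mixed-precision training with an Adam-style optimizer such as AdamW. It uses the common accounting of 2 bytes per parameter for half-precision weights, 2 for gradients, and 12 for optimizer states (fp32 master weights, momentum and variance). The parameter count is rounded to 30 billion and the GPU count of 64 is a hypothetical value, not the researchers' actual cluster size. Activation memory is excluded; that is the part gradient checkpointing reduces, by recomputing activations during the backward pass.

```python
def model_state_bytes_per_gpu(n_params, n_gpus, zero_stage):
    """Approximate per-GPU bytes for weights, gradients and optimizer states."""
    weights = 2 * n_params   # half-precision parameters
    grads = 2 * n_params     # half-precision gradients
    optim = 12 * n_params    # fp32 master weights + Adam momentum + variance
    if zero_stage >= 1:      # stage 1 partitions optimizer states
        optim /= n_gpus
    if zero_stage >= 2:      # stage 2 also partitions gradients
        grads /= n_gpus
    if zero_stage >= 3:      # stage 3 also partitions the parameters
        weights /= n_gpus
    return weights + grads + optim


N_PARAMS = 30_000_000_000  # roughly 30B parameters
N_GPUS = 64                # hypothetical cluster size

for stage in (0, 1, 2, 3):
    gb = model_state_bytes_per_gpu(N_PARAMS, N_GPUS, stage) / 1e9
    print(f"ZeRO stage {stage}: {gb:.1f} GB of model states per GPU")
```

With stage 3 every component is partitioned, so per-GPU model-state memory falls in proportion to the number of GPUs, at the cost of extra communication to gather parameters when they are needed.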

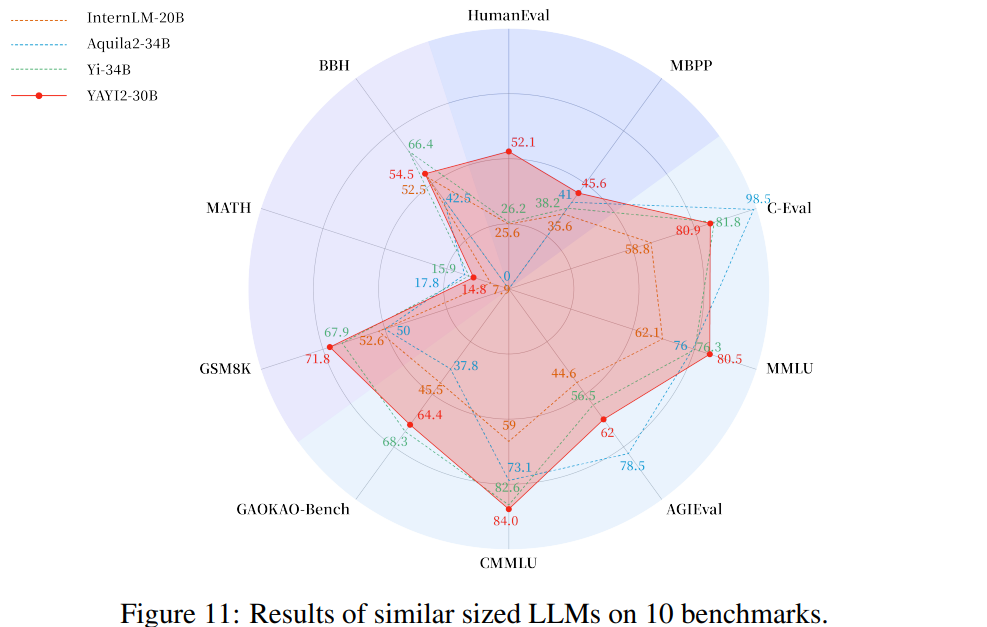

Supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF) are used to align the model and contribute to its adaptability and proficiency across benchmarks. Evaluations on MMLU, AGIEval, CMMLU, GSM8K, HumanEval and MBPP underline YAYI2-30B's versatility in knowledge understanding, mathematical reasoning and programming tasks.

The model's real-world applicability reflects the combination of FlashAttention 2, MQA and the alignment processes. YAYI2-30B emerges as more than an incremental improvement among large language models. Its design and benchmark performance reflect the researchers' focus on overcoming existing challenges.

In conclusion, YAYI2-30B combines alignment processes with an efficiency-focused architecture in a large language model designed specifically for Chinese applications. The researchers' commitment to refining large language models is evident in the model's ability to understand and reason across domains and to carry out complex programming tasks. YAYI2-30B marks a notable step toward addressing language understanding challenges in Chinese applications. However, users are urged to deploy it responsibly, given the potential impact in safety-critical scenarios.

Review the Paper. All credit for this research goes to the researchers of this project.

Madhur Garg is a consulting intern at MarktechPost. He is currently pursuing his Bachelor's degree in Civil and Environmental Engineering at the Indian Institute of Technology (IIT), Patna. He has a strong passion for machine learning and enjoys exploring the latest advancements in technologies and their practical applications. With a keen interest in artificial intelligence and its various applications, Madhur is determined to contribute to the field of data science and harness its potential impact in various industries.
