Researchers are increasingly focused on building systems for multimodal data exploration, which combines structured and unstructured data. This involves analyzing text, images, videos, and databases to answer complex queries. These capabilities are crucial in healthcare, where medical professionals work with patient records, medical images, and textual reports. Similarly, in art curation and research, multimodal exploration helps interpret databases that hold metadata, textual critiques, and images of artworks. Seamlessly combining these data types offers significant potential for decision-making and insight.

One of the main challenges in this field is allowing users to query multimodal data using natural language. Traditional systems have difficulty interpreting complex queries that involve multiple data formats, such as requesting trends in structured tables while analyzing the content of related images. Additionally, the absence of tools that provide clear explanations of query results makes it difficult for users to trust and validate the results. These limitations create a gap between advanced data processing capabilities and real-world usability.

Current solutions attempt to address these challenges using two main approaches. The first integrates multiple modalities into unified query languages, such as NeuralSQL, which embeds vision-language functions directly into SQL commands. The second uses agentic workflows that coordinate multiple tools to analyze specific modalities, exemplified by CAESURA. While these approaches have advanced the field, they fall short in optimizing task execution, ensuring explainability, and handling complex queries efficiently. These shortcomings highlight the need for a system capable of dynamic adaptation and clear reasoning.

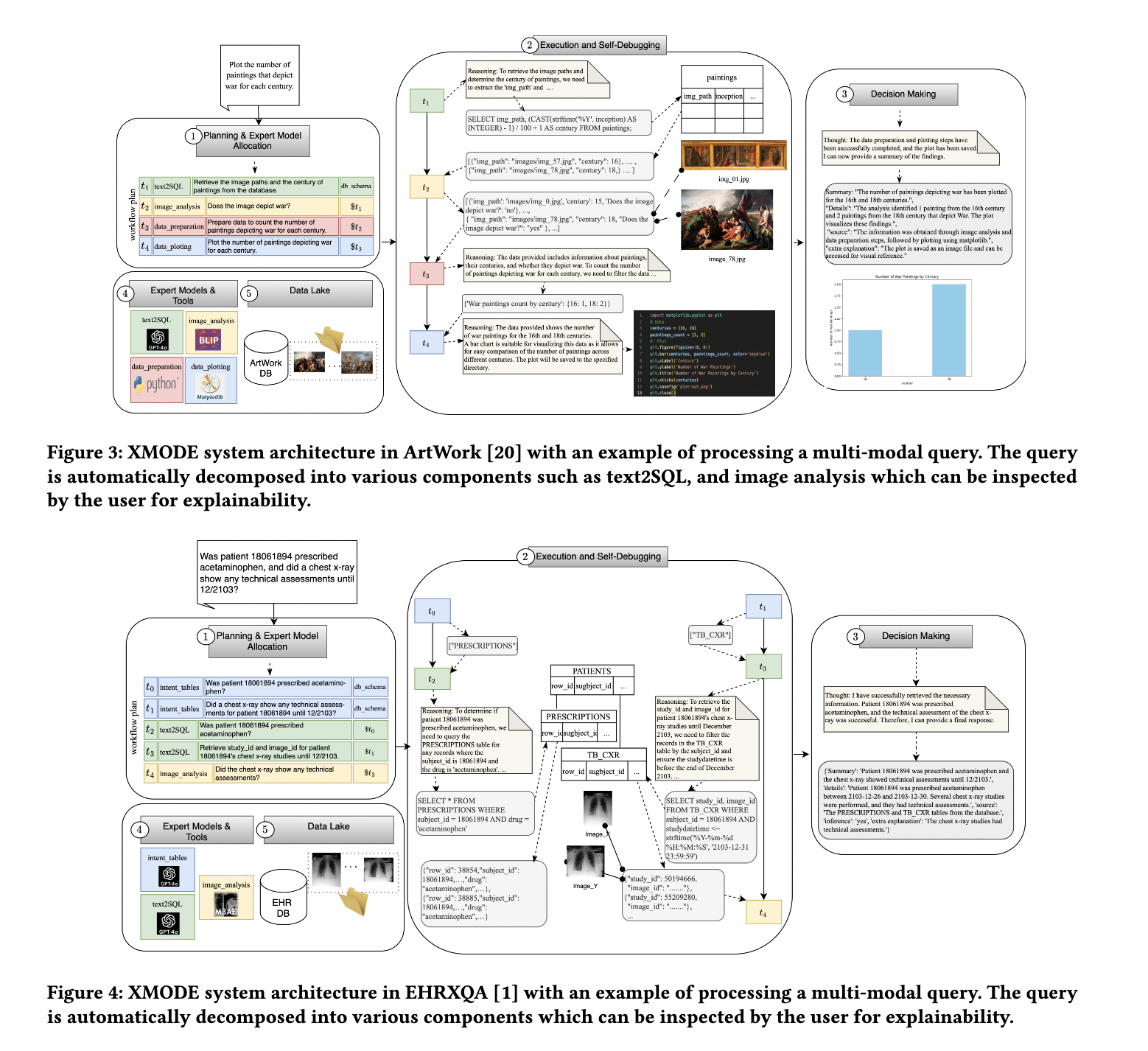

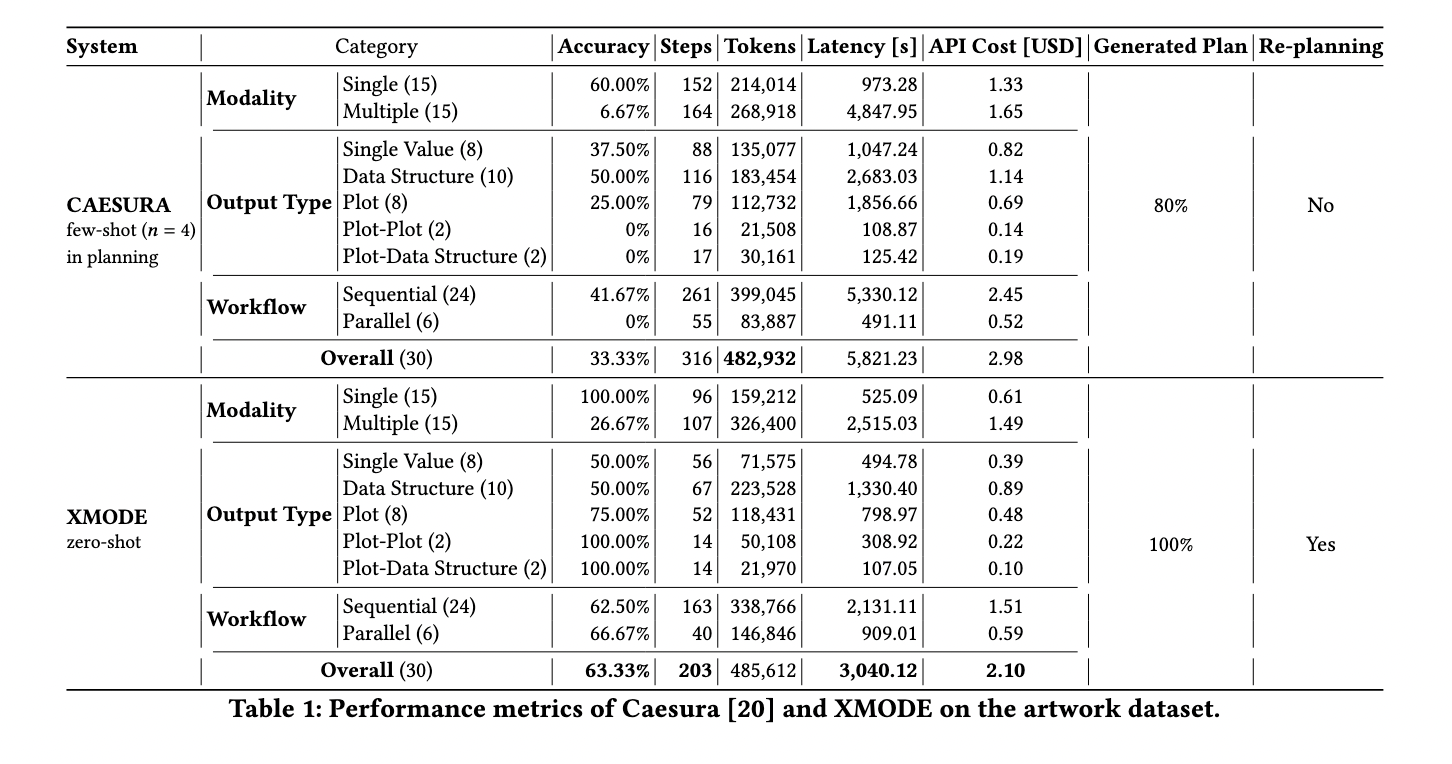

Researchers at the Zurich University of Applied Sciences have presented XMODE, a novel system designed to address these problems. XMODE enables explainable multimodal data exploration using a Large Language Model (LLM)-based agentic framework. The system interprets user queries and decomposes them into subtasks such as SQL generation and image analysis. By representing workflows as directed acyclic graphs (DAGs), XMODE optimizes the sequencing and execution of tasks. This approach improves efficiency and accuracy compared to state-of-the-art systems such as CAESURA and NeuralSQL. Additionally, XMODE supports task replanning, allowing it to adapt when specific components fail.
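To make the DAG idea concrete, here is a minimal sketch of how a decomposed query could be ordered for execution. It is not XMODE's implementation: the task names and the `execution_order` helper are hypothetical, and the ordering relies on Python's standard `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter

# Hypothetical subtasks for a question such as
# "plot the number of paintings that depict war, per century".
# Each task maps to the set of tasks it depends on.
workflow = {
    "text2sql": set(),                                 # fetch rows from the database
    "image_analysis": {"text2sql"},                    # inspect the images those rows point to
    "data_preparation": {"text2sql", "image_analysis"},
    "plot": {"data_preparation"},
}


def execution_order(dag):
    """Return the tasks in an order that respects every dependency."""
    return list(TopologicalSorter(dag).static_order())


order = execution_order(workflow)
print(order)
```

Because every task here depends on the previous one, only one valid order exists. A cyclic dependency would make `static_order()` raise `graphlib.CycleError`, which is one reason a planner would want its workflow to be acyclic.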

The XMODE architecture includes five key components: planning and expert model allocation, execution and self-debugging, decision making, expert tools, and a shared data repository. When a query is received, the system builds a detailed workflow of tasks and assigns them to appropriate tools such as SQL generation modules and image analysis models. These tasks are executed in parallel whenever possible, reducing latency and computational costs. Additionally, XMODE's self-debugging capabilities allow it to identify and rectify errors in task execution, improving reliability. This adaptability is essential for handling complex workflows involving various data modalities.
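The combination of parallel execution and self-debugging can be sketched as follows. This is an illustration under stated assumptions, not the system's actual code: `run_workflow`, the tool functions, and the convention of passing the previous error back to a tool on retry are all hypothetical, and a thread pool stands in for whatever scheduler XMODE uses.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter


def run_workflow(dag, tools, max_retries=1):
    """Run every task in `dag`; tasks whose dependencies are done run in parallel."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    results = {}  # stands in for a shared data repository

    def run_with_retry(name):
        last_error = None
        for _ in range(max_retries + 1):
            try:
                # Each tool sees earlier results plus the previous error, if any.
                return tools[name](results, last_error)
            except Exception as exc:
                last_error = exc
        raise RuntimeError(f"task {name!r} failed after retries") from last_error

    with ThreadPoolExecutor() as pool:
        while sorter.is_active():
            ready = list(sorter.get_ready())          # all tasks that can run now
            outputs = list(pool.map(run_with_retry, ready))
            for name, output in zip(ready, outputs):
                results[name] = output
                sorter.done(name)
    return results


sql_attempts = []


def generate_sql(results, last_error):
    """Hypothetical SQL tool: fails once, then succeeds when given the error."""
    sql_attempts.append(last_error)
    if last_error is None:
        raise ValueError("unknown column 'titel'")
    return [{"title": "A", "image": "a.png"}, {"title": "B", "image": "b.png"}]


tools = {
    "sql": generate_sql,
    "image_analysis": lambda res, _err: {row["image"]: "described" for row in res["sql"]},
    "row_count": lambda res, _err: len(res["sql"]),
    "answer": lambda res, _err: (res["row_count"], sorted(res["image_analysis"])),
}
dag = {
    "sql": set(),
    "image_analysis": {"sql"},
    "row_count": {"sql"},
    "answer": {"image_analysis", "row_count"},
}

results = run_workflow(dag, tools)
print(results["answer"])
```

In this sketch `image_analysis` and `row_count` become ready at the same time and are submitted to the pool together, while the failing SQL step is retried once with its own error message as feedback.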

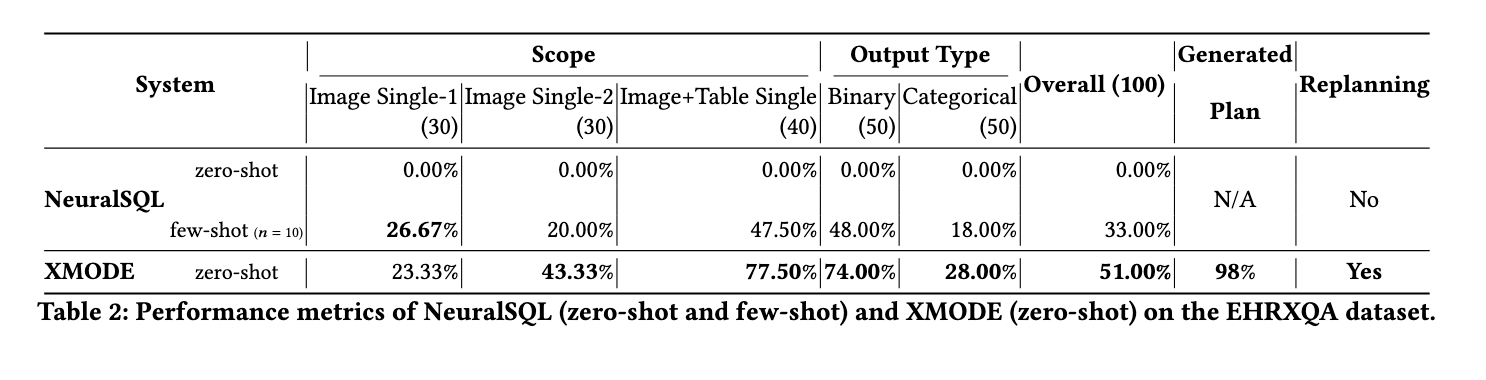

XMODE demonstrated superior performance during testing on two datasets. On a dataset of artworks, XMODE achieved an overall accuracy of 63.33%, compared to 33.33% for CAESURA. It excelled at tasks that require complex outputs, such as plots and combined data structures, achieving 100% accuracy in generating plot outputs and combined plot and data structure outputs. Additionally, XMODE's ability to run tasks in parallel reduced latency to 3040 milliseconds, compared to CAESURA's 5821 milliseconds. These results highlight its efficiency in processing natural language queries on multimodal datasets.
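For readers who want the relative differences, the figures quoted above can be worked through in a few lines. The variable names are only for illustration; the inputs are the reported numbers.

```python
# Reported figures on the artwork dataset.
xmode_latency_ms = 3040
caesura_latency_ms = 5821
xmode_accuracy = 63.33
caesura_accuracy = 33.33

# Relative latency reduction and speedup factor.
latency_reduction_pct = (caesura_latency_ms - xmode_latency_ms) / caesura_latency_ms * 100
speedup = caesura_latency_ms / xmode_latency_ms

# Accuracy gap in percentage points.
accuracy_gap_points = xmode_accuracy - caesura_accuracy

print(f"latency reduction: {latency_reduction_pct:.1f}%")
print(f"speedup: {speedup:.2f}x")
print(f"accuracy gap: {accuracy_gap_points:.2f} percentage points")
```

That is roughly a 48% reduction in latency (about 1.9 times faster) and a 30 percentage point gap in overall accuracy.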

On the electronic health record (EHR) dataset, XMODE achieved an accuracy of 51%. It outperformed NeuralSQL on multi-table queries, scoring 77.50% compared to NeuralSQL's 47.50%. The system also performed strongly on binary queries, achieving 74% accuracy against NeuralSQL's 48% in the same category. XMODE's ability to adapt and replan tasks contributed to these results, making it particularly effective in scenarios requiring detailed reasoning and multimodal integration.

XMODE addresses the limitations of existing multimodal data exploration systems by combining advanced planning, parallel task execution, and dynamic replanning. Its approach allows users to query complex datasets efficiently while keeping results transparent and explainable. With demonstrated improvements in accuracy, efficiency, and cost-effectiveness, XMODE represents a significant advancement in the field and offers practical applications in areas such as healthcare and art curation.

Check out the Paper. All credit for this research goes to the researchers of this project.


Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
