Question answering (QA) is a crucial area of natural language processing (NLP) that focuses on developing systems that can accurately retrieve and generate answers to user queries from vast data sources. Retrieval-augmented generation (RAG) improves the quality and relevance of answers by combining information retrieval with text generation. A retriever filters out irrelevant information and passes only the most pertinent passages to a large language model (LLM), which then generates the answer.
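To make the retrieve-then-generate idea concrete, here is a minimal sketch of the retrieval half. It assumes a toy word-overlap scorer and hypothetical helper names (`retrieve`, `build_prompt`); real RAG systems typically use dense or sparse retrievers, and nothing here reflects the specific pipeline used in the research.

```python
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase and split on whitespace (a deliberately crude tokenizer)."""
    return set(text.lower().split())


def retrieve(query: str, passages: List[str], k: int = 2) -> List[str]:
    """Return the k passages sharing the most words with the query.

    Word overlap is an illustrative stand-in for a real retriever.
    sorted() is stable, so ties keep their original corpus order.
    """
    query_tokens = tokenize(query)
    ranked = sorted(
        passages,
        key=lambda passage: len(query_tokens & tokenize(passage)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, passages: List[str], k: int = 2) -> str:
    """Assemble a prompt containing only the top-k retrieved passages."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return f"Answer using only the passages below.\n{context}\nQuestion: {query}"


if __name__ == "__main__":
    corpus = [
        "the capital of france is paris",
        "bananas are yellow",
        "paris hosts the louvre museum",
    ]
    print(build_prompt("what is the capital of france", corpus))
```

The prompt returned by `build_prompt` would then be sent to an LLM of your choice; that generation step is omitted because it depends on the model API in use.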

One of the major challenges in QA is the limited scope of existing datasets, which often use single-source corpora or focus on short, extractive answers. This limitation makes it difficult to assess how well LLMs generalize across different domains. Existing datasets, such as Natural Questions and TriviaQA, rely heavily on Wikipedia or web documents, which are insufficient for measuring performance across multiple domains. As a result, there is a significant need for more comprehensive evaluation frameworks that can test the robustness of QA systems beyond a single domain.

Researchers at AWS AI Labs, Google, Samaya.ai, Orby.ai, and the University of California, Santa Barbara have introduced Long-form RobustQA (LFRQA) to address these limitations. This new dataset includes human-written long-form answers that integrate information from multiple documents into coherent narratives. Spanning 26,000 queries across seven domains, LFRQA aims to assess the cross-domain generalization capabilities of LLM-based RAG-QA systems.

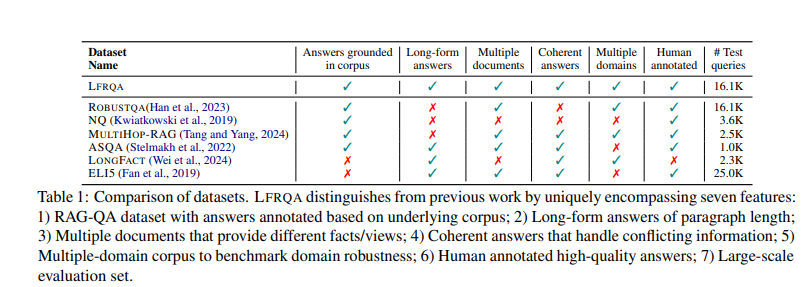

LFRQA distinguishes itself from previous datasets by offering long-form answers that are grounded in a corpus, coherent, and spread across multiple domains. The dataset includes annotations from multiple sources, making it a valuable tool for evaluating QA systems. This approach addresses the shortcomings of extractive QA datasets, which often fail to capture the comprehensive and detailed nature of modern LLM responses.

The research team introduced the RAG-QA Arena framework, which uses LFRQA to evaluate QA systems. The framework employs model-based evaluators to directly compare LLM-generated answers with LFRQA's human-written answers. By focusing on coherent, long-form answers, RAG-QA Arena provides a more accurate and challenging benchmark for QA systems. Extensive experiments demonstrated a high correlation between model-based and human evaluations, validating the effectiveness of the framework.
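The pairwise comparison described above can be sketched as follows. This is a minimal illustration, not the actual RAG-QA Arena code: the verdict labels, the `preference_rates` helper, and the length-based `toy_judge` are all hypothetical stand-ins, and in practice the judge would be an LLM-based evaluator.

```python
from collections import Counter
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical verdict labels; the real framework's labels may differ.
LLM, HUMAN, TIE = "llm", "human", "tie"

# A judge takes (query, llm_answer, human_answer) and returns a verdict label.
Judge = Callable[[str, str, str], str]


def preference_rates(
    pairs: Iterable[Tuple[str, str, str]], judge: Judge
) -> Dict[str, float]:
    """Aggregate pairwise verdicts into the fraction of each outcome."""
    counts = Counter(
        judge(query, llm_answer, human_answer)
        for query, llm_answer, human_answer in pairs
    )
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no comparisons to aggregate")
    return {label: counts[label] / total for label in (LLM, HUMAN, TIE)}


def toy_judge(query: str, llm_answer: str, human_answer: str) -> str:
    """Placeholder judge that prefers the longer answer.

    Only here to make the sketch runnable; it says nothing about quality.
    """
    if len(llm_answer) > len(human_answer):
        return LLM
    if len(llm_answer) < len(human_answer):
        return HUMAN
    return TIE


if __name__ == "__main__":
    comparisons = [
        ("q1", "short", "a much longer reference answer"),
        ("q2", "a detailed model answer here", "brief"),
        ("q3", "same", "same"),
    ]
    print(preference_rates(comparisons, toy_judge))
```

Keeping the judge as a plain callable means the same aggregation works whether the verdicts come from a model-based evaluator or from human annotators.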

The researchers employed several methods to ensure the high quality of LFRQA. Annotators were asked to combine short extractive answers into coherent long-form answers, incorporating additional information from the documents where necessary. Quality control measures included random audits of annotations to ensure completeness, coherence, and relevance. This rigorous process resulted in a dataset that effectively assesses the cross-domain robustness of QA systems.

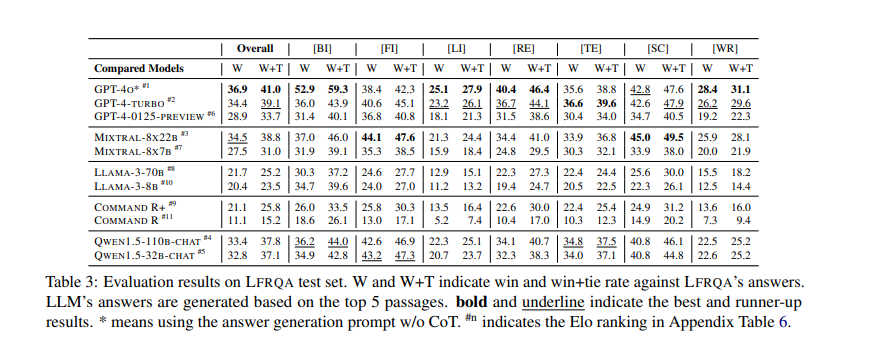

The results from the RAG-QA Arena framework are notable. Only 41.3% of the answers generated by the most competitive LLMs were preferred over the human-written LFRQA answers. Model-based and human evaluations were strongly correlated, with a correlation coefficient of 0.82. Furthermore, the evaluation revealed that LFRQA answers, which integrate information from up to 80 documents, were preferred in 59.1% of cases over the answers from the leading LLMs. The framework also highlighted a 25.1% performance gap between in-domain and out-of-domain data, emphasizing the importance of cross-domain evaluation for developing robust QA systems.
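As an illustration of how agreement between two sets of evaluations can be quantified, the sketch below computes a Pearson correlation. The article does not say which coefficient the researchers used, so Pearson is an assumption here, and the scores in the usage example are invented placeholders rather than data from the study.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances, each left unnormalized (the factors cancel).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Invented per-system preference rates, for illustration only.
    model_based = [0.20, 0.35, 0.41, 0.28]
    human = [0.22, 0.30, 0.45, 0.25]
    print(f"correlation: {pearson(model_based, human):.2f}")
```

A value near 1 means the model-based evaluator ranks systems much as human annotators do, which is the property the reported 0.82 figure is meant to convey.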

In addition to its breadth, LFRQA includes detailed metrics that provide valuable insights into the effectiveness of QA systems. For example, the dataset contains information on the number of documents used to produce each answer, the coherence of the answers, and their fluency. These metrics help researchers understand the strengths and weaknesses of different QA approaches, thereby guiding future improvements.

In conclusion, the research led by AWS AI Labs, Google, Samaya.ai, Orby.ai, and the University of California, Santa Barbara highlights the limitations of existing QA evaluation methods and presents LFRQA and RAG-QA Arena as innovative solutions. These tools offer a more comprehensive and challenging benchmark for assessing the cross-domain robustness of QA systems, significantly contributing to the advancement of NLP and QA research.

All credit for this research goes to the researchers of this project.

Nikhil is a Consultant Intern at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI and machine learning enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advancements and creating opportunities to contribute.
