Software engineering agents have become essential for managing complex coding tasks, particularly in large repositories. These agents use advanced language models to interpret natural language descriptions, analyze code bases, and implement modifications. Their applications include debugging, feature development, and optimization. The effectiveness of these systems depends on their ability to handle real-world challenges, such as interacting with large repositories and running tests to validate solutions, which makes developing such agents both exciting and challenging.

The lack of comprehensive training environments is one of the main challenges in this area. Many existing datasets and benchmarks, such as SWE-Bench and R2E, focus on isolated problems or are based on synthetic instructions that do not represent the complexities of real-world coding tasks. For example, while SWE-Bench offers test cases for validation, its training dataset lacks executable environments and dependency configurations. This discrepancy limits the usefulness of existing benchmarks for training agents capable of addressing the nuanced challenges of software engineering.

Efforts to address these challenges have previously relied on tools such as HumanEval and APPS, which support evaluation of isolated tasks but fail to integrate complexities at the repository level. These tools often lack a coherent link between natural language problem descriptions, executable code bases, and comprehensive testing frameworks. As a result, there is a pressing need for a platform that can bridge these gaps by offering real-world tasks within functional and executable environments.

Researchers from UC Berkeley, UIUC, CMU, and Apple have developed SWE-Gym, a novel environment designed to train software engineering agents. SWE-Gym integrates 2,438 Python tasks from GitHub issues across 11 repositories, providing pre-configured executable environments and expert-validated test cases. This platform presents an innovative approach by combining the complexity of real-world tasks with automated testing mechanisms, creating a more effective training ecosystem for language models.

The SWE-Gym methodology focuses on replicating real-world coding conditions. Tasks are derived from GitHub issues and paired with repository snapshots and the corresponding unit tests. The dependencies for each task are carefully configured so that the executable environment behaves correctly. These configurations were validated semi-manually, a process that took around 200 hours of human annotation and 10,000 CPU core hours, resulting in a robust training dataset. The researchers also introduced SWE-Gym Lite, a subset of 230 tasks that focuses on simpler, self-contained problems and allows for rapid prototyping and evaluation.
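To make the pairing of issue, repository snapshot, and unit tests concrete, here is a minimal sketch of what one task record might look like. The class and field names (`TaskInstance`, `fail_to_pass`, `pass_to_pass`) and the `is_resolved` helper are hypothetical, chosen for illustration; they are not taken from the SWE-Gym release.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class TaskInstance:
    """Hypothetical record for one task; all field names are illustrative."""

    issue_text: str                     # natural-language description from a GitHub issue
    repo: str                           # repository the issue belongs to
    base_commit: str                    # snapshot of the code base before the fix
    fail_to_pass: tuple[str, ...]       # tests expected to pass only after a correct fix
    pass_to_pass: tuple[str, ...] = ()  # tests that must keep passing


def is_resolved(task: TaskInstance, test_results: dict[str, bool]) -> bool:
    """Return True when every listed test passed.

    Tests missing from `test_results` are treated as failures.
    """
    required = task.fail_to_pass + task.pass_to_pass
    return all(test_results.get(name, False) for name in required)


# Usage with made-up values.
task = TaskInstance(
    issue_text="Example issue text",
    repo="example/repo",
    base_commit="abc123",
    fail_to_pass=("test_bug_fixed",),
    pass_to_pass=("test_existing_behavior",),
)
print(is_resolved(task, {"test_bug_fixed": True, "test_existing_behavior": True}))
```

Treating a missing test result as a failure is a conservative choice in this sketch: a candidate fix that prevents the test suite from running is not counted as a success.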

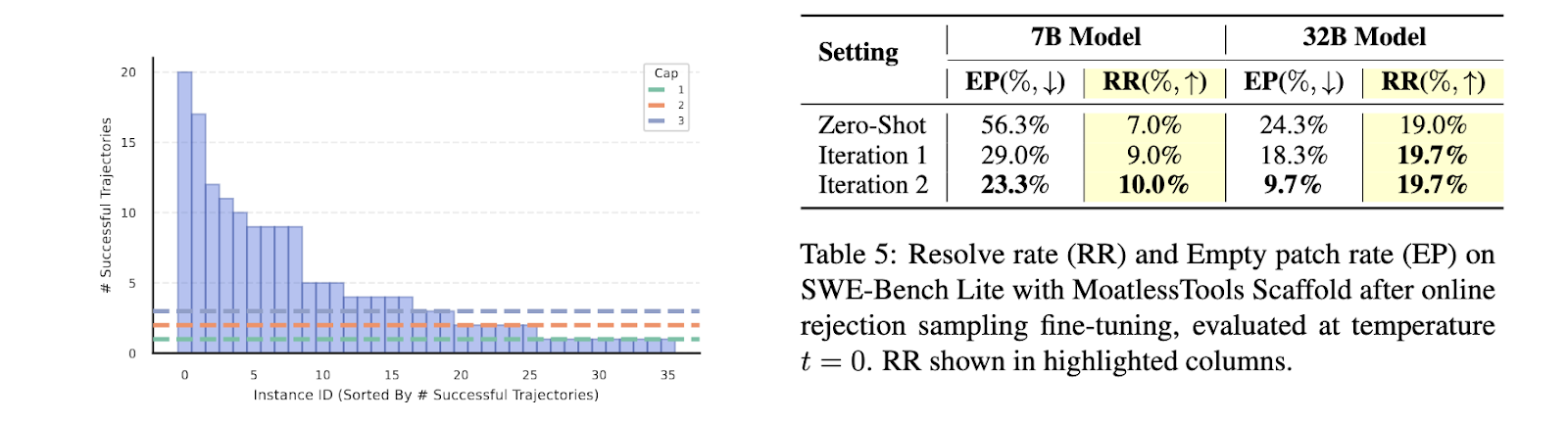

The performance evaluation of SWE-Gym demonstrated its impact on the training of software engineering agents. Using the Qwen-2.5 Coder model, agents fine-tuned on SWE-Gym achieved marked improvements in task resolution across SWE-Bench benchmarks. Specifically, resolve rates increased from 20.6% to 32.0% on SWE-Bench Verified and from 15.3% to 26.0% on SWE-Bench Lite. These gains represent a significant jump over previous results for open language models. Additionally, SWE-Gym-supported agents reduced failure rates in stuck scenarios by 18.6% and improved task completion rates in real-world environments.
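For readers who want the size of these gains in percentage points and in relative terms, the arithmetic can be sketched as follows. The only inputs are the four rates quoted above; the helper name `gain` is an assumption made for this example.

```python
def gain(before: float, after: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = after - before
    relative = absolute / before * 100
    return round(absolute, 1), round(relative, 1)


# Resolve rates quoted in the article, in percent.
verified = gain(20.6, 32.0)
lite = gain(15.3, 26.0)

print(f"SWE-Bench Verified: +{verified[0]} points ({verified[1]}% relative)")
print(f"SWE-Bench Lite: +{lite[0]} points ({lite[1]}% relative)")
```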

The researchers also explored inference-time scaling using a verifier trained on agent trajectories sampled from SWE-Gym. With this approach, agents generate multiple solution trajectories for a given problem, and a reward model selects the most promising one. The verifier achieved a Best@K score of 32.0% on SWE-Bench Verified, demonstrating the environment's ability to improve agent performance by spending more compute at inference time. These results emphasize the potential of SWE-Gym to improve both the development and evaluation of software engineering agents.
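The selection step described above can be sketched as a best-of-K choice: score each candidate trajectory and keep the highest-scoring one. This is a minimal illustration under stated assumptions, not the authors' implementation; `select_best` and `toy_verifier` are hypothetical names, and the toy scoring rule stands in for a trained reward model.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def select_best(candidates: Sequence[T], score: Callable[[T], float]) -> T:
    """Return the candidate with the highest verifier score.

    On ties, the earliest candidate wins (the behavior of max()).
    """
    if not candidates:
        raise ValueError("need at least one candidate trajectory")
    return max(candidates, key=score)


def toy_verifier(trajectory: str) -> float:
    """Stand-in for a learned reward model (assumption): favors
    trajectories that mention running the tests."""
    return 1.0 if "run tests" in trajectory else 0.0


# Usage with made-up trajectories.
trajectories = [
    "edit file; submit",
    "edit file; run tests; submit",
    "read issue; submit",
]
print(select_best(trajectories, toy_verifier))
```

In this setup, raising K means sampling more trajectories per problem, so any improvement in the final resolve rate depends on how well the verifier ranks correct solutions above incorrect ones.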

SWE-Gym is a foundational tool for advancing research on software engineering agents. By addressing the limitations of earlier benchmarks and providing a realistic, scalable environment, it gives researchers the resources they need to develop robust models capable of solving complex software challenges. With its open-source release, SWE-Gym paves the way for significant advances in the field and sets new standards for the training and evaluation of software engineering agents.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Nikhil is an internal consultant at Marktechpost. He is pursuing an integrated double degree in Materials at the Indian Institute of Technology Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in materials science, he is exploring new advances and creating opportunities to contribute.
